


High Performance Firewalls - ESX vs. Open Server

In this article (R80.40 - Multi Queue on VMWare vmxnet3 drivers) we discussed that ESX supports multi-queueing with vmxnet3 drivers, which means we can now run high-performance firewalls under ESX. That raises a question about open server hardware compatibility: which approach makes more sense?

- Should we install the gateways on an open server and stay bound to the Check Point HCL?

or

- Should we install ESX on a server and run the Gaia gateway as a virtual machine, so that we are no longer bound to the Check Point HCL?

This is an interesting question for me!

What is the Check Point recommendation here?

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips

- Tags:

- performance


Accepted Solutions


Hi Guys,

I'm less interested in hard drive throughput; it shouldn't matter on a gateway. It's only a topic for an SMS or MDS handling a lot of log traffic.

The interesting question is: is the network throughput on ESXi with vmxnet3 network drivers and multi-queueing the same as on an open server with ixgbe drivers and multi-queueing? Since R80.30 with kernel 3.10, and in R80.40, MQ is supported with vmxnet3 drivers. You can read more about this in this article: R80.40 - Multi Queue on VMWare vmxnet3 drivers

I have now run the same lab test as in my earlier post on an HP DL380 G9, this time comparing both installation types.

| Installation | Network throughput |
|---|---|
| HP DL380 G9 + VMware vSphere Hypervisor (ESXi) 7.0.0 + virtual gateway R80.40 (kernel 3.10 + multi-queueing on two interfaces (vmxnet3) + 2 SND + 6 CoreXL) | 8.34* Gbit/s |
| HP DL380 G9 + Open Server (without ESXi) R80.40 (kernel 3.10 + multi-queueing on two interfaces (ixgbe) + 2 SND + 6 CoreXL) | 8.41* Gbit/s |

* These values are not representative; this was just a quick test in the lab.

The results are essentially the same for me! The open question, of course, is how this looks at 40 Gbit/s+ throughput 😀.
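As a quick sanity check on "the same": taking the bare-metal figure as the baseline, the gap between the two lab results is under 1%. This minimal sketch only restates the two (non-representative) numbers from the table; it says nothing about behavior at 40 Gbit/s and above.

```python
# Lab results from the table above, in Gbit/s (quick, non-representative test).
esxi_vm = 8.34
open_server = 8.41

# Relative gap, using the bare-metal open server as the baseline.
gap = (open_server - esxi_vm) / open_server
ratio = esxi_vm / open_server

print(f"ESXi VM reaches {ratio:.1%} of bare metal (gap {gap:.2%})")
# prints: ESXi VM reaches 99.2% of bare metal (gap 0.83%)
```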

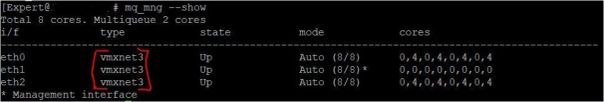

MQ settings:

Advantages of the ESXi solution:

- VMware snapshots are possible

- Multiple firewalls on one piece of hardware (without using VSX)

- Resource distribution, e.g. of cores and memory, under VMware

- Independence from the Check Point HCL for open servers

- Backup of the gateway with VMware backup tools

Disadvantages of this solution:

- Per-core licensing is a problem with multiple firewalls

- More CPU utilization by the hypervisor

- No LACP (bond interface) possible

For now, I would not recommend the ESXi solution to any customer. But it would be interesting to hear what the Check Point folks have to say about this solution.


Due to the complexities of scheduling a scheduler, running a firewall in a VM will always carry a significant performance cost. Hypervisors that run full hardware VMs, like ESX, are particularly bad at I/O-heavy workloads. Paravirtualized devices like vmxnet3 interfaces help somewhat, but you are very unlikely to ever get even 50% of the performance your box is capable of.

Now, this can be fine, as it gives you a lot of administrative flexibility, and lets you throw hardware at the problem with effectively no restrictions. I'm just pointing out you would need to throw a lot of hardware at it to beat the performance of direct installation on a high-end open server.


In a lab environment I have just tested the following: a VMware installation on an HP DL380 G9 connected to a Cisco Nexus via 10 Gbit/s interfaces. I installed the gateway as a VM and enabled multi-queueing on two interfaces, with no other software blades activated. With this setup I reach a throughput of about 8.34 Gbit/s between several servers through the virtual firewall.

I think this is a very good value for a virtual system.


If installed directly on the DL380 G9, I would expect to be able to get over 80g of firewall throughput with only minimal effort. With a little more effort planning your cards, interrupt handler affinity, and CoreXL dispatcher cores, you could get well over 100g. The card and core planning is needed because three of the DL380 G9's PCIe slots go to one processor, the other three go to the other processor, and you don't want cross-processor interrupts. Data locality across NUMA nodes is a headache, but something you have to consider if you need to maximize performance.
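To see which NUMA node each NIC hangs off on a bare-metal install, the Linux kernel exposes the value in sysfs at /sys/class/net/<iface>/device/numa_node (-1 means the kernel has no NUMA information for the device). A minimal sketch, assuming Python 3 is available on the box; the function name is hypothetical, and inside a VM the value reflects only the virtual topology, if any:

```python
from pathlib import Path
from typing import Dict


def nic_numa_nodes(sysfs_net: str = "/sys/class/net") -> Dict[str, int]:
    """Map each interface backed by a PCI device to its NUMA node.

    Reads /sys/class/net/<iface>/device/numa_node. The kernel reports -1
    when it has no NUMA affinity information for the device. Virtual
    interfaces such as lo or bonds have no device/ entry and are skipped.
    """
    nodes: Dict[str, int] = {}
    for iface in sorted(Path(sysfs_net).iterdir()):
        node_file = iface / "device" / "numa_node"
        if node_file.is_file():
            nodes[iface.name] = int(node_file.read_text().strip())
    return nodes
```

Calling `print(nic_numa_nodes())` on the open server lists each physical port with its node; ports that report different nodes are where the card and core planning described above comes in.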

One other potential point of interest: the biggest performance hit from hardware virtualization is to I/O, and you've already taken it in your performance figure. Enabling deep inspection features won't hurt your VM's throughput nearly as badly as it hurts throughput on a direct installation, because your current performance is interface-bound, not processor-bound. You won't ever get better performance than you would directly installed on the hardware, of course, but you won't take as large a hit until the processor performance cost of the features meets the already-high interface performance cost of the virtualization.
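The interface-bound versus processor-bound argument can be expressed as a toy bottleneck model: throughput is capped by whichever path is slower. The numbers below are invented purely for illustration and are not measurements; the model ignores everything except the two limits.

```python
def effective_throughput(io_limit_gbps: float, cpu_limit_gbps: float) -> float:
    """Toy bottleneck model: the slower of the I/O path and the
    inspection (CPU) path caps the gateway's throughput."""
    return min(io_limit_gbps, cpu_limit_gbps)


# Hypothetical limits in Gbit/s, chosen only to illustrate the argument.
io_limit = {"vm": 8.0, "bare_metal": 80.0}
cpu_limit = {"firewall_only": 100.0, "deep_inspection": 20.0}

for platform, io in io_limit.items():
    for profile, cpu in cpu_limit.items():
        print(f"{platform:<10} {profile:<15} "
              f"{effective_throughput(io, cpu):5.1f} Gbit/s")
```

With these made-up figures the VM stays at 8 Gbit/s whether or not deep inspection is enabled, while the bare-metal install drops from 80 to 20 Gbit/s; the VM never ends up faster than bare metal, it just loses less when features are turned on.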


I'm assuming you didn't use IDE for the storage controller on the VM? 🙂

No KVM love?


KVM?! bhyve 4 lyfe!

Just checked my R80.40j67 box, and I don't see VirtIO drivers under /usr/lib/modules/3.10.0-957.21.3cpx86_64/kernel/drivers/net/. I do see drivers for Hyper-V paravirtualized interfaces and for Thunderbolt, which is interesting. And for MII, in case you wanted to run your firewall on a microcontroller for some reason?

Check Point clearly supports running under KVM, since you can run the firewall in AWS. I'm probably just not seeing the driver (or not looking for the right name). I don't see any reason to expect it to handle I/O workloads significantly better than ESX, though.




If you are limiting the test to just 10g interfaces, then you are probably hitting limits of the endpoints (or maybe limits of the ixgbe driver) rather than limits of the firewall.

Do you have any 40g or 100g switches around? A Mellanox dual-port 10/25/40/50/100g card using the same chip as the ones Check Point sells for their appliances is around $800, and would let you do some serious stress testing. Just keep in mind that PCIe 3.0 runs at 8 GT/s per lane (slightly less usable bandwidth after 128b/130b encoding), so an x16 slot can only get you roughly 126g per direction.
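Worked out, PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding, so usable bandwidth per direction is slightly below the raw 8 Gbit/s per lane. A minimal sketch that ignores packet (TLP/DLLP) overhead, so real-world goodput is lower still:

```python
# PCIe 3.0 signalling: 8 GT/s per lane with 128b/130b line encoding.
TRANSFER_RATE_GT_S = 8.0
ENCODING_EFFICIENCY = 128 / 130


def pcie3_usable_gbps(lanes: int) -> float:
    """Usable bandwidth per direction in Gbit/s, before packet overhead."""
    return TRANSFER_RATE_GT_S * ENCODING_EFFICIENCY * lanes


print(f"x8:  {pcie3_usable_gbps(8):.1f} Gbit/s per direction")   # x8:  63.0 ...
print(f"x16: {pcie3_usable_gbps(16):.1f} Gbit/s per direction")  # x16: 126.0 ...
print(f"dual-port 100g at line rate needs {2 * 100.0:.1f} Gbit/s per direction")
```

So a dual-port 100g card in a PCIe 3.0 x16 slot cannot run both ports at line rate in the same direction.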


Hi @Bob_Zimmerman,

I know these points. I have also built many firewalls with more than 100 Gbit/s throughput, and we can also use Maestro or 64K appliances.

You can also read many tuning tips on these topics in my articles:

- R80.x - Performance Tuning Tip - Intel Hardware, SMT (Hyper Threading), Multi Queue, User Mode Firewall vs. Kernel Mode Firewall, Dynamic split of CoreXL in R80.40, Control SecureXL / CoreXL Paths, Falcon Modules and R80.20

and all the others here:

- R80.x Architecture and Performance Tuning - Link Collection

We have several customers who use ESXi solutions. Now, with R80.30 (kernel 3.10) and R80.40, we can use MQ. Without MQ, we have historically reached a throughput of 2-3 Gbit/s. My tests have now shown that you can reach up to 8.34 Gbit/s with MQ on a 10 Gbit/s interface on a test system.

I just want to know how the vmxnet3 driver compares in performance with a native hardware driver (ixgbe, i40e, mlx5_core) when multi-queueing is used.

The next question would be: what is the maximum number of RX queues on the vmxnet3 driver?


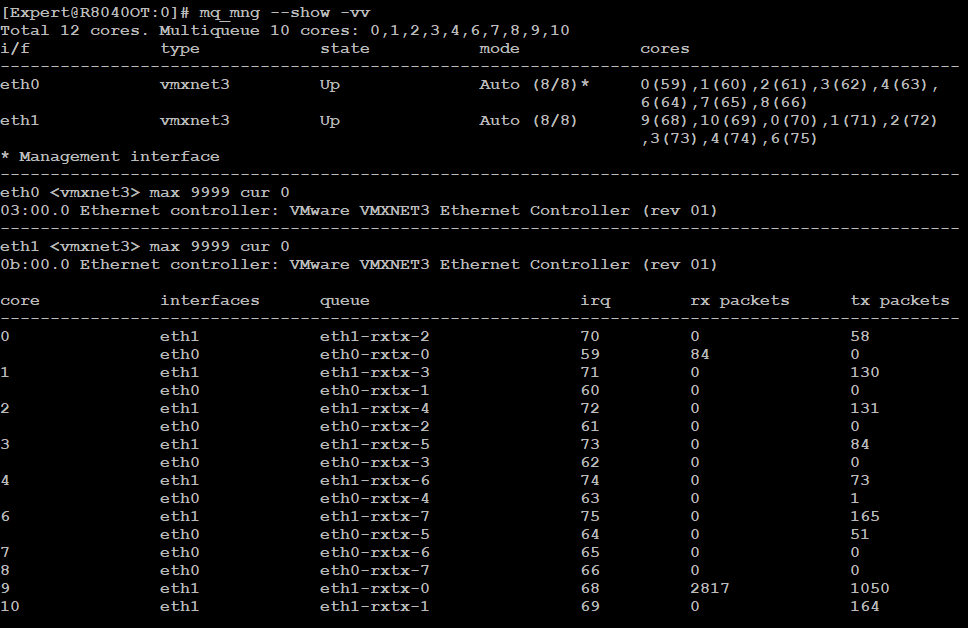

And the next question would be, what is the maximum number of RX queues on vmxnet3 driver?

Looks like the answer is a maximum of eight queues, based on this screenshot taken on a 12 core VM system with a 10/2 split assuming I'm reading it right:
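On a Linux-based guest, the number of RX queues currently configured on an interface can also be read from sysfs, which exposes one /sys/class/net/<iface>/queues/rx-N directory per queue. A minimal sketch, assuming Python 3 on the gateway; the function name is hypothetical, and it reports the queues in use, not the driver's maximum (`ethtool -l <iface>` shows the pre-set maximums where the driver supports it):

```python
from pathlib import Path


def rx_queue_count(iface: str, sysfs_net: str = "/sys/class/net") -> int:
    """Count the rx-N queue directories the kernel exposes for `iface`.

    This is the number of RX queues currently configured, which may be
    lower than the maximum the driver supports.
    """
    queues_dir = Path(sysfs_net) / iface / "queues"
    return sum(1 for q in queues_dir.glob("rx-*") if q.is_dir())
```

Call it as `rx_queue_count("eth1")`, replacing eth1 with the interface you enabled MQ on.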

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


What are you using as test software, etc.?

We use DL360 G10 boxes with 8 cores running VSX; each box has 2 bonds, each built from 2 x 25 Gbit/s interfaces.

In a simple iperf test, 1 VS with 2 VS instances allocated easily handles 10 Gbit/s with firewall only.

Without multi-queue, the maximum I have seen is 3.5 Gbit/s.

https://www.youtube.com/c/MagnusHolmberg-NetSec


I'm always sceptical of iperf. You need a server that can use MQ; otherwise it runs into the same problems as a firewall. Furthermore, you need several servers on both the backend and the frontend to really test the throughput, and the result depends on the number of sessions.
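The number of sessions matters with MQ because the NIC's RSS hash keeps every packet of a flow in the same RX queue, so a single-stream test exercises only one queue (and one SND core). The sketch below uses zlib.crc32 as a stand-in for the NIC's hash (real NICs typically use a Toeplitz hash); the addresses are hypothetical and 5201 is the iperf3 default port:

```python
import zlib


def queue_for_flow(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                   n_queues: int) -> int:
    """Map a TCP flow (5-tuple with the protocol fixed to TCP) to an RX queue.

    crc32 is only a stand-in for the NIC's RSS hash; what matters is that
    every packet of one flow always lands in the same queue.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|tcp".encode()
    return zlib.crc32(key) % n_queues


N_QUEUES = 8
single = {queue_for_flow("10.0.0.1", "10.0.1.1", 50000, 5201, N_QUEUES)}
many = {queue_for_flow(f"10.0.0.{h}", "10.0.1.1", 50000 + p, 5201, N_QUEUES)
        for h in range(1, 5) for p in range(16)}

print(f"1 flow uses {len(single)} of {N_QUEUES} queues")
print(f"{4 * 16} flows use {len(many)} of {N_QUEUES} queues")
```

The single flow always lands in one queue, while the 64 flows from four clients spread across the queues; that is why several clients and many parallel sessions (for example iperf3's -P option) are needed to load a multi-queue firewall.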

During my tests, for example, I did not enable any other blades, so all traffic went through the SecureXL fast path.

As I said, these are not representative throughput values. I just tested this in the lab under ideal conditions. I only wanted to see whether there are differences with and without ESXi when using the same hardware server and MQ.


Sure. In production we see about 8-10 Gbit/s of traffic per VSX node, peaking at about 26% CPU usage over a 5-minute interval.

In these cases, more or less 90% of the traffic is in the fast path; most VSs have fewer than 200 rules, and it's server-to-server traffic.

When it comes to open servers it's tricky; we run the 8-core models so we get a high clock speed (GHz).

If you add more cores, the CPU clock speed is lower; depending on your traffic pattern, this will affect your performance.


Did you calculate the TCO of either open server or VMware-based firewalls in comparison to appliances?

From a technical perspective, adding yet another software abstraction layer definitely does not help with performance figures.


