R80.20 CoreXL & Vsx best practices

Hello,

Over the last few months I've heard multiple versions from CP TAC regarding the best practice for core affinity for FWK in VSX R80.20:

- Assign all available cores (minus SND) to all VSs and add FWK instances (each time we have performance issues) with the dynamic dispatcher. This is our current setup, with 28 cores assigned to all VSs; some CPU cores are maxed out while others are doing nothing.

- Assign specific cores to specific VSs and their FWKs (e.g. VS1 -> CPU 4-12, VS2 -> CPU 13-20).

Where is the truth? 😄

Kr,

Khalid

16 Replies

Where is the documentation of these best practices available? I only have the Check Point VSX Administration Guide R80.20, which explains the CoreXL configuration starting on p. 87.

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

That's what I asked the TAC engineer when he suggested what the best practice was.

That's why I'm asking the community.

More information:

- R80.30 VSX Administration Guide

@Kaspars_Zibarts will share best practices on leveraging VSX technology to provide scalable and optimized security while keeping maximum performance.

- Presentation: nice presentation, 100 points from me 👍

And more tuning tips from me:

- R80.x Architecture and Performance Tuning - Link Collection

- R80.x - Top 20 Gateway Tuning Tips

PS:

In your overview you should consider whether SMT (R80.x - Performance Tuning Tip - SMT (Hyper Threading)) is on or off. There can be massive performance differences with CoreXL if the cores are not assigned correctly. The correct use of MQ (R80.x - Performance Tuning Tip - Multi Queue) should also be checked. The dynamic dispatcher (sk105261: CoreXL Dynamic Dispatcher in R80.10 and above) only gives a better distribution of connections in some situations, so I would only use it in specific cases.

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips

Actually, it's very difficult to prescribe a "best" model for VSX when it comes to CoreXL. Everyone uses it in a different way, so the solution in the end will be quite different. But I understand your frustration: it's not easy and it takes years to get a good understanding. And then, when you think you know it all, bam! New version and new tricks 🙂

First things first: all I know from "inside" is that you should not be running R80.20 on gateways; upgrade to R80.30 with the latest jumbo. I have heard that the feedback and case numbers for R80.20 gateways (not mgmt!) were not great. We have been running VSX on R80.30 since January and it's been great. You might want to read this too if you decide to upgrade

Secondly, I'm not very familiar with VSX running on open servers. They seem to behave somewhat differently: it looks like open servers are more efficient and you can just run all VSes sharing the same FWK cores. At least that's what I've heard from "big" customers. We run appliances, a mix of 23800, 26000 and 41000 chassis, and they all needed tweaking to get the best results.

Your next decision will be based on the blades you use: is it just FW or also advanced blades? Basically, VSX runs better without hyperthreading (SMT) enabled if you only use FW and most traffic is accelerated. If you see a lot of PXL and you use advanced blades, you will definitely benefit from the extra cores.

One special high-CPU case for us, for example, was Identity Awareness (pdpd and pepd), so we run those on dedicated cores so that they do not affect real firewalling.

To give you a short answer: I prefer dedicated cores for each VS, and even for individual processes, as it really helps troubleshooting, especially in high-CPU cases. Plus you are protecting your other VSes from being impacted.

It's a lot of careful work to plan your CoreXL split manually, especially if you use hyperthreading: you must consider CPU core siblings! That's very important.

But it would be very difficult to give you an exact answer without knowing the exact circumstances.
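To see which logical CPUs are HT siblings before planning a manual split, something like the following can help. It is only a sketch: it assumes the kernel exposes `thread_siblings_list` under `/sys/devices/system/cpu` (usual on Linux, but possibly missing on older kernels, in which case the `physical id` and `core id` fields in `/proc/cpuinfo` carry the same information). `list_ht_siblings` is a helper name made up for illustration, not a Check Point command.

```bash
#!/bin/bash
# Print each group of HT siblings once, e.g. "0,16".
# Optional first argument overrides the sysfs CPU directory (handy for testing).
list_ht_siblings() {
    local cpu_root="${1:-/sys/devices/system/cpu}"
    local f
    for f in "$cpu_root"/cpu[0-9]*/topology/thread_siblings_list; do
        # Skip silently if the file is absent (no such sysfs entry on this kernel).
        [ -r "$f" ] && cat "$f"
    done | LC_ALL=C sort -u | LC_ALL=C sort -t, -k1,1n
}

list_ht_siblings
```

Each output line is one physical core; both CPU IDs on a line should end up either in the SND set or in the same VS, never split between the two.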

Thx for your insight.

We are running on a CP hardware 14000 series cluster, with 32 cores.

Currently we have 2 VSs running the FW/IPS/URL Filtering blades (no HTTPS inspection). You can see the CPU affinity below: only 2 cores are almost always maxed out, the rest are doing nothing (the dynamic dispatcher is on).

The plan is to segment the traffic further into new VSs and also upgrade to R80.30.

[Expert@EU933055-OSS:0]# fw ctl affinity -l

Mgmt: CPU 0

eth2-01: CPU 1

eth2-02: CPU 1

eth2-03: CPU 1

eth2-04: CPU 1

eth2-05: CPU 2

eth2-06: CPU 2

eth2-07: CPU 2

eth2-08: CPU 2

VS_0: CPU 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_0 fwk: CPU 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_0 smt_status: CPU 1 2 3

VS_1: CPU 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_1 fwk: CPU 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_2: CPU 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_2 fwk: CPU 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_3: CPU 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_3 fwk: CPU 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31

Is hyperthreading enabled? You can run this command to check:

if [ `grep ^"cpu cores" /proc/cpuinfo | head -1 | awk '{print $4}'` -ne `grep ^"siblings" /proc/cpuinfo | head -1 | awk '{print $3}'` ]; then echo HT; else echo no-HT; fi

HT is on 🙂

Yeah, the HT sibling allocation is wrong, I'm afraid. How many cores are set for each VS? You can check like this:

cat $FWDIR/state/local/VSX/local.vsall | grep "vs create vs" | awk '{print "VS-"$4" instances: "$12}'

Here you go

[Expert@Exxxxxxxx:0]# cat $FWDIR/state/local/VSX/local.vsall | grep "vs create vs" | awk '{print "VS-"$4" instances: "$12}'

VS-1 instances: 1

VS-1 instances: 1

VS-3 instances: 12

VS-3 instances: 12

VS-2 instances: 12

VS-2 instances: 12

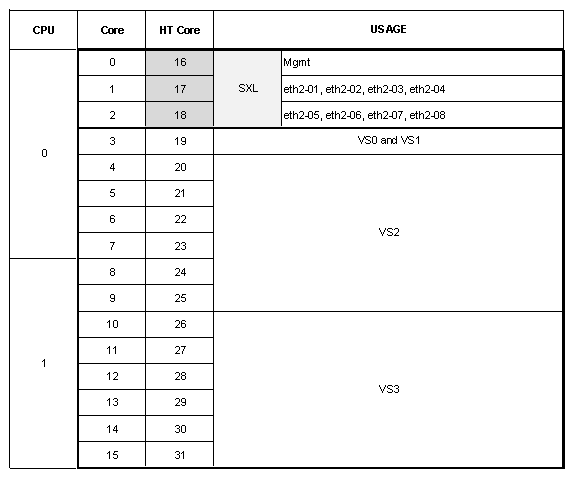

I would start with something like this. It's not ideal, as VS-2 is stretched over 2 physical CPUs, but it might help to fix the overloaded CPUs.

Note that cores 16-18 must not be used for FWKs at all! They are the HT siblings of cores 0-2, which are used for SND.

Which two cores are maxing out, BTW?

What are the throughput, connections per second and concurrent connections on each VS? You can check that with cpview in the corresponding vsenv.
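The note about cores 16-18 can be sketched as a small calculation. It assumes the common enumeration where the sibling of logical CPU N is N + (total CPUs / 2), which matches the 0-2 / 16-18 pairing on this 32-CPU box but should be verified on the actual hardware. `snd_siblings` is a hypothetical helper name.

```bash
#!/bin/bash
# Print the assumed HT sibling of each given core, one per line.
# ASSUMPTION: sibling of core N is N + total/2 (wrapping around),
# e.g. 0 <-> 16 on a box with 32 logical CPUs. Verify before relying on it.
snd_siblings() {
    local total="$1"; shift
    local half=$(( total / 2 ))
    local core
    for core in "$@"; do
        echo $(( (core + half) % total ))
    done
}

# SND cores 0-2 on a 32-CPU appliance: prints 16, 17 and 18, one per line.
snd_siblings 32 0 1 2
```

Whatever this prints is the set of CPU IDs to keep out of every `-cpu` list used for FWKs.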

The actual commands to achieve this:

fw ctl affinity -s -d -vsid 0 1 -cpu 3 19

fw ctl affinity -s -d -vsid 2 -cpu 4-9 20-25

fw ctl affinity -s -d -vsid 3 -cpu 10-15 26-31
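As a quick sanity check, the CPU ranges passed to `-cpu` can be expanded and counted against the instance counts reported earlier in the thread (12 for VS-2 and VS-3). A minimal sketch; `expand_cpus` is a made-up helper, not a Check Point command.

```bash
#!/bin/bash
# Expand a CPU list such as "4-9 20-25" into one CPU ID per line.
expand_cpus() {
    local item
    for item in "$@"; do
        case "$item" in
            *-*) seq "${item%-*}" "${item#*-}" ;;  # range: first-last
            *)   echo "$item" ;;                   # single CPU ID
        esac
    done
}

# Count the logical CPUs given to VS-2 and VS-3 in the commands above.
echo "VS-2 CPUs: $(( $(expand_cpus 4-9 20-25 | wc -l) ))"
echo "VS-3 CPUs: $(( $(expand_cpus 10-15 26-31 | wc -l) ))"
```

Both ranges come out at 12 logical CPUs, the same number as the FWK instances configured for those VSs.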

CPU 16 and 17 are always in RED 🙂

You must exclude cores 16-18 from the FWK pool. As said before, they are HT siblings of 0-2 and must not be used for FWKs.

A simple test would be (all VSes sharing the same resources):

fw ctl affinity -s -d -vsid 0-3 -cpu 3-15 19-31
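After changing the affinity, the listing can be checked mechanically for FWKs that still sit on the SND siblings. A rough sketch, assuming the `fw ctl affinity -l` output looks like the listing posted earlier in this thread (`VS_<n> fwk: CPU ...`); `check_fwk_affinity` is a hypothetical helper and the sample lines below are illustrative, not real output.

```bash
#!/bin/bash
# Read `fw ctl affinity -l` style output on stdin and print every
# "VS_<n> fwk: CPU ..." line that contains one of the forbidden CPU IDs
# given as arguments. Returns 0 if none is found, 1 otherwise.
check_fwk_affinity() {
    awk -v forbidden="$*" '
        BEGIN {
            n = split(forbidden, ids, " ")
            for (i = 1; i <= n; i++) bad[ids[i]] = 1
        }
        / fwk: CPU / {
            # Fields: $1 = VS name, $2 = "fwk:", $3 = "CPU", $4.. = CPU IDs
            for (i = 4; i <= NF; i++)
                if ($i in bad) { print; found = 1; break }
        }
        END { exit (found ? 1 : 0) }
    '
}

# Illustrative input only: the first line still overlaps the SND siblings.
check_fwk_affinity 16 17 18 <<'EOF' || echo "FWK pool overlaps SND siblings"
VS_1 fwk: CPU 4 5 6 7 16 17 18 19
VS_2 fwk: CPU 4 5 6 7 8 9 20 21 22 23 24 25
EOF
```

On the gateway itself the same function would be fed from the real command, e.g. `fw ctl affinity -l | check_fwk_affinity 16 17 18`.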

Such a change has an impact on live prod traffic, I guess?

Will plan this.

Correct me if I'm wrong: your recommendation is still to dedicate specific cores per VS?

Correct, I would stick with dedicated cores per VS, as it allows easier troubleshooting and better resource protection.

I have done it during the daytime without any impact on our VSX, but the choice is yours of course. I'm not CP and cannot promise anything 🙂

Plus you can always make these changes on the standby node first, fail over and see what happens, then do the other one.
