First impressions R80.30 on gateway - one step forward one (or two back)

OK, we were finally "forced" to go ahead and upgrade our gateways from R80.10 to R80.30 for fairly small things: we wanted to be able to use the O365 Updatable Object (instead of home-grown scripts) and to fix the Domain (FQDN) object performance issue where all FWK cores were making DNS queries, causing a lot of alerts (see https://community.checkpoint.com/t5/General-Topics/Domain-objects-in-R80-10-spamming-DNS/m-p/19786).

Positive things - upgrades were smooth and painless - both on regular gateways and VSX.

All regular gateways seem to be performing as before, but I have to be honest that they are "over-dimensioned", with rather powerful HW for the job: 5900 with 16 cores.

VSX, though, threw a couple of surprises.

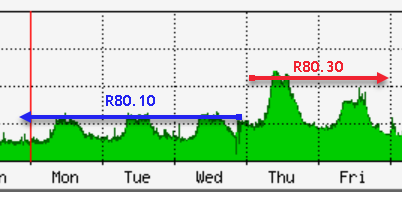

SXL medium path usage. CPU jumped from <30% to above 50% on the busiest VS, which only has the FW and IA blades enabled. OK, there is also VPN, but only one connection.

I haven't spent enough time digging into it, but for some reason 1/3 of all connections took the medium path, whereas before in R80.10 nearly all of it was fully accelerated. Most of it was HTTPS (95%), with LDAP-SSL the next most used (2%).

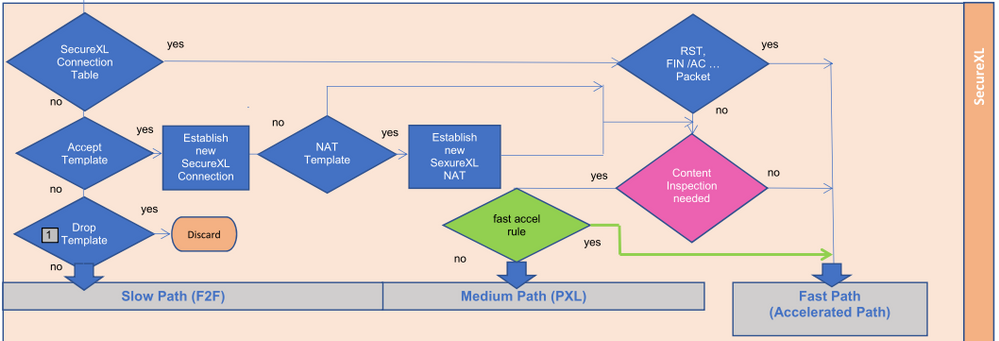

I used the SXL fast accelerator feature (thanks @HeikoAnkenbrand https://community.checkpoint.com/t5/General-Topics/R80-x-Performance-Tuning-Tip-SecureXL-Fast-Accele...) to exclude our proxies and some other nets. You can see that on Friday the CPU load was reduced by 10%, but it is nowhere near what it used to be.

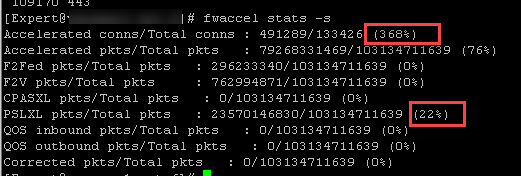

I just find it impossible to explain why a gateway with only the FW blade enabled would start to throw all (by the looks of it) traffic via PXL. And the statistics are a bit funny too:

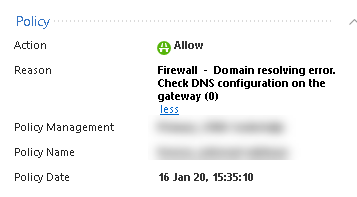

FQDN alerts in logs. I can definitely confirm that only one core is now doing DNS lookups (against all the DNS servers you have defined, in our case 2). But we are still getting a lot of alerts like this: Firewall - Domain resolving error. Check DNS configuration on the gateway (0)

Especially after I enabled the updatable object for O365 in the rulebase.

As said before, I have not spent too much time on this, as we had other "fun" stuff to deal with on our chassis, so it's fairly "raw". I will report more once I have some answers.

1 Solution

Accepted Solutions

I got a "tip off" from inside CP! Verifying if I'm allowed to publish it here but seems like my PXL issue is resolved! Yeehaa! Power of community! Thanks to @Ilya_Yusupov

And the "secret stuff" here:

- Regarding medium path: you see most traffic in the medium path due to a known bug present since R80.20. The TLS parser is enabled when the following combination of blades is enabled:

- FW + IDA and/or Monitoring and/or VPN (exactly our case!)

- You can validate this by running the following command: “fw ctl get int tls_parser_enable”. It will return 1.

- As a workaround (WA), you can disable it on the fly by running “fw ctl set int tls_parser_enable 0”. To disable it permanently, put tls_parser_enable=0 in $FWDIR/boot/modules/fwkern.conf and reboot.

- With the above, the traffic will be fully accelerated again, as in the previous version.
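The permanent part of the workaround above can be sketched as a small shell helper. This is only a sketch under assumptions, not an official procedure: the function name `set_kernel_param` is made up for illustration, and only the parameter name, the `fw ctl` commands and the fwkern.conf path come from the post. The helper rewrites the file so that the parameter appears exactly once, which avoids duplicate entries if the workaround is applied twice.

```shell
# Hypothetical helper (not a Check Point tool): idempotently persist
# NAME=VALUE in a fwkern.conf-style file, one assignment per line.
set_kernel_param() {
    conf="$1"; name="$2"; value="$3"
    tmp="${conf}.tmp.$$"
    if [ -f "$conf" ]; then
        # Keep every line except an existing assignment of this parameter.
        grep -v "^${name}=" "$conf" > "$tmp" || true
    else
        : > "$tmp"
    fi
    printf '%s=%s\n' "$name" "$value" >> "$tmp"
    mv "$tmp" "$conf"
}

# Usage on the gateway, following the post (left commented out on purpose):
#   fw ctl get int tls_parser_enable      # check the current value
#   fw ctl set int tls_parser_enable 0    # disable on the fly
#   set_kernel_param "$FWDIR/boot/modules/fwkern.conf" tls_parser_enable 0
```

Taking the workaround back out later means removing that line from the file again and rebooting.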

25 Replies

Just had a closer look at the IPs that are being sent to the medium path, and they all point to O365 / MS.

Strange, as the O365 object is now fully removed from the rules and DB.

Ouch! The penny just dropped. I'm not even sure how I overlooked the fact that the CPUSE upgrade changed our hyper-threading from OFF to ON but (!) kept the original manual affinity settings. So it's not surprising that CPU usage was screwed up, i.e. our multiqueue was running on 6 "half" cores instead of 6 "full" ones, etc.

Something to watch out for if you are using manual affinities on VSX!
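One way to catch this kind of change is to compare the hyper-threading state before and after an upgrade. The following is a rough sketch, assuming a Linux-style /proc/cpuinfo that exposes the `physical id` and `core id` fields (some virtual machines omit them, in which case it reports "off"). The function name `smt_state` is hypothetical and not a Check Point command.

```shell
# Hypothetical helper: print "on" if any (physical id, core id) pair appears
# more than once in a /proc/cpuinfo-style file, meaning two logical CPUs
# share one physical core; print "off" otherwise.
smt_state() {
    awk -F': *' '
        /^physical id/ { phys = $2 }
        /^core id/     { seen[phys "/" $2]++ }
        END {
            for (k in seen) if (seen[k] > 1) { print "on"; exit }
            print "off"
        }
    ' "${1:-/proc/cpuinfo}"
}

# Usage: record the value before the upgrade and compare afterwards.
#   smt_state
```

If the state flips from "off" to "on", manual affinity settings that were written for full cores are worth reviewing.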

The Fast Acceleration (picture 1 green) feature lets you define trusted connections to allow bypassing deep packet inspection on R80.20 JHF103 and above gateways. This feature significantly improves throughput for these trusted high volume connections and reduces CPU consumption.

During my tests, I could reduce CPU (core) usage by about 10%-30%. That is also logical, since no more content inspection is performed.

I like that you showed that graphically.👍

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips

Hi Kaspars,

Thanks for your report, a few comments:

1) What do you have set in the Track field of your rules? If using Detailed or Extended logging this can pull traffic into PSLXL to provide the extra detail being requested. Found out about this one while writing the third edition of my book.

2) Do you have any services with Protocol Signature enabled in the Network/Firewall policy if using ordered layers, or in the top level of rules if using inline? This can also cause some of what you are seeing and you should try to stick to simple services (just a port number) in those layers if possible, then call for Protocol Signatures and applications/URLs/content in subsequent layers.

3) As far as that wacky Accelerated Conns percentage goes, you must have a very large amount of stateless traffic; see sk109467: 'Accelerated conns' value is higher than 'Accelerated pkts' in the output of 'fwaccel stat....

4) As you noticed the gateway is much more dependent on speedy DNS starting in R80.20 due to Updatable objects, rad, wsdnsd and a lot of other daemons.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Wow nice one Kaspars, don't think I would have ever figured that one out. Will disabling the TLS parser as shown cause issues with other blades should they get enabled later?

@Timothy_Hall as far as I understood, R&D are working on a proper long-term solution to fix it.

As for the FQDN alerts, I can now confirm that the O365 updatable object is definitely causing them, but only on our busy VSX. I haven't seen the same issue on regular gateways.

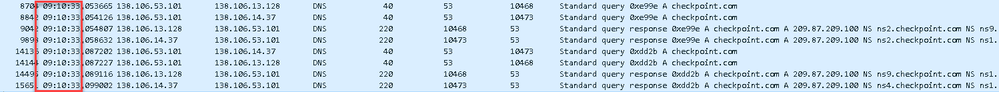

According to CP, the alert is issued when the resolver cannot get a response to a checkpoint.com query. I took a tcpdump and confirmed that DNS is actually responding, but it does generate a wsdnsd log entry. Here's an example of the packet capture and the matching wsdnsd.elg entry:

[wsdnsd 32546]@vsx1-ext[20 Jan 9:10:33] Warning:cp_timed_blocker_handler: A handler [0xf6f213d0] blocked for 44 seconds.

[wsdnsd 32546]@vsx1-ext[20 Jan 9:10:33] Warning:cp_timed_blocker_handler: Handler info: Library [/opt/CPshrd-R80.30/lib/libResolver.so], Function offset [0x2b3d0].

[wsdnsd 32546]@vsx1-ext[20 Jan 9:10:33] Warning:cp_timed_blocker_handler: Handler info: Nearest symbol name [_Z10Sock_InputiPv], offset [0x2b3d0].

Still digging through my packet capture to see if I can find any strange names / responses etc.
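To see how often and for how long the handler blocks, the durations can be pulled out of wsdnsd.elg. This is a small sketch, assuming the log lines keep exactly the format shown above. `blocked_seconds` is a hypothetical helper name, and the location of the log file is left to the reader, since the post does not give it.

```shell
# Hypothetical helper: print the number of seconds from every
# "cp_timed_blocker_handler: A handler [...] blocked for N seconds" line.
# Reads the files given as arguments, or standard input if there are none.
blocked_seconds() {
    sed -n 's/.*cp_timed_blocker_handler: A handler \[[^]]*] blocked for \([0-9][0-9]*\) seconds.*/\1/p' "$@"
}

# Usage: longest blocking time seen in a log file.
#   blocked_seconds wsdnsd.elg | sort -n | tail -1
```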

@Timothy_Hall - Indeed, when you enable blades that require the TLS parser, you will need to remove the WA I suggested.

The WA is currently only for the combinations I sent.

That makes sense, thanks. Will add this workaround to the upcoming R80.40 addendum but be careful to add caveats for which blades are enabled.

Note that the long-term fix for the TLS parser being inappropriately invoked with certain blade combinations is included in R80.40 Jumbo HFA Take 78+. This fix is also going to be backported into the R80.20 and R80.30 Jumbo HFAs, as mentioned in my R80.40 addendum for Max Power 2020. It is always preferable to have this fix present if possible rather than manually tampering with the TLS parser, as doing so can cause further problems.

👍

Just a quick update on the FQDN object alerts.

It was all caused by a missing rule to permit DNS requests over TCP from the gateway. I have added full details in the corresponding thread about FQDN.

We upgraded to R80.30 with the latest HF, and even with the parser disabled (we don't use other blades), 49% of traffic is going through the medium path. How can we investigate further?

We have a case open at the moment.

Output of enabled_blades please.

FW, Identity Awareness, IPS.

Got info from TAC (Canada) that tcp/445 and HTTPS will use the medium path on R80.30 regardless of whether the PSL parser is disabled or not.

That's correct - file share traffic (445) is forced via PXL. There's a procedure available to exclude it but, from memory, it's fairly complex.

Well, we used fast_accel to bypass the big flows we had, and the PXL % has now dropped to 20%.

That traffic is now overloading the SND core, and the fwk instances are still quite high.

More than 4 months later, after multiple sessions with TAC, there is still no solution (one special hotfix did not help). This seems to be a known issue, and TAC is not able to sync with R&D to get a fix? I'm wondering if someone in the community was able to get a fix in the end.

Hi Ilya, are you referring to "The fix from sk166700 is integrated into take 219 of the jumbo for R80.30 (look for the article or PRJ-14368, PRJ-15747, or PRHF-10818 in sk153152)"? Because in case 6-0002031094 we had a private fix for those on our current JHF. I would like to avoid making a change and giving my client hope for nothing. Thanks a lot.

Hi Ilya, after installing JHF 219 the issue is still the same. I still need to force the use of fast_accel to accelerate tcp 443/445, otherwise the fwks are saturated.

Hi @Khalid_Aftas ,

You mention port 445, which is the SMB protocol. I'm not sure it is the same issue, as I'm sure the TLS parser issue described in the SK is solved in JHF 219.

For the SMB protocol, if the majority of your traffic is of that type, the only possible solution I'm aware of is putting it under fast_accel.

Did you try putting only 445 under fast_accel?

I used fast_accel with a combination of specific sources/destinations and SMB (the top talkers) and saw a decrease straight away.

Using fast_accel for all SMB traffic is another story: with this workaround all the security features are useless.

Is this a known limitation on R80.30, that SMB traffic is not accelerated? Because we had 0 issues on R77.30.

Hi @Khalid_Aftas ,

As far as I know, we had the same behavior in R77.30. I suggest opening a TAC case for further investigation.

Thanks,

Ilya
