
CheckMates > Products > Hybrid Mesh > Firewall and Security Management


R80.20 - R80.40 Performance Issues due to incorrect Selective Acknowledgements (SACKs)

I thought I should post this, given all the performance issues I've read about online following upgrades from R77.x to R80.x, as it may help some people. I should say that I'm still waiting for Check Point support to acknowledge the issue and give feedback on a fix. Apologies in advance for the length of the post, but it's complicated.

We were initially moving from an old cluster on R77.20 to a new, upgraded firewall gateway cluster on new hardware running R80.20 (with the new Gaia 3.10 kernel). However, each time we made the R80.20 cluster live, we started getting reports of poor data-transfer performance and random instances of connections being lost (reset), mainly from our 85 remote sites over a COIN, each with its own Sophos XG firewall protecting the local site. Each time we've had to revert to the old hardware on R77.20. Almost 10 months later, Check Point support, right up to R&D level, have not been able to identify the issue.

The issue, as I initially described it to Check Point, is that we're seeing an awful lot of 'first packet isn't SYN' messages in the logs, and a rapidly increasing cumulative total in the cpview command. Closer inspection of packet captures showed significantly more connection resets after moving to R80.20 (now R80.40): connections are randomly being dropped, and this is causing the 'first packet isn't SYN' log entries.
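To illustrate why connections that get dropped mid-stream show up as 'first packet isn't SYN' entries, here is a deliberately simplified model of a stateful connection table. It is a sketch under our own assumptions, not Check Point's actual logic: the class name StatefulTable, the five-tuple layout and the IP addresses are hypothetical; only the ports 53954 and 443 come from the packet captures in this post. Once a connection's state has been torn down (for example after a reset from another device on the path), any further non-SYN packet for it no longer matches an entry and is dropped with that message. Real gateways usually keep a closing connection for a short end timeout rather than deleting it instantly; the sketch ignores that.

```python
from dataclasses import dataclass, field

# Hypothetical five-tuple: (src_ip, src_port, dst_ip, dst_port, protocol)
FiveTuple = tuple[str, int, str, int, str]


@dataclass
class StatefulTable:
    """Much-simplified stateful firewall connection table (illustrative only)."""
    connections: set = field(default_factory=set)
    log: list = field(default_factory=list)

    def process(self, key: FiveTuple, flags: set) -> bool:
        """Return True if the packet is accepted, False if it is dropped."""
        src, sport, dst, dport, proto = key
        reverse = (dst, dport, src, sport, proto)
        known = key in self.connections or reverse in self.connections
        if "RST" in flags:
            # A reset tears down the state for both directions at once
            # (no end timeout is modelled here).
            self.connections.discard(key)
            self.connections.discard(reverse)
            return known
        if known:
            return True  # packet belongs to an existing connection
        if flags == {"SYN"}:
            self.connections.add(key)  # a new connection must start with SYN
            return True
        # No matching state and not a SYN: drop and log.
        self.log.append(f"first packet isn't SYN: {src}:{sport} -> {dst}:{dport}")
        return False


# Example with made-up addresses; the ports are taken from the captures.
fw = StatefulTable()
client = ("10.0.0.10", 53954, "10.0.1.20", 443, "tcp")
server = ("10.0.1.20", 443, "10.0.0.10", 53954, "tcp")
fw.process(client, {"SYN"})         # accepted, state created
fw.process(server, {"SYN", "ACK"})  # accepted, matches existing state
fw.process(client, {"RST"})         # state torn down
fw.process(server, {"ACK"})         # dropped and logged
print(fw.log)
```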

Recently I noticed that it's actually the Sophos XG firewalls at our remote sites that are dropping the connections, but oddly only when the R80.x cluster is active, not the R77.20 one. Of course, when I told Check Point that the Sophos XG was dropping the connections, they immediately closed the call with a rather abrupt response.

I now believe I've found the issue. The problem appears to be that R80.20 (and newer) incorrectly alters the Selective Acknowledgement (SACK) information in packets that TCP uses to recover efficiently from packet loss (lost segments). You can see what happens in the packet captures below.

Capture from Internal Interface (where the server is connected)

| Packet | Time | Source | Destination | Protocol | Length | Info |
|---|---|---|---|---|---|---|
| 14 | 2020-07-21 07:52:13.987323 | SERVER | CLIENT | TCP | 60 | 443 → 53954 [ACK] Seq=1896 Ack=2014 Win=35456 Len=0 |
| 15 | 2020-07-21 07:52:14.026565 | CLIENT | SERVER | TCP | 1506 | [TCP Previous segment not captured] 53954 → 443 [ACK] Seq=3482 Ack=1896 Win=65536 Len=1452 [TCP segment of a reassembled PDU] |
| 16 | 2020-07-21 07:52:14.027036 | CLIENT | SERVER | TCP | 1506 | 53954 → 443 [ACK] Seq=4934 Ack=1896 Win=65536 Len=1452 [TCP segment of a reassembled PDU] |
| 17 | 2020-07-21 07:52:14.027050 | SERVER | CLIENT | TCP | 66 | [TCP Window Update] 443 → 53954 [ACK] Seq=1896 Ack=2014 Win=38272 Len=0 SLE=3482 SRE=4934 |

Simultaneous Capture from External Interface (where the client is connected)

| Packet | Time | Source | Destination | Protocol | Length | Info |
|---|---|---|---|---|---|---|
| 14 | 2020-07-21 07:52:13.987378 | SERVER | CLIENT | TCP | 54 | 443 → 53954 [ACK] Seq=1896 Ack=2014 Win=35456 Len=0 |
| 15 | 2020-07-21 07:52:14.026552 | CLIENT | SERVER | TCP | 1506 | [TCP Previous segment not captured] 53954 → 443 [ACK] Seq=3482 Ack=1896 Win=65536 Len=1452 [TCP segment of a reassembled PDU] |
| 16 | 2020-07-21 07:52:14.027024 | CLIENT | SERVER | TCP | 1506 | 53954 → 443 [ACK] Seq=4934 Ack=1896 Win=65536 Len=1452 [TCP segment of a reassembled PDU] |
| 17 | 2020-07-21 07:52:14.027060 | SERVER | CLIENT | TCP | 66 | [TCP Window Update] 443 → 53954 [ACK] Seq=1896 Ack=2014 Win=38272 Len=0 SLE=905734836 SRE=905736288 |

In packet 17, the SLE (SACK left edge) and SRE (SACK right edge) sequence numbers are altered between entering and leaving the R80.x firewall. The outgoing values are not valid sequence numbers for this connection (far too high, and not sequence numbers previously seen in it), so our Sophos XG firewalls at the remote sites correctly drop the packets as invalid.
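A quick way to check that the change in packet 17 looks like a systematic translation rather than random corruption is to compare the edges across the two captures. The Python sketch below uses only the numbers shown in the captures (presumably Wireshark's relative sequence numbers). The helper names and the "SACK block must lie above the cumulative ACK and not beyond the highest byte sent" rule are our own assumptions about what a stateful inspector such as the Sophos XG might verify, not its documented behaviour.

```python
MOD = 2**32  # TCP sequence numbers wrap around modulo 2^32

# Values from packet 17 in the two captures (presumably relative numbers).
internal_sack = (3482, 4934)            # SLE/SRE on the internal interface
external_sack = (905734836, 905736288)  # SLE/SRE on the external interface
cum_ack = 2014                          # Ack= in packet 17 (same on both sides)
snd_nxt = 4934 + 1452                   # end of packet 16: highest byte sent so far


def seq_lt(a: int, b: int) -> bool:
    """True if sequence number a comes before b (serial number arithmetic)."""
    return 0 < (b - a) % MOD < 2**31


def sack_block_plausible(sle: int, sre: int, ack: int, nxt: int) -> bool:
    """Hypothetical sanity check: the block must sit above the cumulative ACK,
    be non-empty, and not extend past data the sender has actually sent."""
    return seq_lt(ack, sle) and seq_lt(sle, sre) and not seq_lt(nxt, sre)


# Offset applied to each edge between the two interfaces.
left_shift = (external_sack[0] - internal_sack[0]) % MOD
right_shift = (external_sack[1] - internal_sack[1]) % MOD
print(left_shift, right_shift)              # 905731354 905731354
print(internal_sack[1] - internal_sack[0])  # 1452, exactly the length of packet 15
print(sack_block_plausible(*internal_sack, cum_ack, snd_nxt))  # True
print(sack_block_plausible(*external_sack, cum_ack, snd_nxt))  # False
```

Both edges are shifted by the same 905,731,354 and the block stays exactly one 1452-byte segment wide, which is consistent with a fixed sequence-number offset being applied to the SACK edges; after the shift, the block points past anything the client has sent, so a strict inspector would reject it.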

Today I tried going live with R80.40 in our environment again, but with selective acknowledgements turned off in Gaia. Although this obviously isn't the long-term fix, everything is working fine: no reports of connections being lost (reset) or of performance issues! I'm waiting for Check Point to acknowledge and fix the issue, which has persisted since R80.20 and is probably affecting a lot of customers, but at least I've found a workaround in the meantime and hope it helps someone.

Accepted Solution


Finally, some good news: I got the long-awaited update from Check Point through our third-party support company.

"Checkpoint have advised that your custom Hotfix is included in T119, (non GA, released 4th July 21)."

I found the release notes here

It appears to be true: the following is included in the release notes for R80.40 Take 119.

PRJ-25598,PRHF-12228 | Security Gateway | In some scenarios, packets are dropped due to incorrect SACK translation when SACK and sequence translation are being used together.
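The release note points at a general rule: when a gateway rewrites sequence numbers (for example for the ISN spoofing protection), every field that refers to the translated sequence space has to be shifted back consistently, and that includes the SACK edges, not just the ACK number. The Python sketch below illustrates that rule under simplifying assumptions (one direction translated by a fixed offset, arithmetic modulo 2^32). Segment, translate_forward, translate_reverse and translate_reverse_buggy are hypothetical names; this is not Check Point's code, and the "buggy" variant is just one plausible failure mode, since the release note doesn't describe the exact defect.

```python
from dataclasses import dataclass, replace

MOD = 2**32  # TCP sequence space wraps modulo 2^32


@dataclass(frozen=True)
class Segment:
    seq: int
    ack: int
    sack_blocks: tuple = ()  # ((left_edge, right_edge), ...)


def translate_forward(seg: Segment, delta: int) -> Segment:
    """Host A -> host B: the gateway shifts A's sequence number by delta."""
    return replace(seg, seq=(seg.seq + delta) % MOD)


def translate_reverse(seg: Segment, delta: int) -> Segment:
    """Host B -> host A: the ACK *and* every SACK edge refer to A's
    sequence space, so all of them must be shifted back by delta."""
    return replace(
        seg,
        ack=(seg.ack - delta) % MOD,
        sack_blocks=tuple(((left - delta) % MOD, (right - delta) % MOD)
                          for left, right in seg.sack_blocks),
    )


def translate_reverse_buggy(seg: Segment, delta: int) -> Segment:
    """Illustrative failure mode: ACK translated, SACK edges left untouched."""
    return replace(seg, ack=(seg.ack - delta) % MOD)


# Example with an arbitrary offset of 1,000,000.
delta = 1_000_000
print(translate_forward(Segment(seq=3482, ack=1896), delta).seq)  # 1003482
reply = Segment(seq=1896, ack=1_002_014, sack_blocks=((1_003_482, 1_004_934),))
print(translate_reverse(reply, delta).sack_blocks)        # ((3482, 4934),)
print(translate_reverse_buggy(reply, delta).sack_blocks)  # ((1003482, 1004934),)
```

With the buggy variant, host A receives a correct cumulative ACK but SACK edges that point far beyond anything it has sent, which is the kind of packet a strict TCP inspector would drop.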

It looks like we'll all have to either wait for Take 119 to go GA or get a custom hotfix, this time for Take 118.

25 Replies

---

I wonder if disabling SecureXL would also resolve this issue (versus disabling SACK).

Not that it's a solution, of course, but it does help isolate where the issue might be.

---

@PhoneBoy, in R80.20 and up you cannot disable SXL completely. Just a reminder 🙂

---

Hi PhoneBoy, thanks for the response. In an effort to keep the post as short as I could (I failed, huh), I left out a lot of the detail. Check Point support tried various things over the last 10 months; turning off SecureXL was one of the first. They tried:

- Turning off SecureXL (Gaia)
- Turning off IPS (Gaia)
- Turning off 'drop out of state TCP packets' (Policy - Global Settings & Gaia)
- Increasing the TCP end timeout (Policy - Global Settings)
- Fiddling with the IPS 'Initial Sequence number spoofing' protection
- Enabling Fast Acceleration (Gaia - essentially bypassing the firewall)
- A fwkern.conf entry I don't understand (fw_tcp_enable=0)

There were a load of other things too, but I gave up taking notes, as I was told it was all documented and that anything that needed reverting would be reverted.

My solution of disabling SACK in the kernel involved adding this line to both $FWDIR/boot/modules/fwkern.conf and $PPKDIR/conf/simkern.conf, then rebooting:

tcp_sack_permitted_remove_option=1
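We don't know exactly how tcp_sack_permitted_remove_option is implemented, but its name suggests the general technique: strip the SACK-permitted option (TCP option kind 4, length 2) from SYN packets so the endpoints never negotiate SACK, and therefore never send SACK blocks that could be mistranslated. A minimal Python sketch of that technique on a raw TCP options field, using the hypothetical function name strip_sack_permitted, might look like this:

```python
EOL, NOP, SACK_PERMITTED = 0, 1, 4  # TCP option kinds (RFC 793 / RFC 2018)


def strip_sack_permitted(options: bytes) -> bytes:
    """Return a copy of a TCP options field with any SACK-permitted option
    overwritten by NOP bytes, so the peer never sees SACK offered."""
    out = bytearray(options)
    i = 0
    while i < len(out):
        kind = out[i]
        if kind == EOL:          # end of option list
            break
        if kind == NOP:          # single-byte padding
            i += 1
            continue
        if i + 1 >= len(out):
            raise ValueError("truncated TCP option")
        length = out[i + 1]
        if length < 2 or i + length > len(out):
            raise ValueError("malformed TCP option length")
        if kind == SACK_PERMITTED:
            out[i:i + length] = bytes([NOP]) * length
        i += length
    return bytes(out)


# Example SYN options: MSS 1460, SACK-permitted, timestamps, NOP, window scale 7
syn_options = bytes([2, 4, 0x05, 0xB4, 4, 2, 8, 10, 0, 0, 0, 1, 0, 0, 0, 0, 1, 3, 3, 7])
print(strip_sack_permitted(syn_options).hex(" "))
# 02 04 05 b4 01 01 08 0a 00 00 00 01 00 00 00 00 01 03 03 07
```

Overwriting the option with NOPs rather than deleting it keeps the TCP header length unchanged; a real middlebox would still need to recompute the TCP checksum.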

Now that I've identified the actual issue and what the firewall is doing with the SACK packets, Check Point R&D are interested again. They have suggested the more elegant solution of removing the above kernel entries (then rebooting) and using the on-the-fly command:

fw ctl set int fw_sack_enable 0

This has also worked (SACK packet SLE and SRE numbers are not being altered) and is where we stand at the moment.

---

Well, this has been an interesting thread for sure. It looks like the fw_sack_enable variable was set to 0 by default in R80.10 and earlier (which agrees with the various SKs referencing this variable), yet it seems to be set to 1 by default starting in R80.20 for some reason. It would be interesting to hear the rationale behind that change from Check Point.

Also, do you happen to know whether the problematic traffic was being handled by Active Streaming (CPASXL path) due to something like HTTPS Inspection being enabled? If this problematic traffic was actually being handled in the Medium path (PSLXL), I find it concerning that the SACK values were modified in this manner, as this would seem to violate the "passive" streaming concept.

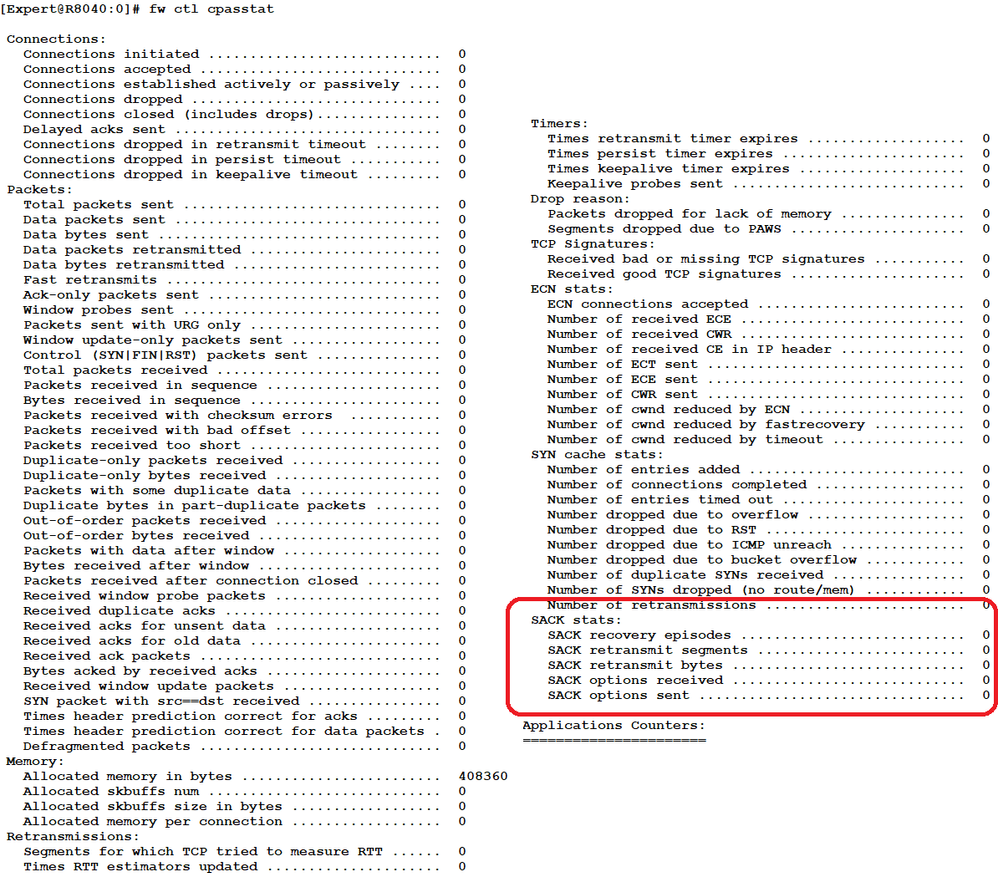

For future reference, if the problematic traffic was being handled by CPASXL, a new command was introduced in R80.20 called fw ctl cpasstat which shows detailed active streaming traffic handling statistics including SACK counters that might be helpful:

New Book: "Max Power 2026" Coming Soon

Check Point Firewall Performance Optimization

---

Finally! It's taken 10 long months, hundreds of packet captures and I dread to think how many hours of investigation, but a few hours ago Check Point R&D finally acknowledged the problem. They stated that "RnD have found this is indeed an issue and is because the ISN Spoofing protection is enabled by default from R80.30 upwards. RnD are still investigating but do not have an ETA on a permanent fix for the issue (whether that is a portfix or added to the next jumbo hotfix accumulator)."

@Timothy_Hall That's useful info, thanks; it will help anyone trying to work out whether they're impacted by this issue. I suspect most customers are, whether they know it or not. As you say, it must have been introduced earlier than R80.30, as we first upgraded to R80.20 and the problem existed in that version.

---

How big of a deal is this? We're starting to look at some R80.30 boxes. I don't think we've had reports of issues, but we're looking into it.

---

I assume Check Point will soon release an SK about this.

---

@John_Fleming Someone more knowledgeable than me will be able to answer this better, but it should only be an issue if you have packet loss in your network, as that is when SACKs are used. For us it was very obvious: we discovered that our service provider used PPPoE for the connections to our remote sites, reducing the MTU from the default 1500 bytes (which we used) to 1492. This caused packet loss for one high-profile application in particular, and the Check Point SACK problem led to connections being reset all the time, because our remote-site firewalls' TCP sequence inspection was not happy with the SACKs altered by Check Point.

Some other applications experienced performance issues, I suspect because whenever SACKs were required they were incorrect, and this led to lots of retransmissions. Our network statistics monitor shows that the number of connection resets on our network averaged 200 million per week (it's a very busy network) over the previous 5 weeks; in the 7 days since the workaround we've had only 62 million.
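The MTU figures above translate into TCP payload sizes as follows. This is a sketch assuming IPv4 and TCP headers without options (20 bytes each) and the usual 8 bytes of PPPoE overhead (a 6-byte PPPoE header plus a 2-byte PPP protocol field); the constant and function names are illustrative.

```python
IPV4_HEADER = 20    # bytes, assuming no IP options
TCP_HEADER = 20     # bytes, assuming no TCP options
PPPOE_OVERHEAD = 8  # 6-byte PPPoE header + 2-byte PPP protocol field


def mss_for_mtu(mtu: int) -> int:
    """Largest TCP payload that fits in one IPv4 packet of the given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


ethernet_mtu = 1500
pppoe_mtu = ethernet_mtu - PPPOE_OVERHEAD
print(pppoe_mtu)                                          # 1492
print(mss_for_mtu(ethernet_mtu), mss_for_mtu(pppoe_mtu))  # 1460 1452
print(ethernet_mtu - pppoe_mtu)                           # 8 bytes too big per full-size packet
```

A host that assumes a 1500-byte path and sends 1460-byte segments produces IP packets 8 bytes too large for a 1492-byte PPPoE link; if those packets can't be fragmented and the resulting ICMP messages don't make it back to the sender, they are simply lost. That is one common way this kind of MTU mismatch turns into the packet loss that triggers SACKs.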

---

So is it affecting only your PPPoE links, or other links as well? I don't know if we're tracking resets (which, by the way, sounds awesome as a cool graph view). Would you be OK with showing your cpasstat output? We checked ours and, given the size of our internet pipe, it's a grain of sand on the beach numbers-wise.

Thanks for this post BTW!

---

It's difficult for me to say, as most of our traffic goes to our remote sites on our COIN, over links where our service provider uses PPPoE (which ultimately caused the packet loss that led to the use of SACKs). This is where we focused all our attention with troubleshooting and Check Point support, as it was easy to replicate. Unfortunately, now that I have the workaround in place, I can't check other traffic going to the internet rather than to our remote sites. Also, our gateway was rebooted while applying the workaround, so all stats were reset to zero. With the workaround in place, all the stats output by fw ctl cpasstat are at zero, and unfortunately I didn't run this command while we were having the issue, so I don't have a record.

---

Understood. Is it possible the reset is being sent by the Sophos on behalf of the client, for example by an IPS protection on the Sophos? I'm not trying to blame anything, just still trying to gauge whether this is something I need to freak out about. I only ask because I've been poking around the RFCs and I'm not seeing anything that says a connection should be reset if the SACK ranges aren't right.

---

If the outputs of fw ctl cpasstat are all zero that just means you don't have any traffic that requires active streaming, and that (probably) the Selective ACK counters were being modified on traffic subject to passive streaming (Medium Path - PSLXL) prior to your workaround.

---

I had a session with Check Point earlier this week; they asked me to upgrade our environment to R80.40 JHF Take 77 and then upload CPINFO files, so they could use our build to create the fix. I've been told they're not yet sure whether they'll provide the fix as a hotfix or just add it to the next jumbo hotfix accumulator.

I'll post again when the fix is received and tested.

---

The fix is now in place and working: there are no longer any incorrect SLE and SRE values in selective acknowledgement packets. The fix was a hotfix built on R80.40 JHF Take 77, so presumably it'll be rolled into a future JHF at some point soon.

---

I've received a response from Check Point. R&D have confirmed the fix will be integrated into a future jumbo hotfix but have no ETA on this at the moment as it needs further evaluation.

This means we’re not going to be able to upgrade beyond our current version (R80.40 Jumbo HFA Take 77 with custom hotfix) until this fix has been fully implemented in a future release.

---

Hi Paul,

We are having the same issue as you. Can you tell me if you are running routing protocols, and which ones? Also, is it a large routing table?

Thanks,

Mike

---

Hi Mike,

Sorry for taking so long to reply; I didn't get an email notification of your post. We're not using any routing protocols, just static routes, and it's not a big routing table.

I've yet to receive any feedback on whether this has been rolled into future releases, despite my best efforts to find out.

Best Regards,

Paul.

---

Hi Paul,

Do you have any news from Check Point? We might have similar problems. I've checked the lists of resolved issues but couldn't find anything regarding TCP SACK or selective acknowledgements.

Do you know if the fw ctl set command had any impact on existing connections?

Best regards

Mike

---

Hi Mike,

I contacted our Check Point account manager 3 weeks ago to ask if they'd chase this up for me, as it's all gone cold. Your message has reminded me that I need to ask whether they've got anywhere yet, so I've done that now and am awaiting an update.

In theory, there could be a minor performance hit to some TCP connections if SACK isn't permitted, but we didn't notice any problems when we implemented that as a workaround.

Hopefully I'll get a response soon; like you, I've been checking all the JHF release notes, including the latest one (R80.40 Jumbo Hotfix Accumulator - New Ongoing Take #102), but there's still no mention of anything.

I'll be sure to post as soon as I hear anything.

Best Regards,

Paul.

---

Hi Paul,

Great thread. I've got similar issue noticing too many "First packet isn't SYN" errors for my liking.

Do you have a case reference or something that we could all chase Check Point with?

We may get a stronger response with more people complaining about the same issue.

Thanks.

---

Hi,

I've actually now got a memory leak issue and as part of the TAC case I've been asked to upgrade to the latest R80.40 take, which obviously I cannot do, due to the custom hotfix previously provided. This could actually be a good thing, as Check Point will hopefully have to let me know if this issue has been resolved in the latest take or not.

If it has, I can happily upgrade; if not, I'll despair that they've allowed this bug to persist in their software for yet another year.

I'll update when I receive a response.

As you asked, the TAC case reference changed multiple times, but it was 6-0001812618 (then I think they opened an internal case for themselves with the "high performance team" 6-0001988570).

Best Regards,

Paul.

---

The brilliant "upgrade to latest version" trick!

OK, once I've got my other case closed, I'll contact them about this too!

Thanks!

---

Just a quick update to close this topic: R80.40 Jumbo HF Take_120 and R81 Jumbo HF Take_36 have gone to general release and contain the fix for this issue.

We decided to do a Blink image upgrade to R81 GA Take 392 + Jumbo HF Take_36 and have been running fine for a week now, with no issues reported.


©1994-2026 Check Point Software Technologies Ltd. All rights reserved.
