Problems with large VSX platforms running R80.40 take 94

This is just a heads-up if you are running VSX with more than 4 SND / MQ cores and fairly high traffic volumes.

Please do not upgrade to R80.40 with a Jumbo Hotfix take lower than 100 (the fix is already included in take 100); otherwise, request a portfix.

We upgraded from R80.30 take 219 to R80.40 take 94 and faced multiple issues:

- high CPU usage on all SND / MQ cores, spiking to 100% every half a minute

- packet loss on traffic passing through FW

- RX packet drops on most interfaces

- the virtual router fwk process was constantly running at 100%

- clustering was reporting Sync interface down in fwk.elg

I don't want to go into the root cause details, but let's say there is a miscalculation of the resources required to handle information passed between the SND and FWK workers. That is corrected in JHF take 100 and above.

I just wanted to say that Check Point R&D was outstanding today, jumping on the case and working until it was resolved! Really impressed. I wish I could name names here, but that would be inappropriate.


20 Replies


Thank you for the information and the heads-up.

I just wanted to update you: PRJ-15447 has already been released and is part of our latest ongoing take (take 100).

Matan.


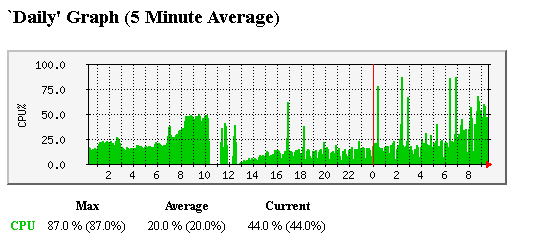

@MatanYanay not such great news this morning: the virtual router CPU overload is back, including packet loss. You can see it has been growing gradually since the fix. I have failed over to the standby VSX member and it seems to be holding for now. Need your top guns back! 🙂


Keep us updated @Kaspars_Zibarts 🙂 We have 4 large VSX clusters on open servers running R80.40 take 91.

I can't say we experience massive drops, but I am always suspicious. The load is unexpectedly high, and R80.40 has in general been an uphill struggle.

/Henrik


Are you seeing high CPU utilisation despite relatively low throughput? If so, this matches what I see.


@MatanYanay - PRJ-15447 is not mentioned in the JHF. Can you please elaborate?

/Henrik


R&D confirmed that it is included, @Henrik_Noerr1.

Quick check:

fw ctl get int fwmultik_gconn_segments_num

This should return the number of SND cores. When unpatched, it returns 4.
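The quick check above could be scripted roughly as follows. This is only a sketch: it parses text in the `name = value` form that `fw ctl get int` prints, and the function names and the idea of passing in the expected SND core count by hand are illustrative assumptions, not anything provided by Check Point. Note that on a system with exactly 4 SND cores the value alone cannot distinguish patched from unpatched.

```python
import re


def parse_kernel_int(output: str, name: str) -> int:
    """Extract the integer from a line of the form 'name = 4'."""
    pattern = rf"^\s*{re.escape(name)}\s*=\s*(-?\d+)\s*$"
    match = re.search(pattern, output, re.MULTILINE)
    if match is None:
        raise ValueError(f"{name} not found in output")
    return int(match.group(1))


def segments_match_snd(output: str, snd_cores: int) -> bool:
    """True if the reported segment count equals the SND core count.

    snd_cores is supplied by the caller (hypothetical input); this sketch
    does not try to discover it from the system.
    """
    value = parse_kernel_int(output, "fwmultik_gconn_segments_num")
    return value == snd_cores


# Output text as it would be captured from the command on the gateway.
sample = "fwmultik_gconn_segments_num = 4\n"
print(segments_match_snd(sample, 8))  # False
```

With 8 SND cores and a reported value of 4, the check fails, which is the unpatched symptom described in this thread.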

In all honesty, we have two other VSX clusters running VSLS at approx. 10 Gbps, and we do not see issues there.

The difference I see is that this particular VSX cluster is HA instead of VSLS, and we have a VR (virtual router) interconnecting nearly all VSes and carrying a fairly high volume of traffic.


Yeah - we get 4 on all nodes.

UserCenter unsurprisingly yields no results for fwmultik_gconn_segments_num.

Any info? I'm feeling too lazy to create an SR just before my Easter vacation 🙂

/Henrik


Indeed, this is one of those things buried deep in the system configuration. I just know the required values. If any of the R&D guys want to expand on it, I'll let them. I don't want to spread incorrect or forbidden info! 🙂


I just ran this on my system and I get the following:

# fw ctl get int fwmultik_gconn_segments_num

fwmultik_gconn_segments_num = 4


I implemented JHFA102 and the above parameter now reports correctly. Strangely, I had to reboot the node twice.

The SNMPv3 user also seemed to get corrupted; I had to delete it and add it back, otherwise the SNMP details would not be discovered.

In /var/log/messages I have been seeing lots of the following since implementing the Jumbo:

kernel: dst_release: dst:xxxxxxxxxxxx refcnt:-xx

The above is explained in sk166363, which implies it can be safely ignored. That said, I'm still going to ask TAC about it to cover myself.
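To get a feel for how many of these messages are being logged, something like the following could be run against a copy of the log. It is a rough sketch that assumes the lines follow the `kernel: dst_release: dst:<value> refcnt:<number>` shape quoted above; the sample lines, host name, and dst values are made up for illustration.

```python
import re
from collections import Counter

# Matches the message shape quoted in the post; the dst value is kept as text.
DST_RELEASE = re.compile(r"kernel: dst_release: dst:(\S+) refcnt:(-?\d+)")


def summarize_dst_release(lines):
    """Count dst_release messages per dst value."""
    counts = Counter()
    for line in lines:
        match = DST_RELEASE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Illustrative lines only; real entries would come from /var/log/messages,
# e.g. summarize_dst_release(open("/var/log/messages", errors="replace")).
sample_lines = [
    "Apr  1 10:00:00 gw1 kernel: dst_release: dst:aaaa0001 refcnt:-1",
    "Apr  1 10:00:05 gw1 kernel: dst_release: dst:aaaa0001 refcnt:-2",
    "Apr  1 10:00:09 gw1 kernel: dst_release: dst:bbbb0002 refcnt:-1",
    "Apr  1 10:00:10 gw1 sshd[123]: unrelated message",
]
summary = summarize_dst_release(sample_lines)
print(sum(summary.values()), "dst_release messages,", len(summary), "distinct dst values")
```

A count like this is handy to attach to a TAC case, but it says nothing about whether the messages are harmful; the SK remains the reference for that.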

So far I also believe the CPU usage pattern has changed for the better, but I will know more tomorrow during a working day.


We rolled ours back after a two-week struggle with CPU issues. Some of them later turned out to be unrelated to the upgrade, so another attempt is being planned. I still suspect our VSX setup: HA with a virtual router, which differs from the other VSX clusters we have (VSLS, no VR). Plus the load: 30 Gbps vs 10 Gbps.


It's interesting, because I suspect a VSW issue in my case and you suspect a VR issue in yours. Both would use wrp links and then have a single physical interface leading to the external network.


My other two VSX clusters both have a VSW and they work just fine :). I suspect the combination of HA cluster type + VR 🙂


More importantly, update the SK to reflect everything fixed in the Jumbo.


I wonder if this is also playing into my long-standing issue with performance! I know we have discussed this in another thread.

I also found that cppcap is broken in R80.40 with JHFA91 and fixed in JHFA100 (or you can get an updated RPM). I also suspect tcpdump is not quite working.

When running either of these, I only seem to see one-way traffic. For example, if I ping from a workstation, the ping works, but tcpdump and cppcap report only echo-request traffic; the echo-reply is never seen. (For tcpdump, SecureXL was turned off; cppcap does not need SecureXL turned off.)
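The one-way symptom can be spotted mechanically in a saved text capture. The sketch below assumes the usual tcpdump text output for ICMP (lines containing `IP <src> > <dst>: ICMP echo request` or `echo reply`); it does not cover cppcap's own output format, and the addresses in the sample are invented.

```python
import re

# tcpdump prints ICMP echo traffic as "... IP src > dst: ICMP echo request, ..."
ICMP_LINE = re.compile(r"IP (\S+) > (\S+): ICMP echo (request|reply)")


def icmp_directions(capture_text: str) -> dict:
    """Count echo requests and echo replies seen in tcpdump text output."""
    seen = {"request": 0, "reply": 0}
    for match in ICMP_LINE.finditer(capture_text):
        seen[match.group(3)] += 1
    return seen


def is_one_way(capture_text: str) -> bool:
    """True if requests were captured but no replies at all."""
    seen = icmp_directions(capture_text)
    return seen["request"] > 0 and seen["reply"] == 0


# Invented sample resembling the symptom: requests only, no replies.
sample_capture = (
    "12:00:01.000001 IP 10.1.1.10 > 10.2.2.20: ICMP echo request, id 7, seq 1, length 64\n"
    "12:00:02.000003 IP 10.1.1.10 > 10.2.2.20: ICMP echo request, id 7, seq 2, length 64\n"
)
print(icmp_directions(sample_capture))  # {'request': 2, 'reply': 0}
print(is_one_way(sample_capture))       # True
```

If the ping itself succeeds while a capture like this shows requests only, the capture point is missing the return traffic rather than the firewall dropping it.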

I think Check Point needs to slow down with their releases and really focus on reducing the bugs. This will help all of us, including TAC!


The inability to see two-way traffic with tcpdump/cppcap in VSX may be due to a little-known SecureXL feature known as "warp jump". This was mentioned in my Max Capture video series and is also mentioned here:

sk167462: Tcpdump / CPpcap do not show incoming packets on Virtual Switch's Wrp interface

fw monitor -F should be able to successfully capture this traffic.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course



Thanks Tim.

I do have a TAC case raised for this and have provided examples for both tcpdump and cppcap, but it does sound like the SK you mentioned.


whilst you're here @Timothy_Hall 🙂

We had a very bizarre case of NFSD-TCP (2049) connections not being accelerated: the traffic went partially via F2F and partially via PXL. After adding a "plain" port service instead of the CP pre-defined one, acceleration kicked in. Otherwise, 3 out of 20 FWK workers were running flat out as 7 servers generated 5 Gbps to a single destination server. I have never seen this before with port 2049, and R&D are equally puzzled. We actually rolled back to R80.30 as we suspected the upgrade was the root cause, but it turned out that some application changes were made the same night as our upgrade, and they started a totally new flow to port 2049.


Hmm, any chance anti-bot and/or anti-virus were enabled? While this issue was fixed long ago, it sounds suspiciously similar to this:

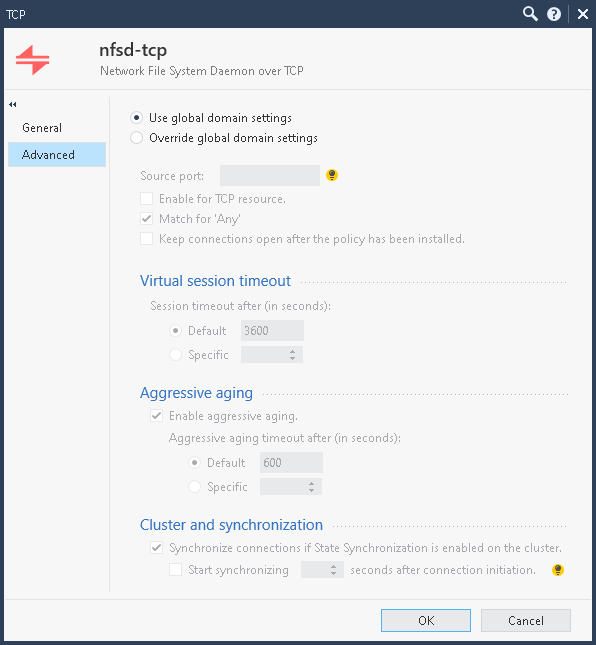

Also on the original nfsd-tcp service you were using, had any settings been overridden on the Advanced screen? I've seen that fully or partially kill acceleration whereas setting it back to "Use default settings" fixes it. You may have been able to achieve the same effect by creating a new TCP/2049 service with the default settings on the Advanced screen, or perhaps the original built-in nfsd-tcp service has some kind of special inspection hooks in it (even though the protocol type is "None") and you avoided those with the new TCP/2049 service.



We only use FW and IA blades on this VS. Nothing fancy. NFSD-TCP seems original and untouched as far as I can see 🙂


©1994-2025 Check Point Software Technologies Ltd. All rights reserved.
