Check Point VSX Cluster - Virtual Systems down after upgrade from R80.20 to R81.10

We recently upgraded a VSX VSLS cluster from R80.20 to R81.10 HF55, and the VSs are now reported as DOWN because of the FWD pnote (on the Active VSX cluster member, FWD for the VSs is in the Terminated state, T).

Only one VS is in Active/Standby state while the rest are Active/Down.

Has anyone faced such an issue recently?

See sample output.

Cluster name: CP-Cluster

Virtual Devices Status on each Cluster Member
=============================================

 ID    | Weight| CP-G      | CP-G
       |       | W-1       | W-2
       |       | [local]   |
-------+-------+-----------+-----------
 2     | 10    | DOWN      | ACTIVE
 6     | 10    | ACTIVE(!) | DOWN
 7     | 10    | DOWN      | ACTIVE(!)
 8     | 10    | DOWN      | ACTIVE(!)
 9     | 10    | DOWN      | ACTIVE(!)
 10    | 10    | STANDBY   | ACTIVE
---------------+-----------+-----------
 Active        | 1         | 5
 Weight        | 10        | 50
 Weight (%)    | 16        | 84

Legend: Init - Initializing, Active! - Active Attention
        Down! - ClusterXL Inactive or Virtual System is Down
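As a rough aid for reading the status table above, the following Python sketch flags every Virtual System that does not have one ACTIVE and one STANDBY member. The row layout (ID | Weight | member 1 | member 2) is taken from the sample output only; the function name `unhealthy_vs` and the embedded sample rows are illustrative assumptions, not a Check Point tool.

```python
import re

# Sample rows copied from the output above: ID | Weight | member 1 | member 2
SAMPLE = """
2 | 10 | DOWN | ACTIVE
6 | 10 | ACTIVE(!) | DOWN
7 | 10 | DOWN | ACTIVE(!)
8 | 10 | DOWN | ACTIVE(!)
9 | 10 | DOWN | ACTIVE(!)
10 | 10 | STANDBY | ACTIVE
"""

# A data row starts with a numeric VS ID and has exactly four columns.
ROW = re.compile(r"^\s*(\d+)\s*\|\s*(\d+)\s*\|\s*(\S+)\s*\|\s*(\S+)\s*$")


def unhealthy_vs(text):
    """Return {vsid: (state_member1, state_member2)} for every VS that is
    not in a clean ACTIVE/STANDBY pair."""
    bad = {}
    for line in text.splitlines():
        match = ROW.match(line)
        if match is None:
            continue  # headers, separators and summary rows are skipped
        vsid, _weight, state1, state2 = match.groups()
        if sorted((state1, state2)) != ["ACTIVE", "STANDBY"]:
            bad[int(vsid)] = (state1, state2)
    return bad


if __name__ == "__main__":
    for vsid, states in sorted(unhealthy_vs(SAMPLE).items()):
        print(f"VS {vsid}: {states[0]} / {states[1]}")
```

With the sample rows, only VS 10 counts as healthy; every other VS is reported.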


33 Replies


Hopefully you are engaged with TAC on this issue. What has already been attempted in terms of troubleshooting and recovery?

What process was followed to complete the upgrade, and are both members upgraded at this point?

CCSM R77/R80/ELITE


- TAC was involved, but not much was done apart from collecting cpinfo.

- The MVC upgrade option was used.

- Both gateways had been upgraded, but we ended up rolling one gateway back to R80.20 using a snapshot.

- We attempted to roll back the 2nd gateway using a snapshot as well, and it ended up corrupting the disks, leading to a boot loop. We did a fresh install of R81.10 and reconfigured VSX.

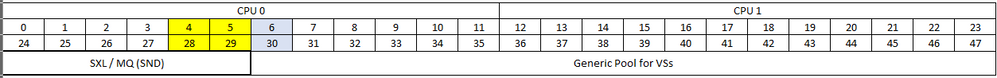

- The reinstalled R81.10 gateway has intermittent performance issues: traffic appears to be dropped or lost (TCP retransmissions are seen in Wireshark on the client side, but no drops are seen in zdebug + drop). When we fail over to the R80.20 gateway, all is well. We suspect that the default CoreXL and MQ settings (see below) might be causing the issues.

- Action plan:

  - Increase the number of SNDs to 12: fw ctl affinity -s -d -fwkall 36

  - Disable CoreXL on VS0 using cpconfig and assign the VS0 instances manually: fw ctl affinity -s -d -vsid 0 -cpu 6 30

  - Change MQ on Mgmt and Sync (bond0)?: mq_mng -s manual -i Mgmt -c 5 29 and mq_mng -s manual -i eth2-01 eth2-01 -c 4 28

  - If performance improves on GW2, reimage GW1 and reconfigure it with the same settings as above.

@Chris_Atkinson what is the effect of enabling dynamic_balancing after making the above changes?

Is it better to leave the defaults and enable dynamic_balancing?

--- Current CoreXL affinity & MQ settings ----------------------------------

[Expert@CP-GW2:0]# fw ctl affinity -l -a

VS_0 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_1 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_2 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_3 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_4 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_6 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_7 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_8 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_9 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

VS_10 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47

Interface Mgmt: has multi queue enabled

Interface eth2-01: has multi queue enabled

Interface eth2-02: has multi queue enabled

Interface eth4-03: has multi queue enabled

Interface eth4-04: has multi queue enabled

Interface eth5-01: has multi queue enabled

Interface eth5-02: has multi queue enabled

Interface eth1-01: has multi queue enabled

Interface eth1-02: has multi queue enabled

[Expert@CP-GW2:0]#

[Expert@CP-GW2:0]# cpmq get -vv

Note: 'cpmq' is deprecated and no longer supported. For multiqueue management, please use 'mq_mng'

Current multiqueue status:

Total 48 cores. Available for MQ 4 cores

i/f       driver      driver mode   state   mode (queues)   cores
                                            actual/avail
------------------------------------------------------------------------------------------------
Mgmt      igb         Kernel        Up      Auto (2/2)      0,24
eth1-01   ixgbe       Kernel        Up      Auto (4/4)      0,24,1,25
eth1-02   ixgbe       Kernel        Up      Auto (4/4)      0,24,1,25
eth2-01   igb         Kernel        Up      Auto (4/4)      0,24,1,25
eth2-02   igb         Kernel        Up      Auto (4/4)      0,24,1,25
eth4-03   ixgbe       Kernel        Up      Auto (4/4)      0,24,1,25
eth4-04   ixgbe       Kernel        Up      Auto (4/4)      0,24,1,25
eth5-01   mlx5_core   Kernel        Up      Auto (4/4)      0,24,1,25
eth5-02   mlx5_core   Kernel        Up      Auto (4/4)      0,24,1,25
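To make the core arithmetic behind the action plan explicit, here is a small Python sketch that derives the cores left for SNDs from one of the fwk affinity lines above. It assumes, as a simplification, that every core not listed for an fwk is an SND/multi-queue core; the function names are illustrative and the script only works on text copied from the output, it does not talk to the gateway.

```python
TOTAL_CORES = 48  # "Total 48 cores" in the cpmq output above

# CPU list of one "VS_n fwk:" line from "fw ctl affinity -l -a" above
FWK_LINE = (
    "VS_0 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 "
    "22 23 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47"
)


def fwk_cores(line):
    """Extract the CPU IDs listed after 'CPU' in an affinity line."""
    _, _, cpus = line.partition("CPU")
    return sorted(int(token) for token in cpus.split())


def snd_cores(total, fwk):
    """Assumption: cores not assigned to any fwk are SND/MQ cores."""
    return sorted(set(range(total)) - set(fwk))


if __name__ == "__main__":
    fwk = fwk_cores(FWK_LINE)
    snd = snd_cores(TOTAL_CORES, fwk)
    print(f"fwk cores: {len(fwk)}, SND cores: {len(snd)} -> {snd}")
    # Planned change from the action plan: -fwkall 36
    print(f"after -fwkall 36: {TOTAL_CORES - 36} cores left for SNDs")
```

The current split works out to 44 fwk cores and 4 SND cores (0, 1, 24, 25), which matches "Available for MQ 4 cores" and the queue-to-core mapping in the cpmq output. Moving to 36 fwk cores would leave 12 for SNDs.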


A prerequisite for starting Dynamic Balancing is having all FWKs set to the default FWK CPUs.

@AmitShmuel talks about it here:

CCSM R77/R80/ELITE


I will assume that default settings means the out-of-the-box configuration, with CoreXL enabled on VS0 with 40 instances. From there, dynamic balancing is expected to do its magic.

Also, after further analysis using fw monitor, we suspect that our Mellanox 2x40G NIC cards might be having issues while bonded. We will also try a firmware upgrade of the cards after testing the CoreXL/MQ settings.


Update

It seems the 40G NICs required a firmware update after moving to R81.10. Traffic was being dropped on the 40G bond interface, causing TCP SYN retransmissions and slow loading of web applications. After upgrading the firmware all was good, but we have run into a new issue, as described below by TAC.

" It seems that you have experienced a segmentation fault that is recently common in the later takes of R81.10 - this segmentation fault is usually causing an FWK crash and initiates a failover.

We do not have an official SK about it as it is in an internal R&D investigation.

Please install the hotfix I have provided you with, as segmentation faults could harm the machine and it is very important to act quickly with this matter."

One of our VSs crashed and failed over to the standby VS (still running on R80.20).


@KostasGR yes, we tried a reboot.


Are you noticing high CPU load for a specific VS?

I ran into a similar issue after a VSX upgrade from R80.20 to R81.10, but in HA mode.

Our standby member was flapping between Standby and DOWN due to a missing Sync interface, and the virtual router VS was missing its interfaces.

In addition, the virtual router VS was consuming up to 200% CPU, and a lot of interface DRPs and OVRs were visible overall.

We started tuning SNDs and FWKs, but no change resolved the issue.

Thanks to the last support engineer, who found a similar case, we were able to pinpoint it to priority queue, and after disabling it the cluster became stable.

In your case it would be worth checking whether spike detective is printing errors regarding the fwkX_hp process.

This one is responsible for the high priority queue within priority queue, and in our case it clogged the affected VS.

BR,

Markus


@Markus_Genser could you share the commands used to identify the issue and how to disable the priority queues?


Sure,

Spike detective reports to /var/log/messages.

We got the following messages over and over again, especially during peak times with a lot of traffic passing through. Note the fwk1_hp (according to TAC, this is the high priority queue):

Jul 20 15:28:51 2022 <GWNAME> spike_detective: spike info: type: thread, thread id: 3383, thread name: fwk1_hp, start time: 20/07/22 15:28:26, spike duration (sec): 24, initial cpu usage: 100, average cpu usage: 100, perf taken: 0

Jul 20 15:28:57 2022 <GWNAME> spike_detective: spike info: type: cpu, cpu core: 5, top consumer: fwk1_hp, start time: 20/07/22 15:28:26, spike duration (sec): 30, initial cpu usage: 84, average cpu usage: 79, perf taken: 1

Jul 20 15:29:03 2022 <GWNAME> spike_detective: spike info: type: cpu, cpu core: 21, top consumer: fwk1_hp, start time: 20/07/22 15:28:56, spike duration (sec): 6, initial cpu usage: 85, average cpu usage: 85, perf taken: 0

Jul 20 15:29:03 2022 <GWNAME> spike_detective: spike info: type: thread, thread id: 3383, thread name: fwk1_hp, start time: 20/07/22 15:28:56, spike duration (sec): 6, initial cpu usage: 100, average cpu usage: 100, perf taken: 0

For the virtual router, which normally uses 5-10% CPU, this is not normal.

The rest was good old detective work with top to identify the VS causing the issue (virtual router in our case).
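For anyone who wants to scan /var/log/messages for this pattern, the following Python sketch parses spike_detective lines and reports, per thread or top consumer, how many spikes were logged and how long the longest one lasted. The field names are taken only from the log lines quoted above; the helper names `parse_spike` and `summarize` are made up for illustration, and other versions may log a different format.

```python
# Two of the log lines quoted above, used as sample input.
SAMPLE = [
    "Jul 20 15:28:51 2022 <GWNAME> spike_detective: spike info: type: thread, "
    "thread id: 3383, thread name: fwk1_hp, start time: 20/07/22 15:28:26, "
    "spike duration (sec): 24, initial cpu usage: 100, average cpu usage: 100, "
    "perf taken: 0",
    "Jul 20 15:28:57 2022 <GWNAME> spike_detective: spike info: type: cpu, "
    "cpu core: 5, top consumer: fwk1_hp, start time: 20/07/22 15:28:26, "
    "spike duration (sec): 30, initial cpu usage: 84, average cpu usage: 79, "
    "perf taken: 1",
]

MARKER = "spike info: "


def parse_spike(line):
    """Turn the 'key: value, key: value' tail of a spike line into a dict.
    Returns None for lines that are not spike_detective reports."""
    if MARKER not in line:
        return None
    fields = {}
    for part in line.split(MARKER, 1)[1].split(", "):
        key, _, value = part.partition(": ")
        fields[key.strip()] = value.strip()
    return fields


def summarize(lines):
    """Return {name: (spike_count, longest_duration_sec)} per consumer."""
    summary = {}
    for line in lines:
        info = parse_spike(line)
        if info is None:
            continue
        name = info.get("thread name") or info.get("top consumer", "unknown")
        duration = int(info["spike duration (sec)"])
        count, longest = summary.get(name, (0, 0))
        summary[name] = (count + 1, max(longest, duration))
    return summary


if __name__ == "__main__":
    for name, (count, longest) in summarize(SAMPLE).items():
        print(f"{name}: {count} spikes, longest {longest}s")
```

A thread such as fwk1_hp that keeps showing up at the top of this summary during peak hours is the kind of hint that pointed to priority queue in our case.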

In the VS, check $FWDIR/log/fwk.elg to verify that the cluster status is caused by missing CCP packets. Look for messages like this:

State change: ACTIVE -> ACTIVE(!) | Reason: Interface Sync is down (Cluster Control Protocol packets are not received)

We saw these even though the interfaces were up and we could ping the neighbour. We had enough SNDs to handle all the traffic, and the rest of the VSs were consuming little CPU, so most of the cores were idling.

The TAC engineer used one additional command in the remote session, which I failed to note. It showed the CPU hits of the various kernel modules in percent, with fwk1_hp on top. Based on all that, he suggested turning off priority queue.

To deactivate priority queue:

- fw ctl multik prioq

- Select 0 to disable the feature.

- Reboot the device.

BR,

Markus


@Markus_Genser thanks.

My take is that merging the R80.X SP line (Maestro etc.) with the regular R80.X line has brought about many issues on the R81.X platform, just like the move from SecurePlatform to Gaia OS a few years ago. Running R81.X in production is like walking on eggshells.


Dear Edward

I am sorry to hear that you had a bad experience with R81.10. We are investigating why the VSs went down. As for the network interface firmware, we are moving towards auto-updates in future Jumbo and major versions.

In general, we get great feedback about R81.10 quality from our partners and customers, and we highly recommend upgrading to this version, not only for management and regular gateways but also for Maestro environments. In fact, R81.10 is now the most widely used Maestro version, with a large number of Maestro customers having successfully upgraded to it.

Thank You


Today we did a fresh-install upgrade of our 2nd gateway and hit another problem.

The VSs on GW2 are complaining that the cluster interface is down, so CCP packets are not being received. This causes the VSs to fail over in some instances. We are sure the bond interface is fine, as everything was working well in the multi-version cluster (R80.20 + R81.10). This only started with the final upgrade of the 2nd GW.

The sync bond interface within the VS is UP in one direction only, as seen in the output below.

[Expert@CP-GW2:6]# cphaprob -a if

vsid 6:

------

CCP mode: Manual (Unicast)

Required interfaces: 8

Required secured interfaces: 1

Interface Name: Status:

bond0 (S-LS) Inbound: UP

Outbound: DOWN (1245.8 secs)

[Expert@CP-GW2:6]# cphaprob stat

Active PNOTEs: LPRB, IAC

Last member state change event:

Event Code: CLUS-110305

State change: ACTIVE -> ACTIVE(!)

Reason for state change: Interface bond0 is down (Cluster Control Protocol packets are not received)
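The one-way state above can be spotted mechanically. The Python sketch below reads pasted "cphaprob -a if" text and lists interfaces whose Inbound and Outbound states differ. It only understands the Inbound/Outbound line pair exactly as quoted in this post; the function name `one_way_interfaces` is hypothetical, and other interface lines in real output are simply ignored.

```python
# Interface section copied from the "cphaprob -a if" output above.
SAMPLE = """\
Required interfaces: 8
Required secured interfaces: 1
Interface Name: Status:
bond0 (S-LS) Inbound: UP
Outbound: DOWN (1245.8 secs)
"""


def one_way_interfaces(text):
    """Return [(interface, inbound_state, outbound_state)] for interfaces
    whose inbound and outbound CCP states differ."""
    result = []
    current = None  # (interface name, inbound state) awaiting its Outbound line
    for raw in text.splitlines():
        line = raw.strip()
        if "Inbound:" in line:
            name_part, _, state_part = line.partition("Inbound:")
            names, states = name_part.split(), state_part.split()
            current = (names[0], states[0]) if names and states else None
        elif line.startswith("Outbound:") and current is not None:
            tokens = line.split()
            if len(tokens) > 1 and tokens[1] != current[1]:
                result.append((current[0], current[1], tokens[1]))
            current = None
    return result


if __name__ == "__main__":
    for name, inbound, outbound in one_way_interfaces(SAMPLE):
        print(f"{name}: inbound {inbound}, outbound {outbound}")
```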

TAC have tried to analyze the interface packet details, to no avail. We will try a full reboot later on.

Given the number of issues being faced, my advice is for customers to stay on R80.40 for VSX. I believe that version is fairly mature and has been out for a long time.


May I suggest that a customer success engineer from the R&D organization work with you to review the environment and help resolve the R81.10 issues that you're having?

Thanks

Gera


I will be glad to work with someone in R&D. What would you need from me?


@Edward_Waithaka please forward your contact details to @Gera_Dorfman via a personal message, or send them to me at vloukine@checkpoint.com, and I will pass it on to Gera and his team.


Done


The CCP probing issue seems to clear on its own sometimes and then comes back later.

[Expert@CP-GW2:2]# cphaprob stat

Cluster Mode: Virtual System Load Sharing (Primary Up)

ID Unique Address Assigned Load State Name

1 10.10.100.169 0% STANDBY CP-GW-1

2 (local) 10.10.100.170 100% ACTIVE CP-GW-2

Active PNOTEs: None

Last member state change event:

Event Code: CLUS-114904

State change: ACTIVE(!) -> ACTIVE

Reason for state change: Reason for ACTIVE! alert has been resolved

Event time: Wed Jul 27 09:43:58 2022

Last cluster failover event:

Transition to new ACTIVE: Member 1 -> Member 2

Reason: Available on member 1

Event time: Thu Jul 21 00:26:52 2022

Cluster failover count:

Failover counter: 1

Time of counter reset: Thu Jul 21 00:17:24 2022 (reboot)

[Expert@CP-GW2:2]# cat $FWDIR/log/fwk.elg | grep '27 Jul 9:43:'

[27 Jul 9:43:58][fw4_0];[vs_2];CLUS-120207-2: Local probing has started on interface: bond0

[27 Jul 9:43:58][fw4_0];[vs_2];CLUS-120207-2: Local probing has started on interface: bond2.xx

[27 Jul 9:43:58][fw4_0];[vs_2];CLUS-120207-2: Local probing has stopped on interface: bond2.xx

[27 Jul 9:43:58][fw4_0];[vs_2];CLUS-120207-2: Local Probing PNOTE OFF

[27 Jul 9:43:58][fw4_0];[vs_2];CLUS-114904-2: State change: ACTIVE(!) -> ACTIVE | Reason: Reason for ACTIVE! alert has been resolved
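To track how often the state flaps, the state-change entries can be pulled out of fwk.elg with a short script. The Python sketch below is built only on the line format quoted above (timestamp, instance, VS, CLUS code, then "State change: old -> new | Reason: ..."); the regular expression and the name `state_changes` are assumptions for illustration, and other releases may format these lines differently.

```python
import re

# Two lines copied from the fwk.elg excerpt above.
SAMPLE = [
    "[27 Jul 9:43:58][fw4_0];[vs_2];CLUS-120207-2: Local Probing PNOTE OFF",
    "[27 Jul 9:43:58][fw4_0];[vs_2];CLUS-114904-2: State change: "
    "ACTIVE(!) -> ACTIVE | Reason: Reason for ACTIVE! alert has been resolved",
]

STATE_CHANGE = re.compile(
    r"^\[(?P<time>[^\]]+)\]\[(?P<instance>[^\]]+)\];\[(?P<vs>[^\]]+)\];"
    r"(?P<code>CLUS-[\d-]+): State change: "
    r"(?P<old>\S+) -> (?P<new>\S+) \| Reason: (?P<reason>.*)$"
)


def state_changes(lines):
    """Return one dict per state-change line; other lines are ignored."""
    changes = []
    for line in lines:
        match = STATE_CHANGE.match(line.strip())
        if match is not None:
            changes.append(match.groupdict())
    return changes


if __name__ == "__main__":
    for change in state_changes(SAMPLE):
        print(f"{change['time']} {change['vs']}: "
              f"{change['old']} -> {change['new']} ({change['reason']})")
```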


Hi,

We saw this behaviour in our case as well, depending on the load.

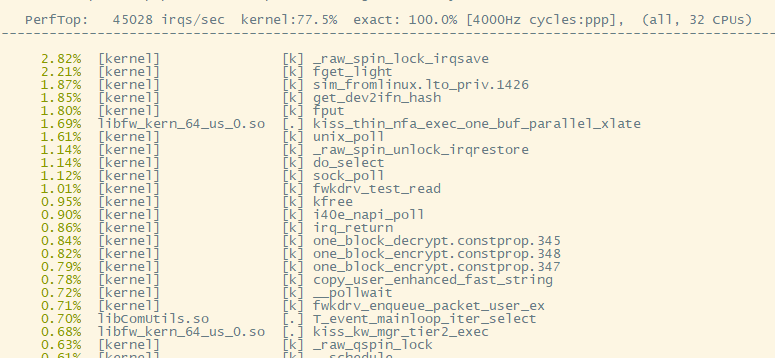

In the meantime I was able to get the command used by the TAC engineer: it was "perf top" (in VS0).

At the top it was visible that fwk1 was consuming >100%. (The screenshot is just an example.)

We also encountered the described ClusterXL state after finishing vsx_util reconfigure; that was solved with a reboot of the active member.

I would suggest you take Gera's offer of a remote session with R&D.

BR,

Markus


Noted. We have planned a reboot for later tonight while we wait to hear more from R&D, hopefully.


We managed to link up with the R&D team and they offered great assistance.

It was immediately identified that we had hit an MVC bug. We disabled MVC now that all gateways were on R81.10, pushed policies, and all was good.

MVC was causing an issue with CCP unicast communication. An R81.10 GW with MVC enabled (Auto unicast) and an R81.10 GW without MVC (Full manual unicast) run different unicast protocols for the CCP communication.

MVC enabled GW (1st GW to be upgraded)

---------------------------------------

[Expert@CP-GW2:0]# fw ctl get int fwha_default_unicast_mode

fwha_default_unicast_mode = 0

[Expert@CP-GW2:0]#

MVC disabled GW (ideal state)

------------------------------

[Expert@CP-GW1:0]# fw ctl get int fwha_default_unicast_mode

fwha_default_unicast_mode = 1

[Expert@CP-GW1:0]#

The MVC issue caused two things:

1) Perceived one-way communication in the CCP protocol in some cases, which made the sync interface appear to be down.

2) The standby VS0 gateway was unable to communicate externally, e.g. for IPS updates, TACACS, and online Anti-Bot URL checks (RAD process). This can lead to other issues, especially with the AB blades, if you don't have fail-open.
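The two fwha_default_unicast_mode readings above can be compared mechanically. The following Python sketch takes the pasted output of "fw ctl get int fwha_default_unicast_mode" from each member and reports whether the values differ. The member names and values are the ones from this post; the helper names are hypothetical, and the script makes no claim about what each value means beyond what is described above.

```python
import re

# Output of "fw ctl get int fwha_default_unicast_mode" per member, from above.
OUTPUTS = {
    "CP-GW2": "fwha_default_unicast_mode = 0",
    "CP-GW1": "fwha_default_unicast_mode = 1",
}


def kernel_int(output, name):
    """Extract the integer from a 'name = value' line in pasted output."""
    pattern = rf"^{re.escape(name)}\s*=\s*(-?\d+)\s*$"
    match = re.search(pattern, output, re.MULTILINE)
    if match is None:
        raise ValueError(f"{name} not found in output")
    return int(match.group(1))


def mismatched(outputs, name):
    """Return {member: value} if members disagree, else an empty dict."""
    values = {member: kernel_int(text, name) for member, text in outputs.items()}
    return values if len(set(values.values())) > 1 else {}


if __name__ == "__main__":
    diff = mismatched(OUTPUTS, "fwha_default_unicast_mode")
    if diff:
        print(f"Members disagree on fwha_default_unicast_mode: {diff}")
    else:
        print("All members report the same fwha_default_unicast_mode")
```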

And yes, if we had followed the upgrade process to the end (disable MVC and push all policies), we wouldn't have been affected much by the bug. We decided to take precautions and figure out why things were not working as expected before completing the process.

R&D will be releasing a GA JHF soon with our bugs fixed, including the private HF we got for the segmentation fault. I don't think we are out of the woods yet, but things are better so far, fingers crossed.

Lessons learnt so far:

- Prefer a fresh-install upgrade over an in-place upgrade.

- Complete the upgrade process to the end before you start troubleshooting, but ensure you have a fallback option.

- Snapshots can fail. Always have other backup options on the table.

- Review your crash dump files for any crashes that may arise during the upgrade process. Open a TAC case and give them the dumps for review.

- Sometimes the latest and greatest is not the greatest, unless you are willing to be the guinea pig.


I think these are actually listed in the known issues list scheduled for the next Jumbo, but at least your issue is resolved, which is great!


Did you push policies on them as well, after the upgrade?


Yes, we pushed several times.


We just upgraded from R80.40 to R81.10 with JHFA66 (in-place upgrade).

We had an odd issue with one node where some wrp interface just appeared in VS0, and that caused a problem with HA.

We ended up removing VSX from the GW and then running vsx_util reconfigure, which resolved the problem.

My main issues since the upgrade are that multicast has stopped working and that, as we have determined, 'fw monitor' causes an fwk process crash (yes, TAC are engaged), which basically causes an outage.

What other surprises are waiting to be discovered? Only time will tell.

B.t.w., I am using dynamic balancing and so far I am not seeing an issue with it; it just works.


All the best. We just hit another issue today!


We had another outage today, so since upgrading to R81.10 JHFA66 we have experienced outages every day.

We contacted TAC and requested that R&D be involved immediately.

This is pretty bad, Check Point, considering it's the recommended release. TAC are investigating, but clearly they need some time to work through the crash logs.

It would be good if I could get some top boys at Check Point to be all over my case with the TAC engineer (who is great, b.t.w.!).


@genisis__ try a fresh install upgrade maybe.


Looking like a memory leak bug.
