CPUSE will fail to install new Jumbo on restored gateway

Just ran into an interesting scenario: CPUSE failed to install take 203 on the very last gateway (nearly 40 were updated without any issues). I won't be creating a TAC case, out of pure laziness and because there is too much to do as it is.

The DA agent version is 1677, so all good there, and the gateway had take 154 installed before the attempt to upgrade to 203.

It turned out that this particular box had recently been fully rebuilt from the factory image due to an SSD failure (the second SSD dying on 5900 appliances, not a good trend). So we went R77.30 > R80.10 > take 154 > backup restore. All went great and the box was running like a charm.

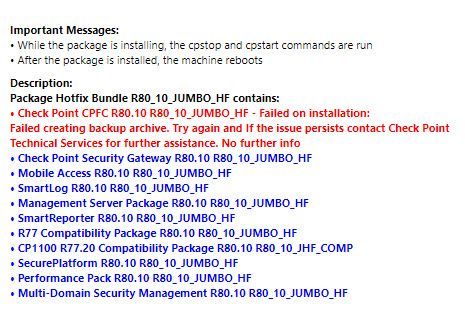

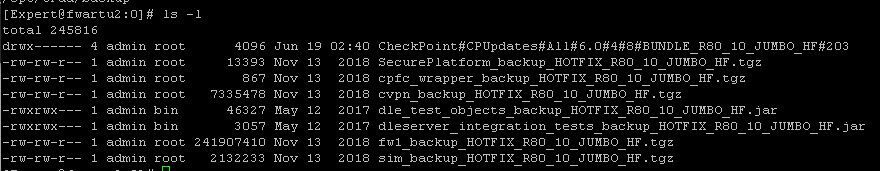

But when I attempted to install take 203, it failed at a very early stage with the following error:

Digging into the more detailed logs, I found that CPUSE was looking for an older file that was not there (/opt/CPInstLog/install_cpfc_wrapper_HOTFIX_R80_10_JUMBO_HF.log).

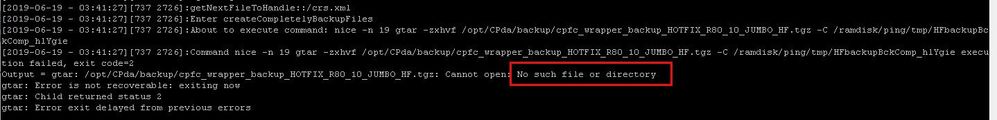

So I compared the contents of the deployment agent backup directory, /opt/CPda/backup/, on both cluster members.

This was the restored node

and this was the secondary, which was in its "original" state

OK, a bunch of archives were missing.
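The comparison described above can be sketched in a few lines of Python. This is only an illustration, not a Check Point tool: it assumes you have already collected the file listing of /opt/CPda/backup/ from each node (for example by saving the output of `ls`), and the archive names below are hypothetical placeholders.

```python
def missing_archives(reference_listing, restored_listing):
    """Return names present on the reference node but absent on the restored one."""
    reference = {name.strip() for name in reference_listing if name.strip()}
    restored = {name.strip() for name in restored_listing if name.strip()}
    return sorted(reference - restored)


# Hypothetical file names, for illustration only.
original_node = ["take_100.tgz", "take_121.tgz", "take_154.tgz"]
restored_node = ["take_154.tgz"]

for name in missing_archives(original_node, restored_node):
    print("missing on restored node:", name)
```

A set difference is enough here because only the presence of each archive matters, not its order in the listing.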

Then it clicked: when we restored the box from backup, we did not install all the Jumbo HFs that had originally been installed over time, but went straight to take 154, the latest one, which was running on the node when the backup was taken.

So the quick fix was simply to copy all the missing archives from /opt/CPda/backup on the "original" node to the restored one, after which the take 203 installation succeeded.

It might be a known issue, but there is definitely room for improvement in CPUSE for cases where you restore from a backup instead of a snapshot.
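For anyone wanting to script that copy step, here is a minimal sketch. It assumes the archives from the "original" node have already been transferred to a staging directory that is reachable locally. The function name and the staging path in the usage note are my own placeholders, and this is not an official Check Point procedure.

```python
import shutil
from pathlib import Path


def copy_missing(source_dir, target_dir):
    """Copy files that exist in source_dir but not in target_dir; never overwrite."""
    source, target = Path(source_dir), Path(target_dir)
    copied = []
    for item in sorted(source.iterdir()):
        destination = target / item.name
        if item.is_file() and not destination.exists():
            shutil.copy2(item, destination)  # copy2 also preserves timestamps
            copied.append(item.name)
    return copied
```

Usage would look like `copy_missing("/var/tmp/original_backup", "/opt/CPda/backup")`, where `/var/tmp/original_backup` is a hypothetical staging copy of the other member's directory. Existing files on the restored node are left untouched, so running it twice is harmless.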

14 Replies


Just discussed this during the User Group meeting in Tallinn at the beginning of this week, and before that, a couple of months ago, in Zurich.

The CPUSE repository is in the /var/log partition. This part of your filesystem is backed up neither by the snapshot nor by the backup tool. When restoring from one of those, you are likely to lose at least some of the downloaded packages.

It looks to me that in your case CPUSE fails after the backup is restored because some of those packages are missing.


Valeri - the backup restore succeeds, but the next time we try to install a JHF on such a box, it will fail. You must have misunderstood the problem.


No, I did not, but I fixed my previous comment to be more correct. It will fail because some package info is missing. I explained why.


LOL, @Kaspars_Zibarts, you basically explained it yourself, I should read till the end before answering.

Anyhow, this is not a bug, this is expected behaviour, but not many people know that.


I would disagree on that - so you are saying that if you do disaster recovery using OS re-install and then backup restore, you won't be able to install new JHFs?


This is the CPUSE mechanism, which depends on backups in a directory/partition that is not included in snapshots or backups. The new HF-over-HF method may help a lot here, as an uninstall is no longer needed when installing a higher version.

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist


You are forced to do an OS reinstall, HF / JT reinstall and restore...

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist


Yeah, but you don't want to re-install every single JHF that existed on the gateway before it crashed, as there can be rather many over 1-2 years. So you want to install the latest one that was there when the backup was taken. And then CPUSE should take care of any "missing" old JHF info, which is irrelevant anyway. I understand that it can be tricky with custom HFs, but not with generic Jumbos - they should be handled by CPUSE without jumping through hoops.

I checked sk91400 and sk108902 but cannot find any reference saying that when you start a restore from a fresh OS install, you must re-install all the old JHFs in the exact order in which they were previously installed on that GW.



@Kaspars_Zibarts I hate to say that, but I think you are wrong here.

You have mentioned https://supportcenter.checkpoint.com/supportcenter/portal?eventSubmit_doGoviewsolutiondetails=&solut...

It says in the limitation section: "Restore is only allowed using the same Gaia version on the source and target computers"

That means that before restoring the backup, you need to rebuild the exact combination of binaries, i.e. vanilla plus HFAs. If you do not do that, unexpected results are bound to happen.

Another case, https://supportcenter.checkpoint.com/supportcenter/portal?eventSubmit_doGoviewsolutiondetails=&solut..., describes the optimal combination of snapshots and backups, which would allow you to avoid most of the hassle when restoring GWs.


I think there's a difference here between expected behavior (which you document) and optimal behavior 🙂


As said earlier, I just think there's room for improvement. 🙂 In our case we had only local snapshots that died when SSD failed so the only option was fresh install along with JHF reinstallation and backup restore. You always want a system that's as simple as possible to restore in critical conditions. But we can park the case now.


@Kaspars_Zibarts, I agree with you, there is room to improve the built-in disaster recovery tools.

On the other hand 🙂 Just yesterday, during the CheckMates Live event in Athens, I was drilling into the guys that any critical backup should be taken off the box, snapshots included, specifically to cover cases when an SSD/HDD fails. It is not straightforward in the case of snapshots, I know.


Seems like something we can improve upon. @Tsahi_Etziony


We had the same issue on an MDM 80.20 restored from backup. TAC had to modify cpregistry (after a month) so that we could install the latest JHF. I guess there is indeed room for improvement here.
