Hello there,

I've been researching this on these forums as well as on general networking forums, and I'm having a hard time finding any good answers.

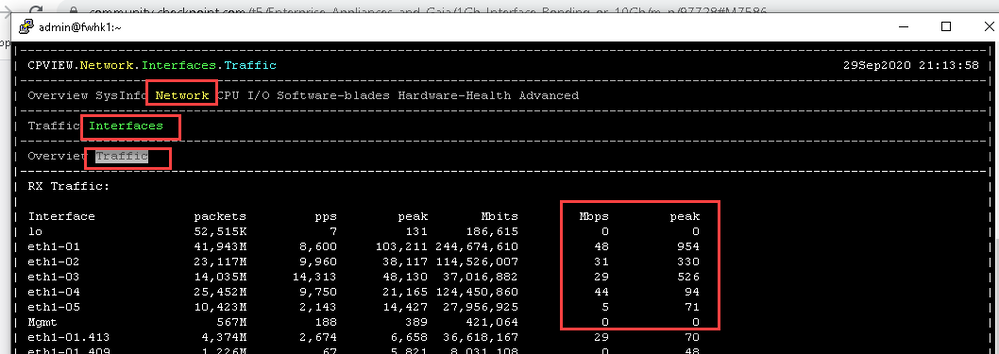

We're looking into replacing our aging 4600 cluster with something like a 6500/6600. Partly that's because the 4600 is no longer adequate for our needs, but also because of the stability issues we've had. We've had many cases due to inexplicable failures that result in the entire cluster going down, with failover not saving us. It seems to be due to either the 1Gb Ethernet interfaces being overloaded or the CPU not handling the traffic from the NICs fast enough, causing many TX timeouts, hardware unit hangs, and interface resets.

One of the problems is that we currently have a mishmash of interfaces: some carry a single regular network, while others have multiple VLANs set up. Our WAN interface, for example, has 4 VLANs and fails often; our sync interface is a direct link between the firewalls and fails often; and one of our LAN interfaces has no VLANs but also fails often.

I was hoping to avoid these issues on the new firewalls (even if the new hardware is all we need) by either bonding all of the internal interfaces, bonding several external interfaces, and hopefully even bonding the sync interface, OR using 2x10Gb interfaces per firewall while still using dedicated regular or bonded interfaces for sync. I can't seem to confirm the best way to do this.

For example, on a 6500 I'd probably look at one of these scenarios per unit in the cluster:

- 4x1Gb Ethernet Bond for Internal VLANs/4x1Gb Ethernet Bond for External VLANs/Single 1Gb Sync interface (since sync seems to be a specific port on these units; maybe I could also steal the Mgmt port to bond sync)

- 1x10Gb Fibre for Internal VLANs/1x10Gb Fibre for External VLANs/2x1Gb Ethernet Bond for Sync

- 2x10Gb Fibre Bond with both Internal and External VLANs/2x1Gb Ethernet Bond for Sync
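
To make the comparison a bit more concrete, here is a rough Python sketch of the three options above. It only adds up nominal line rates, so it says nothing about what the appliance itself can actually push. The `Bond` class and its field names are made up for illustration, and the single-flow figure assumes the usual hash-based distribution (e.g. 802.3ad), where any one flow stays on one member link.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bond:
    """A group of identical links; links=1 means a plain, unbonded interface."""
    links: int
    gbps_per_link: int

    @property
    def aggregate_gbps(self) -> int:
        # Nominal line rate summed over all member links.
        return self.links * self.gbps_per_link

    @property
    def max_single_flow_gbps(self) -> int:
        # Assumption: hash-based load sharing pins each flow to one member,
        # so a single flow never exceeds one link's speed.
        return self.gbps_per_link

    @property
    def survives_link_failure(self) -> bool:
        # Only a bond keeps passing traffic if one member link drops.
        return self.links > 1


# The three per-unit scenarios from the list above.
OPTIONS = {
    "option 1": {"internal": Bond(4, 1), "external": Bond(4, 1), "sync": Bond(1, 1)},
    "option 2": {"internal": Bond(1, 10), "external": Bond(1, 10), "sync": Bond(2, 1)},
    "option 3": {"internal+external": Bond(2, 10), "sync": Bond(2, 1)},
}


def summarize(options):
    """Flatten the options into (option, role, aggregate, single_flow, redundant) rows."""
    rows = []
    for name, roles in options.items():
        for role, bond in roles.items():
            rows.append((name, role, bond.aggregate_gbps,
                         bond.max_single_flow_gbps, bond.survives_link_failure))
    return rows


if __name__ == "__main__":
    for name, role, agg, flow, redundant in summarize(OPTIONS):
        print(f"{name:9} {role:18} aggregate={agg:>2}Gb "
              f"single-flow<={flow:>2}Gb link-redundant={redundant}")
```

Laid out this way, option 1 gives 4Gb per side but caps any single flow at 1Gb, option 2 gives 10Gb per side with no link redundancy on the data interfaces, and option 3 shares 20Gb between internal and external traffic on one bond.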

Ideally, 4 10Gb ports per firewall would be nice for separating internal/external VLANs as well as for redundancy, but then we'd require new Fibre modules on our core switches, and it frankly seems overkill. I'm leaning towards option 2 so that I don't have to deal with any potential issues that come with bonding protocols or Check Point's compatibility with them, and it also gives a lot of bandwidth. The only thing I've wondered about with the 10Gb options is how much buffer 10Gb interfaces get compared to multiple 1Gb Ethernet interfaces. I just want to know of any pitfalls with the 10Gb approach over 1Gb other than the obvious cost of buying expansion modules and dealing with SFP+ modules and Fibre patch cables.

I know a lot of this might not matter with the appliances we're looking at; I was just hoping someone could help confirm my rationale and let me know of any details I might need to consider.