
Brief introduction to SMB performance tuning

Hello mates,

This is an attempt to list the most important things I did to optimize performance on our SMB appliances. I have tested and experimented with many things over the three years I have had them, and I am now very satisfied with the end result. This is mostly valid for centrally managed devices, as you can’t do many of these tweaks on locally managed ones. I will try to keep it simple and not mention every possible command, customization and tweak, only the ones I find most significant.

Before I go into details, let me describe my setup as a reference:

I have a cluster of centrally managed wired 1470 appliances. Six LAN ports are connected (some of them with VLANs), and the WAN and DMZ ports are connected as well. The main Internet connection is 100 Mbps and the backup one is 20 Mbps. There are a few site-to-site VPNs, Remote Access users and the usual inbound NATs (DNS, SMTP, Web, etc.). The appliance serves as both the internal and the external firewall. Other than that, nothing really special.

As we are a consulting company, our users are very active on the Internet and constantly need different types of access. It is very dynamic and sometimes challenging, but also very interesting.

For most SMB deployments there is really no need for much tweaking. But when the setup is at least as complex as mine, you will likely need a number of adjustments to optimize performance.

HARDWARE

As you all know, SMB appliances offer very limited hardware upgrade options, and there is not much you can do here. You cannot upgrade any of the key components (CPU, RAM, HDD); the most you can do is add an SD card to compensate a little for the limited disk space.

A mistake I made was to trust a local Check Point partner who convinced me at the time that a 1470 would be more than enough for my needs. They also told me that, if needed, I could later unlock the 4th CPU core and effectively upgrade it to a 1490. As you all know, that is not true. Anyway, my point here is: always go for the appliance with the maximum number of CPU cores. Nothing else boosts performance more than that.

One more thing to do is to make sure there are no connectivity problems. Use high-quality cables at least 1.5 m long. Unfortunately, Gaia Embedded does not have as many diagnostic tools as Gaia, but you can still use ‘ifconfig’ to analyze interface statistics and see whether you have any errors, drops, overruns, etc. My appliances are connected to a Cisco 3750 stack and I use its tools for this as well. If you have any interface problems, solve them first, and then check periodically, either manually or automatically, that everything still looks good.
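To automate the periodic check mentioned above, a small filter can flag non-zero error, drop and overrun counters. This is a minimal sketch that assumes the classic net-tools/BusyBox `ifconfig` layout (`RX packets:N errors:N dropped:N ...`); the function name `check_if_errors` is hypothetical, so verify the output format on your own firmware first.

```shell
#!/bin/sh
# Hypothetical helper: list interfaces whose RX/TX error, drop or overrun
# counters are non-zero. Assumes the classic net-tools/BusyBox ifconfig layout:
#   eth1      Link encap:Ethernet  HWaddr ...
#             RX packets:100 errors:0 dropped:3 overruns:0 frame:0
check_if_errors() {
    awk '
        # A line that starts with a non-blank character opens a new interface block
        /^[^ \t]/ { iface = $1 }
        # Counter lines look like "RX packets:N errors:N dropped:N overruns:N ..."
        /(RX|TX) packets:/ {
            for (i = 2; i <= NF; i++) {
                split($i, kv, ":")
                if ((kv[1] == "errors" || kv[1] == "dropped" || kv[1] == "overruns") && kv[2] + 0 > 0)
                    print iface, $1, kv[1], kv[2]
            }
        }
    '
}
```

On the appliance you would run `ifconfig -a | check_if_errors`; empty output means all of these counters are zero. The counters are cumulative since boot, so comparing two runs over time tells you more than a single non-zero value.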

Most SMB appliances do not run very hot and require no special cooling. You can use the ‘show diag’ command to monitor temperature and voltages; unfortunately, these readings are not available via SNMP. The catch is that if the CPU starts to overheat, the OS may shut down one or more cores, which causes a very dramatic performance degradation.

SMB appliances have a very limited amount of RAM. Right after startup, free memory is about 1.5 GB without TP blades enabled and about 1.3 GB with them.

There is no swap space configured. Initially I thought this was intentional, but after checking the vm.swappiness kernel parameter I now think it is because there is not enough storage space to reserve for it. It is possible to create (mkswap) and activate (swapon) swap space and experiment with how it affects performance. This is on my TODO list, so stay tuned.
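For anyone who wants to try the experiment, the steps are the usual Linux ones: create a file, format it with `mkswap` and enable it with `swapon`. This is an untested sketch for Gaia Embedded; `SWAPFILE`, `SIZE_MB`, `DRY_RUN` and the `/mnt/sd` path are hypothetical, and nothing here re-enables the swap after a reboot.

```shell
#!/bin/sh
# Minimal sketch: create and activate a swap file.
# SWAPFILE and SIZE_MB are hypothetical defaults; point SWAPFILE at storage
# with enough free space (for example an SD card) before running for real.
# Set DRY_RUN=1 to print the commands instead of executing them.
SWAPFILE=${SWAPFILE:-/mnt/sd/swapfile}
SIZE_MB=${SIZE_MB:-256}

# Execute a command, or only echo it in dry-run mode
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

enable_swap() {
    run dd if=/dev/zero of="$SWAPFILE" bs=1M count="$SIZE_MB" || return 1
    run chmod 600 "$SWAPFILE" || return 1
    run mkswap "$SWAPFILE" || return 1
    run swapon "$SWAPFILE"
}
```

Run `DRY_RUN=1 enable_swap` first to review the commands. Afterwards, `grep Swap /proc/meminfo` shows whether swap is active and `cat /proc/sys/vm/swappiness` shows the current swappiness value.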

FIRMWARE

If you haven’t yet upgraded to the latest R77.20.87 JHF (build 004 at present), do it now. It is the most stable and efficient firmware available to date and I highly recommend it. It still has some problems, but those affect all builds because of design issues and restrictions.

The Linux kernel is version 3.10, the same as on Gaia appliances. Something worth pointing out.

Firmware on SMBs boots relatively fast, but then it does a lot of initialization. For the first few minutes the appliance may be heavily loaded with running scripts, checking for updates, preparing statistics, etc. In a cluster environment, do not hurry to fail over: wait at least 5-10 minutes for the node to settle down.
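Before failing over, a quick look at the node's uptime can enforce the 5-10 minute rule. This is a minimal sketch with a hypothetical helper `node_settled`; it only reads `/proc/uptime` and cannot tell whether the initialization scripts have actually finished.

```shell
#!/bin/sh
# Hypothetical pre-failover check: has this node been up long enough to settle?
# Reads the first field of /proc/uptime (seconds since boot). The 600 s default
# is the upper end of the 5-10 minute rule of thumb.
node_settled() {
    uptime_file=${1:-/proc/uptime}
    min_seconds=${2:-600}
    up=$(cut -d' ' -f1 "$uptime_file") || return 2
    up=${up%.*}    # drop the fractional part
    [ "$up" -ge "$min_seconds" ]
}
```

Usage: `node_settled && echo "OK to fail over" || echo "Still settling, wait"`.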

MONITORING

Also set up SNMP monitoring for all interconnected network devices. Honestly, you can’t optimize performance efficiently without something like that. I use Zabbix, but there are many similar tools. On SMB you can monitor network interfaces, memory and storage, load average, VPNs, etc., and that is more than enough. Sadly, the Monitoring blade is not available, so you cannot benefit from it. Also, some readings in SmartConsole are not very accurate for SMB, so don’t rely too much on them either.

LOGGING

No doubt, central management offers the best possible logging, and you should use it quite a lot to monitor ongoing traffic and adjust the configuration. Prepare predefined queries for each enabled blade, for accepted and dropped traffic, and for the VPN and Remote Access blades. Make sure you know how to write filters; they can be quite handy for spotting traffic anomalies.

Log files on SMB are a pure mess, but the ones I monitor the most are:

```
# tail -f /var/log/messages
```

Really not much to see here compared to Gaia.

```
# tail -f /var/log/log/sfwd.elg
```

This one is the most useful to watch for problems, errors, etc.

```
# dmesg
```

Not a file, but the command that displays the kernel message buffer.
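When watching sfwd.elg, it can help to filter the stream down to lines that look like problems. A minimal sketch; the function name and the keyword list are assumptions, not an official list of sfwd messages.

```shell
#!/bin/sh
# Minimal sketch: pass through only lines that look like problems.
# The keyword list is an assumption; extend it with what you see in your own logs.
problem_lines() {
    grep -iE 'error|fail|crash|timeout'
}
```

Usage: `tail -f /var/log/log/sfwd.elg | problem_lines`.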

Note: In case of an OS crash there will be *panic* files in the /logs directory. Download them and open an SR for investigation, if you have the nerves to tackle it with TAC 😀

Logging comes at a cost. It requires resources and may considerably slow down performance. Once you tune the rule base, think very carefully about what you really need to log and disable everything else. This is especially true for your top-hit rules. Keep in mind that sometimes, in order to log something, the appliance needs to do additional work (identify the source, determine the app category, etc.). For example, I do not log traffic going to the NTP server: it is one of my top-hit rules and logging it is not of much use anyway. The same applies to SNMP and ICMP.

Always log dropped traffic unless you are absolutely sure you don’t need it! It will save you a lot of problems. Sacrificing performance here is well worth it. The only drop rule I do not log is the one that drops broadcast traffic.

Take some time to sit down and observe the traffic going through the firewall: how much of it is dropped or rejected, how much is accepted or translated, how much is encrypted or inspected. Knowing that will almost immediately give you a clue about what to tweak.

CONFIGURATION IN WEBUI

For a centrally managed SMB there is not much you can do here to optimize performance. Make sure you are using reliable NTP server(s), set up SNMP, and set ‘Move temporary policy files to storage’ under Additional Management Settings to True. Also, if possible, avoid using built-in services such as DHCP; use DHCP relay instead. Take a backup and log out; you are done here.

CONFIGURATION IN SMARTCONSOLE

This is where all the fun happens. You will be tempted to enable everything possible right from the start. Don’t do that. Enable blades one by one instead. It takes more time, but it makes it much easier to identify where performance degradation comes from. I understand that this is often not possible because it sacrifices security as well; in such cases, at least keep the initial blade configuration as “light” as possible and go from there.

Before you start adding rules, make sure the key firewall subsystems are active and working. Use the following commands:

```
# fwaccel stat
Accelerator Status : on
Accept Templates : enabled
Drop Templates : disabled
NAT Templates : disabled by user
```

```
# fw ctl multik stat
ID | Active | CPU | Connections | Peak
----------------------------------------------
0 | Yes | 0 | 694 | 1797
1 | Yes | 1 | 254 | 1334
2 | Yes | 2 | 328 | 1194
```

```
# fw ctl pstat
System Capacity Summary:
Memory used: 27% (289 MB out of 1050 MB) - below watermark
Concurrent Connections: 0% (1189 out of 149900) - below watermark
Aggressive Aging is not active
```

Only in a cluster environment:

```
# cphaprob stat
Cluster Mode: High Availability (Active Up) with IGMP Membership
Number Unique Address Assigned Load State
1 10.231.149.1 100% Active
2 (local) 10.231.149.2 0% Standby
```

```
# top
```

Check the load average, CPU load counters and memory values.

```
# ifconfig -a
```

Check for drops, errors and overruns.

There are even more diagnostic commands you can run, but these are the bare minimum. Investigate and fix anything that looks suspicious, and do not postpone it: later, when things get complicated, you may no longer have the chance.
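Running these checks by hand every time is tedious, so it can be handy to collect them into one timestamped report that you can compare before and after a change. A minimal sketch: the function name `snapshot` is hypothetical, `uptime` stands in for the interactive `top` to capture the load averages, and `cphaprob stat` should be removed on a standalone appliance.

```shell
#!/bin/sh
# Minimal sketch: run the baseline diagnostics and print one timestamped report.
snapshot() {
    echo "##### $(date) #####"
    while IFS= read -r cmd; do
        [ -n "$cmd" ] || continue
        echo "===== $cmd ====="
        # Unquoted on purpose so the line splits into command + arguments;
        # stdin is detached so a command cannot swallow the rest of the list.
        $cmd </dev/null 2>&1
    done <<'EOF'
fwaccel stat
fw ctl multik stat
fw ctl pstat
cphaprob stat
uptime
ifconfig -a
EOF
}
```

Usage: `snapshot > /tmp/baseline.txt` (the path is just an example; keep the limited storage in mind).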

LOAD AVERAGES

I find this to be the most accurate indicator of system performance.

Many articles on the Internet explain that on Linux the ideal load average equals the number of CPU cores, so on a quad-core processor, for example, it is 4.00. That is true in general, but due to design issues the CPU cores are not equally loaded on Gaia Embedded, so load averages over 2.00 usually indicate an overloaded system.

The load average is reported over 1, 5 and 15 minutes. On SMB the load average rises very quickly, so if you want to catch load spikes, sample the 1-minute value once a minute.
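A minimal sketch of such a sampler, reading the 1-minute value from the first field of `/proc/loadavg`. The function names are hypothetical; for long-term data your SNMP monitoring tool is the better place to do this, so treat it as a quick ad-hoc check on the appliance.

```shell
#!/bin/sh
# /proc/loadavg starts with the 1, 5 and 15 minute values, e.g. "0.52 0.61 0.70 1/123 4567".
load1() {
    cut -d' ' -f1 "${1:-/proc/loadavg}"
}

# Print a timestamp plus the 1-minute load average
sample_load() {
    echo "$(date '+%Y-%m-%d %H:%M:%S') $(load1 "$1")"
}

# Sample once a minute until interrupted (Ctrl+C)
sample_forever() {
    while :; do
        sample_load "$1"
        sleep 60
    done
}
```

Usage: `sample_forever >> /tmp/load.log &` (example path).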

This is how load average affects stability and performance:

- < 1.00

The load average is low and there should be no performance or stability issues.

- 1.00 – 2.00

The appliance is loaded, but not too much. There should be no stability issues, but there may be some performance degradation. If it stays like that for a long time, consider optimizing.

- 2.00 – 3.00

The appliance is moderately loaded. Again, there should be no stability issues, but users will likely start to notice. This often happens when a large transfer is going on (an elephant flow). Check interfaces and logs to identify where it comes from and optimize if possible.

- 3.00 – 5.00

The appliance is heavily loaded. There might be packet drops, site-to-site VPNs may go down and voice connections start to suffer. Again, identify the source and take the necessary actions.

- > 5.00

The appliance is severely overloaded and all kinds of fancy things may happen, including loss of connectivity, kernel crashes and spontaneous reboots.
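The bands above can be expressed as a small helper that labels a 1-minute load value. This is a sketch with a hypothetical `load_band` function; the thresholds are the ones from the list and reflect this setup, not official Check Point limits.

```shell
#!/bin/sh
# Map a 1-minute load average to the bands from the list.
# Boundaries: 1.00, 2.00, 3.00 and 5.00 (5.00 itself still counts as heavy).
load_band() {
    awk -v l="$1" 'BEGIN {
        l += 0
        if (l < 1)       print "low"
        else if (l < 2)  print "loaded"
        else if (l < 3)  print "moderate"
        else if (l <= 5) print "heavy"
        else             print "severe"
    }'
}
```

Usage: `load_band "$(cut -d' ' -f1 /proc/loadavg)"`.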

A constant load above 4.00 means the system is not configured optimally or the traffic is simply too much for it. In very rare cases it might be a hardware problem, which is a bit difficult to diagnose. If the traffic is simply too much, you have few options other than upgrading to a more powerful device.

But if the system is moderately loaded or there are occasional spikes, there is usually room for optimization. Your first task is to locate where the problem comes from.

On most SMB appliances, performance issues are almost always related to the Threat Prevention (TP) blades. I tested with only the FW and VPN blades enabled, and even with SecureXL disabled the performance was still quite acceptable.

Unlike Gaia, Gaia Embedded has few acceleration capabilities. In fact, SecureXL is the only one you can play with. There is CoreXL as well, but there is nothing there you can really tweak for a performance boost. So your first and only target is to optimize SecureXL as much as possible.

On SMB, only Accept templates are enabled by default. You can enable NAT templates, but I do not think that helps much, and it may actually cause problems. Drop templates are not supported.

Dropped packets make up a significant portion of the traffic, especially if the appliance is connected to the Internet. One thing you can do to help your firewall with dropped traffic is to use the ‘sim dropcfg’ tool.

Start by analyzing the packets that are dropped. You will soon notice that most of the dropped traffic coming from the Internet is port scanners probing common ports such as 22, 23, 80, 139, 445 and 3389.

Prepare a file named simdrop.db with the following content (proto 6 is TCP):

```
dport 22 proto 6
dport 23 proto 6
dport 80 proto 6
dport 139 proto 6
dport 445 proto 6
dport 3389 proto 6
```

Then load it with ‘sim dropcfg -e -y -f simdrop.db’. If you are under attack from a single IP or an entire network, you can block it here as well. Dropping traffic with ‘sim dropcfg’ is more efficient than dropping it in the policy, and it does not require a policy install. In case of a DDoS there is not much you can do, as Gaia Embedded offers no protection against it. This is manual work, but it is still better than nothing.
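If the list of ports grows, the file can be generated instead of written by hand. A minimal sketch with a hypothetical `make_simdrop` helper that emits the same `dport <port> proto 6` lines (TCP only) and skips anything that is not a valid port number.

```shell
#!/bin/sh
# Minimal sketch: print one "dport <port> proto 6" line per valid TCP port argument.
make_simdrop() {
    for port in "$@"; do
        case $port in
            ''|*[!0-9]*)
                echo "skipping invalid port: $port" >&2
                continue ;;
        esac
        if [ "$port" -gt 65535 ]; then
            echo "skipping out-of-range port: $port" >&2
            continue
        fi
        echo "dport $port proto 6"
    done
}
```

Usage: `make_simdrop 22 23 80 139 445 3389 > simdrop.db`, then load it with ‘sim dropcfg -e -y -f simdrop.db’ as above.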

If you have a cluster and want to avoid editing simdrop.db on both nodes, you can put it on a web server and load it with a script similar to this one:

```shell
#!/bin/sh
#
# Download and activate new sim dropcfg database
#

# Set working directory
cd /pfrm2.0/etc/ || exit 1

# Download database from server
echo "Downloading latest simdrop.db file"

# Check for a transfer error (an HTTP error page would still pass this test)
if ! curl_cli -s http://192.168.0.1/simdrop.db > simdrop.db.new; then
    echo "Failed to download database file"
    rm -f simdrop.db.new
    exit 1
fi

# Refuse to load an empty file
if [ ! -s simdrop.db.new ]; then
    echo "Downloaded database file is empty"
    rm -f simdrop.db.new
    exit 1
fi

# Replace database file
mv -f simdrop.db.new simdrop.db

echo "Reset simdrop"
sim dropcfg -y -r

echo "Load simdrop configuration"
sim dropcfg -e -y -f /pfrm2.0/etc/simdrop.db
```

If dropped traffic is still the majority, try to rearrange the rule base so that drop rules are as high as possible. For example, I use a negated cell to drop all traffic to my external IPs that is not destined for published ports. That is about as much as you can do to minimize the firewall's work on dropped traffic.

If you follow the guidelines and best practices, you will have an access policy whose accepted traffic is optimized for acceleration as much as possible. A lot has been written about this, and it is all valid for Gaia Embedded as well. Just follow it.

Forget about load-balancing Internet connections. It will disable SecureXL and this is pretty much game over on SMB.

Application and TP policies are a completely different story. Compared to the latest Gaia versions there are many differences, limitations, missing optimizations and so on. That is why a properly tuned policy can make a big difference.

APCL/URLF

The rule to avoid Any Any Any applies here as well, but unlike the access policy you don’t need a cleanup rule. Just remove it and ignore the SmartConsole warning that it is missing. Organize the rule base like this:

- Define a group of destination IPs and networks that you don’t want to inspect. These can be peer site-to-site networks, HTTPS VPN gateways, etc. Create an accept rule for it and place it as high in the rule base as possible.

- Define a group of URLs that you don’t want to inspect. Use Regular Expression mode only. For example:

`(^|.*\.)*checkpoint\.com`

- Define a group of URLs that you want to block. Again, use regular expressions.

- Define a group of categories that you want to block without showing a block page (for example Web Advertisements, Analytics sites, etc.).

- Define a group of IPs or networks with unlimited access (this is needed for debugging and troubleshooting).

- Define the rest of the rule base as you need, but follow the rules regarding SecureXL optimization.
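Before putting a regular expression into a custom site object, it can be worth testing it against a few sample host names. A minimal sketch with a hypothetical `match_hosts` helper built on `grep -E`; this is only an approximation, since POSIX ERE as implemented by grep is not necessarily identical to the gateway's pattern matcher.

```shell
#!/bin/sh
# Minimal sketch: print the host names (one per line on stdin) that match
# the extended regular expression given as $1.
match_hosts() {
    grep -E -- "$1"
}
```

Usage: `printf 'www.checkpoint.com\ncheckpoint.com\nexample.com\n' | match_hosts '(^|.*\.)*checkpoint\.com'`. With grep's semantics the group may repeat zero times, so the example pattern also matches `mycheckpoint.com`; testing like this makes such side effects visible before they reach the policy.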

Avoid logging what is not necessary. Avoid showing a block page where it is not necessary. Avoid using speed limits where they are not necessary. Avoid groups with exclusions and access roles. All these things affect performance. Keep in mind that filtering by URL (when possible) is more efficient than inspecting the entire stream to determine the application or category. The latter is, however, more reliable and secure. Use common sense here.

Now go to the command line and check the SecureXL statistics with ‘fwaccel stats -s’. The majority of the traffic should be in the accelerated or medium (PXL) path. If not, you are doing something wrong. To fine-tune further, examine the traffic in SmartConsole using the Tops pane to see which apps and URLs are most commonly inspected, and consider whether you can skip some of these inspections, for example by applying them only to certain internal IPs or networks.
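Reading the path distribution from the raw output is easy, but a small parser helps if you check it regularly. This is a hypothetical sketch: the function name is made up, it assumes summary lines shaped like `F2Fed pkts/Total pkts : 600/1000 (60%)`, which may not match your firmware's output exactly, and the 50% warning threshold is only an interpretation of “majority” above.

```shell
#!/bin/sh
# Hypothetical parser for 'fwaccel stats -s' output. It ASSUMES summary lines like
#   "F2Fed pkts/Total pkts : 600/1000 (60%)"
# Check the real output on your firmware and adjust the patterns if it differs.
path_shares() {
    awk -F':' '/pkts\/Total pkts/ {
        label = $1; sub(/[ \t]+$/, "", label)
        pct = $2; sub(/.*\(/, "", pct); sub(/%.*/, "", pct)
        print label ": " pct "%"
        # Most traffic should be accelerated or PXL, i.e. not F2F (slow path)
        if (label ~ /^F2F/ && pct + 0 > 50) print "WARNING: most packets are going F2F (slow path)"
    }'
}
```

Usage: `fwaccel stats -s | path_shares`.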

Once you are satisfied with APCL/URLF performance, it is time to look at HTTPS-I. With the majority of traffic nowadays being HTTPS, you just can’t avoid it anymore.

HTTPS-I

This is where things start to get a bit ugly. HTTPS-I is an expensive task involving proxying connections, decryption, encryption, certificate checks, etc. It all requires a lot of precious CPU cycles. This blade is used not only by APCL/URLF but also by the IPS, AB and AV blades. As such, it is the bottleneck, and it is worth spending every minute you can to optimize it as much as possible.

You will work on the HTTPS-I policy in SmartDashboard. The default policy offers a set of Bypass rules followed by an Inspect rule as cleanup. This is all wrong and should be the other way around! A properly defined HTTPS-I policy should look something like this:

- At the top, put a rule that bypasses the group of destination IPs and networks you already defined.

- Next, a bypass rule for the group of URLs that you don’t want to inspect.

- Next, a bypass rule for the internal IPs and networks with unlimited access.

- Next, the outbound and inbound inspect rules.

- Last, a bypass rule with Any Any.

The service must always be HTTPS and HTTP_and_HTTPS_proxy; never put Any here. I log only the Inspect rules.

Now check the SecureXL statistics and the load average again. The load average is of course higher now, but it should still be below 2.00.

As before, inspect the HTTPS-I logs to see what is most commonly inspected and whether you can avoid it somehow.

IPS

I haven’t really done anything other than follow @G_W_Albrecht's excellent post about optimizing IPS for SMB:

Be careful with the ‘Bypass IPS under load’ option. If not properly tuned, it will cause more problems than it solves. If the CPU load fluctuates around the preset limit, the bypass enters an enable-disable loop, and in the end you may lose connectivity. If acceptable for you, set up a cron job that disables the IPS blade (ips off) during the time of day when the appliance is busiest. Don’t forget to re-enable it (ips on) afterwards though 😀 It is questionable how efficient IPS is on SMB anyway. I have no problem disabling it from time to time.
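A minimal sketch of such a schedule, wrapping the `ips off` / `ips on` commands mentioned above. The busy window (`BUSY_START`/`BUSY_END`) and the function names are hypothetical, and cron's `PATH` may not include the `ips` command, so you may need its full path.

```shell
#!/bin/sh
# Hypothetical busy window (24h clock); set it to your own peak hours.
BUSY_START=${BUSY_START:-9}
BUSY_END=${BUSY_END:-17}

# Print the desired IPS state ("off" or "on") for a given hour (00-23)
ips_action() {
    hour=${1#0}          # "09" -> "9"
    hour=${hour:-0}      # "00" or "0" -> "0"
    if [ "$hour" -ge "$BUSY_START" ] && [ "$hour" -lt "$BUSY_END" ]; then
        echo off
    else
        echo on
    fi
}

# Intended to be run from cron at the start and the end of the busy window
apply_ips_schedule() {
    ips "$(ips_action "$(date +%H)")"
}
```

Called from a crontab entry such as `0 9,17 * * 1-5 /path/to/ips_schedule.sh` (hypothetical path, with the script ending in `apply_ips_schedule`), it switches IPS off at the start of the window and back on at the end.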

AB/AV

I am using only the AB blade, but I can’t recall doing any special optimizations here. If anyone can elaborate on this, it would be much appreciated. What I keep in mind is that the AB blade puts almost all relevant traffic in the PXL path.

Last but not least, consider adding some exceptions for the TP blades. Sometimes these help as well.

POLICY INSTALLATION

Policy installations are time-consuming (especially with TP blades enabled) and can sometimes cause a temporary, dramatic increase in load average. If the IA blade is enabled, a few processes spawn after policy install to refresh the Identity Awareness data. Just try not to install policy when the load average is high or expected to rise soon.

FINAL WORDS

This is far from a comprehensive guide and is still a work in progress. Feel free to comment, experiment and share your findings here.

6 Replies


Thank you for your efforts putting this comprehensive guide together. 👍

I’ll add a link to this in our 1400 and 1500 Appliance FAQ.


Great work!

Note that the 1500 series is using a newer kernel (4.x, can't remember the exact version).


Thanks, glad you like it.

The 1500 SMBs use the 4.14.76-release-1.3.0 kernel, which is certainly an advantage over the 1400 series.


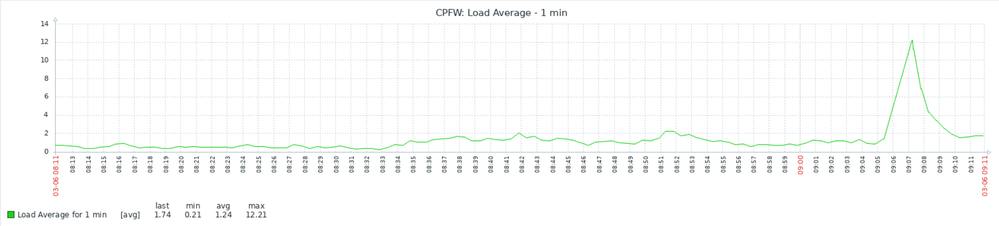

An example of how the load average can go crazy on SMB. Builds before 990173004 will definitely crash in such a case. With the latest JHF, however, only the site-to-site VPNs went down and the IA processes crashed.

Btw, for some time now I have suspected that these sudden spikes are caused by the IA blade, because traffic at the time was really not that heavy. Unfortunately, disabling the IA blade is not an option for me, so I can't really test it.


Great article, thanks for sharing!


Great article. The only thing I would add is that using the "*" character for matching in custom application/site objects (such as `(^|.*\.)*checkpoint\.com`) causes a much heavier load on the gateway's Pattern Matcher code due to greedy matching. This was revealed relatively recently, and it is serious enough that new versions of hcp look for this type of configuration and flag it. There is an easy alternative configuration that functions the same but avoids "*"; see these:

Custom Sites and RegExp Wildcard Efficiency

Custom Application/Site Findings

sk165094: Best Practices - Custom Applications/Sites for Application Control and URL Filtering

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

