
"It's not the firewall"

Hi (check)mates,

We all know that "the firewall" is one of the first things people blame when there is a traffic issue. A security gateway (a "firewall") does a lot of "intelligent stuff" beyond just routing traffic (and, in fact, many network devices today also do "a lot of things"), so I understand there is good reason to suspect the firewall. At the same time, there are many cases where the issue has nothing to do with it, or is only indirectly related to it.

I'm looking to build a brief list of typical or fairly frequent issues we face, where "the firewall" is reported as the root cause of the issue, but ultimately isn't.

It's quite a generic topic, and in terms of troubleshooting it's probably even more generic. There are several simple tools one should probably use first, like traffic logs, fw monitor, tcpdump/cppcap, etcetera. But what I would like to focus on is not the troubleshooting but the issues themselves. Of course, this assumes the firewall side is properly configured (otherwise it would be a "firewall issue", just one caused by a bad configuration).

To narrow the scope, I'm especially focusing on networking issues, but every idea is welcome.

Do you think such a list would be useful? 🙂 What issues do you usually find?

Something to start with (I'll update this list with newly suggested issues):

- A multicast issue with the switches, impacting the cluster behavior.

- A VLAN is not propagated to all the switches involved in the cluster communication, especially in VSX environments where not all VLANs are monitored by default.

- Related to remote access VPN (this year has been quite active in that matter): some device on the WAN side is blocking the ISAKMP packets on UDP 4500 directed to our gateways, but not all UDP 4500 traffic. Typically, another firewall 🤣

- Asymmetric routing issues, where the traffic goes through one cluster member and comes back through the other.

- Static ARP entries in the "neighbor" routing devices, or ARP cache issues.

- Any kind of issue with Internet access: DNS queries not allowed to the Internet or to the corporate DNS servers (so we cannot resolve our public domains), TCP ports blocked, or a required URL blocked (typically by a proxy)...

- Traffic delays: these are typically more difficult to diagnose. fw monitor with timestamps is one of our friends here.

- Layer 1 (physical) issues. Don't forget to review the hardware interface counters!

- A missing route at the destination, especially the routes for the encryption domains in a VPN.

- Why not: another firewall blocking the communication, of course 😊 Or a forgotten transparent layer-7 device in the middle (like an IPS), installed in a previous age. A variant of this one: "it's not my firewall".

- An application or server issue. The simplest example is that the server is not listening on the requested port. A more complex one would be an application-layer issue.
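For the UDP 4500 case in the list above, it helps to remember that with NAT traversal (RFC 3948) IKE and ESP share that port: IKE messages are prefixed with a four-byte all-zero "non-ESP marker", ESP packets start with a non-zero SPI, and a payload consisting of the single byte 0xFF is a NAT-keepalive. A device in the path that looks at payloads can therefore drop IKE while "UDP 4500" as a whole still passes. The Python sketch below shows that classification, assuming you already have the raw UDP payload bytes; `classify_udp4500` is a hypothetical helper name, not part of any Check Point tool.

```python
def classify_udp4500(payload: bytes) -> str:
    """Classify a UDP/4500 payload following RFC 3948 (UDP-encapsulated ESP)."""
    # A payload of exactly one 0xFF byte is a NAT-keepalive.
    if payload == b"\xff":
        return "nat-keepalive"
    # Shorter than 4 bytes: neither a non-ESP marker nor an ESP SPI fits.
    if len(payload) < 4:
        return "unknown"
    # IKE over port 4500 is preceded by a 4-byte all-zero non-ESP marker.
    if payload[:4] == b"\x00\x00\x00\x00":
        return "ike"
    # Otherwise the first 4 bytes are the (non-zero) ESP SPI.
    return "esp"
```

If a capture on the outside of the gateway shows "esp" packets from a remote client but never any "ike" ones, the negotiation traffic is probably being filtered before it reaches the gateway.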

Lastly, a little humor. 😊

---

Accepted Solutions

Oh boy, where do I even start with this one. Let's see if anyone here can top this story, it was many years ago but just about made me lose my mind.

I was asked to take a look at a problem for a midsize Check Point customer. Apparently a certain file was being blocked by the firewall with no drop log, and no one could figure out why. I agreed to take a remote look thinking it would be an easy fix; little did I know that it would stretch into many long days, culminating in threats to rip out all Check Point products.

So when Customer Windows System A would try to transfer a certain file using cleartext FTP through the firewall to Customer Windows System B, the transfer was stalling at about 5MB and never recovering, always in the exact same place in the file. All other files could be transferred just fine between the two systems and there were no performance issues, and by this time the problematic file had been dubbed the "poison file". The file would also be blocked if it was sent to a completely different destination "System C" through the firewall. Sending the poison file between systems located on System A's same VLAN/subnet worked fine, so the finger was being pointed squarely at the firewall. Customer was only using Firewall and IPSec VPN blades on this particular firewall, so no TP or APCL/URLF. Matching speed and duplex were verified on all network interfaces and switchports.

So I log in to the Check Point firewall and run fw ctl zdebug drop. Transfer of the poison file commences and stalls as expected. Nothing in the fw ctl zdebug drop output relates to the connection at all, so it is not being dropped/blocked by the Check Point code. Next I disable SecureXL for System A's IP address and start an fw monitor -e. I see all packets of the poison file successfully traversing the firewall, then everything associated with that connection suddenly stops at the time of the stall. No retransmissions, FIN/RST or anything at all; it just stops with no further packets. I see exactly the same thing with tcpdump, so it isn't SecureXL (which is disabled anyway). I pull all packets up to that point from both the fw monitor and tcpdump captures into Wireshark, and everything is perfectly fine with the TCP window, ack/seq numbers and everything else; Wireshark doesn't flag anything suspicious. The connection's packets just stop...

We theorize that perhaps System A is dropping the "poison" packet due to some kind of local firewall/antivirus software and never transmitting it; the customer makes sure all of that is disabled, with no effect. Next I request to install Wireshark on System A, as there is nowhere else to easily capture packets between System A and the firewall. At this point the grumbling starts from the customer's network team, who are not very fond of Check Point and would like nothing more than to see them all replaced with Cisco firewalls. System A's administrator is not very happy about it either, but eventually agrees. We start a Wireshark capture on System A along with an fw monitor -e on the firewall. Poison file transmission starts and then stalls as expected. I notice in the local Wireshark capture that at the time of the stall, System A starts retransmitting the same packet over and over again until the connection eventually dies. Thing is, I'm not seeing this "poison packet" or any of its retransmissions at all in fw monitor and tcpdump; all I see are the packets leading up to it. So as an example, System A is retransmitting TCP SEQ number 33 over and over again, but all I see on the firewall's captures are sequence numbers up through 32. The customer's network team certainly likes this finding, as System A has now been verified as properly sending the poison packet to the firewall.

This eventually leads to a conversation where I ask exactly what is physically sitting between System A and the firewall's interface. Just a single Cisco Layer 2 switch and nothing else they tell me. I ask them to manually verify this by tracing cables (cue more grumbling) as I suspect there may be some kind of IPS or other device that doesn't like the poison packet or its retransmissions. They verify the path and only the Layer 2 Cisco switch is there. They try changing switchports for the firewall interface and System A, no effect. I personally inspect the configuration of the switch and it is indeed operating in pure Layer 2 mode with no reported STP events or anything else configured that could drop traffic so specifically like this.

The next step is to try replacing the switch. The customer wants to replace it with a switch of the same model, but I insist that during a downtime window we use an unmanaged switch that is as stupid as possible, cabling System A and the firewall directly through it. This requires coordinating a downtime window, and as a result I'm hearing that the issue is now being escalated inside the customer's organization beyond the Director level, and VPs are starting to get involved. Thinly-veiled threats to pull out this Check Point firewall and the 10 or so others they have are starting to percolate. We get the downtime window approved and hook System A and the firewall together using a piece of crap $30 switch from Best Buy. We start the transfer and...and...

it still stalls at exactly the same place; everything still looks exactly the same in all Wireshark and firewall packet captures. The customer's network team is getting pretty smug now thinking they have won and they'll soon be using Cisco firewalls. At this point I'm on the brink of an existential crisis and starting to seriously question my knowledge and experience. Humbling to say the least.

Finally in desperation this conversation ensues:

Me: What is physically between System A and the firewall?

Them: We already told you, the Cisco switch which is clearly working fine.

Me: How far apart physically are the involved systems in your Data Center? (thinking electrical interference)

Them: Not sure how that is relevant but System A is about 10 feet from the switch and direct wired, and the firewall is on the other side of the Data Center about 80 feet away.

Me: System A is direct wired to the switch with a single cable?

Them: (Exasperated) Yes.

Me: And the firewall is direct wired to the switch with a long Ethernet cable run across the room?

Them: Er, no it is too far for that.

Me: Wait, what?

Them: There is an RJ45 patch panel in the same rack as the switch, which leads to a patch panel on the other side of the room near the firewall.

Me: And you are sure there is not some kind of IPS or other device between the ports of the patch panels?

Them: Dude come on, it is just a big bundle of wire going across the room. The firewall is direct wired to the patch panel on that side.

Me: What is the longest direct Ethernet cable you have on hand?

Them: What?

Me: What is the longest direct Ethernet cable you have on hand?

Them: Dunno, probably 100 feet or so.

Me: I want you to direct wire the firewall to the switch itself, bypassing the patch panel. Throw the long cable on the floor for now.

Them: Come on man, that won't have any effect.

Me: Do it anyway please.

Them: I'm calling my manager, this is ridiculous.

(click)

So after some more teeth gnashing and approvals we get a downtime window, the customer has already reached out to Cisco for a meeting at this point and things are looking grim. The cable is temporarily run across the floor of the data center directly connecting the switch and firewall. From the firewall I see the interface go down after being unplugged from the patch panel and come back up with the direct wired connection. I carefully check the speed and duplex on both sides to ensure there is no mismatch. Watching on a shared screen with a phone audio conference active, the transfer of the poison file starts....

BOOM. The poison file transfers successfully in a matter of seconds.

An amazing litany of foul language spews from my phone's speaker, combined in creative ways that would make even a sailor blush. Eventually someone manages to slam the mute button on the other end. After they come back off mute, they incredulously ask what I changed. Nothing. Nothing at all. They don't believe me so I encourage them to hook everything back up through the same patch panel ports as before. They do so and launch the transfer. Immediate stall at 5MB again; they are much faster on the mute button this time. They move both sides to a different patch panel jack, poison file transfer succeeds no problem. Move it back to the original patch panel ports, and the poison file stall at 5MB is back.

At this point for the first time I check the firewall's network interface counters with netstat -ni which I hadn't really looked at due to the specificity of the problem. There are numerous RX-ERRs being logged, ethtool -S shows that they are CRC errors, which start actively incrementing every time a stall happens. Damnit.

So the best I can figure was a bad punch down in the RJ45 patch panel, and when a very specific bit sequence happens to be sent (that sequence was contained within the poison file) a bit would get flipped which then caused the CRC frame checksum to fail verification on the firewall. The packet would be retransmitted, the bit flip would happen again, and it was discarded again over and over. What I did not know at the time is that when a frame has a CRC error like this, it is discarded by the firewall's *NIC hardware* itself and never makes it anywhere near fw monitor and tcpdump, which is why I couldn't see it. There is no way to change this behavior on most NICs either. I never tried to figure out what the poison bit pattern was that caused the bit flip but I wouldn't be surprised if it consisted of the number 6 in groups of three or something. Jeez.
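The failure mode described above can be illustrated in a few lines: the Ethernet frame check sequence is a CRC-32 over the frame, the receiving NIC recomputes it, and on a mismatch the frame is discarded. Python's `zlib.crc32` implements the same CRC-32 (the IEEE 802.3 polynomial), so it can stand in for the NIC check in this toy sketch. The "frame" is arbitrary bytes and the flipped bit position is picked arbitrarily; it does not model the patch panel or the real poison pattern, and the helper names are hypothetical.

```python
import zlib


def fcs(frame: bytes) -> int:
    """CRC-32 over the frame bytes (same polynomial as the Ethernet FCS)."""
    return zlib.crc32(frame) & 0xFFFFFFFF


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with exactly one bit inverted."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


def nic_accepts(frame: bytes, received_fcs: int) -> bool:
    """Receiver-side check: recompute the CRC and compare it with the trailer."""
    return fcs(frame) == received_fcs


sent = b"poison file chunk " * 80   # 1440 arbitrary bytes standing in for a frame
sent_fcs = fcs(sent)                # value the sender would append as the FCS
received = flip_bit(sent, 1234)     # one bit corrupted in transit

print(nic_accepts(sent, sent_fcs))      # True: the intact frame passes
print(nic_accepts(received, sent_fcs))  # False: a real NIC would discard this frame
```

Because a CRC-32 detects every single-bit error, the same bit flipped on every retransmission means every copy fails the check, which matches the endless retransmissions seen on System A.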

So the big takeaway: CHECK YOUR BLOODY NETWORK COUNTERS.
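The RX-ERR numbers that netstat -ni reports come from the kernel's per-interface counters, which a Linux-based gateway such as Gaia also exposes in /proc/net/dev. A minimal Python sketch, assuming the standard /proc/net/dev layout (two header lines, then eight receive columns followed by eight transmit columns per interface): take one snapshot, reproduce the problem, take another, and report interfaces whose receive error counter grew. The function names are illustrative; ethtool -S is still what tells you whether those errors are CRC errors.

```python
def parse_proc_net_dev(text):
    """Parse /proc/net/dev text into {interface: {counter_name: value}}."""
    counters = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        if ":" not in line:
            continue
        name, data = line.split(":", 1)
        fields = [int(x) for x in data.split()]
        # Receive: bytes packets errs drop fifo frame compressed multicast,
        # then Transmit: bytes packets errs drop fifo colls carrier compressed
        counters[name.strip()] = {
            "rx_errs": fields[2],
            "rx_drop": fields[3],
            "rx_frame": fields[5],
            "tx_errs": fields[10],
        }
    return counters


def increasing_rx_errors(before, after):
    """Return {interface: increase} for interfaces whose rx_errs counter grew."""
    return {
        iface: after[iface]["rx_errs"] - before[iface]["rx_errs"]
        for iface in after
        if iface in before and after[iface]["rx_errs"] > before[iface]["rx_errs"]
    }


def read_snapshot(path="/proc/net/dev"):
    """Read and parse the current counters on a Linux host."""
    with open(path) as f:
        return parse_proc_net_dev(f.read())
```

For example, call `read_snapshot()` just before starting the transfer and again right after the stall, then pass both results to `increasing_rx_errors()`; any interface it returns is worth an ethtool -S.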

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

---

ARP cache issues often get blamed on the firewall.

---

Though this is a good topic to discuss, it's very broad when it comes to the knowledge and work done by different customers. Personally, I often find people blame the firewall without even doing basic network tests (ping, traceroute, route lookup...). I think it would be VERY HELPFUL if there were an sk on some basic things to check on CP firewalls before engaging TAC.

Best,

Andy

---

Sadly true. In fact, most of the issues (if not all) in this post would be valid for any firewall vendor.

Happy to know that you find it useful! I hope the wise people stop by this thread and leave their two cents 🙂

---

Oh, don't I have those stories...

Once, a customer in Scandinavia had a severe case of "the FW broke the VoIP system". After they replaced the FW appliances, their phone system could still place calls, but the parties could not hear each other. After several escalations, I visited the customer on-prem to see what the hell was going on. Long story short, someone had put a static ARP entry on their GateKeeper pointing to the "old" FW MAC address.
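A static ARP entry like that never ages out, so it survives any appliance swap. If the neighboring device is Linux-based (an assumption; the GateKeeper in this story may well not be), its ARP table can be read from /proc/net/arp, where permanent (static) entries carry the ATF_PERM flag, 0x04, in the Flags column. A minimal sketch that lists static entries whose MAC differs from the one you expect; `stale_static_arp` is a hypothetical helper, and the `expected` mapping of IP to MAC is an input you would fill in with the new firewall's addresses.

```python
ATF_PERM = 0x04  # Linux ARP flag: entry is permanent (static)


def stale_static_arp(arp_text, expected):
    """Return (ip, mac, device) for static entries whose MAC != expected[ip].

    arp_text: contents of /proc/net/arp
    expected: hypothetical {ip: mac} mapping of the addresses you expect
    """
    stale = []
    for line in arp_text.splitlines()[1:]:  # skip the header line
        parts = line.split()
        if len(parts) < 6:
            continue
        ip, _hw_type, flags, mac, _mask, device = parts[:6]
        is_static = int(flags, 16) & ATF_PERM
        if is_static and ip in expected and mac.lower() != expected[ip].lower():
            stale.append((ip, mac, device))
    return stale
```

Usage would be along the lines of reading `/proc/net/arp` and passing its text together with the firewall's VIP and the MAC it currently answers with.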

On the other hand, I have quite a few opposite examples, where I was pretty confident myself that it was not the FW, but was proven wrong.

---

Thanks!

I forgot the ARP issues, and that is definitely one of the typical things that may happen outside the gateway. It's not so common, but it happens from time to time.

---

I'll also add some other things I've thought about.

- Any kind of issue with Internet access: DNS queries not allowed to the Internet or to the corporate DNS servers (so we cannot resolve our public domains), TCP ports blocked, or a required URL blocked (typically by a proxy)...

- Traffic delays: these are typically more difficult to diagnose. fw monitor with timestamps is one of our friends here.
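For delays, a useful comparison is how long each packet spends between the fw monitor inspection points: if the gap between the pre-inbound point (i) and the post-outbound point (O) is small while the end-to-end delay is large, the time is being lost outside the gateway. A minimal Python sketch of that comparison, assuming the timestamps have already been extracted from a capture into `(packet_id, point, seconds)` tuples; that tuple format, matching packets by `packet_id`, and the threshold are all assumptions, not fw monitor's own output format.

```python
def transit_times(records):
    """Per-packet gateway transit time: timestamp at 'O' minus timestamp at 'i'.

    records: iterable of (packet_id, point, timestamp_seconds) tuples
    (hypothetical pre-parsed format).
    """
    first_in = {}
    last_out = {}
    for pkt, point, ts in records:
        if point == "i":            # pre-inbound: packet entering the gateway
            first_in.setdefault(pkt, ts)
        elif point == "O":          # post-outbound: packet leaving the gateway
            last_out[pkt] = ts
    return {pkt: last_out[pkt] - first_in[pkt]
            for pkt in first_in if pkt in last_out}


def slow_packets(records, threshold):
    """Packets that spent more than `threshold` seconds inside the gateway."""
    return {pkt: dt for pkt, dt in transit_times(records).items() if dt > threshold}
```

Packets that appear at i but never at O are also worth a look, since they never left the gateway at all.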

I'll update the original post 🙂

---

DNS Conditional Forwarding has hosed things in the past. The joke is it's always DNS.

---

Drops for retransmitted SYN with different window scale, or retransmitted SYN with different sequence number are not problems on the firewall. They are instead symptoms. They happen when the client doesn't get a response to its connection, and it tries again with slightly different settings in case some box in the path didn't like the settings of the original SYN.

---

Oh boy, we had an issue last week where VPN client traffic was severely impacted, to the point of clients not being able to use any internal apps. The firewall was dropping packets with "First packet is not SYN". Hours of troubleshooting (of course nothing had changed on the network) and one SpeedTest later, we discovered that the client had upgraded the ISP link, but the ISP had messed up the shaping on the upstream router, giving the client 100Mb down and 1024Kb up...

---

ooh, let me check the dozens of incident tickets we have in the firewall queue ...

"Firewall is blocking the traffic, allow communication NOW !" ... in fact, the route on the destination is missing...

"Firewall stopped passing traffic from 1.3.2021 00:01" ... in fact, a regularly requested temporary time rule valid until the end of February had expired (requested by the same person who created the ticket)...

"Firewall is blocking specific tcp/udp port" ... in fact, the port is closed on the destination server
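That last case can be checked from a host on the destination's side before anyone touches the rulebase. A minimal Python sketch using only the standard socket module; `tcp_port_state` is a hypothetical helper name and the default timeout is arbitrary. A refused connection usually means the destination answered with a RST because nothing is listening (though a device configured to reject traffic can produce the same result), while a timeout means packets are being silently dropped or lost somewhere along the path.

```python
import errno
import socket


def tcp_port_state(host, port, timeout=3.0):
    """Return 'open', 'refused', 'timeout' or an errno name for a TCP connect."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        try:
            rc = s.connect_ex((host, port))  # returns an errno instead of raising
        except socket.timeout:
            return "timeout"
    if rc == 0:
        return "open"
    if rc == errno.ECONNREFUSED:
        return "refused"   # RST received: typically nothing listening on the port
    if rc in (errno.ETIMEDOUT, errno.EAGAIN, errno.EWOULDBLOCK):
        return "timeout"   # no answer at all within the timeout
    return errno.errorcode.get(rc, str(rc))
```

For example, running `tcp_port_state("10.1.1.20", 443)` from the server's own subnet (address and port are placeholders) takes the firewall out of the path entirely.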

But to blame "us" as well: just because you don't see any logs doesn't mean there is no traffic... the rule just isn't logging 😉

Kind regards,

Jozko Mrkvicka

---

We have a queue full of similar incidents, but the worst ones are about slowness.

They also wanted to disable TCP state checking => it has never helped fix the issue 🙂

---

Lol, that reminds me of something else.

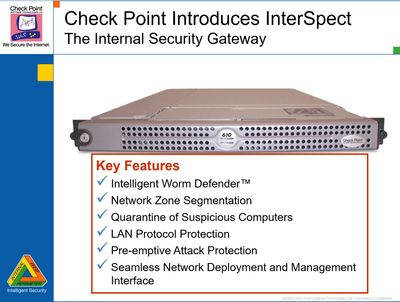

Once upon a time, there was a product called "InterSpect", kind of a FW in bridge mode with an Any-Any-Accept rule, aimed at Threat Prevention only.

I was an EA engineer at the time, and I went to install a box at an Israeli customer. It did not work: when we cut into the link and put the box in between, OSPF would not re-establish. It was weird, but we did some troubleshooting and it seemed the box was at fault.

I took it back and spent two days in the lab trying to reproduce it. Nothing; OSPF ran like clockwork. So I called the guy and said, look man, I suspect there is something with the cables on your end. He went ballistic and hung up on me.

Two days later he called, laughing, saying I was correct. They had found a mouse in the networking cabinet. That poor creature had bitten one of the cables and apparently died; they found it by the smell. As luck would have it, that was the cable going to the patch we used for our box. One of the 8 wires was damaged: the link would come up, but it did not function properly.

---

Great point about deliberately damaging faulty cables; I've done that many times myself. Long after the fact, I heard from someone who wasn't directly involved what happened to the faulty patch panel jack from the patch panel dust-up I posted earlier in this thread. As it turns out, the network team was so spooked that they didn't attempt to recut the bad patch panel cable and punch it down again; they simply took two uncrimped RJ45 connectors with no cable, snipped off almost all of the narrowest part of the locking tabs, and stuck them in the bad jack on both ends. That made it impossible to remove them without sticking some kind of sharp object in to lift the snipped locking tab; hopefully the time it would take someone to do that would make them question why it had been jammed in there like that in the first place. Maybe.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course
Hey @Timothy_Hall, thanks for the great story. I'm surprised that the customer's final response was avoidance rather than simply re-punching the connectors. This probably reveals my naivete about the specifics of data center space, especially a co-lo facility, and the "line of demarcation" for responsibility.

Everyone was pretty traumatized by that point, so I was perfectly fine with that avoidance solution. My assumption was a bad punch-down on the RJ45 biscuits, but I suppose it is also possible that the specific cable run across the room sat in just the wrong spot, in a severe EM hot zone alongside a high-voltage cable for an extended run or something. I also found out later that this customer had all kinds of issues, and even payment disputes, with the contractor who had installed the data center's cable plant and had done shoddy work.

Rather than the line of responsibility, my bet would be on cable bundling. Patch panel cables are often run in bulk and cut to length, and people often use zip ties to secure the bundled wires. You can't just trim an inch off and punch it down again, as there isn't enough slack at the end. Replacing one cable would involve cutting ~100 zip ties or more, fishing the cable out, running a new one, reapplying all the zip ties (or replacing them with velcro), and then punching it down. That is massively more labor than "replacing one cable" sounds like. It's easier to have twice as many patch panel ports punched down as you need in the first place, then deal with failures as they come.

Absolutely makes sense. I had not thought of pre-packaged looms of 20, 50, or 100 cables cut to a specific length. Definitely not something to open up.

I once had random issues with traffic going from a Nokia cluster, with no idea why. I went to site, started with Layer 1, and found a faulty patch lead connected to a faulty patch point. What are the odds of that!

I've done a pair of these in the past when repatching was not an option (difficult access in a high-frequency trading environment): I crimped short pieces of vinyl tube into RJ45s and labeled them "Bad Port".

Haha @_Val_, good memories there. Circa 2004.

Lol, that's the one! Too bad it was put on ice. Micro-segmentation, user access control, compliance checks, internal threat prevention, and some more great ideas...

This reminded me of an old website called routergod.com, where they had a fictional character called Max Throughput - CCIE. He was the private eye you called when things got weird on your network. I found the site on the Wayback Machine and had a good chuckle 🙂

Hah, awesome! Over the years I had several similar issues with customers that ultimately turned out to be a bad cable of some variety. While not the "firewall", I had a customer trying to establish a 4x interface port-channel between a Catalyst and a Nexus switch that just wouldn't come up, no matter what they tried. They banged on it for hours before finally calling me for help. I looked around the config and stats on the Catalyst switch and found that one interface was clocking errors. I told someone to swap the cable on that interface, then BOOM, it came up cleanly! I then told the guy to cut the ends off that cable, cut it into pieces, and throw it in the trash. He laughed at me; I gave him a serious look and said "dude, I'm not kidding; don't put a cable in the trash, cut it." The trash can was beside a filing cabinet that had a pile of cables on it. You can just imagine someone else walking into the room and seeing "oh, this perfectly good cable just fell off the cabinet. I'll be helpful and put it back in the pile...". I think he finally cut the ends off the cable.

Separately, I was working with a customer doing a rip-and-replace of the network gear with a brand-new pile of cables. We found at least 6 cables in the pile of 100 that were factory-new but arrived bad. Yikes!

Lesson learned: Never forget Layer 1 as a suspect. 🙂

I like your "Lesson Learned" to use "ethtool" as evidence for Layer 1 suspicions. Thank you for the reminder!
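As a rough illustration of using `ethtool` as Layer 1 evidence: on Linux-based systems, `ethtool -S <interface>` prints the NIC driver's statistics counters. The Python sketch below parses that kind of output and flags non-zero counters whose names look like CRC or error counters. Counter names are driver-specific, so the keyword filter and the `SAMPLE` text are assumptions made up for illustration, not real output from any particular box.

```python
import re

# Matches "    counter_name: 123" lines as printed by `ethtool -S <iface>`.
# Counter names vary by NIC driver; the SAMPLE below is made up for illustration.
_COUNTER_RE = re.compile(r"^\s*([\w.\-]+):\s*(\d+)\s*$")


def parse_ethtool_stats(text: str) -> dict[str, int]:
    """Turn `ethtool -S` style text into a {counter_name: value} dict."""
    stats = {}
    for line in text.splitlines():
        match = _COUNTER_RE.match(line)
        if match:  # header lines such as "NIC statistics:" do not match
            stats[match.group(1)] = int(match.group(2))
    return stats


def layer1_suspects(stats: dict[str, int], keywords=("crc", "err")) -> dict[str, int]:
    """Return non-zero counters whose names suggest physical-layer trouble.

    The keyword list is an assumption; adjust it to the driver's counter names.
    """
    return {
        name: value
        for name, value in stats.items()
        if value > 0 and any(k in name.lower() for k in keywords)
    }


# Hypothetical output, not captured from a real interface.
SAMPLE = """NIC statistics:
     rx_packets: 1048576
     tx_packets: 998877
     rx_crc_errors: 1375
     rx_length_errors: 0
     tx_errors: 0
"""

if __name__ == "__main__":
    print(layer1_suspects(parse_ethtool_stats(SAMPLE)))
```

These counters typically accumulate until the driver is reloaded or the counters are reset, so a single non-zero value only shows that errors happened at some point. Taking two snapshots a few minutes apart and checking whether the error counters are still climbing is stronger evidence.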

Another great story! How often do you think data center wiring folks actually test patch panel cables BEFORE installation using something more than a simple continuity test? I like the testers that put a load on the cable for long enough that someone can move the cable around in an attempt to uncover a potential problem.

I totally agree; many times I've reverted to the OSI model when troubleshooting, and, more importantly, when installing or replacing equipment I insist on understanding the Layer 1 topology. Another good thing is recon: if possible, always do a site survey and understand device access.

Recently I did an installation where I had a remote resource and we needed to access a device via its console port. We found that someone had installed a patch panel on the rear of the cabinet, blocking access to the power and console ports.

What a good story!

It was quite a strange issue, but it is a fantastic example of how challenging it can sometimes be to prove it is not the firewall. I'll add your conclusion to the original list 😊

Also, I like this moment:

At this point I'm on the brink of an existential crisis and starting to seriously question my knowledge and experience.

Although this is not your case, I find that people often reach that point too soon. Or, at least, there is too much insecurity about just telling the customer or partner that everything is fine on the firewall side. I'm a humble guy in these matters and I like to be as collaborative as I can, but when everything seems to be working fine, I always tell the customer something like "we're going to continue looking for what is happening and we're together with you on this, but at this point I tell you that based on my experience I sincerely doubt that this is a firewall issue, and I strongly recommend that you start looking at other places. I may be wrong, but the sooner you start looking in the right place, the better".

Long ago I worked in network support (IP phones, PCs, firewalls, switches, proxies). A lot of the common issues were CRC errors (direct cable or patch panel).

Sometimes we forget the lowest OSI layer (Layer 1).

Great post!!

Good list! One more to add, similar to your item "remote site blocking traffic":

"Check the remote/destination end routing" to make sure the packets can get back. Over the years I've had several issues with Remote Access VPN (or even site-to-site VPN) that ultimately turned out to be due to the destination end of the connection not having a return route for the VPN Office Mode network.
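The return-route check can be sketched as a longest-prefix match against the destination side's routing table: find the most specific route covering the Office Mode network and confirm it points back toward the VPN gateway. The route list format, addresses, and function names below are hypothetical, and the sketch simplifies by matching the Office Mode network as a whole rather than checking every address individually.

```python
import ipaddress
from typing import Optional

# Hypothetical routing-table format: (destination prefix, next hop).
Route = tuple[str, str]


def best_route(routes: list[Route], dest: str) -> Optional[Route]:
    """Longest-prefix match: the most specific route covering all of `dest`."""
    dest_net = ipaddress.ip_network(dest)
    best = None
    best_len = -1
    for prefix, next_hop in routes:
        net = ipaddress.ip_network(prefix)
        # subnet_of() raises TypeError across IPv4/IPv6, so compare versions first.
        if net.version == dest_net.version and dest_net.subnet_of(net):
            if net.prefixlen > best_len:
                best, best_len = (prefix, next_hop), net.prefixlen
    return best


def returns_via_gateway(routes: list[Route], office_mode_net: str, vpn_gateway: str) -> bool:
    """True if replies to the Office Mode network would be routed to the VPN gateway."""
    match = best_route(routes, office_mode_net)
    return match is not None and match[1] == vpn_gateway


# Hypothetical destination-side table: nothing specific for the Office Mode network.
ROUTES: list[Route] = [
    ("0.0.0.0/0", "203.0.113.1"),
    ("10.1.0.0/16", "10.1.255.254"),
]

if __name__ == "__main__":
    print(returns_via_gateway(ROUTES, "172.16.10.0/24", "10.1.0.1"))
    fixed = ROUTES + [("172.16.10.0/24", "10.1.0.1")]
    print(returns_via_gateway(fixed, "172.16.10.0/24", "10.1.0.1"))
```

In this made-up table, the only route covering 172.16.10.0/24 is the default route toward the Internet router, so replies to Office Mode clients would leave the wrong way; adding a specific route via the gateway makes the check pass.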

©1994-2025 Check Point Software Technologies Ltd. All rights reserved.