
VSX and CoreXL fwk instances

We are running R77.30 VSX with 16 licensed CPU cores and 4 virtual systems. CoreXL was initially configured with 14 fwk instances. We then changed it to 12, rebooted the gateway, and in SmartConsole assigned 3 cores to each VS, so that some CPU cores stay free for the interfaces when load increases.

Current affinity output:

# fw ctl affinity -l -a

eth6: CPU 1

eth7: CPU 1

eth8: CPU 1

eth9: CPU 1

eth0: CPU 1

eth11: CPU 1

eth10: CPU 1

eth5: CPU 1

VS_0 fwk: CPU 0

VS_1 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15

VS_2 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15

VS_3 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15

VS_4 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15

Is this how it should be? I would expect the number of CPU cores assigned to VS_1-4 to be just 12, not 14 (CPU 2-15).

According to Check Point TAC, we should manually define which cores are assigned to the virtual systems. Does anybody have the same experience?


Accepted Solutions


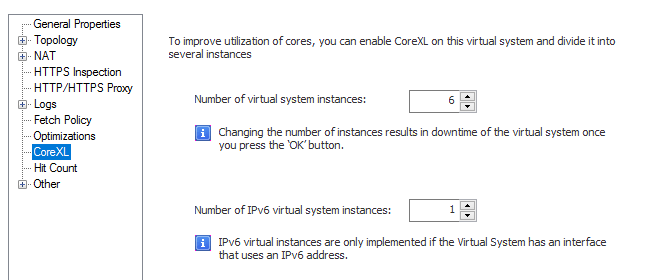

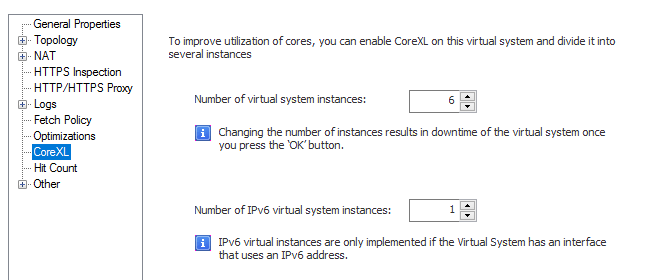

You configure the number of cores for each VS in the gateway object GUI, not with cpconfig (cpconfig only adjusts cores on VS0, and normally I would not enable CoreXL on VS0 at all). For example, the VS in the screenshots is configured with 6 cores.

Then you can use the `fw ctl affinity` and `sim affinity` (for SXL) commands to adjust which cores handle which VS.

Below is a sample from a 20-core VSX system with 7 VSes. Cores 0-3 are reserved for SXL/interfaces, and the rest are assigned according to each VS's requirements. In this case, VS3 and VS6 also have dedicated cores for Identity Awareness (the pdpd and pepd processes on CPU 5 and 6).

fw ctl affinity -l

eth1-01: CPU 1

eth1-02: CPU 2

eth1-03: CPU 3

eth1-04: CPU 3

eth1-06: CPU 0

eth2-01: CPU 2

eth2-02: CPU 3

Mgmt: CPU 1

Sync: CPU 1

VS_0: CPU 4

VS_0 fwk: CPU 4

VS_1: CPU 4

VS_1 fwk: CPU 4

VS_2: CPU 4

VS_2 fwk: CPU 4

VS_3: CPU 7

VS_3 fwk: CPU 7

VS_3 pdpd: CPU 5 6

VS_3 pepd: CPU 5 6

VS_4: CPU 7

VS_4 fwk: CPU 7

VS_5: CPU 4

VS_5 fwk: CPU 4

VS_6: CPU 10 11 12 13 14 15 16 17 18 19

VS_6 fwk: CPU 10 11 12 13 14 15 16 17 18 19

VS_6 pdpd: CPU 5 6

VS_6 pepd: CPU 5 6

VS_7: CPU 7

VS_7 fwk: CPU 7

For the configuration above, you would run these commands:

fw ctl affinity -s -d -vsid 6 -cpu 10-19

fw ctl affinity -s -d -vsid 0 -cpu 4

fw ctl affinity -s -d -vsid 1 -cpu 4

fw ctl affinity -s -d -vsid 2 -cpu 4

fw ctl affinity -s -d -vsid 5 -cpu 4

fw ctl affinity -s -d -vsid 3 -cpu 7

fw ctl affinity -s -d -vsid 4 -cpu 7

fw ctl affinity -s -d -vsid 7 -cpu 7

fw ctl affinity -s -d -pname pdpd -vsid 3 -cpu 5 6

fw ctl affinity -s -d -pname pepd -vsid 3 -cpu 5 6

fw ctl affinity -s -d -pname pdpd -vsid 6 -cpu 5 6

fw ctl affinity -s -d -pname pepd -vsid 6 -cpu 5 6

So you basically have to look at your current CPU consumption and design the layout from there.

Remember, there is no right or wrong answer: it all depends on your environment and requirements. We tend to allocate dedicated cores to "important" VSes and let less important ones share the same cores, so that if something goes terribly wrong on a less important VS, the important ones are not affected at all. Feel free to PM me.
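The affinity commands in this answer can also be generated from a small plan, which makes a layout easier to review before touching the gateway. Below is a minimal dry-run Bash sketch that only prints the commands and applies nothing. The `VS_PLAN`/`PROC_PLAN` arrays and the `print_affinity_commands` function are hypothetical names, and the plan values are copied from the 20-core example; it reproduces the same `fw ctl affinity -s -d` lines, in the same order.

```bash
#!/usr/bin/env bash
# Dry-run sketch: print (do not execute) the `fw ctl affinity -s -d` commands
# for a CPU plan. VS_PLAN, PROC_PLAN and print_affinity_commands are
# hypothetical names; the values mirror the 20-core example in the answer.
set -euo pipefail

# "<vsid>:<cpus>" entries for the VS itself (fwk)
VS_PLAN=(
  "6:10-19"                  # important VS with dedicated cores
  "0:4" "1:4" "2:4" "5:4"    # less important VSes sharing CPU 4
  "3:7" "4:7" "7:7"          # another shared group on CPU 7
)

# "<process>:<vsid>:<cpus>" entries for per-VS daemons (Identity Awareness here)
PROC_PLAN=(
  "pdpd:3:5 6" "pepd:3:5 6"
  "pdpd:6:5 6" "pepd:6:5 6"
)

print_affinity_commands() {
  local entry vsid cpus pname rest
  for entry in "${VS_PLAN[@]}"; do
    vsid="${entry%%:*}"    # text before the first ':'
    cpus="${entry#*:}"     # text after the first ':'
    echo "fw ctl affinity -s -d -vsid ${vsid} -cpu ${cpus}"
  done
  for entry in "${PROC_PLAN[@]}"; do
    pname="${entry%%:*}"   # process name
    rest="${entry#*:}"     # "<vsid>:<cpus>"
    vsid="${rest%%:*}"
    cpus="${rest#*:}"
    echo "fw ctl affinity -s -d -pname ${pname} -vsid ${vsid} -cpu ${cpus}"
  done
}

print_affinity_commands
```

Keeping dedicated and shared groups as separate plan entries mirrors the approach described in the answer: "important" VSes get their own core range, while less important ones share a core. Review the printed list against your own CPU consumption before running anything on the gateway.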

---

Thanks for the explanation.

---

For VSX, you can simply disable CoreXL using cpconfig, as that only pertains to VS0, aka the management VS or host.

CoreXL for a VS is set from SmartConsole, where you set the number of instances you want each VS to run. This is because the VSes are actually user-mode processes rather than kernel processes, which makes this a different approach from a physical gateway. The built-in Linux scheduler checks in real time which core is least loaded and dynamically assigns a core to each process (in this example, the fwk).

This means you can both undersubscribe and oversubscribe, and also apply relative weights per VS (making one VS more important than another).

Hope that helps

Peter !!
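Because a VS's fwk instances are user-mode processes placed by the Linux scheduler, the instance count does not have to match the number of cores. One rough way to see whether a design is under- or oversubscribed is to divide the total instance count by the cores left for the VSes. The Bash sketch below is only that arithmetic, and the `subscription_ratio` function is a hypothetical name. With the original poster's numbers (4 VSes x 3 instances on the 14 cores left after CPU 0 for VS_0 and CPU 1 for the interfaces), the ratio is 12/14, about 0.86, so undersubscribed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: subscription_ratio <cores> <instances for each VS>...
# Prints total fwk instances, available cores and their ratio.
# A ratio above 1.00 means more instances than cores (oversubscribed).
subscription_ratio() {
  local cores=$1
  shift
  local total=0 n
  for n in "$@"; do
    total=$(( total + n ))   # sum instances over all VSes
  done
  # Bash arithmetic is integer-only, so let awk format the ratio
  awk -v t="$total" -v c="$cores" \
    'BEGIN { printf "%d instances / %d cores = %.2f\n", t, c, t / c }'
}

# Original poster: 14 cores left, 4 VSes with 3 instances each
subscription_ratio 14 3 3 3 3   # prints: 12 instances / 14 cores = 0.86
```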

---

My reply was mangled a little; the first sentence should read:

For VSX you can simply disable CoreXL using cpconfig as that only pertains to VS0 aka the management VS or host.

---

First, a question: what do you mean by "We are having R77.30 VSX with 16 licensed CPU cores and 4 virtual systems, initially corexl was was configured with 14 fwk instances and then we change it to 12"?

Do I understand correctly that this is about the number of SXL cores? You have two by default and assigned two more?

After doing that, you need to set up affinity for the NICs and the VSes. In your output, only core 1 is assigned to NICs. The same goes for the VSes: if you do not change the settings manually, all remaining cores are assigned randomly and listed together, as in your output above.

Please refer to the ATRG: CoreXL when adjusting the settings.
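The point that, without manual settings, all remaining cores are listed together can be checked by counting the cores on each `VS_<n> fwk:` line. The Bash/awk sketch below assumes the line format shown in this thread (`VS_1 fwk: CPU 2 3 4 ...`); the `count_fwk_cpus` function is a hypothetical name. Fed the output from the original question, it reports 14 cores for each of VS_1 to VS_4, which appears to be the set of remaining cores (everything except CPU 0 and CPU 1) that the fwk processes may be scheduled on, not the number of instances configured per VS in SmartConsole.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `fw ctl affinity -l -a` output on stdin and print,
# for every "VS_<n> fwk: CPU ..." line, how many cores that VS may run on.
# Interface lines such as "eth6: CPU 1" are ignored.
count_fwk_cpus() {
  awk '
    # Fields: $1="VS_1", $2="fwk:", $3="CPU", $4..$NF = core numbers
    $2 == "fwk:" && $3 == "CPU" { printf "%s %d\n", $1, NF - 3 }
  '
}

# Example: a trimmed copy of the output from the original question
count_fwk_cpus <<'EOF'
eth6: CPU 1
VS_0 fwk: CPU 0
VS_1 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15
VS_2 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15
EOF
# prints:
# VS_0 1
# VS_1 14
# VS_2 14
```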

---

Via cpconfig we had CoreXL configured with 14 instances. It is now disabled completely, the cores are assigned manually via affinity, and the interfaces are in auto mode.

fw ctl affinity -l -a

eth6: CPU 1

eth7: CPU 4

eth8: CPU 2

eth9: CPU 3

eth0: CPU 1

eth1: CPU 5

eth10: CPU 4

eth11: CPU 5

eth2: CPU 3

eth3: CPU 3

eth4: CPU 5

eth5: CPU 2

VS_0 fwk: CPU 0

VS_1 fwk: CPU 6 7 8

VS_2 fwk: CPU 9 10 11

VS_3 fwk: CPU 12 13

VS_4 fwk: CPU 14 15

The current license permits the use of CPUs 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15 only.

---

Hold on. How many cores do you use for SXL? You said 4, right? I can see that cores 4 and 5 are also assigned to NICs. You should only assign NICs to the cores that are bound to SXL.
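To spot interfaces that sit on cores not meant for SXL, you can compare the interface lines of `fw ctl affinity -l -a` against the cores you intend to reserve. In this Bash/awk sketch, `SXL_CORES` is a hypothetical setting (CPUs 0 to 3, assuming the four SXL cores discussed above) and `check_nic_affinity` is a hypothetical name; `VS_...` lines are skipped. Applied to the poster's second output, it would flag eth7 and eth10 (CPU 4) and eth1, eth11 and eth4 (CPU 5).

```bash
#!/usr/bin/env bash
# Hypothetical setting: cores you intend to reserve for SXL/interfaces
SXL_CORES="0 1 2 3"

# Read `fw ctl affinity -l -a` output on stdin and print every interface
# that is bound to a core outside SXL_CORES.
check_nic_affinity() {
  awk -v sxl="$SXL_CORES" '
    BEGIN {
      n = split(sxl, list, " ")
      for (i = 1; i <= n; i++) allowed[list[i]] = 1
    }
    # Interface lines look like "eth7: CPU 4"; skip "VS_..." lines
    $1 ~ /:$/ && $1 !~ /^VS_/ && $2 == "CPU" {
      name = $1
      sub(/:$/, "", name)
      for (i = 3; i <= NF; i++)
        if (!($i in allowed))
          printf "%s is on CPU %s (not an SXL core)\n", name, $i
    }
  '
}

# Example: part of the second output posted in this thread
check_nic_affinity <<'EOF'
eth6: CPU 1
eth7: CPU 4
eth1: CPU 5
VS_1 fwk: CPU 6 7 8
EOF
# prints:
# eth7 is on CPU 4 (not an SXL core)
# eth1 is on CPU 5 (not an SXL core)
```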

---

Question: when Dynamic Split becomes available on VSX (which I'm told is coming), is there still a place for manual affinity?

---

I believe you can choose either, similar to the physical firewall case.

---

Correct. Similar to R80.40, manual affinity will be the default, and Dynamic Split will need to be enabled.

---

Do we have an ETA on this? I appreciate this may not be something you can answer, but I may as well ask.

---

Sure, it is targeted for the upcoming JHFs.


©1994-2025 Check Point Software Technologies Ltd. All rights reserved.
