Understanding load and SecureXL change when changing affinity of CPU cores

Hi,

In an effort to optimize performance on our new R80.30 kernel 3.10 cluster (16-core open server), we changed the core assignment from 8/8 (SND/fw_worker) to 5/11. The sync interface has a dedicated SND core assigned.

Now we do not understand the changes that happened on the system regarding CPU usage and SecureXL.

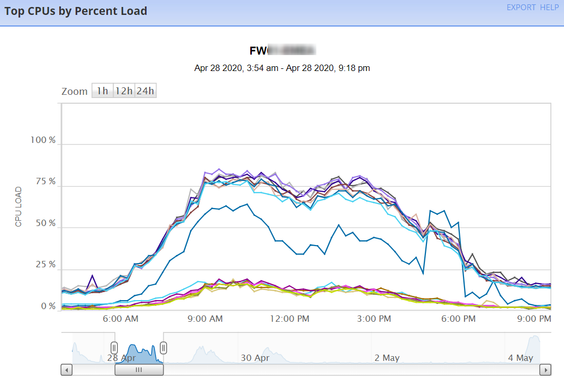

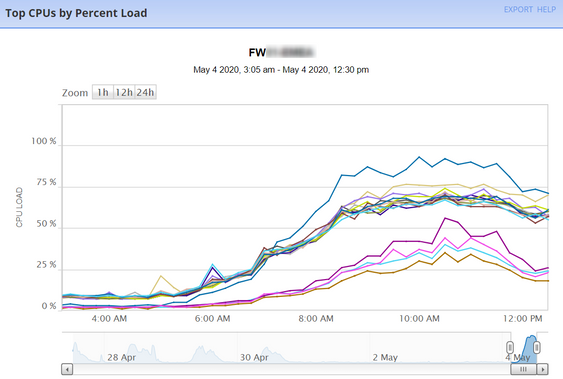

Performance with 8/8 config

CPU #1 - Sync interface - Max 60% CPU

CPU #2,3,4,9,10,11,12 - SNDs - max 20% CPU

CPU #5,6,7,8,13,14,15 - fw_worker - max 80% CPU

SecureXL accelerated conns: 50%

SecureXL accelerated packets: 67%

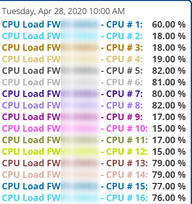

Performance with 5/11 config (core assignment change done two days ago)

CPU #1 - Sync interface - Max 85% CPU

CPU #2,3,4,9,10,11,12 - SNDs - max 50% CPU

CPU #5,6,7,8,13,14,15 - fw_worker - max 70% CPU

SecureXL accelerated conns: 4%

SecureXL accelerated packets: 60%

In general SNDs and fw_worker load changed as expected.

But can anyone explain why I now see a much higher load on the core assigned to the sync interface, and why the share of accelerated connections is now so low?

Maybe there is a connection between the high load on the sync CPU and the low rate of accelerated conns?

Thanks and regards

Thomas

13 Replies


Hi @TomShanti, the scenario does not make sense. Are you sure you did not change the policy along with the core split?

It seems that you have lost most of your templated acceleration. You need to figure out why. This is the main question.

Less templated acceleration may then lead to higher load on sync, especially if you are using delayed sync for some services. That would explain the higher CPU load on the sync interface.

On another subject, are you using an open server that is listed at https://www.checkpoint.com/support-services/hcl/ for 3.10 specifically?


Hi Valeri,

"Are you sure you did not change policy along with the Core split? "

No change in policy

"Less templated acceleration may then lead to higher load on sync"

I expected something like this. From a config standpoint nothing has changed:

[Expert@FWxxxx:0]# fwaccel stat

+-----------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+-----------------------------------------------------------------------------+

|0 |SND |enabled |eth4,eth5,eth6,eth7, |

| | | |eth10,eth11,eth12,eth13, |

| | | |eth8,eth9 |Acceleration,Cryptography |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,NULL,3DES,DES,CAST, |

| | | | |CAST-40,AES-128,AES-256,ESP, |

| | | | |LinkSelection,DynamicVPN, |

| | | | |NatTraversal,AES-XCBC,SHA256 |

+-----------------------------------------------------------------------------+

Accept Templates : enabled

Drop Templates : enabled

NAT Templates : enabled

So the question now is: how do I start troubleshooting?

Regards Thomas

PS: Server is fully HCL compliant - HP DL380 G10


@Timothy_Hall , what's your take on that?

@TomShanti Is it 16 physical cores or 8 with multi-thread?


It is a two socket (CPU) 8 core system.

So 16 physical cores. No Hyper-threading enabled.

Regards Thomas


What you are describing after the split change doesn't make sense, at least not to me. The way your cores are allocated would suggest that you actually do have SMT enabled, but that may just be the way you manually assigned them. Are you licensed for all 16 cores?

Please provide the output of the Super Seven commands along with enabled_blades for analysis.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


Hi Timothy,

as far as I can read lscpu my open server has two physical CPUs with 8 cores each:

[Expert@FW02-EMEA:0]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 16

On-line CPU(s) list: 0-15

Thread(s) per core: 1

Core(s) per socket: 8

Socket(s): 2

NUMA node(s): 4

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) Gold 6134 CPU @ 3.20GHz

Stepping: 4

CPU MHz: 3200.000

BogoMIPS: 6400.00

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 1024K

L3 cache: 25344K

NUMA node0 CPU(s): 0-3

NUMA node1 CPU(s): 4-7

NUMA node2 CPU(s): 8-11

NUMA node3 CPU(s): 12-15

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch epb cat_l3 cdp_l3 invpcid_single intel_pt spec_ctrl ibpb_support tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm mpx rdt_a avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts

So no Hyper-Threading is involved, as far as I can see.
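For anyone who wants to check the same thing on their own box, here is a minimal Python sketch that reads the topology lines from the lscpu output above and derives the physical core count and whether SMT is on. The helper names (`parse_lscpu`, `topology`) are made up for illustration, and the sample text is only an excerpt of the output posted in this thread.

```python
# Excerpt of the lscpu output posted above (topology lines only).
LSCPU = """\
CPU(s): 16
On-line CPU(s) list: 0-15
Thread(s) per core: 1
Core(s) per socket: 8
Socket(s): 2
NUMA node(s): 4
NUMA node0 CPU(s): 0-3
NUMA node1 CPU(s): 4-7
NUMA node2 CPU(s): 8-11
NUMA node3 CPU(s): 12-15
"""


def parse_lscpu(text):
    """Return the 'key: value' pairs of lscpu output as a dict."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def topology(fields):
    """Derive core counts and SMT state from the parsed lscpu fields."""
    threads = int(fields["Thread(s) per core"])
    cores = int(fields["Core(s) per socket"])
    sockets = int(fields["Socket(s)"])
    return {
        "physical_cores": cores * sockets,
        "logical_cpus": int(fields["CPU(s)"]),
        "smt_enabled": threads > 1,
    }


info = topology(parse_lscpu(LSCPU))
print(info)
```

With one thread per core, the logical CPU count equals the physical core count, which is consistent with Hyper-Threading being off on this server.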

cmd1

[Expert@FWxxxx:0]# fwaccel stat

+-----------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+-----------------------------------------------------------------------------+

|0 |SND |enabled |eth4,eth5,eth6,eth7, |

| | | |eth10,eth11,eth12,eth13, |

| | | |eth8,eth9 |Acceleration,Cryptography |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,NULL,3DES,DES,CAST, |

| | | | |CAST-40,AES-128,AES-256,ESP, |

| | | | |LinkSelection,DynamicVPN, |

| | | | |NatTraversal,AES-XCBC,SHA256 |

+-----------------------------------------------------------------------------+

Accept Templates : enabled

Drop Templates : enabled

NAT Templates : enabled

cmd2

[Expert@FWxxxxx:0]# fwaccel stats -s

Accelerated conns/Total conns : 10138/205848 (4%)

Accelerated pkts/Total pkts : 26047385330/42303022874 (61%)

F2Fed pkts/Total pkts : 270320445/42303022874 (0%)

F2V pkts/Total pkts : 190564992/42303022874 (0%)

CPASXL pkts/Total pkts : 0/42303022874 (0%)

PSLXL pkts/Total pkts : 15985317099/42303022874 (37%)

QOS inbound pkts/Total pkts : 0/42303022874 (0%)

QOS outbound pkts/Total pkts : 0/42303022874 (0%)

Corrected pkts/Total pkts : 0/42303022874 (0%)
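As a side note, the whole-number percentages in this output hide some detail. The following Python sketch recomputes them from the raw counters pasted above. The function names are hypothetical, and the observation that the Accelerated, F2Fed, CPASXL and PSLXL packet counters add up to the total is only something seen in this one sample, not a documented guarantee.

```python
import re

# Counters copied from the fwaccel stats -s output above.
FWACCEL_STATS = """\
Accelerated conns/Total conns : 10138/205848 (4%)
Accelerated pkts/Total pkts : 26047385330/42303022874 (61%)
F2Fed pkts/Total pkts : 270320445/42303022874 (0%)
F2V pkts/Total pkts : 190564992/42303022874 (0%)
CPASXL pkts/Total pkts : 0/42303022874 (0%)
PSLXL pkts/Total pkts : 15985317099/42303022874 (37%)
"""

# Matches e.g. "Accelerated conns/Total conns : 10138/205848 (4%)".
LINE = re.compile(r"^(?P<name>.+?)/Total \w+\s*:\s*(?P<part>\d+)/(?P<total>\d+)")


def parse_stats(text):
    """Map each counter name to a (part, total) tuple of integers."""
    stats = {}
    for line in text.splitlines():
        m = LINE.match(line)
        if m:
            stats[m["name"].strip()] = (int(m["part"]), int(m["total"]))
    return stats


def percent(part, total):
    """Percentage as a float, guarding against a zero total."""
    return 100.0 * part / total if total else 0.0


stats = parse_stats(FWACCEL_STATS)
conns_pct = percent(*stats["Accelerated conns"])
pkts_pct = percent(*stats["Accelerated pkts"])

# In this sample, these four packet paths add up to the total exactly.
paths = ["Accelerated pkts", "F2Fed pkts", "CPASXL pkts", "PSLXL pkts"]
path_sum = sum(stats[name][0] for name in paths)

print(f"accelerated conns: {conns_pct:.2f}%")
print(f"accelerated pkts:  {pkts_pct:.2f}%")
print("paths sum to total:", path_sum == stats["Accelerated pkts"][1])
```

Recomputed this way, the accelerated connection rate is just under 5% rather than 4%, so the displayed value appears to be truncated rather than rounded.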

cmd3

[Expert@FWxxxxx:0]# grep -c ^processor /proc/cpuinfo

16

cmd4

[Expert@FWxxxxx:0]# fw ctl affinity -l -r

CPU 0: eth7

CPU 1:

CPU 2:

CPU 3: fw_9

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 4: fw_7

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 5: fw_5

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 6: fw_3

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 7: fw_1

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 8:

CPU 9:

CPU 10: fw_10

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 11: fw_8

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 12: fw_6

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 13: fw_4

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 14: fw_2

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

CPU 15: fw_0

mpdaemon fwd pdpd lpd pepd in.acapd dtlsd vpnd in.asessiond rtmd dtpsd cprid cpd

All:

Interface eth4: has multi queue enabled

Interface eth5: has multi queue enabled

Interface eth6: has multi queue enabled

Interface eth10: has multi queue enabled

Interface eth11: has multi queue enabled

Interface eth12: has multi queue enabled

Interface eth13: has multi queue enabled

Interface eth8: has multi queue enabled

Interface eth9: has multi queue enabled
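To double-check that the listing above really reflects a 5/11 split, here is a rough Python sketch that sorts the CPUs into those running an fw_N worker instance and those that are not. Only the "CPU n:" lines are copied from the output (the daemon lines are left out), the function name is made up, and treating every core without an fw_N instance as an SND core is an assumption on my side.

```python
import re

# "CPU n:" lines from the fw ctl affinity -l -r output above (daemon lines omitted).
AFFINITY = """\
CPU 0: eth7
CPU 1:
CPU 2:
CPU 3: fw_9
CPU 4: fw_7
CPU 5: fw_5
CPU 6: fw_3
CPU 7: fw_1
CPU 8:
CPU 9:
CPU 10: fw_10
CPU 11: fw_8
CPU 12: fw_6
CPU 13: fw_4
CPU 14: fw_2
CPU 15: fw_0
"""

CPU_LINE = re.compile(r"^CPU (\d+):\s*(.*)$")


def split_cores(text):
    """Return (worker_cpus, other_cpus) based on the presence of fw_N entries."""
    workers, others = [], []
    for line in text.splitlines():
        m = CPU_LINE.match(line)
        if not m:
            continue
        cpu, entries = int(m.group(1)), m.group(2).split()
        if any(entry.startswith("fw_") for entry in entries):
            workers.append(cpu)
        else:
            # Assumption: cores without a firewall instance act as SND cores.
            others.append(cpu)
    return workers, others


workers, others = split_cores(AFFINITY)
print("fw_worker cores:", workers)
print("remaining cores:", others)
```

On this output the sketch finds 11 worker cores and 5 remaining cores (0, 1, 2, 8, 9), which lines up with the 5/11 split described in the first post.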

cmd5

[Expert@FWxxxxx:0]# netstat -ni

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

eth4 1500 0 2434625 0 25534 0 2287137 0 0 0 BMRU

eth4.3xxx 1500 0 2399507 0 9 0 2287450 0 0 0 BMRU

eth5 1500 0 8762159 0 6381 0 4964024 0 0 0 BMRU

eth5.3xxx 1500 0 1305085 0 0 0 792359 0 0 0 BMRU

eth5.3xxx 1500 0 401611 0 0 0 1489 0 0 0 BMRU

eth5.3xxx 1500 0 3156978 0 0 0 1743269 0 0 0 BMRU

eth5.3xxx 1500 0 273200 0 0 0 43901 0 0 0 BMRU

eth5.3xxx 1500 0 261594 0 0 0 12321 0 0 0 BMRU

eth5.3xxx 1500 0 138487 0 0 0 68 0 0 0 BMRU

eth5.3xxx 1500 0 181043 0 0 0 1027 0 0 0 BMRU

eth5.3xxx 1500 0 138489 0 0 0 3105 0 0 0 BMRU

eth5.3xxx 1500 0 2773936 0 77 0 2366561 0 0 0 BMRU

eth6 1500 0 911627 11 6380 0 532714 0 0 0 BMRU

eth6.4xx 1500 0 627401 0 0 0 532734 0 0 0 BMRU

eth7 1500 0 78849528 0 0 0 26406053 0 0 0 BMRU

eth8 1500 0 32826906 0 6381 0 6552656 0 0 0 BMRU

eth8.3xxx 1500 0 11505957 0 39 0 2161112 0 0 0 BMRU

eth8.3xxx 1500 0 14825428 0 267 0 1597151 0 0 0 BMRU

eth8.3xxx 1500 0 354828 0 9 0 2768 0 0 0 BMRU

eth8.3xxx 1500 0 1255742 0 0 0 1844956 0 0 0 BMRU

eth8.3xxx 1500 0 102552 0 0 0 1859 0 0 0 BMRU

eth8.3xxx 1500 0 295471 0 195 0 192740 0 0 0 BMRU

eth8.3xxx 1500 0 239986 0 39 0 95922 0 0 0 BMRU

eth8.3xxx 1500 0 99658 0 0 0 67 0 0 0 BMRU

eth8.3xxx 1500 0 220546 0 0 0 134983 0 0 0 BMRU

eth8.3xxx 1500 0 625625 0 0 0 521212 0 0 0 BMRU

eth9 1500 0 172080171 0 6381 0 180826059 0 0 0 BMRU

eth9.xx 1500 0 171085697 0 316 0 180126064 0 0 0 BMRU

eth9.3xxx 1500 0 960794 0 636 0 701633 0 0 0 BMRU

eth10 1500 0 90596096 0 6377 0 103516618 0 0 0 BMRU

eth10.3xxx 1500 0 90590353 0 0 0 103516974 0 0 0 BMRU

eth11 1500 0 426353169 0 6443 0 236835747 0 0 0 BMRU

eth11.3xxx 1500 0 55688095 0 0 0 49306146 0 0 0 BMRU

eth11.3xxx 1500 0 15976048 0 54 0 238769 0 0 0 BMRU

eth11.3xxx 1500 0 108562 0 0 0 9736 0 0 0 BMRU

eth11.3xxx 1500 0 333089 0 81 0 241127 0 0 0 BMRU

eth11.3xxx 1500 0 100907 0 0 0 68 0 0 0 BMRU

eth11.3xxx 1500 0 101509 0 0 0 79 0 0 0 BMRU

eth11.3xxx 1500 0 104033 0 0 0 2413 0 0 0 BMRU

eth11.3xxx 1500 0 168608908 0 4911 0 37264907 0 0 0 BMRU

eth11.3xxx 1500 0 7375167 0 292 0 6573741 0 0 0 BMRU

eth11.3xxx 1500 0 1836349 0 36 0 1356875 0 0 0 BMRU

eth11.3xxx 1500 0 99925 0 0 0 468 0 0 0 BMRU

eth11.3xxx 1500 0 141180 0 0 0 27447 0 0 0 BMRU

eth11.3xxx 1500 0 191290 0 102 0 18962 0 0 0 BMRU

eth11.3xxx 1500 0 137456 0 24 0 2362 0 0 0 BMRU

eth11.3xxx 1500 0 100906 0 0 0 67 0 0 0 BMRU

eth11.3xxx 1500 0 89786516 0 0 0 85385196 0 0 0 BMRU

eth11.3xxx 1500 0 191863 0 0 0 90419 0 0 0 BMRU

eth11.3xxx 1500 0 487745 0 0 0 4309 0 0 0 BMRU

eth11.3xxx 1500 0 123773 0 0 0 21426 0 0 0 BMRU

eth11.3xxx 1500 0 57946462 0 0 0 35966200 0 0 0 BMRU

eth11.3xxx 1500 0 2433490 0 628 0 2023382 0 0 0 BMRU

eth11.3xxx 1500 0 17242904 0 94 0 16257126 0 0 0 BMRU

eth11.3xxx 1500 0 1659699 0 56 0 1533958 0 0 0 BMRU

eth11.3xxx 1500 0 624714 0 38 0 511067 0 0 0 BMRU

eth12 1500 0 12243819 0 6380 0 163756701 0 0 0 BMRU

eth12.3xxx 1500 0 12015918 0 36 0 163756746 0 0 0 BMRU

eth13 1500 0 824428 0 6386 0 530262 0 0 0 BMRU

eth13.3xxx 1500 0 787882 0 0 0 530302 0 0 0 BMRU

lo 65536 0 286089 0 0 0 286089 0 0 0 LMPORU
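Since the raw counters are hard to compare by eye, here is a small Python sketch that turns the physical interface rows above into an RX-DRP percentage per interface. The VLAN sub-interfaces are left out, the function name is hypothetical, and dividing RX-DRP by RX-OK is my assumption about how the drop rate is usually judged.

```python
# Physical interface rows copied from the netstat -ni output above.
# Columns: Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg
NETSTAT = """\
eth4 1500 0 2434625 0 25534 0 2287137 0 0 0 BMRU
eth5 1500 0 8762159 0 6381 0 4964024 0 0 0 BMRU
eth6 1500 0 911627 11 6380 0 532714 0 0 0 BMRU
eth7 1500 0 78849528 0 0 0 26406053 0 0 0 BMRU
eth8 1500 0 32826906 0 6381 0 6552656 0 0 0 BMRU
eth9 1500 0 172080171 0 6381 0 180826059 0 0 0 BMRU
eth10 1500 0 90596096 0 6377 0 103516618 0 0 0 BMRU
eth11 1500 0 426353169 0 6443 0 236835747 0 0 0 BMRU
eth12 1500 0 12243819 0 6380 0 163756701 0 0 0 BMRU
eth13 1500 0 824428 0 6386 0 530262 0 0 0 BMRU
"""


def rx_drop_percent(text):
    """Return {interface: RX-DRP as a percentage of RX-OK}."""
    rates = {}
    for line in text.splitlines():
        cols = line.split()
        # Skip headers and anything that does not look like a counter row.
        if len(cols) < 6 or not cols[3].isdigit():
            continue
        rx_ok, rx_drp = int(cols[3]), int(cols[5])
        rates[cols[0]] = 100.0 * rx_drp / rx_ok if rx_ok else 0.0
    return rates


rates = rx_drop_percent(NETSTAT)
worst = max(rates, key=rates.get)
for iface, pct in sorted(rates.items(), key=lambda item: -item[1]):
    print(f"{iface:6s} {pct:.3f}%")
```

In this sample eth4 has the highest drop rate of all physical interfaces, while eth7 shows no drops at all.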

cmd6

[Expert@FWxxxxx:0]# fw ctl multik stat

I will provide this when system is under load tomorrow

cmd7

cpstat os -f multi_cpu -o 1

I will provide this when system is under load tomorrow

[Expert@FWxxxxx:0]# enabled_blades

fw vpn ips identityServer mon vpn

We currently suspect IPS signature updates as a root cause.

This was the only "policy" change applied after core assignment change.

Thanks and regards

Thomas


Is eth7 both your sync and management interface? A little odd but shouldn't cause a problem.

Everything seems to look more or less OK other than 1% RX-DRP on eth4.

It is much more likely that IPS is the cause of the low templating rate. To test, run ips off, then fwaccel stats -r, wait 5 minutes, and check the statistics again with fwaccel stats -s. Then run ips on again, of course.


Hi Timothy,

Disabling IPS increases accelerated conns to approx 30% (better but still far away from the 50% before our change).

When digging through the fwaccel commands I realized the following:

[Expert@FWxxxxx:0]# fwaccel stats -s

Accelerated conns/Total conns : 45552/184569 (24%)

[Expert@FWxxxxx:0]# fwaccel conns -s

Total number of connections: 178628

This looks like a mismatch to me?

Regards Thomas


Update:

So the high load on the "sync" interface core (Core 0) turned out to be neither a sync traffic issue nor a SecureXL issue.

We even turned off the sync interface, yet the load (SI) on core 0 was still nearly 100%.

After a lot of investigation we finally found out that there is a bug that causes many VPN client connections to accumulate on one SND core even though other SND cores are available:

We have now installed the Jumbo, but it seems to improve the issue only slightly.

Regards Thomas


Thanks for the update, I hadn't heard of that one. I wouldn't worry too much about the different connection numbers between separate SecureXL commands.


Just a note on the SK: it states that the HF is included in the Jumbo Hotfix Accumulator for R80.30 since Take 195, which is actually wrong. We had to additionally install the two dedicated HFs on top of JHF195.

Regards Thomas


Hi,

We are checking this issue and will update.

Thanks,

Yifat Chen


Hi again,

The fix exists in Jumbo Take 195, but only for kernel 2.6.18.

The same fix for kernel 3.10 will be part of our next ongoing take.

The SK will be updated accordingly.

Thanks.

Yifat Chen

Release Manager
