New Core Switch - Failure

Hey there,

We are running an old Cisco Nexus 5000 (N5K) switch stack: Check Point 15000 <> N5K switches <> N5K switches <> Check Point 15000.

The 15000s are running VSX.

This worked pretty well.

This year we got new Cisco Nexus 9000 (N9K) switches, and today we tried to move over to them.

Moving the first firewall was no big issue: we connected it to the N9K, it reconnected with the other firewall, and the two could see each other. We then moved the active role from the firewall connected to the N5K switches to the firewall connected to the N9K switches.

And everything was working.

But when we move the firewall that is still connected to the N5K over to the N9K, the members start cycling through active, standby, and down. As soon as firewall 1 has done this, firewall 2 goes active, standby, down, and so on.

So the network works for 3-4 minutes, then there is high latency, then it works for another 3-4 minutes, rinse and repeat.

Has anyone seen this before?

//Niklas

1 Solution

Accepted Solutions

After talking to Support, it is now verified that there is an issue when running Cisco vPC with the Check Point cluster in Multicast mode; switching to Broadcast made it work better.

19 Replies

Sounds like port security is biting you; disable all port security on the ports going to the two firewalls.

As soon as any VS moves from one box to the other, some switches see this as illegal; the same happens with VMware switches.

I have never dealt with the 9000s myself, but this is where I would look for the cause.

Also check how clustering is set: Multicast, Broadcast, or Unicast (R80.20 and up).

"cphaprob -a if" will tell you which is used.

Regards, Maarten

description --Checkpoint-Core-FW--

switchport

switchport mode trunk

switchport trunk allowed vlan 2,4,8,12,14,16,20,24-25,32,44,48,52,60,71-72,84,88,92,96,104-105,108,112,201,204-205,701-705,1000,1500

mtu 9216

channel-group 3 mode active

no shutdown

Channel Group 3

description --Checkpoint-Core-FW--

switchport

switchport mode trunk

switchport trunk allowed vlan 2,4,8,12,14,16,20,24-25,32,44,48,52,60,71-72,84,88,92,96,104-105,108,112,201,204-205,701-705,1000,1500

mtu 9216

vpc 3

This is the trunk port configuration for the Check Point. There are no real security features on the interface (it is the same config our current VMware environment is running).

MOSJFDC01:0> cphaprob -a if

vsid 0:

------

Required interfaces: 2

Required secured interfaces: 1

Mgmt UP non sync(non secured), multicast

bond0 UP sync(secured), broadcast, bond High Availability -

Virtual cluster interfaces: 1

Mgmt 10.0.2.107

Looks like it's broadcast.
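For anyone who wants to check the mode on several members or virtual systems at once, here is a minimal Python sketch that pulls the interface state and cluster mode out of "cphaprob -a if" text. It assumes the line shape seen in the output pasted above (interface name, UP or DOWN, then comma-separated fields); other versions may print a different layout, and the function name ccp_modes is made up for illustration. Note that in the pasted output Mgmt reports multicast while bond0 reports broadcast.

```python
import re

# Sample text copied from the "cphaprob -a if" output pasted in this thread.
SAMPLE = """\
vsid 0:
------
Required interfaces: 2
Required secured interfaces: 1
Mgmt UP non sync(non secured), multicast
bond0 UP sync(secured), broadcast, bond High Availability -
Virtual cluster interfaces: 1
Mgmt 10.0.2.107
"""

# Assumed line shape: "<ifname> <UP|DOWN> <role>, <mode>[, more fields]".
IF_LINE = re.compile(r"^(?P<name>\S+)\s+(?P<state>UP|DOWN)\s+(?P<rest>.+)$")
MODES = ("multicast", "broadcast", "unicast")


def ccp_modes(text):
    """Return {interface: (state, mode)} for every interface status line."""
    result = {}
    for line in text.splitlines():
        match = IF_LINE.match(line.strip())
        if not match:
            continue  # headers, counters, and the virtual IP list are skipped
        fields = [f.strip() for f in match.group("rest").split(",")]
        mode = next((f for f in fields if f in MODES), None)
        result[match.group("name")] = (match.group("state"), mode)
    return result


if __name__ == "__main__":
    for name, (state, mode) in ccp_modes(SAMPLE).items():
        print(f"{name}: {state}, mode {mode}")
```

On a live member you would feed it the captured command output instead of SAMPLE.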

How are you? First of all, please tell us which version and JHF you are running on your 15k cluster.

How are the sync interfaces connected? Back to back (directly between appliances) or via an L2 device?

Please refer to /var/log/messages to see why the cluster is flapping; that will give us a hint to point you in the right direction.

The best temporary solution would be to leave one of the members "off" with cphastop or cpstop until you can sort this out, so you avoid the flapping.

____________

https://www.linkedin.com/in/federicomeiners/

This is Check Point CPinfo Build 914000191 for GAIA

[IDA]

HOTFIX_R80_10

[CPFC]

HOTFIX_R80_10

HOTFIX_R80_10_JUMBO_HF Take: 189

[FW1]

HOTFIX_R80_10

HOTFIX_R80_10_JUMBO_HF Take: 189

FW1 build number:

This is Check Point's software version R80.10 - Build 161

kernel: R80.10 - Build 132

[SecurePlatform]

HOTFIX_R80_10_JUMBO_HF Take: 189

[CPinfo]

No hotfixes..

[PPACK]

HOTFIX_R80_10

HOTFIX_R80_10_JUMBO_HF Take: 189

[DIAG]

HOTFIX_R80_10

[CVPN]

HOTFIX_R80_10

HOTFIX_R80_10_JUMBO_HF Take: 189

[CPUpdates]

BUNDLE_R80_10_JUMBO_HF Take: 189

The sync also goes via an L2 device.

Oct 1 08:37:27 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:37:27 2019 MOSJFDC01 last message repeated 3 times

Oct 1 08:37:31 2019 MOSJFDC01 clish[8150]: cmd in VS0 by admin: Start executing : exit (cmd md5: f24f62eeb789199b9b2e467df3b1876b)

Oct 1 08:37:31 2019 MOSJFDC01 xpand[24523]: admin localhost t -volatile:clish:admin:8150

Oct 1 08:37:31 2019 MOSJFDC01 clish[8150]: User admin logged out from CLI shell

Oct 1 08:37:35 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:37:35 2019 MOSJFDC01 last message repeated 6 times

Oct 1 08:37:52 2019 MOSJFDC01 kernel: printk: 5 messages suppressed.

Oct 1 08:37:52 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:37:52 2019 MOSJFDC01 last message repeated 3 times

Oct 1 08:38:11 2019 MOSJFDC01 kernel: printk: 2 messages suppressed.

Oct 1 08:38:11 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:38:11 2019 MOSJFDC01 last message repeated 2 times

Oct 1 08:38:45 2019 MOSJFDC01 kernel: printk: 1 messages suppressed.

Oct 1 08:38:45 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:40:13 2019 MOSJFDC01 last message repeated 4 times

Oct 1 08:41:16 2019 MOSJFDC01 last message repeated 14 times

Oct 1 08:42:22 2019 MOSJFDC01 last message repeated 18 times

Oct 1 08:42:34 2019 MOSJFDC01 last message repeated 5 times

Oct 1 08:42:42 2019 MOSJFDC01 kernel: printk: 4 messages suppressed.

Oct 1 08:42:42 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:42:46 2019 MOSJFDC01 kernel: printk: 1 messages suppressed.

Oct 1 08:42:46 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:43:14 2019 MOSJFDC01 kernel: printk: 1 messages suppressed.

Oct 1 08:43:14 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:43:18 2019 MOSJFDC01 last message repeated 5 times

Oct 1 08:43:35 2019 MOSJFDC01 kernel: printk: 2 messages suppressed.

Oct 1 08:43:35 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:44:09 2019 MOSJFDC01 last message repeated 2 times

Oct 1 08:44:09 2019 MOSJFDC01 last message repeated 8 times

Oct 1 08:44:13 2019 MOSJFDC01 kernel: printk: 343 messages suppressed.

Oct 1 08:44:13 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Oct 1 08:44:18 2019 MOSJFDC01 kernel: printk: 347 messages suppressed.

Oct 1 08:44:18 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address

Right now the uplinks on bond0 are shut down.
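To get a feel for how often the "received packet with own address as source address" message shows up, a rough Python sketch like the one below can tally it per interface. It assumes the syslog layout shown in the excerpt above, treats "last message repeated N times" as N more copies of the preceding message, and ignores the "printk: N messages suppressed" lines because their content is unknown, so the result is a lower bound. The function name count_own_address is hypothetical.

```python
import re
from collections import Counter

# A few lines copied from the /var/log/messages excerpt in this thread.
SAMPLE = """\
Oct 1 08:37:27 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address
Oct 1 08:37:27 2019 MOSJFDC01 last message repeated 3 times
Oct 1 08:37:31 2019 MOSJFDC01 clish[8150]: User admin logged out from CLI shell
Oct 1 08:37:35 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address
Oct 1 08:37:35 2019 MOSJFDC01 last message repeated 6 times
Oct 1 08:37:52 2019 MOSJFDC01 kernel: printk: 5 messages suppressed.
Oct 1 08:37:52 2019 MOSJFDC01 kernel: wrpj192: received packet with own address as source address
"""

OWN_ADDR = re.compile(
    r"kernel: (?P<iface>\S+): received packet with own address as source address"
)
REPEATED = re.compile(r"last message repeated (?P<n>\d+) times")


def count_own_address(lines):
    """Count own-address messages per interface, including syslog repeats."""
    counts = Counter()
    last_iface = None  # interface of the most recent real message, if it matched
    for line in lines:
        repeat = REPEATED.search(line)
        if repeat:
            # A repeat line refers to the previous real message.
            if last_iface is not None:
                counts[last_iface] += int(repeat.group("n"))
            continue
        match = OWN_ADDR.search(line)
        last_iface = match.group("iface") if match else None
        if last_iface is not None:
            counts[last_iface] += 1
    return counts


if __name__ == "__main__":
    for iface, total in count_own_address(SAMPLE.splitlines()).items():
        print(f"{iface}: at least {total} own-address messages")
```

Running the same function over the full log file, split into lines, would show whether the messages cluster around the failover times.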

- Have you set a different MTU on the Check Point side? By default it's 1500.

- Please share the LACP/bond configuration from both ends; it is one of the possible causes for the bond to flap.

The "received packet with own address as source address" message is interesting, and there are plenty of them. Do you have any loops by chance?

____________

https://www.linkedin.com/in/federicomeiners/

Yes, the MTU is raised to above 9100.

Can you specify which part of the config you want?

I am pretty green on Check Point commands 😀

On the Cisco side, only the vpc command relates to LACP.

I have looked, and no switch is reporting a loop; spanning tree would have stepped in and shut down that interface/VLAN on the switch side.

If I remember correctly, Nexus vPCs follow the 802.3ad protocol. Please check the settings of the bond interface via the web interface (Interfaces section) or by using the show bonding group <Bond Group ID> command.

Based on your cphaprob -a if output, I guess that you only have one bond (bond1). Make sure that you have 802.3ad selected on the Check Point side.

Would you mind also sharing how your cables are connected? I'm guessing that you have 2 links for each firewall.

____________

https://www.linkedin.com/in/federicomeiners/

This is from the one that is running right now:

vPC Status: Up, vPC number: 3

Hardware: Port-Channel, address: 003a.9c07.358a (bia 003a.9c07.358a)

Description: --Checkpoint-Core-FW--

MTU 9216 bytes, BW 20000000 Kbit, DLY 10 usec

reliability 255/255, txload 1/255, rxload 1/255

Encapsulation ARPA, medium is broadcast

Port mode is trunk

full-duplex, 10 Gb/s

Input flow-control is off, output flow-control is off

Auto-mdix is turned off

Switchport monitor is off

EtherType is 0x8100

Members in this channel: Eth1/35, Eth1/36

Last clearing of "show interface" counters never

1 interface resets

30 seconds input rate 39071656 bits/sec, 8400 packets/sec

30 seconds output rate 25682112 bits/sec, 5314 packets/sec

Load-Interval #2: 5 minute (300 seconds)

input rate 36.56 Mbps, 8.29 Kpps; output rate 26.32 Mbps, 5.34 Kpps

RX

727912665 unicast packets 2097043 multicast packets 7819470 broadcast packets

737829178 input packets 463063288792 bytes

18855496 jumbo packets 0 storm suppression bytes

0 runts 0 giants 0 CRC 0 no buffer

0 input error 0 short frame 0 overrun 0 underrun 0 ignored

0 watchdog 0 bad etype drop 0 bad proto drop 0 if down drop

0 input with dribble 0 input discard

0 Rx pause

TX

392510538 unicast packets 168511 multicast packets 325945 broadcast packets

393004994 output packets 246338324965 bytes

17212302 jumbo packets

0 output error 0 collision 0 deferred 0 late collision

0 lost carrier 0 no carrier 0 babble 0 output discard

0 Tx pause

This is from the one that didn't work:

show interface port-channel 3

port-channel3 is down (No operational members)

admin state is up,

vPC Status: Down, vPC number: 3 [packets forwarded via vPC peer-link]

Hardware: Port-Channel, address: 0000.0000.0000 (bia 0000.0000.0000)

Description: --Checkpoint-Core-FW--

MTU 9216 bytes, BW 100000 Kbit, DLY 10 usec

reliability 255/255, txload 1/255, rxload 1/255

Encapsulation ARPA, medium is broadcast

Port mode is trunk

auto-duplex, 10 Gb/s

Input flow-control is off, output flow-control is off

Auto-mdix is turned off

Switchport monitor is off

EtherType is 0x8100

Members in this channel: Eth1/35, Eth1/36

Last clearing of "show interface" counters never

2 interface resets

30 seconds input rate 0 bits/sec, 0 packets/sec

30 seconds output rate 0 bits/sec, 0 packets/sec

Load-Interval #2: 5 minute (300 seconds)

input rate 0 bps, 0 pps; output rate 0 bps, 0 pps

RX

5084758 unicast packets 25079 multicast packets 10408 broadcast packets

5120245 input packets 2480425365 bytes

234574 jumbo packets 0 storm suppression bytes

0 runts 0 giants 0 CRC 0 no buffer

0 input error 0 short frame 0 overrun 0 underrun 0 ignored

0 watchdog 0 bad etype drop 0 bad proto drop 0 if down drop

0 input with dribble 0 input discard

0 Rx pause

TX

10313466 unicast packets 44129 multicast packets 463063 broadcast packets

10820658 output packets 5424750078 bytes

107134 jumbo packets

0 output error 0 collision 0 deferred 0 late collision

0 lost carrier 0 no carrier 0 babble 0 output discard

0 Tx pause
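The two outputs above differ mainly in the port-channel state, the vPC status, and the hardware address. As a sketch only, the following Python snippet extracts those status fields from "show interface port-channel" text so that both switches can be compared side by side. It assumes the line wording shown in the pasted output; the function name summarize_port_channel is made up for illustration.

```python
import re

# Status lines copied from the failing port-channel output in this thread.
FAILED = """\
port-channel3 is down (No operational members)
admin state is up,
vPC Status: Down, vPC number: 3 [packets forwarded via vPC peer-link]
Hardware: Port-Channel, address: 0000.0000.0000 (bia 0000.0000.0000)
Members in this channel: Eth1/35, Eth1/36
"""

LINK = re.compile(r"^(port-channel\d+) is (up|down)\b(?:\s*\((.*)\))?")
VPC = re.compile(r"^vPC Status: (\w+), vPC number: (\d+)")
MEMBERS = re.compile(r"^Members in this channel: (.+)$")


def summarize_port_channel(text):
    """Collect link state, vPC status, and member ports from the output."""
    summary = {"link": None, "vpc_status": None, "vpc_number": None, "members": []}
    for raw in text.splitlines():
        line = raw.strip()
        match = LINK.match(line)
        if match:
            # (name, state, reason in parentheses or None)
            summary["link"] = match.groups()
            continue
        match = VPC.match(line)
        if match:
            summary["vpc_status"] = match.group(1)
            summary["vpc_number"] = int(match.group(2))
            continue
        match = MEMBERS.match(line)
        if match:
            summary["members"] = [p.strip() for p in match.group(1).split(",")]
    return summary


if __name__ == "__main__":
    print(summarize_port_channel(FAILED))
```

Feeding it the output from the working switch as well makes it easy to see that only the failing side reports the vPC as Down with no operational members.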

The setup is:

15000 Check Point

4x10 Gb

running to a Nexus vPC cluster

with 2x10 Gb in the first switch

and 2x10 Gb in the second switch

connected to the secondary Nexus vPC cluster

with 2x10 Gb in the first switch

and 2x10 Gb in the second switch

to the other 15000 Check Point

"Make sure that you have 802.3ad selected in the Check Point side." <- How is this achieved? 🙂

>>Make sure that you have 802.3ad selected in the Check Point side. <- How is this achieved

Run "show bond bondXX"; it should show 802.3ad as the bond type.

Have a look at some of the problems with NX-OS (search for Nexus in the knowledge base).

One problem we solved a year ago:

ClusterXL interfaces are flapping when connected to Cisco Nexus switches <= solved with a new release for Nexus

Wolfgang

Bonding Interface: 1

Bond Configuration

xmit-hash-policy layer2

down-delay 200

primary Not configured

lacp-rate slow

mode 8023AD

up-delay 200

mii-interval 100

Bond Interfaces

eth1-01

eth1-02

eth1-03

eth1-04

MOBUFDC01:0>

Bonding Interface: 1

Bond Configuration

xmit-hash-policy layer2

down-delay 200

primary Not configured

lacp-rate slow

mode 8023AD

up-delay 200

mii-interval 100

Bond Interfaces

eth1-01

eth1-02

eth1-03

eth1-04
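Since the question was whether 802.3ad is selected on both members, here is a small Python sketch that reads the bond output above into a dictionary and checks the mode and the number of member interfaces. It assumes the "Bond Configuration" and "Bond Interfaces" section headers and the "key value" layout seen in the pasted text; the helper name parse_bond is hypothetical.

```python
# Bond output copied from the post above (one cluster member).
SAMPLE = """\
Bonding Interface: 1
Bond Configuration
xmit-hash-policy layer2
down-delay 200
primary Not configured
lacp-rate slow
mode 8023AD
up-delay 200
mii-interval 100
Bond Interfaces
eth1-01
eth1-02
eth1-03
eth1-04
"""


def parse_bond(text):
    """Split the output into a settings dict and a list of member interfaces."""
    config, interfaces, section = {}, [], None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line == "Bond Configuration":
            section = "config"
        elif line == "Bond Interfaces":
            section = "interfaces"
        elif section == "config":
            # The first word is the setting name, the rest is its value.
            key, _, value = line.partition(" ")
            config[key] = value.strip()
        elif section == "interfaces":
            interfaces.append(line)
    return config, interfaces


if __name__ == "__main__":
    config, interfaces = parse_bond(SAMPLE)
    print("LACP (802.3ad) selected:", config.get("mode") == "8023AD")
    print("LACP rate:", config.get("lacp-rate"))
    print("Member interfaces:", len(interfaces))
```

Running it against the output of each member and comparing the two results is a quick way to confirm that both bonds are configured identically.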

By the way, on the Nexus side we are running

NXOS: version 7.0(3)I7(3),

so that bug should not apply.

Why do you have 2 interfaces on the Nexus side and 4 interfaces on the Check Point side, keeping in mind that they belong to the same bond?

Nexus

NX1: Members in this channel: Eth1/35, Eth1/36

NX2: Members in this channel: Eth1/35, Eth1/36

Check Point

Bond Interfaces (For each member I think)

eth1-01

eth1-02

eth1-03

eth1-04

____________

https://www.linkedin.com/in/federicomeiners/

Hey,

it's like this:

vPC Cluster 1

Nexus 01 eth1/35-35

Nexus 02 eth1/35-35

vPC Cluster 1

Nexus 03 eth1/35-35

Nexus04 eth1/35-35

You could say they are more or less stacked, so each Nexus cluster acts like one switch.

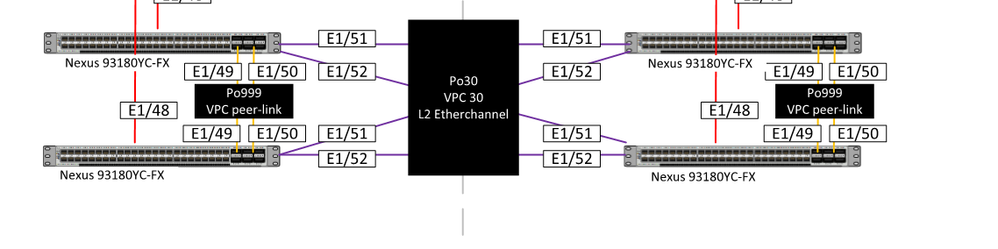

Hey, so here is the setup on the switch side.

I would immediately involve TAC and get it resolved instead of pasting endless outputs here!

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

There is also a case open. Sometimes the people who have the products in use also have the answers, and have a similar setup.

Did you also open a case with Cisco? That is where I would be putting my main focus.

Regards, Maarten

Yes that is also done 🙂

After talking to Support, it is now verified that there is an issue when running Cisco vPC with the Check Point cluster in Multicast mode; switching to Broadcast made it work better.

©1994-2026 Check Point Software Technologies Ltd. All rights reserved.
