Core goes 100% when uploading a file to Sharepoint site

Hello All!

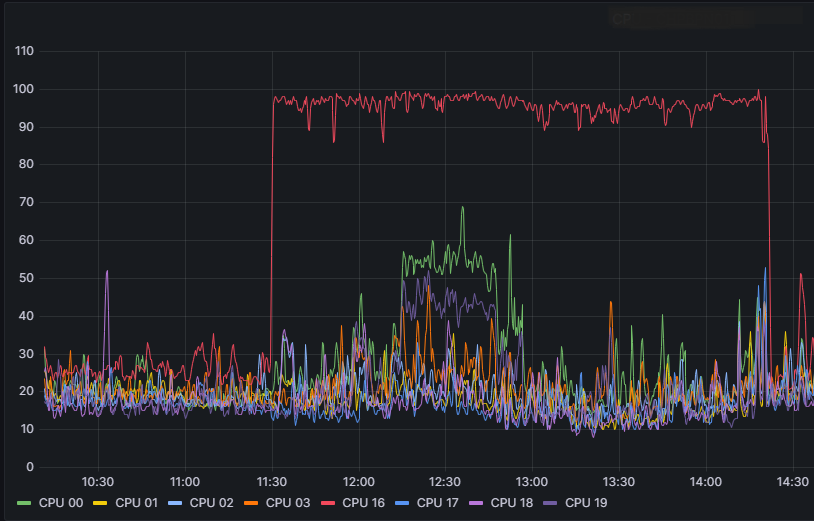

A few days ago we detected a core going to 100% for no apparent reason.

We checked it using cpview -> CPU -> Top-Connections and discovered that it was one computer reaching a single IP.

I reached out to the user, and he explained that he was uploading a 42 GB file to a supplier's SharePoint site for some specific work.

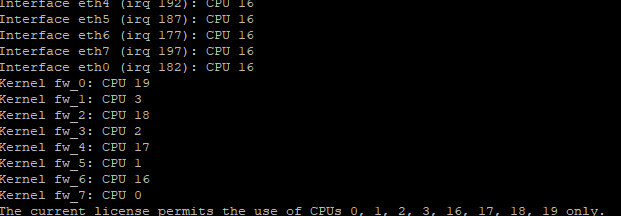

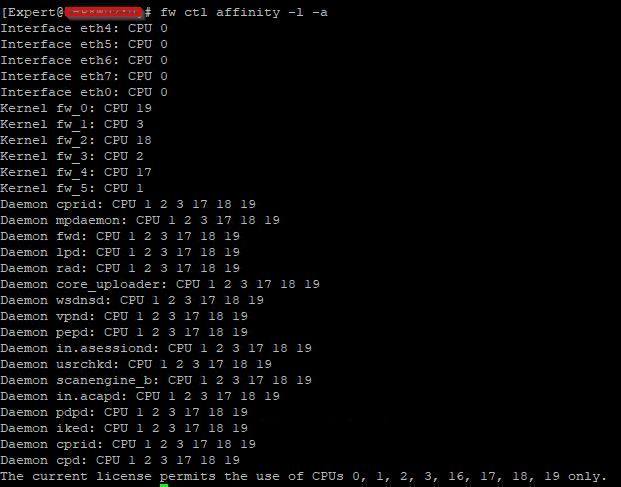

We checked the distribution of cores using fw ctl affinity, and apparently CPU 16 is handling several interfaces.

The firewall is part of a ClusterXL cluster.

Blades: fw vpn cvpn urlf av appi ips identityServer SSL_INSPECT anti_bot ThreatEmulation mon.

We are concerned because this was caused by a single user, and we want to get ahead of it in case more users start doing things like this.

Someone has probably already had this issue and has some recommendations.

Is there a way to "adjust" this process/connection? That is, is it recommended to set the distribution of cores for the interfaces manually, and if so, how?

We could add a drop rule for that user to that IP. Would that work, or would the CPU keep reaching 100%? (We haven't tested it.)

2 Solutions

Accepted Solutions

Based on those outputs 6 instances with a static 2/6 CoreXL split should be appropriate, then you can re-assess.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

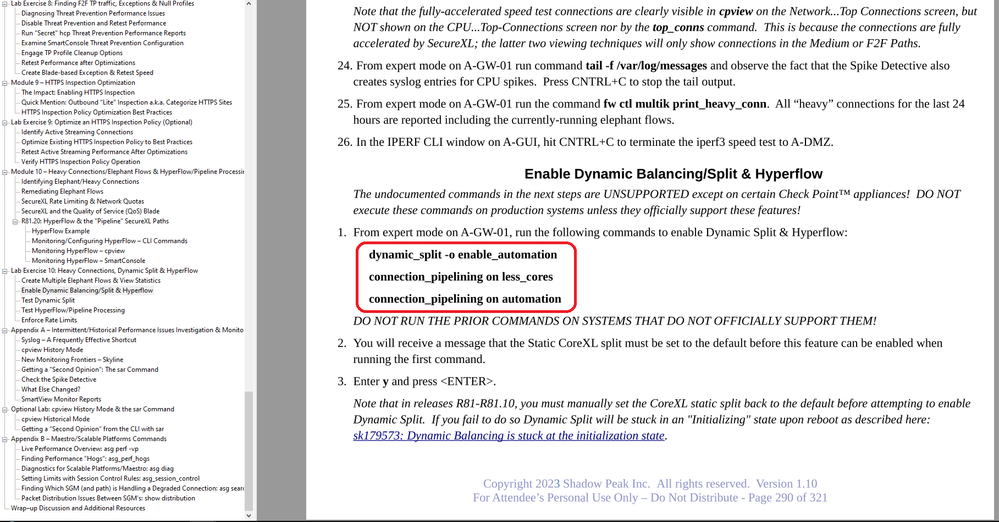

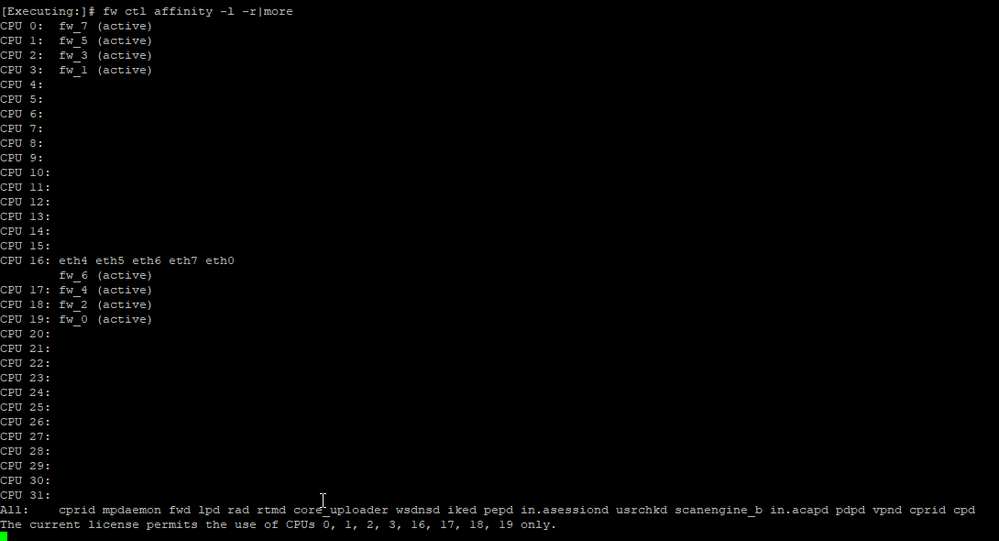

Here is the page from my Gateway Performance Optimization Course detailing the "secret" commands, for anyone else reading this please heed the warnings about not using these commands on production systems that don't support them. Use at your own risk!

The first circled command force-enables Dynamic Split, while the latter two enable Hyperflow even if the required minimum number of cores is not present. The firewall will need to be rebooted for these commands to take effect.

23 Replies

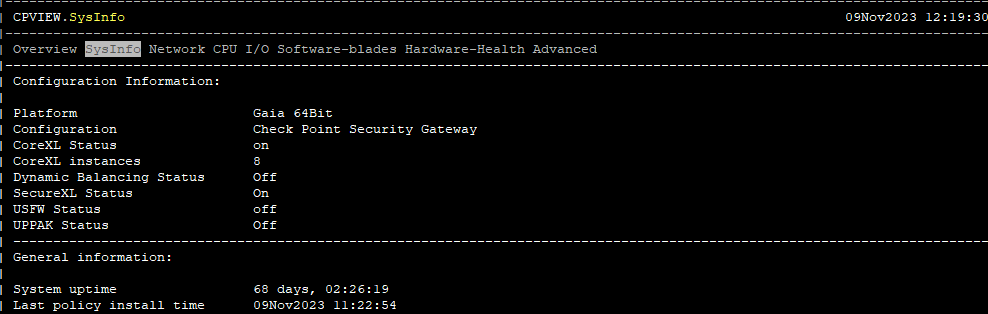

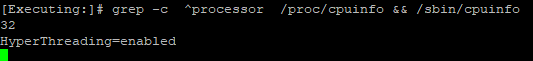

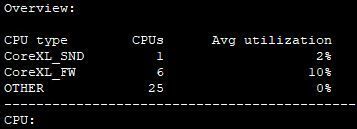

What are your code version and Jumbo HFA? It looks like Dynamic Split/Workloads is not active.

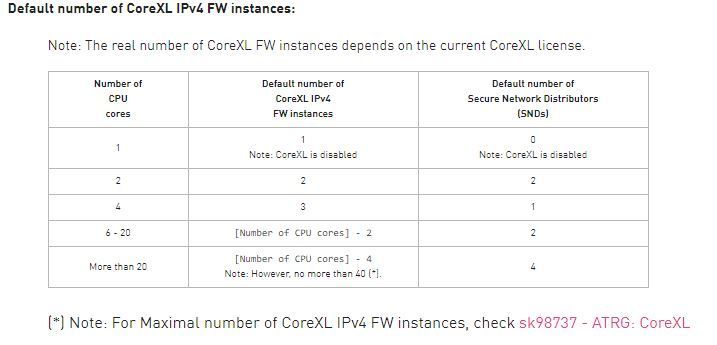

You are experiencing what I called the "core crunch" in the third edition of my Max Power book (pages 214-215). You are only licensed for 8 cores (really 4 physical cores w/ SMT) yet you have 8 firewall worker instances defined. So essentially many cores are pulling double duty as both SNDs & Workers in basically an 8/8 CoreXL split. This is very bad for performance as you have experienced.

You probably need to run cpconfig and reduce the number of firewall worker instances from 8 to 6 so that one of your physical cores can be dedicated to SND functions on two SND threads via SMT, but I'd advise providing your code version and posting the outputs of the "Super Seven" commands to this thread first before taking any action:

S7PAC - Super Seven Performance Assessment Command
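To make the core arithmetic above concrete, here is a minimal Python sketch. It is a simplified model for illustration only, not how CoreXL actually assigns cores; the names `CoreSplit` and `corexl_split` are hypothetical. It assumes that every licensed logical core not taken by a firewall worker instance is left for SND.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreSplit:
    snd_cores: int       # logical cores left exclusively for SND
    worker_cores: int    # firewall worker instances
    core_crunch: bool    # True when SND has no core of its own


def corexl_split(licensed_logical_cores: int, worker_instances: int) -> CoreSplit:
    """Estimate a static SND/worker split (simplified model, an assumption)."""
    if licensed_logical_cores < 1 or worker_instances < 1:
        raise ValueError("core and instance counts must be positive")
    # Whatever is not claimed by a worker instance remains for SND.
    snd = max(licensed_logical_cores - worker_instances, 0)
    # With nothing left over, SND work shares cores with the workers.
    return CoreSplit(snd, worker_instances, core_crunch=(snd == 0))


if __name__ == "__main__":
    print(corexl_split(8, 8))  # the situation described in this thread
    print(corexl_split(8, 6))  # the proposed 2/6 split
```

Under this model, 8 licensed logical cores with 8 worker instances leave no dedicated SND core, while reducing to 6 instances frees 2 logical cores (one physical core with SMT) for SND.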

Hello Timothy,

First of all, thank you very much for your response.

We're on R81.10 Jumbo Hotfix Take 110.

We have 4 cores licensed, which are doubled to 8 by hyperthreading. We can change the number of firewall worker instances during a maintenance window.

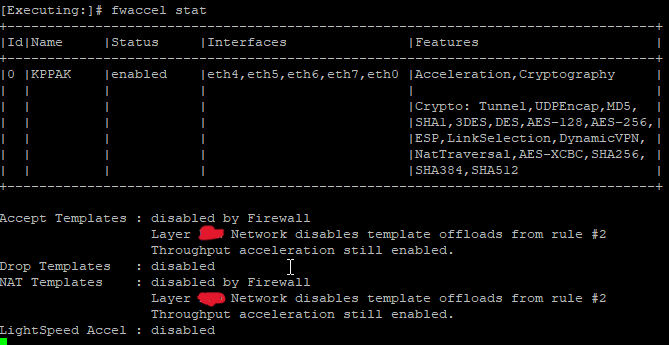

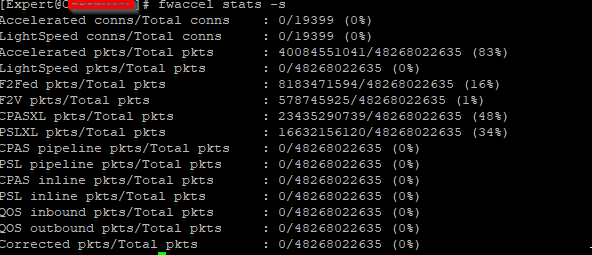

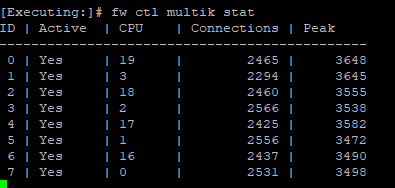

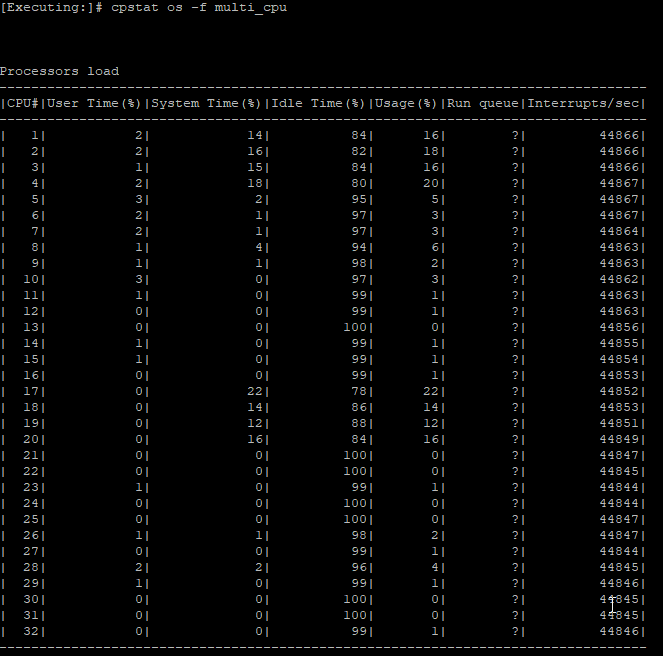

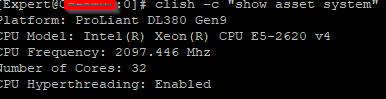

I'm adding the output of the Super Seven commands.

Thank you very much in advance.

Is this firewall open hardware (i.e. not a Check Point appliance) or a VM? That would explain why Dynamic Balancing and possibly USFW is off.

If you want to stay with a static CoreXL split (or can't use Dynamic Balancing), definitely change number of instances to 6 to give you a 2/6 split.

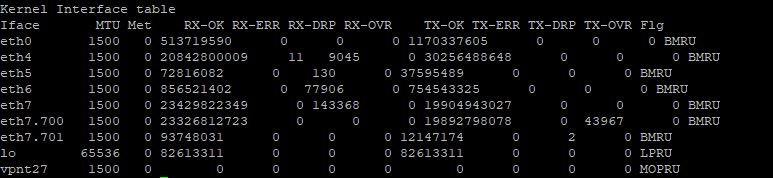

Can you please provide the output of netstat -ni as well? You might need more than 2 SND cores in your split depending on what we see in that output given the high percentage of fastpath traffic. Also is command fw ctl multik print_heavy_conn showing any elephant/heavy flows in the last 24 hours?

Probably an open server, given that the output shows the license allowing only specific cores out of 32 to be used.

It is an open server; the information is below.

Adding the output of the commands.

Edit: fw ctl multik print_heavy_conn shows several heavy connections, several over 60% and a few near 84%.

Based on those outputs 6 instances with a static 2/6 CoreXL split should be appropriate, then you can re-assess.

Thank you very much Timothy,

I'll make the change tomorrow and test accordingly.

Keep you posted.

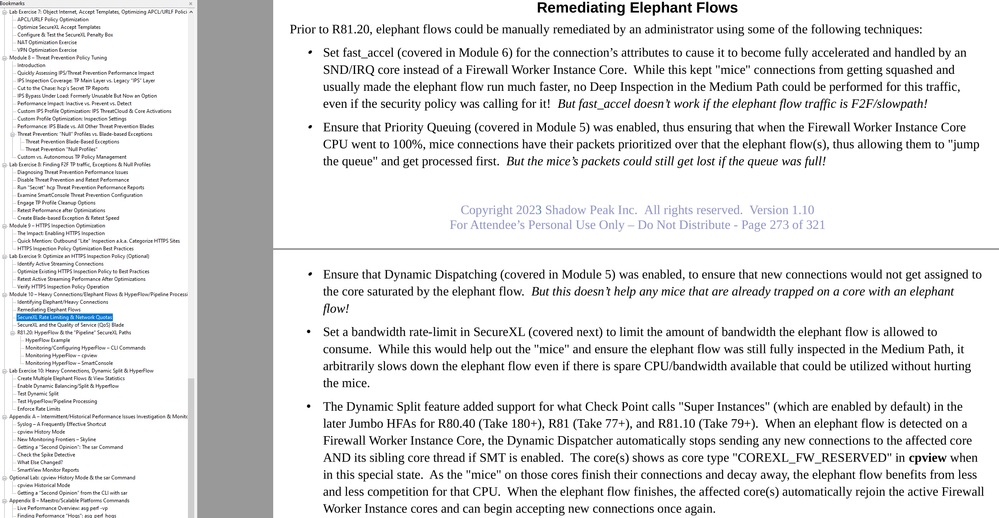

The split change will help get the most out of your licensed cores, but you will still get SharePoint-driven CPU spikes since all the packets of a single connection can only be handled on a single worker core in your version. The pre-Hyperflow options for dealing with elephant/heavy flows are covered in my course, but each approach has its own limitations and drawbacks. Here is the relevant page summarizing your options:

Hello Timothy,

Thank you for the info. We'll use fast_accel when we detect this flow again so it can jump the queue. I don't know whether we can set up Multi-Queue; I'll have to investigate that.

We've changed the CoreXL distribution using cpconfig, but we lost one CPU (CPU 16) for SND (or at least it is not showing up in the command output). Based on sk98348, I think this is related to the number of licensed cores we have, which is 4, by the way.

Below are some screenshots.

Thanks in advance.

Your split is 2/6 regardless of what those screenshots say; multi-queue should be on by default for all interfaces that support it. What is the driver type reported by ethtool -i (interfacename) for all your interfaces?

Hello Timothy,

Good to know; we were concerned about the missing CPU.

Posting the command output for each interface.

eth0

driver: tg3

version: 3.137

firmware-version: 5719-v1.46 NCSI v1.5.33.0

expansion-rom-version:

bus-info: 0000:08:00.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

eth1

driver: tg3

version: 3.137

firmware-version: 5719-v1.46 NCSI v1.5.33.0

expansion-rom-version:

bus-info: 0000:08:00.1

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

eth2 (not in use)

driver: tg3

version: 3.137

firmware-version: 5719-v1.46 NCSI v1.5.33.0

expansion-rom-version:

bus-info: 0000:08:00.2

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

eth3 (not in use)

driver: tg3

version: 3.137

firmware-version: 5719-v1.46 NCSI v1.5.33.0

expansion-rom-version:

bus-info: 0000:08:00.3

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

eth4 (not in use)

driver: tg3

version: 3.137

firmware-version: 5719-v1.46 NCSI v1.5.33.0

expansion-rom-version:

bus-info: 0000:02:00.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

eth5

driver: tg3

version: 3.137

firmware-version: 5719-v1.46 NCSI v1.5.33.0

expansion-rom-version:

bus-info: 0000:02:00.1

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

eth6

driver: tg3

version: 3.137

firmware-version: 5719-v1.46 NCSI v1.5.33.0

expansion-rom-version:

bus-info: 0000:02:00.2

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

eth7

driver: tg3

version: 3.137

firmware-version: 5719-v1.46 NCSI v1.5.33.0

expansion-rom-version:

bus-info: 0000:02:00.3

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

eth8 (not in use)

driver: mlx4_en

version: 4.6-1.0.1

firmware-version: 2.42.5044

expansion-rom-version:

bus-info: 0000:04:00.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: no

supports-register-dump: no

supports-priv-flags: yes

eth9 (not in use)

driver: mlx4_en

version: 4.6-1.0.1

firmware-version: 2.42.5044

expansion-rom-version:

bus-info: 0000:04:00.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: no

supports-register-dump: no

supports-priv-flags: yes

Thanks in advance.
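For anyone collecting this across many interfaces, a minimal Python sketch for parsing `ethtool -i` style `key: value` output like the listings above follows. The function names are hypothetical, and the set of drivers treated as lacking Multi-Queue is supplied by the caller; using `{"tg3"}` in the example is an assumption that should be checked against Check Point's documentation for your version.

```python
def parse_ethtool_i(text: str) -> dict[str, str]:
    """Parse `ethtool -i` style 'key: value' lines into a dict."""
    info: dict[str, str] = {}
    for line in text.splitlines():
        # Split on the first colon only, so values such as PCI
        # addresses (0000:08:00.0) are kept intact.
        key, sep, value = line.partition(":")
        if sep and key.strip():
            info[key.strip()] = value.strip()
    return info


def lacks_multi_queue(info: dict[str, str], drivers_without_mq: set[str]) -> bool:
    """True if the interface's driver is in the caller-supplied set."""
    return info.get("driver") in drivers_without_mq


if __name__ == "__main__":
    sample = "driver: tg3\nversion: 3.137\nbus-info: 0000:08:00.0\n"
    parsed = parse_ethtool_i(sample)
    # {"tg3"} is an illustrative assumption, not an authoritative list.
    print(parsed["driver"], lacks_multi_queue(parsed, {"tg3"}))
```

Passing the driver set in as a parameter keeps the sketch from hard-coding a support matrix that differs between versions.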

eth0-eth7 are using the Broadcom tg3 driver and do not support Multi-Queue; this is the reason core 16 (the sibling of CPU 0 under SMT) is not showing up. However, this is the optimal configuration, since your single SND instance has exclusive access to CPU 0 to handle all interfaces and does not have another SND instance competing for the same physical core via CPU 16. Assuming you don't rack up more than 0.1% RX-DRP vs. RX-OK in the output of netstat -ni, your single SND instance should be fine.
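The 0.1% RX-DRP vs. RX-OK check can be sketched in Python as below. This is a minimal sketch that assumes the usual `netstat -ni` column headers (`RX-OK`, `RX-DRP`) are present; the function names and the sample counters are made up for illustration and are not taken from this gateway.

```python
def rx_drop_ratios(netstat_output: str) -> dict[str, float]:
    """Return RX-DRP / RX-OK per interface from `netstat -ni` text."""
    rows = [line.split() for line in netstat_output.splitlines() if line.strip()]
    header = next((r for r in rows if "RX-OK" in r and "RX-DRP" in r), None)
    if header is None:
        raise ValueError("no RX-OK/RX-DRP header found")
    # Locate columns by name so an optional 'Met' column does not matter.
    ok_col, drp_col = header.index("RX-OK"), header.index("RX-DRP")
    ratios: dict[str, float] = {}
    for row in rows[rows.index(header) + 1:]:
        if len(row) <= max(ok_col, drp_col):
            continue
        try:
            rx_ok, rx_drp = int(row[ok_col]), int(row[drp_col])
        except ValueError:
            continue  # skip rows with non-numeric counters
        ratios[row[0]] = rx_drp / rx_ok if rx_ok else 0.0
    return ratios


def over_threshold(ratios: dict[str, float], threshold: float = 0.001) -> list[str]:
    """Interfaces whose drop ratio exceeds the threshold (0.1% by default)."""
    return sorted(name for name, ratio in ratios.items() if ratio > threshold)


# Made-up sample counters, for illustration only.
SAMPLE = """Kernel Interface table
Iface   MTU Met   RX-OK RX-ERR RX-DRP RX-OVR   TX-OK TX-ERR TX-DRP TX-OVR Flg
eth0   1500   0 1000000      0    500      0  900000      0      0      0 BMRU
eth1   1500   0 1000000      0   2000      0  800000      0      0      0 BMRU
"""

if __name__ == "__main__":
    print(over_threshold(rx_drop_ratios(SAMPLE)))
```

Since the counters are cumulative since boot, comparing two snapshots taken some time apart would likely give a better picture than a single reading.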

Broadcom is usually the low-cost bidder for getting its NICs included on server motherboards, and those NICs do, however, suck quite mightily once they get put under load. But perhaps, after having 10+ years to straighten out and stabilize their crap drivers, Broadcom has finally managed to do so... maybe.

R81.20 will not make a huge difference to you here, since you are on open hardware, which supports neither Hyperflow nor Dynamic Split. I'd just make sure you have the latest recommended R81.10 Jumbo HFA applied.

Good to know, Timothy.

I'll give this info to infra so they can look for a new server with those specs in mind.

For now it seems to be working normally, so we'll keep the configuration and keep an eye on heavy flows to try fast_accel.

Thank you very much for your help, I really appreciate it.

What is the software version of the gateway? I'm asking because this is a heavy flow issue that might already be resolved by HyperFlow, available with R81.20.

Hello Val,

Thank you for asking. We're on R81.10 Jumbo Hotfix Take 110. We could jump to R81.20 on the second firewall of the cluster and test, but probably not today.

Hi,

HyperFlow is relevant for Check Point appliances - https://support.checkpoint.com/results/sk/sk178070

Hey @AmitShmuel ,

We have been looking into HyperFlow. We are currently a heavy open server shop investigating appliance benefits, of which I see few.

Can you comment on this limitation?

- When you enable only the Firewall Software Blade in the Security Gateway object, HyperFlow does not improve performance. This is because SecureXL accelerates connections not going through inspection, while HyperFlow accelerates connections going through inspection (blades).

SecureXL does not allow an elephant flow to be multithreaded in the firewall.

We would need to enable, e.g., IDS and ensure that the connections are inspected (causing some unknown performance hit) to ensure that an elephant flow will be handled by multiple cores.

Is this correctly understood?

It feels like it is a hard sell for my organization. A lot of unknowns.

/Henrik

Hyperflow is not supported on open hardware, although it can be force-enabled on open hardware/VMWare for lab testing purposes only.

Only connections being processed in the Medium Path (i.e. PSLXL and CPASXL) can be boosted by Hyperflow. Traffic in the fastpath (firewall blade only processing by SecureXL) and F2F/slowpath do not benefit from Hyperflow at all. Only Threat Prevention blades (with the exception of Zero Phishing) can benefit from having their operations boosted by Hyperflow in the pipeline paths.
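As a compact restatement of the rule in the paragraph above, here is a small Python sketch. It only encodes what is said in this reply (Medium Path yes, fastpath and F2F/slowpath no); the enum and function names are hypothetical, and it ignores the other prerequisites listed in sk178070 as well as the Zero Phishing exception.

```python
from enum import Enum


class Path(Enum):
    FASTPATH = "fastpath"   # SecureXL, firewall blade only
    PSLXL = "PSLXL"         # Medium Path
    CPASXL = "CPASXL"       # Medium Path
    F2F = "F2F/slowpath"


# Paths that, per the reply above, Hyperflow can boost.
MEDIUM_PATHS = frozenset({Path.PSLXL, Path.CPASXL})


def hyperflow_can_boost(path: Path) -> bool:
    """True only for connections handled in the Medium Path."""
    return path in MEDIUM_PATHS


if __name__ == "__main__":
    for p in Path:
        print(f"{p.value}: {hyperflow_can_boost(p)}")
```

In other words, a firewall-blade-only elephant flow stays in the fastpath and gains nothing from Hyperflow under this rule.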

I'd recommend thoroughly reading sk178070: HyperFlow in R81.20 and higher, which spells out all the prerequisites and limitations quite well. Hyperflow is extensively covered and used during labs in my Gateway Performance Optimization class, so I am quite familiar with it and should be able to answer any additional questions you have.

How can you enable it on open servers?

Here is the page from my Gateway Performance Optimization Course detailing the "secret" commands, for anyone else reading this please heed the warnings about not using these commands on production systems that don't support them. Use at your own risk!

The first circled command force-enables Dynamic Split, while the latter two enable Hyperflow even if the required minimum number of cores is not present. The firewall will need to be rebooted for these commands to take effect.

I would say you will have better luck with this if you install R81.20, for sure. One thing I recall always being successful in the past was to disable CoreXL, reboot, re-enable it, and reboot again. That fixed it for a few customers I worked with.

Best regards,

Andy

Hello Andy,

Thanks for the idea, we'll do it in the next available window.

It's just something that worked for me in the past, but considering that @Timothy_Hall is way smarter than I am, I would go with his advice.

Best regards,

Andy
