My usual "morning after" report, in case it might help someone.

We were on R80.30 T215 before the upgrade, running on 23800 appliances that were NOT hyper-threaded.

Good stuff:

Really impressed with the CPUSE CLI upgrade! Especially considering the complexity - kernel upgrade from 2.6 to 3.10, enabling hyper-threading etc. Well done Check Point! I used the Multi-Version Cluster (MVC) Upgrade option and it worked like a charm - connections stayed synchronised in the cluster and I was able to fail over one VS at a time.

Why did we decide against a clean install and vsx_util reconfigure?

- easier rollback on the gateway: as the file system remains EXT3, you are able to use snapshots created prior to R80.40. With a clean install the file system would change to XFS, so a snapshot revert would not work

- no need to take care of any customisations, e.g.:

  - manual CoreXL settings

  - non-default IA settings

  - scripts

  - contents of user folders

  - SSH keys and known hosts used by external monitoring

So the actual upgrade was a breeze, I have to admit!

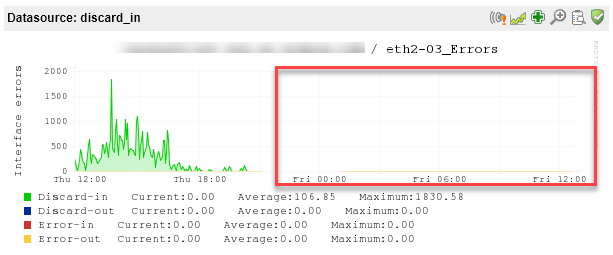

I do not want to celebrate too early, but first indications are that our RX-DRP issues might be cured by the better MQ implementation in 3.10.
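For anyone who wants to put a number on RX-DRP before and after the upgrade, here is a minimal Python sketch that reads the standard Linux `/proc/net/dev` counters and reports the share of received packets that were dropped per interface. The function name `rx_drop_ratios` and the ratio definition (dropped / (received + dropped)) are my own assumptions for illustration, not a Check Point tool or an official threshold.

```python
def rx_drop_ratios(proc_net_dev_text):
    """Return {interface: rx_dropped / (rx_packets + rx_dropped)}.

    Expects the text of /proc/net/dev. After the colon the receive
    columns are: bytes packets errs drop fifo frame compressed multicast.
    """
    ratios = {}
    for line in proc_net_dev_text.splitlines():
        if ":" not in line:
            continue  # the two header lines contain no colon
        name, counters = line.split(":", 1)
        fields = counters.split()
        if len(fields) < 4:
            continue  # not a counter line we understand
        rx_packets = int(fields[1])
        rx_dropped = int(fields[3])
        total = rx_packets + rx_dropped
        # Assumption: ratio is taken against received + dropped packets
        ratios[name.strip()] = rx_dropped / total if total else 0.0
    return ratios


if __name__ == "__main__":
    with open("/proc/net/dev") as f:
        for iface, ratio in sorted(rx_drop_ratios(f.read()).items()):
            print(f"{iface}: {ratio:.4%} RX dropped")
```

The counters are cumulative since boot, so comparing two samples taken some time apart gives a fairer picture than a single reading.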

Potential show-stoppers or things you will need to take care of:

- User crontab is reset, so you will have to add it back manually. For us it's a normal procedure anyways for any upgrade, but be mindful

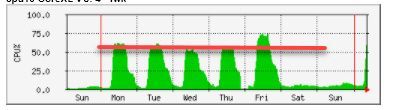

- Not 100% sure why, but on one box we saw the IA nested groups setting reset to its default of 20. We have it disabled to deal with high CPU utilisation by pdpd. Just check it if you have customised it from 20

- Interface RX ring buffer settings were reset to defaults during the upgrade. We had been forced to increase them in R80.30 due to noticeable RX-DRP that affected Teams voice

- SNMP v3 stopped working after the upgrade, leaving us pretty much blind without any graphs to assess R80.40 performance properly. Major problem if you ask me. @Friedrich_Recht 💪 saved my night - here's the link to the VSX SNMP v3 workaround. TAC case is still open with CP for a permanent fix

- SNMP OID ifDescr (1.3.6.1.2.1.2.2.1.2) has changed from the interface name to the interface card description. It is actually the "correct" move, but it "broke" our monitoring systems, e.g. good old MRTG, which used ifDescr to look up the interface index and therefore failed to match interface names to indexes after the upgrade

- MultiQueue manual settings are replaced with the default Auto. Left it at that for now, as it seems to do a good job

- Unable to display the NAT table (fwx_alloc) on a busy VS. Only cpview works from R80.40 onwards (sk156852). But I'm unable to pull the stats using SNMP as described in the SK - only VS0 seems to be supported

- FQDN domain object issue (added 29/10). Description and workaround are available in the thread "FQDN objects allow many unrelated IPs"
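On the ifDescr change in the list above: one way to rebuild the name-to-index lookup that MRTG used to get from ifDescr is to read IF-MIB ifName (1.3.6.1.2.1.31.1.1.1.1) instead. Below is a minimal Python sketch that parses numeric `snmpwalk -On` output into such a map. It assumes Net-SNMP style output lines and, more importantly, that R80.40 still reports the short interface name in ifName, which you should verify on your own gateways. The function name `index_by_name` is hypothetical.

```python
import re

IF_NAME_OID = "1.3.6.1.2.1.31.1.1.1.1"  # IF-MIB::ifName

# Matches lines such as:  .1.3.6.1.2.1.31.1.1.1.1.3 = STRING: eth1-01
# (value may or may not be wrapped in double quotes)
_LINE = re.compile(
    r'^\.?' + re.escape(IF_NAME_OID) + r'\.(\d+)\s*=\s*STRING:\s*"?([^"]*?)"?\s*$'
)


def index_by_name(walk_output):
    """Map interface name -> ifIndex from numeric snmpwalk output of ifName."""
    mapping = {}
    for line in walk_output.splitlines():
        match = _LINE.match(line.strip())
        if match:
            mapping[match.group(2)] = int(match.group(1))
    return mapping


if __name__ == "__main__":
    sample = """\
.1.3.6.1.2.1.31.1.1.1.1.1 = STRING: lo
.1.3.6.1.2.1.31.1.1.1.1.3 = STRING: "eth1-01"
"""
    for name, idx in sorted(index_by_name(sample).items()):
        print(name, idx)
```

Lines from other OIDs (including ifDescr itself) are ignored, so the whole walk can be fed in unfiltered. For MRTG specifically, cfgmaker's `--ifref=name` option may achieve the same result without any scripting, but I have not tested it against R80.40.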

LAST WORD: I personally would not recommend deploying R80.40 on VSX with the current take 78 in critical production environments, due to too many issues with SNMP v3 that make you lose service and performance visibility. The exception is if you need to resolve interface performance issues, e.g. RX buffer overflows, that are causing operational problems. I will review and update this when we deploy the next take