Traffic not accelerated by Secure XL

Hi,

I have been working with SecureXL for a while and cannot get traffic accelerated, as you can see in the output below. The problem is that the CPUs go over 95% during the daytime, and I think the reason is that SecureXL is not handling traffic as expected, because almost everything is going through the slow path.

I have been through many topics here, so I am including the outputs you are likely to ask for.

Some brief information about the firewall: it runs ClusterXL with 8 CPUs (2 SND, 6 workers) on an open server (I'm not sure whether that could be an issue).

This is an external firewall handling DMZ, VPN, and Internet traffic for users and servers, among other things.

# fwaccel stats -s

Accelerated conns/Total conns : 14/79668 (0%)

Accelerated pkts/Total pkts : 370720/214400236 (0%)

F2Fed pkts/Total pkts : 211158051/214400236 (98%)

PXL pkts/Total pkts : 2871465/214400236 (1%)

QXL pkts/Total pkts : 0/214400236 (0%)
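The rounded percentages in this output hide some detail, so it can help to recompute them from the raw counters. The following is a minimal Python sketch, assuming the `fwaccel stats -s` line format shown above; the function name `parse_fwaccel_stats` and the regular expression are illustrative, not part of any Check Point tooling.

```python
import re

# Sample copied from the output above (R80.10 format).
SAMPLE = """\
Accelerated conns/Total conns : 14/79668 (0%)
Accelerated pkts/Total pkts : 370720/214400236 (0%)
F2Fed pkts/Total pkts : 211158051/214400236 (98%)
PXL pkts/Total pkts : 2871465/214400236 (1%)
QXL pkts/Total pkts : 0/214400236 (0%)
"""

# Hypothetical pattern: "<name>/Total <unit> : <count>/<total> (<pct>%)"
LINE_RE = re.compile(
    r"^\s*(?P<name>.+?)\s*/\s*Total \w+\s*:\s*(?P<num>\d+)\s*/\s*(?P<den>\d+)"
)

def parse_fwaccel_stats(text):
    """Return {counter name: (count, total, percent)} for each ratio line."""
    stats = {}
    for line in text.splitlines():
        m = LINE_RE.match(line)
        if not m:
            continue  # skip anything that is not a ratio line
        num, den = int(m.group("num")), int(m.group("den"))
        pct = 100.0 * num / den if den else 0.0
        stats[m.group("name")] = (num, den, pct)
    return stats

stats = parse_fwaccel_stats(SAMPLE)
for name, (num, den, pct) in stats.items():
    print(f"{name:<20} {num:>12} / {den:<12} {pct:6.2f}%")
```

In this sample the Accelerated, F2Fed, and PXL packet counters add up exactly to the total packet count, so the three paths account for all traffic and F2F alone carries a little over 98% of it.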

# fwaccel conns -s

There are 211889 connections in SecureXL connections table

The number of templates is very low.

# fwaccel templates -s

There are 48 templates in SecureXL templates table

# fwaccel stat

Accelerator Status : on

Accept Templates : disabled by Firewall

Layer CL-EXT Security disables template offloads from rule #xxx ( just above the last rule)

Throughput acceleration still enabled.

Drop Templates : enabled

NAT Templates : disabled by Firewall

Layer CL-EXT Security disables template offloads from rule xxx ( just above the last rule)

Throughput acceleration still enabled.

NMR Templates : enabled

NMT Templates : enabled

I exported the fwaccel conns table, and on investigation most of the traffic comes from these 4 sources to 1 destination address (Exchange-related F5 traffic); nearly 1/3 of the whole table consists of these connections.

| Source | Destination | DPort | PR | Flags | C2S i/f | S2C i/f |
|---|---|---|---|---|---|---|
| A | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| C | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| A | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| D | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| C | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| D | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| C | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| D | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| A | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| C | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| D | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| C | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| C | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| A | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| A | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| A | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| D | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| D | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| A | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| B | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |
| D | X | 443 | 6 | F..A...S...... | 40/32 | 32/40 |

My question is, how come this traffic isn't accelerated?

Thank you

23 Replies


How many rules do you have?

What are the versions of the management server and the firewall?

At which rule does the traffic stop being accelerated?

Which services are used in that rule?


Hi,

> How many rules do you have?

327

> What are the versions of the management server and the firewall?

mgmt - R80.30 - Build 484

fw - R80.10 - Build 161

> At which rule does the traffic stop being accelerated?

325

> Which services are used in that rule?

DCE-RPC traffic; it was moved to the end so that it does not cause problems for SecureXL.


Hi

OK, which blades do you have enabled? Can you run "enabled_blades"?


fw vpn urlf av appi ips identityServer SSL_INSPECT anti_bot ThreatEmulation mon vpn


I believe you have only a few templates because of the many blades you have enabled. Most of the traffic passes through more than one blade, so it is counted as F2Fed rather than as accelerated connections:

F2Fed pkts/Total pkts : 211158051/214400236 (98%)


My guess is you are improperly using the object Any in the Destination or Service of your HTTPS Inspection policy and it is pulling all traffic into F2F for active streaming. Use object Internet for the Destination (you will also need to make sure your firewall topology is completely and correctly defined to ensure this object is being calculated correctly) and only use explicit services like https in your HTTPS Inspection policy. You might have an "Any Any Any" cleanup rule at the end of your HTTPS Inspection policy, big no-no.

Another possibility is that all traffic is fragmented due to an incorrect MTU somewhere. Please provide the output of fw ctl pstat.

Last possibility is that you are using ISP Redundancy in Load Sharing Mode, Cluster Load Sharing with Sticky Decision Function enabled, or are using your firewall as an explicit HTTP/HTTPS web proxy, pretty much everything will go F2F as a result in any of those cases.

If practically all the traffic passing through this firewall is outbound user traffic to the Internet and subject to HTTPS Inspection, the 98% F2F might be legit.

Don't worry about templating rates, totally separate issue that is not the problem.

Could also be something in your TP policy causing the high F2F, we'll deal with that once you check your HTTPS Inspection Policy, fragmentation, and the three features I mentioned.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course



The HTTPS Inspection rules use the Internet object and the https service. There isn't an "Any Any" rule at the end.

I was curious about the Any objects, because the traffic I mentioned passes through a firewall rule with Any as destination and service. I will add a specific rule for that traffic.

Could a firewall policy with Any objects be the problem?

We don't have a Load Sharing cluster. There is user traffic, but not all of the traffic is user Internet access. There might be fragmentation, as the output below shows, but the first possibility might be the cause.

# fw ctl pstat

System Capacity Summary:

Memory used: 9% (8765 MB out of 96499 MB) - below watermark

Concurrent Connections: 110365 (Unlimited)

Aggressive Aging is enabled, not active

Hash kernel memory (hmem) statistics:

Total memory allocated: 10116661248 bytes in 2469888 (4096 bytes) blocks using 1 pool

Total memory bytes used: 0 unused: 10116661248 (100.00%) peak: 4717843200

Total memory blocks used: 0 unused: 2469888 (100%) peak: 1248346

Allocations: 566230308 alloc, 0 failed alloc, 538487847 free

System kernel memory (smem) statistics:

Total memory bytes used: 14461308200 peak: 15218299528

Total memory bytes wasted: 43889265

Blocking memory bytes used: 58531832 peak: 221029776

Non-Blocking memory bytes used: 14402776368 peak: 14997269752

Allocations: 2125678292 alloc, 0 failed alloc, 2125658331 free, 0 failed free

vmalloc bytes used: 14378068460 expensive: no

Kernel memory (kmem) statistics:

Total memory bytes used: 6862722468 peak: 8889698628

Allocations: 2691710826 alloc, 0 failed alloc

2663951514 free, 0 failed free

External Allocations: 24728832 for packets, 244282717 for SXL

Cookies:

3591315592 total, 2750222193 alloc, 2750204668 free,

2808169679 dup, 2969475638 get, 3163934437 put,

762173033 len, 660318754 cached len, 0 chain alloc,

0 chain free

Connections:

558916069 total, 404068565 TCP, 134111376 UDP, 20105413 ICMP,

630715 other, 8052 anticipated, 0 recovered, 110365 concurrent,

208312 peak concurrent

Fragments:

614867937 fragments, 306839276 packets, 52179 expired, 0 short,

7 large, 2022 duplicates, 1572 failures

NAT:

1829598225/0 forw, -1728821971/0 bckw, 67790129 tcpudp,

32972912 icmp, 433995668-632048567 alloc

Sync:

Version: new

Status: Able to Send/Receive sync packets

Sync packets sent:

total : 893794328, retransmitted : 1740, retrans reqs : 1371, acks : 1780599

Sync packets received:

total : 279528838, were queued : 2908698, dropped by net : 3761

retrans reqs : 748, received 7220298 acks

retrans reqs for illegal seq : 0

dropped updates as a result of sync overload: 0

Callback statistics: handled 7139038 cb, average delay : 1, max delay : 4098



Your firewall policy config is unlikely to be what is causing the high F2F; the policy config affects templating, which is a totally separate matter.

> Fragments:

> 614867937 fragments, 306839276 packets, 52179 expired, 0 short,

> 7 large, 2022 duplicates, 1572 failures

That looks a bit excessive, try running these commands to see where the fragments are coming from and how many are coming through the firewall live:

tcpdump -eni any '((ip[6:2] > 0) and (not ip[6] = 64))'

or

tcpdump -eni any "ip[6:2] & 0x1fff!=0"
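Both filters look at bytes 6 and 7 of the IPv4 header, which hold three flag bits (reserved, DF, MF) followed by the 13-bit fragment offset. The Python sketch below mirrors the two expressions on a plain 16-bit integer to show which packets each one matches; the helper names are made up for illustration, and the sketch does not capture any traffic.

```python
# Bytes 6-7 of the IPv4 header: 3 flag bits (reserved, DF, MF) + 13-bit fragment offset.
DF = 0x4000           # "Don't Fragment" flag
MF = 0x2000           # "More Fragments" flag
OFFSET_MASK = 0x1FFF  # low 13 bits: fragment offset in 8-byte units

def matches_first_filter(field: int) -> bool:
    """Mirror of '((ip[6:2] > 0) and (not ip[6] = 64))'."""
    byte6 = field >> 8  # ip[6] is the high byte of the 16-bit field
    return field > 0 and byte6 != 64

def matches_second_filter(field: int) -> bool:
    """Mirror of 'ip[6:2] & 0x1fff != 0' (non-zero fragment offset)."""
    return (field & OFFSET_MASK) != 0

cases = {
    "unfragmented, DF set": DF,
    "unfragmented, no flags": 0,
    "first fragment (MF, offset 0)": MF,
    "middle fragment (MF, offset 185)": MF | 185,
    "last fragment (offset 370)": 370,
}

for label, field in cases.items():
    print(f"{label:<34} first={matches_first_filter(field)!s:<5} "
          f"second={matches_second_filter(field)}")
```

The practical difference is that the second expression only matches packets with a non-zero offset, so it does not show the first fragment of each datagram, while the first expression also catches it through the MF flag.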

The good news is that fragmented traffic no longer requires F2F in R80.20+, so an upgrade to R80.30 might be in order here.

If you don't see a lot of constant frags with tcpdump it could be Threat Prevention causing the high F2F, to test try this:

fwaccel stats -s (note F2F percentage)

fwaccel stats -r

ips off

fw amw unload

(wait 60 seconds)

fwaccel stats -s (note F2F percentage changes)

ips on

fw amw fetch local


Hi,

Won't tcpdump with the filter you gave increase the CPU usage? As we are already facing high CPU, I shouldn't push it any higher; it might cause a problem for us.

We were planning to upgrade to R80.30, but we heard about some issues and decided to wait for them to be resolved.

In my opinion IPS might be a cause, as it runs with many protections enabled.


I also checked another cluster, and the fwaccel view is slightly different: CoreXL is handling a portion of the traffic, but SecureXL is not.

# fwaccel stats -s

Accelerated conns/Total conns : 0/11528 (0%)

Accelerated pkts/Total pkts : 0/153816765 (0%)

F2Fed pkts/Total pkts : 99482451/153816765 (64%)

PXL pkts/Total pkts : 54334314/153816765 (35%)

QXL pkts/Total pkts : 0/153816765 (0%)

On the firewall I checked

fw ctl pstat

and the fragment counters are not increasing at all for the live connections.

SecureXL is on and enabled.

There are approximately 300 rules, and templating is disabled just before the end of the rule base, so the situation is similar.

The version is the same as on the other firewall.

The enabled blades:

# enabled_blades

fw vpn urlf av appi ips identityServer anti_bot ThreatEmulation mon vpn

It is a 4-CPU open server, and CPU usage is around 50% on this one.


Please run the IPS/Threat Prevention tests in my prior post. At worst the APCL/URLF blades will drive traffic into the PXL/PSLXL paths, not F2F. My guess would be IPS for the high F2F, but the tests will tell you for sure.


I will test it at a suitable time.

But what if IPS is causing the problem? We won't be able to shut it down. We have already deactivated the high-CPU signatures suggested in the recommended guides, and I don't know how we will manage with all the other signatures.

Do you have any suggestions in case the problem turns out to be IPS?


Need the results of the test first before we start speculating on what needs to be adjusted in IPS.


Hi,

We have upgraded one of our firewalls and the results are much better. Note that this is a different cluster from the one I wrote about before.

Before, with R80.10:

[Expert@cctor-fw1:0]# fwaccel stats -s

Accelerated conns/Total conns : 0/10382 (0%)

Accelerated pkts/Total pkts : 0/60391836 (0%)

F2Fed pkts/Total pkts : 30871026/60391836 (51%)

PXL pkts/Total pkts : 29520810/60391836 (48%)

QXL pkts/Total pkts : 0/60391836 (0%)

After, with R80.30:

cctor-fw1> fwaccel stats -s

Accelerated conns/Total conns : 97/2835 (3%)

Accelerated pkts/Total pkts : 668757495/1146490033 (58%)

F2Fed pkts/Total pkts : 120998512/1146490033 (10%)

F2V pkts/Total pkts : 177470213/1146490033 (15%)

CPASXL pkts/Total pkts : 0/1146490033 (0%)

PSLXL pkts/Total pkts : 356734026/1146490033 (31%)

QOS inbound pkts/Total pkts : 0/1146490033 (0%)

QOS outbound pkts/Total pkts : 0/1146490033 (0%)

Corrected pkts/Total pkts : 0/1146490033 (0%)

The thing is, we didn't see any drops in the netstat output before, but now I see RX-DRPs on some interfaces. The percentage is around 0.005%, but there weren't any drops before, so why now? That is bugging me.

I am not sure whether something changed in the latest version, or whether a change in how SecureXL handles traffic is now affecting the SND.

This is an open server with a 4-CPU license (1 SND + 3 firewall workers), and my graphs don't show high utilization at the times the drops happen.

Do we need to tune the NIC buffers on R80.30?

Thank you


Your stats look pretty good and a drop rate of 0.005% is negligible, as mentioned in my book anything less than 0.1% is fine. I don't recommend increasing ring buffer sizes beyond the default except as a last resort. It is possible that the drops are due to something other than a ring buffer miss, please post the output of ethtool -S (interface) where you are seeing RX-DRPs for further analysis.

One other tip, run fwaccel stat and see if you can move rules around to improve your accept templating rate (Accelerated conns/Total conns). Probably some DCE-RPC services being referenced in your rulebase that need to be moved down.


Thank you Timothy,

Outputs are below.

I reorganized the policy so that the DCE rules are at the bottom, and SecureXL now seems OK with the rule base.

[Expert@cctor-fw1:0]# ethtool -S eth7

NIC statistics:

rx_packets: 405342352

tx_packets: 362595719

rx_bytes: 212595203218

tx_bytes: 216157859542

rx_broadcast: 59164

tx_broadcast: 303201

rx_multicast: 8225

tx_multicast: 8154

multicast: 8225

collisions: 0

rx_crc_errors: 0

rx_no_buffer_count: 2314

rx_missed_errors: 10029

tx_aborted_errors: 0

tx_carrier_errors: 0

tx_window_errors: 0

tx_abort_late_coll: 0

tx_deferred_ok: 0

tx_single_coll_ok: 0

tx_multi_coll_ok: 0

tx_timeout_count: 0

rx_long_length_errors: 0

rx_short_length_errors: 0

rx_align_errors: 0

tx_tcp_seg_good: 0

tx_tcp_seg_failed: 0

rx_flow_control_xon: 0

rx_flow_control_xoff: 0

tx_flow_control_xon: 0

tx_flow_control_xoff: 0

rx_long_byte_count: 212595203218

tx_dma_out_of_sync: 0

lro_aggregated: 0

lro_flushed: 0

lro_recycled: 0

tx_smbus: 0

rx_smbus: 0

dropped_smbus: 0

os2bmc_rx_by_bmc: 0

os2bmc_tx_by_bmc: 0

os2bmc_tx_by_host: 0

os2bmc_rx_by_host: 0

rx_errors: 0

tx_errors: 0

tx_dropped: 0

rx_length_errors: 0

rx_over_errors: 0

rx_frame_errors: 0

rx_fifo_errors: 10029

tx_fifo_errors: 0

tx_heartbeat_errors: 0

tx_queue_0_packets: 362595719

tx_queue_0_bytes: 213152806139

tx_queue_0_restart: 0

rx_queue_0_packets: 405342352

rx_queue_0_bytes: 209352464402

rx_queue_0_drops: 0

rx_queue_0_csum_err: 0

rx_queue_0_alloc_failed: 0

[Expert@cctor-fw1:0]# ethtool -S eth8

NIC statistics:

rx_octets: 233189541024

rx_fragments: 0

rx_ucast_packets: 318704358

rx_mcast_packets: 4686202

rx_bcast_packets: 17592

rx_fcs_errors: 0

rx_align_errors: 0

rx_xon_pause_rcvd: 0

rx_xoff_pause_rcvd: 0

rx_mac_ctrl_rcvd: 0

rx_xoff_entered: 0

rx_frame_too_long_errors: 0

rx_jabbers: 0

rx_undersize_packets: 0

rx_in_length_errors: 0

rx_out_length_errors: 0

rx_64_or_less_octet_packets: 0

rx_65_to_127_octet_packets: 0

rx_128_to_255_octet_packets: 0

rx_256_to_511_octet_packets: 0

rx_512_to_1023_octet_packets: 0

rx_1024_to_1522_octet_packets: 0

rx_1523_to_2047_octet_packets: 0

rx_2048_to_4095_octet_packets: 0

rx_4096_to_8191_octet_packets: 0

rx_8192_to_9022_octet_packets: 0

tx_octets: 130331680198

tx_collisions: 0

tx_xon_sent: 0

tx_xoff_sent: 0

tx_flow_control: 0

tx_mac_errors: 0

tx_single_collisions: 0

tx_mult_collisions: 0

tx_deferred: 0

tx_excessive_collisions: 0

tx_late_collisions: 0

tx_collide_2times: 0

tx_collide_3times: 0

tx_collide_4times: 0

tx_collide_5times: 0

tx_collide_6times: 0

tx_collide_7times: 0

tx_collide_8times: 0

tx_collide_9times: 0

tx_collide_10times: 0

tx_collide_11times: 0

tx_collide_12times: 0

tx_collide_13times: 0

tx_collide_14times: 0

tx_collide_15times: 0

tx_ucast_packets: 313497944

tx_mcast_packets: 4077

tx_bcast_packets: 4087

tx_carrier_sense_errors: 0

tx_discards: 0

tx_errors: 0

dma_writeq_full: 0

dma_write_prioq_full: 0

rxbds_empty: 1014

rx_discards: 17164

rx_errors: 0

rx_threshold_hit: 0

dma_readq_full: 0

dma_read_prioq_full: 0

tx_comp_queue_full: 0

ring_set_send_prod_index: 0

ring_status_update: 0

nic_irqs: 0

nic_avoided_irqs: 0

nic_tx_threshold_hit: 0

mbuf_lwm_thresh_hit: 0

[Expert@cctor-fw1:0]# netstat -ani

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

eth7 1500 0 405554017 0 10029 10029 362760290 0 0 0 BMRU

eth7.124 1500 0 661348 0 0 0 661383 0 0 0 BMRU

eth7.224 1500 0 303443 0 0 0 272134 0 0 0 BMRU

eth7.225 1500 0 14389328 0 0 0 18132537 0 0 0 BMRU

eth7.226 1500 0 389534190 0 0 0 342920865 0 0 0 BMRU

eth7.227 1500 0 943 0 0 0 111929 0 0 0 BMRU

eth7.231 1500 0 664801 0 0 0 661472 0 0 0 BMRU

eth8 1500 0 323631418 0 17164 0 313774069 0 0 0 BMRU
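To put these counters next to the 0.1% guideline mentioned earlier in the thread, the drop rate can be computed per interface. This is a rough Python sketch, assuming the rate is RX-DRP divided by RX-OK and that the columns are in the `netstat -ani` order shown above; the function name `rx_drop_rates` is hypothetical.

```python
# Two physical interfaces copied from the netstat -ani output above.
NETSTAT_SAMPLE = """\
Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg
eth7 1500 0 405554017 0 10029 10029 362760290 0 0 0 BMRU
eth8 1500 0 323631418 0 17164 0 313774069 0 0 0 BMRU
"""

THRESHOLD_PCT = 0.1  # guideline quoted in the thread, not an official limit

def rx_drop_rates(text):
    """Return {interface: RX-DRP as a percentage of RX-OK}."""
    rates = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip the header row and anything without numeric counters.
        if len(fields) < 12 or not fields[3].isdigit():
            continue
        iface, rx_ok, rx_drp = fields[0], int(fields[3]), int(fields[5])
        rates[iface] = 100.0 * rx_drp / rx_ok if rx_ok else 0.0
    return rates

rates = rx_drop_rates(NETSTAT_SAMPLE)
for iface, pct in rates.items():
    verdict = "below" if pct < THRESHOLD_PCT else "above"
    print(f"{iface}: {pct:.4f}% RX-DRP ({verdict} the {THRESHOLD_PCT}% guideline)")
```

With these numbers eth8 works out to roughly 0.005% and eth7 to about half of that, both well under the guideline.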


Your eth7 Intel-based interface just has some full ring buffer drops of packets, but once again the drop rate is so low I wouldn't worry about it. You can use command sar -n EDEV to see precisely when the RX-DRP counter was incremented, my guess is that the drops occurred under some transient high load such as during a policy install. As long as they are not racking up continuously, there is nothing to worry about.

eth8 on the other hand seems to have some ring buffer misses (1014) but is also reporting 17164 rx-discards. Typically discards are the receipt of unknown protocols from the network that the Ethernet driver is not configured to interact with (IPv6, IPX, Appletalk, etc); my book has a section titled "RX-DRP Revisited: Still Racking Them Up?" which covers how to track down these rogue protocols with tcpdump. The rate is so low though I wouldn't worry about it.

Unfortunately for you, the eth8 counters are associated with a Broadcom NIC and as such need to be taken with a grain of salt. Broadcom and Emulex were singled out for especially harsh criticism in my book, and I don't trust those vendors' NIC products whatsoever. If you can move that interface's traffic to an Intel-based NIC, you will save yourself a lot of problems.


@Timothy_Hall I know this is an older post, but I was looking into this for optimizing our accelerated packets.

You're saying that an "any any any" cleanup rule in https inspection is a big no no.

The December 18th tech talk for ssl inspection had said that it is best practice to do an "any any any" bypass rule for cleanup.

As you're one of the big experts on optimization, I'm a little lost as to what the correct answer is. I want to make sure I'm using the best method. Can you help clarify?

referenced tech talk:


The component of the HTTPS Inspection cleanup rule that I was indirectly calling out in my prior post was a service of "Any" which is actually the big no-no. A Source and Destination of Any on a cleanup rule with a Bypass action is OK and desirable. The recommendation for a Bypass cleanup rule in the TLS Inspection TechTalk appears to have been spawned by the SecureXL changes in R80.20, where CPAS was pulled out of the F2F path and put into its own path CPASXL, and the enhanced TLS parser introduced in R80.30. Here is some of the TLS Inspection Policy Tuning content from the third edition of my book that should hopefully clarify:


HTTPS Inspection Policy Tuning

The HTTPS Inspection policy specifies exactly what types of TLS traffic the firewall should inspect via active streaming with the associated process space trips. Properly configuring this policy is critical to ensure reasonable firewall performance when HTTPS Inspection is enabled. Some recommendations:

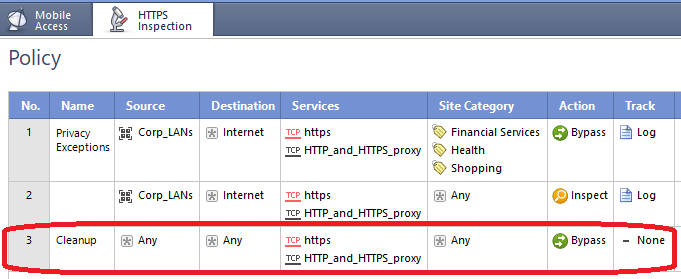

Make sure that your HTTPS Inspection policy has an explicit cleanup rule with Source and Destination of “Any”, and an action of “Bypass” as shown here:

Use of this explicit cleanup rule will ensure that only traffic requiring active streaming will be pulled into the CPASXL path. Failure to configure an explicit cleanup rule of this nature will cause much more active streaming to occur on the firewall than necessary, and has been reported to cause at least a 40% performance hit on firewalls performing HTTPS Inspection.

Avoid the use of “Any” in the Source & Destination columns of the HTTPS Inspection policy (except for the explicit cleanup rule mentioned above). Similarly to the Access Control and Threat Prevention policies covered earlier in this book, failure to heed this recommendation can result in massive amounts of LAN-speed traffic suddenly becoming subject to active streaming inadvertently, which can potentially crater the performance of even the largest firewalls. Proper use of object “Internet”, and negations of host/network objects and groups in the Source and Destination fields of the HTTPS Inspection policy can help keep this highly unpleasant situation from occurring.

Never set the Services column of the HTTPS Inspection Policy to “Any”. Be especially careful about accidentally setting the Services field of an HTTPS Inspection policy rule with an action of “Inspect” to “Any”, for the reasons described here: sk101486: Non-HTTPS traffic (FTP/S, SMTP/S and more) are matched to HTTPS Inspection Policy and perf....


Thank you for the response and info!

I already had the 'any' 'any' 'bypass' at the end of my policy, but I've replaced the 'any' object in my https inspection policy rules with more specific entries, and have seen a significant performance boost! (around 20%)

I've had various TAC cases opened to review our environment due to unexpected resource consumption, and none of them had seen anything wrong with my https inspection policy.


Good to hear. As noted in my prior post, these optimization strategies are relatively new and are still in the process of propagating. 🙂

Hi,

We have upgraded our firewall to R80.30 and are happy with the results, as SecureXL looks much better with the new mechanism it is using.

However, the issue I raised in this topic did not improve as expected after the upgrade.

To give an idea, this was the output with R80.10:

# fwaccel stats -s

Accelerated conns/Total conns : 45/47726 (0%)

Accelerated pkts/Total pkts : 1374948/85775878 (1%)

F2Fed pkts/Total pkts : 33538998/85775878 (39%)

PXL pkts/Total pkts : 50861932/85775878 (59%)

QXL pkts/Total pkts : 0/85775878 (0%)

And this is the output with R80.30:

# fwaccel stats -s

Accelerated conns/Total conns : 18446744073709551615/291 (0%)

Accelerated pkts/Total pkts : 3324649332/29928619281 (11%)

F2Fed pkts/Total pkts : 23351470604/29928619281 (78%)

F2V pkts/Total pkts : 1981021/29928619281 (0%)

CPASXL pkts/Total pkts : 0/29928619281 (0%)

PSLXL pkts/Total pkts : 3252499345/29928619281 (10%)

QOS inbound pkts/Total pkts : 0/29928619281 (0%)

QOS outbound pkts/Total pkts : 0/29928619281 (0%)

Corrected pkts/Total pkts : 0/29928619281 (0%)
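
For anyone comparing summaries like these by script rather than by eye, here is a minimal Python sketch that parses `fwaccel stats -s` output and flags counters that cannot be genuine ratios. It assumes the line layout shown in this post; the function names are hypothetical, and other versions may format the output differently. The "Accelerated conns" value of 18446744073709551615 in the R80.30 output equals 2**64 - 1, which is how an unsigned 64-bit counter reads after dropping below zero. Treating it as a display artifact is an assumption here, not something confirmed in this thread.

```python
import re

# Matches summary lines such as "F2Fed pkts/Total pkts : 33538998/85775878 (39%)".
LINE_RE = re.compile(r"^\s*(.+?)\s*:\s*(\d+)/(\d+)\s*\(\d+%\)\s*$")


def parse_stats_summary(text):
    """Return {counter name: (count, total)} for every summary line found."""
    counters = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:
            name, count, total = match.groups()
            counters[name] = (int(count), int(total))
    return counters


def implausible_counters(counters):
    """Names whose count exceeds its total, so the ratio cannot be genuine."""
    return [name for name, (count, total) in counters.items() if count > total]


# Three lines copied from the R80.30 output above.
SAMPLE = """\
Accelerated conns/Total conns : 18446744073709551615/291 (0%)
Accelerated pkts/Total pkts : 3324649332/29928619281 (11%)
F2Fed pkts/Total pkts : 23351470604/29928619281 (78%)
"""

counters = parse_stats_summary(SAMPLE)
for name in implausible_counters(counters):
    count, _ = counters[name]
    # 2**64 - 1 is what an unsigned 64-bit value of "-1" looks like.
    note = " (equals 2**64 - 1)" if count == 2**64 - 1 else ""
    print(f"ignore {name}: {count}{note}")
```

The packet-based lines are the ones worth comparing between versions; the flagged connection counter is best left out of any calculation.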

Since we are seeing SecureXL handle much more traffic, I expected the same from this cluster, but this is what we have. Given the detailed output below, does anyone have ideas about what to check?

Thank you

# enabled_blades

fw vpn urlf av appi ips identityServer anti_bot ThreatEmulation mon vpn

# fwaccel stat

+-----------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+-----------------------------------------------------------------------------+

|0 |SND |enabled |eth1,eth2,eth8,eth9, |

| | | |eth10,eth11,eth4,eth6, |

| | | |eth7,eth12 |Acceleration,Cryptography |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,NULL,3DES,DES,CAST, |

| | | | |CAST-40,AES-128,AES-256,ESP, |

| | | | |LinkSelection,DynamicVPN, |

| | | | |NatTraversal,AES-XCBC,SHA256 |

+-----------------------------------------------------------------------------+

Accept Templates : disabled by Firewall

Layer FW Security disables template offloads from rule #below rules on the rule set

Throughput acceleration still enabled.

Drop Templates : enabled

NAT Templates : disabled by Firewall

Layer FW Security disables template offloads from rule #below rules on the rule set

Throughput acceleration still enabled.

# fwaccel stats -p

F2F packets:

--------------

Violation Packets Violation Packets

-------------------- --------------- -------------------- ---------------

pkt has IP options 23816 ICMP miss conn 132651663

TCP-SYN miss conn 84611629 TCP-other miss conn 22809840829

UDP miss conn 365313631 other miss conn 950684

VPN returned F2F 2716 uni-directional viol 0

possible spoof viol 0 TCP state viol 0

out if not def/accl 0 bridge, src=dst 0

routing decision err 0 sanity checks failed 0

fwd to non-pivot 0 broadcast/multicast 0

cluster message 60690169 cluster forward 0

chain forwarding 0 F2V conn match pkts 16262

general reason 0 route changes 0
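
To see at a glance which F2F reason dominates, the two-column table above can be parsed and ranked. The following is a rough Python sketch, assuming each row holds up to two "reason count" pairs as in this paste; the helper names are hypothetical and the layout may differ on other versions. In the output above, "TCP-other miss conn" is by far the largest counter.

```python
import re

# A count is a whitespace-delimited run of digits, so the "2" inside a
# name like "F2V conn match pkts" is not mistaken for a counter value.
PAIR_RE = re.compile(r"(\S.*?)\s+(\d+)(?=\s|$)")


def parse_violations(text):
    """Return {reason: packet count} from `fwaccel stats -p` style rows."""
    reasons = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blanks, the "F2F packets:" title, the header row and rulers.
        if (not line or line.endswith(":") or line.startswith("-")
                or line.startswith("Violation")):
            continue
        for name, count in PAIR_RE.findall(line):
            reasons[name] = int(count)
    return reasons


def top_reasons(reasons, n=3):
    """Return the n largest reasons as (name, count, share in percent)."""
    total = sum(reasons.values())
    ranked = sorted(reasons.items(), key=lambda kv: kv[1], reverse=True)[:n]
    return [(name, count, 100.0 * count / total if total else 0.0)
            for name, count in ranked]


# A few rows copied from the output above.
SAMPLE = """\
F2F packets:
--------------
Violation Packets Violation Packets
-------------------- --------------- -------------------- ---------------
pkt has IP options 23816 ICMP miss conn 132651663
TCP-SYN miss conn 84611629 TCP-other miss conn 22809840829
UDP miss conn 365313631 other miss conn 950684
chain forwarding 0 F2V conn match pkts 16262
"""

violations = parse_violations(SAMPLE)
for name, count, share in top_reasons(violations):
    print(f"{name}: {count} ({share:.1f}% of parsed F2F packets)")
```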

# fw ctl pstat

System Capacity Summary:

Memory used: 6% (6345 MB out of 96499 MB) - below watermark

Concurrent Connections: 47573 (Unlimited)

Aggressive Aging is enabled, not active

Hash kernel memory (hmem) statistics:

Total memory allocated: 10116661248 bytes in 2469888 (4096 bytes) blocks using 1 pool

Total memory bytes used: 0 unused: 10116661248 (100.00%) peak: 2892273060

Total memory blocks used: 0 unused: 2469888 (100%) peak: 750073

Allocations: 313103958 alloc, 0 failed alloc, 290068681 free

System kernel memory (smem) statistics:

Total memory bytes used: 13060201248 peak: 13584325624

Total memory bytes wasted: 51590450

Blocking memory bytes used: 40931152 peak: 49913880

Non-Blocking memory bytes used: 13019270096 peak: 13534411744

Allocations: 275507555 alloc, 0 failed alloc, 275481277 free, 0 failed free

vmalloc bytes used: 12981572012 expensive: no

Kernel memory (kmem) statistics:

Total memory bytes used: 5215567944 peak: 6114904284

Allocations: 588597677 alloc, 0 failed alloc

565542883 free, 0 failed free

External Allocations: 16059184 for packets, 20259020 for SXL

Cookies:

660113400 total, 444458826 alloc, 444456842 free,

2563541669 dup, 3939842552 get, 2102265806 put,

908833439 len, 2170526108 cached len, 0 chain alloc,

0 chain free

Connections:

126589063 total, 78487631 TCP, 44113491 UDP, 3954893 ICMP,

33048 other, 6120 anticipated, 2 recovered, 47573 concurrent,

51300 peak concurrent

Fragments:

25749658 fragments, 12636569 packets, 56 expired, 0 short,

0 large, 0 duplicates, 0 failures

NAT:

-471034387/0 forw, -270524211/0 bckw, 801229606 tcpudp,

14133055 icmp, 82601856-71925803 alloc

1) Do you have a lot of Mobile Access VPN connections given the current work-from-home situation? These are handled F2F and may account for part of that high percentage.

2) The next step is to run fwaccel conns and look for connections that are missing an s/S in their flags (or, depending on version, that show f/F flags), as these connections are going F2F. What do those connections have in common? Are they all going in a certain direction, through a certain interface, or to a certain port? This should help you focus on where to look next.

3) Since you have a variety of TP features enabled, they are probably related to the high F2F. The following steps should help you narrow it down:

A fast and easy way to identify if IPS is the cause of a performance problem is the following. Keep in mind that this procedure will place your environment at risk for a short period:

1) fwaccel stats -s

2) Run command ips off

3) Run fwaccel stats -r then wait 2 minutes

4) fwaccel stats -s

5) Run command ips on

6) Compare F2F% from steps 1 and 4

A fast and easy way to identify if Threat Prevention blades (not including IPS) are the cause of a performance problem is the following. Keep in mind that this procedure will place your environment at risk for a short period:

1) fwaccel stats -s

2) Run command fw amw unload

3) Run fwaccel stats -r then wait 2 minutes

4) fwaccel stats -s

5) Run command fw amw fetch local

6) Compare F2F% from steps 1 and 4

This procedure will let you identify if the various TP blades are the high F2F culprit and clarify where to look.
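
The comparison in step 6 of either procedure can be done by saving the two `fwaccel stats -s` outputs and computing the change in F2F share. Below is a minimal Python sketch with hypothetical function names. The sample strings are invented for illustration and are not measurements from this thread.

```python
import re

# Picks the raw counters out of the "F2Fed pkts/Total pkts" line.
F2F_RE = re.compile(r"F2Fed pkts/Total pkts\s*:\s*(\d+)/(\d+)")


def f2f_percent(stats_output):
    """F2F share in percent, recomputed from the raw packet counters."""
    match = F2F_RE.search(stats_output)
    if match is None:
        raise ValueError("no 'F2Fed pkts/Total pkts' line found")
    f2f, total = int(match.group(1)), int(match.group(2))
    return 100.0 * f2f / total if total else 0.0


def f2f_change(before, after):
    """Percentage-point change in F2F share from step 1 to step 4."""
    return f2f_percent(after) - f2f_percent(before)


# Hypothetical captures: step 1 (blade on) and step 4 (blade off).
BEFORE = "F2Fed pkts/Total pkts : 780/1000 (78%)"
AFTER = "F2Fed pkts/Total pkts : 150/1000 (15%)"

print(f"F2F change: {f2f_change(BEFORE, AFTER):+.1f} percentage points")
```

Note that step 3 resets the counters, so the step 4 figure covers only the two-minute window, while the step 1 figure is cumulative since the last reset. A large drop still points at the blade that was switched off, but the two numbers are not measured over the same period.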
