
Policy Installation failed on GW | sk125152 | High CPU | Problem with sync link

Hi All,

I would like to share an ongoing issue that I have not been able to solve and for which I have not yet received useful feedback from TAC. Maybe you have seen something similar and managed to solve it.

My cluster is a pair of CP 6600 appliances in VRRP mode with ClusterXL sync only, running Gaia R81.10, take 110.

My problem started with a failed policy installation, where we got the following message:

"Policy installation failed on gateway. Cluster policy installation failed (see sk125152)"

After that we noticed higher CPU usage than normal, with some cores peaking at 100%. Normally it was around 20-30%. So in my view there is a correlation between the policy installation failure and the high CPU. One action seems to confirm it: I installed the latest hotfix (take 110), and after the reboot everything looked really good, but when I tried to install policy again it failed and the high CPU returned.

So I dug deeper. The SK indicates that it could be a problem with HA/ClusterXL. I found that I cannot ping the Sync IP address of the second node. The weird thing is that I checked the switches to which the firewall ports are directly connected (access ports, both in the same VLAN), and on both access switches there is no MAC address learned on the port facing the Sync interface. The firewall output confirms this: in the ARP table, the IP I am trying to ping shows an "incomplete" MAC<->IP resolution. It is the same on both ends, each on a different access switch.

The topology is as follows:

fw node1 Sync port ---> access switch dc1 vlan 1000 ---> fiber between dc --> access switch dc2 vlan 1000 --> fw node2 Sync port

Do you know what I could check further?

I shut/unshut the ports on the firewalls and switches without any success. Is it possible that some HA process hung or crashed and is not sending any traffic, so the switch cannot learn a MAC address on that port?

Thank you in advance for any hints.


7 Replies


This "Cluster policy installation failed" message no longer only means that the atomic load/commit failed or timed out on one of the cluster members; in R81+ it can also indicate that some kind of cluster sanity check failed during policy installation. You'll need to look in $FWDIR/log/cphaconf.elg on both members for clues about what is wrong. So far I've seen this message indicate:

1) One of the cluster members is set for MVC and one is not (sk179969: Policy installation fails with error "Policy installation failed on gateway. Clusterpolicy...")

2) The state of cluster enablement in cpconfig is incorrect (enabled for a non-cluster object, or disabled for a gateway that is part of a cluster object - sk180980: Policy installation failure with error message "Policy installation failed on gateway. Clu...")

There are probably some other sanity checks I haven't run into yet.

The fact that you can't ARP on the sync network is a definite problem, and may be another one of the new sanity checks that are performed; namely making sure that the sync network is working, assuming state sync is enabled on the cluster object. ARP is never denied by a security policy or antispoofing so I'd look there. The high CPU is probably a symptom of the problem rather than the cause, unless it is so extreme it is causing a commit timeout on one of the gateways.
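As a rough illustration of the ARP check mentioned above, here is a minimal Python sketch that flags unresolved neighbor entries in saved ARP table text. It assumes the text comes from something like `arp -an` or `ip neigh show` on a Linux-based system, where unresolved entries are marked "incomplete", "INCOMPLETE" or "FAILED". The function name and the sample data are hypothetical and not taken from the thread.

```python
import re

# Matches an IPv4 address anywhere in a line.
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def unresolved_neighbors(table_text):
    """Return the IPs whose ARP/neighbor entry has no resolved MAC.

    An entry counts as unresolved when its line contains "incomplete"
    or "failed" (case-insensitive), as printed by `arp -an` and
    `ip neigh show`.
    """
    unresolved = []
    for line in table_text.splitlines():
        lowered = line.lower()
        if "incomplete" in lowered or "failed" in lowered:
            match = IPV4.search(line)
            if match:
                unresolved.append(match.group(0))
    return unresolved


# Hypothetical sample in `arp -an` style (addresses are placeholders).
SAMPLE = """\
? (192.168.10.227) at <incomplete> on Sync
? (10.0.0.1) at 00:1c:7f:aa:bb:cc [ether] on Mgmt
"""

if __name__ == "__main__":
    print(unresolved_neighbors(SAMPLE))
```

If the peer's Sync address shows up in this list on both members, the problem is most likely at layer 2 between the two Sync ports rather than in the security policy.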


Thanks for joining the conversation. I will review the log you mentioned, cphaconf.elg. Neither of the SKs you shared applies to my case:

1.

[Expert@fw-de-niest-01:0]# cphaprob mvc

OFF

[Expert@fw-de-niest-02:0]# cphaprob mvc

OFF

2. i have clusterxl sync only with vrrp and it is configured on mgmt

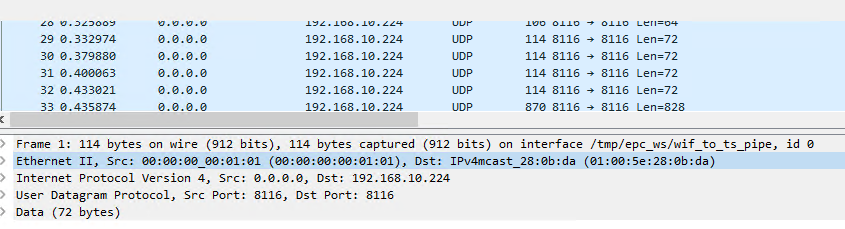

in addition to a problem we captured a packets on switch with direct connection to Sync port on FW where i am not seeing mac and there is only traffic like this :


Just to be sure of the cluster state, can you send the output of the commands below from both members?

cphaprob roles

cphaprob state

cphaprob list

cphaprob -a if

cphaprob syncstat

Best,

Andy


here you are:

fw1:

[Expert@fw-de-niest-01:0]# cphaprob roles

ID Role

1 (local) Master

[Expert@fw-de-niest-01:0]# cphaprob state

Cluster Mode: Sync only (OPSEC) with IGMP Membership

ID Unique Address Firewall State (*)

1 (local) 192.168.10.226 Active

(*) FW-1 monitors only the sync operation and the security policy

Use OPSEC's monitoring tool to get the cluster status

[Expert@fw-de-niest-01:0]# cphaprob list

There are no pnotes in problem state

[Expert@fw-de-niest-01:0]# cphaprob -a if

CCP mode: Manual (Multicast)

Sync sync(secured), multicast

Mgmt non sync(non secured)

eth1-04 non sync(non secured)

eth1-02 non sync(non secured)

eth1-03 non sync(non secured)

eth1-01 non sync(non secured)

eth1-02 non sync(non secured)

S - sync, HA/LS - bond type, LM - link monitor, P - probing

Virtual cluster interfaces: 19

eth1-04 xxxxx ( x just to hide in use ip addresses )

eth1-02.2001 xxxxx

eth1-02.3507 xxxxxx

eth1-02.3503 xxxxx

eth1-02.3524 xxxxx

eth1-02.2100 xxxxx

eth1-02.3505 xxxxx

eth1-02.2030 xxxxx

eth1-03.2032 xxxxx

eth1-02.3504 xxxxx

eth1-01.2086 xxxxx

eth1-02.3508 xxxxx

eth1-02.2031 xxxxx

eth1-02.3529 xxxxx

eth1-02.3587 xxxxx

eth1-02.3588 xxxxx

eth1-02.3523 xxxxx

eth1-02.2084 xxxxx

eth1-02.3510 xxxxx

[Expert@fw-de-niest-01:0]# cphaprob syncstat

Delta Sync Statistics

Sync status: OK

Drops:

Lost updates................................. 0

Lost bulk update events...................... 0

Oversized updates not sent................... 0

Sync at risk:

Sent reject notifications.................... 0

Received reject notifications................ 0

Sent messages:

Total generated sync messages................ 45951141

Sent retransmission requests................. 0

Sent retransmission updates.................. 0

Peak fragments per update.................... 2

Received messages:

Total received updates....................... 0

Received retransmission requests............. 0

Sync Interface:

Name......................................... Sync

Link speed................................... 1000Mb/s

Rate......................................... 5178 [KBps]

Peak rate.................................... 7906 [KBps]

Link usage................................... 4%

Total........................................ 655036[MB]

Queue sizes (num of updates):

Sending queue size........................... 512

Receiving queue size......................... 256

Fragments queue size......................... 50

Timers:

Delta Sync interval (ms)..................... 100

Reset on Tue Sep 5 22:14:50 2023 (triggered by fullsync).

fw2:

[Expert@fw-de-niest-02:0]# cphaprob roles

ID Role

2 (local) Non-Master

[Expert@fw-de-niest-02:0]# cphaprob state

Cluster Mode: Sync only (OPSEC) with IGMP Membership

ID Unique Address Firewall State (*)

2 (local) 192.168.10.227 Active

(*) FW-1 monitors only the sync operation and the security policy

Use OPSEC's monitoring tool to get the cluster status

[Expert@fw-de-niest-02:0]# cphaprob list

There are no pnotes in problem state

[Expert@fw-de-niest-02:0]# cphaprob -a if

CCP mode: Manual (Multicast)

Sync sync(secured), multicast

Mgmt non sync(non secured)

eth1-04 non sync(non secured)

eth1-02 non sync(non secured)

eth1-03 non sync(non secured)

eth1-01 non sync(non secured)

eth1-02 non sync(non secured)

S - sync, HA/LS - bond type, LM - link monitor, P - probing

Virtual cluster interfaces: 19

eth1-04 xxxxxxx

eth1-02.2001 xxxxxxx

eth1-02.3507 xxxxxxx

eth1-02.3503 xxxxxxx

eth1-02.3524 xxxxxxx

eth1-02.2100 xxxxxxx

eth1-02.3505 xxxxxxx

eth1-02.2030 xxxxxxx

eth1-03.2032 xxxxxxx

eth1-02.3504 xxxxxxx

eth1-01.2086 xxxxxxx

eth1-02.3508 xxxxxxx

eth1-02.2031 xxxxxxx

eth1-02.3529 xxxxxxx

eth1-02.3587 xxxxxxx

eth1-02.3588 xxxxxxx

eth1-02.3523 xxxxxxx

eth1-02.2084 xxxxxxx

eth1-02.3510 xxxxxxx

[Expert@fw-de-niest-02:0]# cphaprob syncstat

Delta Sync Statistics

Sync status: OK

Drops:

Lost updates................................. 0

Lost bulk update events...................... 0

Oversized updates not sent................... 0

Sync at risk:

Sent reject notifications.................... 0

Received reject notifications................ 0

Sent messages:

Total generated sync messages................ 3349848

Sent retransmission requests................. 0

Sent retransmission updates.................. 0

Peak fragments per update.................... 2

Received messages:

Total received updates....................... 0

Received retransmission requests............. 0

Sync Interface:

Name......................................... Sync

Link speed................................... 1000Mb/s

Rate......................................... 16620 [Bps]

Peak rate.................................... 4815 [KBps]

Link usage................................... 0%

Total........................................ 4976 [MB]

Queue sizes (num of updates):

Sending queue size........................... 512

Receiving queue size......................... 256

Fragments queue size......................... 50

Timers:

Delta Sync interval (ms)..................... 100

Reset on Tue Sep 5 21:30:04 2023 (triggered by fullsync).
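One detail in both `cphaprob syncstat` outputs above is that each member has generated many sync messages but shows 0 under "Total received updates", even though "Sync status" reads OK. The Python sketch below shows one way to flag that pattern from saved output. It assumes the dotted `name....... value` layout shown above, and the function names are hypothetical.

```python
import re

# A counter line looks like: "Total received updates....... 0"
COUNTER = re.compile(r"^\s*(.+?)\.{2,}\s*(\S.*?)\s*$")


def parse_syncstat(text):
    """Map counter names to their raw string values."""
    counters = {}
    for line in text.splitlines():
        match = COUNTER.match(line)
        if match:
            counters[match.group(1)] = match.group(2)
    return counters


def sync_looks_one_way(text):
    """True when messages were generated but no updates were received."""
    counters = parse_syncstat(text)
    sent = int(counters.get("Total generated sync messages", "0"))
    received = int(counters.get("Total received updates", "0"))
    return sent > 0 and received == 0


# Excerpt of the fw1 output posted above.
SAMPLE = """\
Sync status: OK
Total generated sync messages................ 45951141
Total received updates....................... 0
"""

if __name__ == "__main__":
    print(sync_looks_one_way(SAMPLE))
```

A member that keeps sending but never receives is consistent with the peer being unreachable on the Sync network, so this check is worth running on both members.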


This is your issue: BOTH members "think" they are active, as neither shows as backup. Can you verify in the topology that you have configured all those interfaces as clustered, and can you also run Get Interfaces without Topology?

Best,

Andy
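In the `cphaprob state` outputs posted earlier, each member lists only its own "(local)" row and no row for its peer. The Python sketch below checks saved output for a peer row. It assumes that a visible peer would appear as an additional `ID address state` row without the "(local)" marker, which is how the table is laid out here; the function names are hypothetical.

```python
import re

# A member row looks like: "1 (local) 192.168.10.226 Active"
MEMBER_ROW = re.compile(
    r"^\s*(\d+)\s+(\(local\)\s+)?((?:\d{1,3}\.){3}\d{1,3})\s+(.+?)\s*$"
)


def cluster_members(state_text):
    """Return one dict per member row found in `cphaprob state` output."""
    members = []
    for line in state_text.splitlines():
        match = MEMBER_ROW.match(line)
        if match:
            members.append({
                "id": int(match.group(1)),
                "local": match.group(2) is not None,
                "address": match.group(3),
                "state": match.group(4),
            })
    return members


def peer_visible(state_text):
    """True when at least one non-local member row is present."""
    return any(not member["local"] for member in cluster_members(state_text))


# Excerpt of the fw1 output posted earlier in the thread.
SAMPLE = """\
Cluster Mode: Sync only (OPSEC) with IGMP Membership
ID Unique Address Firewall State (*)
1 (local) 192.168.10.226 Active
"""

if __name__ == "__main__":
    print(cluster_members(SAMPLE))
    print(peer_visible(SAMPLE))
```

When `peer_visible` is false on both members, neither one sees the other, which points at the link between them rather than at either firewall's local configuration.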


Just managed to solve it. It was entirely our fault: the VLAN was removed by mistake on the VTP server during a migration, which also removed it from all VTP clients. So the access VLAN was still configured on the ports, but the VLAN itself no longer existed 🙂 and no one looked at the simplest part first; we were all digging through logs, changes, etc.

Restoring communication on the sync link solved the high CPU (it is interesting why; maybe because VRRP was still in a proper state while ClusterXL sync was having trouble?), the policy installation, etc.

Thank you all for your suggestions and help.
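The failure mode described here, an access port still assigned to a VLAN that no longer exists in the switch's VLAN database, can be checked mechanically once both lists are collected. The Python sketch below is only an illustration: how the VLAN list and port assignments are gathered from the switches is left out, and the function name, port names, and extra VLAN IDs are hypothetical (VLAN 1000 is the sync VLAN from the topology in the first post).

```python
def ports_on_missing_vlans(defined_vlans, access_ports):
    """Return {port: vlan} for access ports whose VLAN is not defined.

    defined_vlans: iterable of VLAN IDs present in the VLAN database.
    access_ports:  dict mapping port name to its configured access VLAN.
    """
    defined = set(defined_vlans)
    return {
        port: vlan
        for port, vlan in access_ports.items()
        if vlan not in defined
    }


if __name__ == "__main__":
    # Hypothetical data: VLAN 1000 has been deleted from the database,
    # but one port is still configured as an access port in it.
    vlan_database = [1, 2001, 2030]
    port_config = {"Gi1/0/10": 1000, "Gi1/0/11": 2001}
    print(ports_on_missing_vlans(vlan_database, port_config))
```

A port reported by this check looks correctly configured but will not forward traffic, which matches the missing MAC entries seen on the Sync ports.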


Yep, that's exactly it. It's important to remember that, unlike with most other major vendors, changes in a CP cluster do NOT replicate automatically from master to backup the way they do in Cisco, FGT, or PAN.

Great job btw 👍✔

Cheers,

Andy
