
Memory lost: from 40 GB free (of 64 GB) to 1 GB in one hour!

Hello all,

On R81.10 we are having serious trouble: the gateway's free memory can fall from its normal level (around 45 GB) to 1 GB in one hour! This happens about once a month, and the only way to recover is to reboot the active node of our cluster. CPU usage stays normal, between 35% and 45%.

We recently updated to Take 30 and are waiting to see how it behaves.

Has anyone seen this kind of problem?

Thanks a lot for your help.

François from France.

_____________

Technical info:

Product version Check Point Gaia R81.10

OS build 335

OS kernel version 3.10.0-957.21.3cpx86_64

OS edition 64-bit

24 Replies


Hi François,

Memory leaks are fairly common in unpatched devices.

What was the patch level before you patched?

Check the SK here; you'll see many memory fixes.

You did the right thing and patched the device.

_____________

Please provide the output of free -m while the gateway is showing low free memory. It may not actually be a memory leak: a backup or other disk-intensive operation can allocate memory for buffering/caching disk I/O, which is expected behavior and does not indicate a problem.
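To tell those two cases apart, it helps to compare truly free memory with the buffers/page cache that the kernel can reclaim. The bash function below is a minimal sketch (the name mem_summary is hypothetical) that reads /proc/meminfo directly. Its buff/cache figure is simply Buffers + Cached, so it may differ slightly from the buff/cache column printed by free -m, and "available" is shown only if the kernel reports MemAvailable.

```bash
#!/bin/bash
# Sketch: summarise memory in MB from /proc/meminfo (or a saved copy passed as $1).
# "free" is memory nobody is using; "buff/cache" is Buffers + Cached, which the
# kernel can reclaim; "available" is the kernel's own estimate (MemAvailable).
mem_summary() {
    local src="${1:-/proc/meminfo}"
    awk '
        /^MemTotal:/     { total = $2 }   # values in /proc/meminfo are in kB
        /^MemFree:/      { free  = $2 }
        /^MemAvailable:/ { avail = $2 }
        /^Buffers:/      { buf   = $2 }
        /^Cached:/       { cache = $2 }   # anchored, so SwapCached is not matched
        END {
            printf "total=%dMB free=%dMB buff/cache=%dMB available=%s\n",
                   total / 1024, free / 1024, (buf + cache) / 1024,
                   (avail == "" ? "n/a" : (int(avail / 1024) "MB"))
        }' "$src"
}
```

Run it as mem_summary on the gateway, or pass it a saved copy of /proc/meminfo. Low free memory alongside a large buff/cache or available figure points to caching; low free and low available together is what a real leak would look like.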

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


_____________

I have been dealing with CP for almost 15 years and have never seen this type of issue. As @Timothy_Hall advised, please provide the free -m output, as well as top and ps -auxw.

Best,

Andy

_____________

Strange. Try searching "memory leak" in JHF release notes.

We saw very similar behavior a while ago, I believe with R80.30 or R80.40: sudden memory consumption that made the gateway freeze for a moment, followed, of course, by a traffic interruption.

_____________

What do you get from "ps -ef | grep ifi"?

On a couple of gateways I have seen the ifi_server process fail to restart properly, resulting in thousands of processes.

Each process doesn't consume much memory on its own, but together they use a lot...
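To put a number on how much those processes use together, the sketch below counts the ifi_server processes and adds up their resident memory from ps output. It assumes the command lines look like the /tmp/ifi_server entries shown in this thread; the function name ifi_usage is hypothetical. RSS counts shared pages (such as the Python interpreter itself) once per process, so the total is an upper bound rather than an exact figure.

```bash
#!/bin/bash
# Sketch: count ifi_server processes and sum their resident memory.
# Expects "ps -eo rss=,args=" output on stdin (RSS in KiB, then the command line).
ifi_usage() {
    # The pattern is written ifi_serve[r] so that it still matches "ifi_server"
    # but not the text of this awk program when ps lists the awk process itself.
    awk '/ifi_serve[r]/ { n++; kb += $1 }
         END { printf "%d matching processes, %d MB resident\n", n, kb / 1024 }'
}

# On the gateway (Expert mode):
#   ps -eo rss=,args= | ifi_usage
```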

_____________

```
[Expert@Node1:0]# tecli show downloads ifi
TEDIFI Package
==============================================
UID: 48B68893-20D0-45B3-A6E9-426FE4259EDF
Revision: 243
Status: Ready
Size: 52.56MB
Start Download Time: Sun Dec 26 16:08:50 2021

[Expert@Node2:0]# tecli show downloads ifi
TEDIFI Package
==============================================
UID: 48B68893-20D0-45B3-A6E9-426FE4259EDF
Revision: 243
Status: Ready
Size: 52.56MB
Start Download Time: Sun Dec 26 16:08:50 2021
```

--- ifi processes ---

```
[Expert@Node1:0]# ps -ef |grep "ifi"
admin 11417 1 0 16:59 ? 00:00:05 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11419 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11420 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11421 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11422 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11423 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11424 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11428 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11429 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11434 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11439 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11443 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11463 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11464 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11465 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11468 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11476 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11480 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11481 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11482 11417 0 16:59 ? 00:00:03 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11485 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11493 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11499 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11503 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11507 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11511 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11515 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11519 11417 0 16:59 ? 00:00:02 /tmp/ifiPython3 /tmp/ifi_server restart
nobody 11523 11417 0 16:59 ? 00:00:01 /tmp/ifiPython3 /tmp/ifi_server restart
admin 20551 20434 0 17:23 pts/2 00:00:00 grep --color=auto ifi
```

_____________

You can use "cpview -t" to go back to the point when the issue started and review the gateway's other parameters at that time. Also, as others mentioned, show us the output of "free -m".

_____________

Hi,

How do I specify a timestamp of 08 February 2022 with the "cpview -t" command?

When I run "cpview -t", it starts at 23 January 19:43:15...

_____________

Open "cpview -t", then press the "t" key and enter the date in the format shown in the example. You can also use +/- to move back and forth through the history.

_____________

Thank you, Abihost!

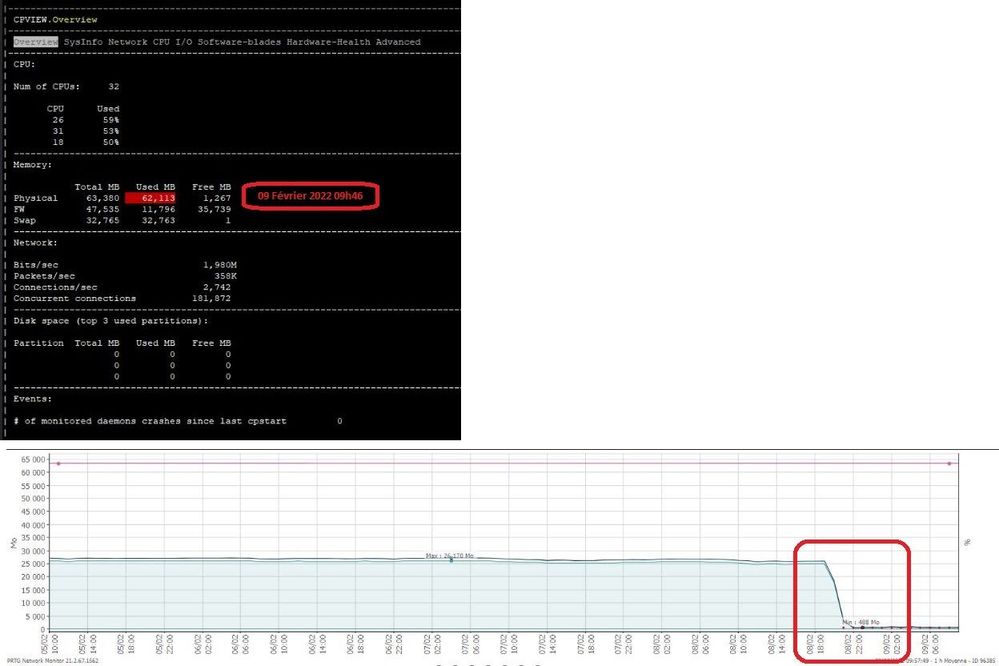

See the first result:

Drop in free physical memory, in megabytes:

38743 (39 GB) 08Feb2022 19:02:41

-- linear decrease -->

937 (0.9 GB) 08Feb2022 21:42:12

So about 38 GB was lost in 2 hours and 40 minutes!
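The two cpview readings above can be turned into an approximate leak rate. The figures are taken straight from that output, and the arithmetic is just the difference in free memory over the elapsed time:

```latex
\begin{aligned}
\Delta M &= 38743 - 937 = 37806\ \text{MB} \\
\Delta t &= 21{:}42{:}12 - 19{:}02{:}41 = 2\,\text{h}\ 39\,\text{min}\ 31\,\text{s} = 9571\ \text{s} \\
\frac{\Delta M}{\Delta t} &= \frac{37806\ \text{MB}}{9571\ \text{s}} \approx 3.95\ \text{MB/s} \approx 237\ \text{MB/min}
\end{aligned}
```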

So what should I check next in the cpview history?

_____________

Sure, I see the same in the attached graph, but please review all the gateway parameters available in the cpview history to see what changed during those 3 hours.

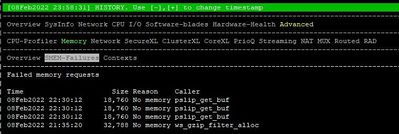

When we had a memory issue, failed memory allocations showed up under Advanced -> Memory -> SMEM-failures.

You can also set up a simple script that captures the processes, sorted by memory consumption, every minute, and then review the log once the issue occurs. If you open a TAC ticket, they will provide a similar script that captures even more data.
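A minimal sketch of such a capture script, assuming bash in Expert mode and the standard procps ps and free commands. The log path, interval, and function names are hypothetical choices, and this is not the TAC script. Each snapshot records free -m, the largest processes by resident memory, and the most common process names, which would also make a pile-up of identical processes obvious.

```bash
#!/bin/bash
# Sketch: periodically log the top memory consumers for later review.
# LOG, INTERVAL and TOP_N are illustrative defaults; override them via the environment.
LOG="${LOG:-/var/log/mem_snapshots.log}"
INTERVAL="${INTERVAL:-60}"   # seconds between snapshots
TOP_N="${TOP_N:-15}"         # number of processes to keep per snapshot

snapshot() {
    echo "=== $(date '+%Y-%m-%d %H:%M:%S') ==="
    free -m
    # Largest resident-memory (RSS) processes first: header line plus TOP_N rows.
    ps -eo pid,ppid,user,rss,etime,args --sort=-rss | head -n "$((TOP_N + 1))"
    # How many processes share each command name, most numerous first.
    ps -eo comm= | sort | uniq -c | sort -rn | head -n 5
}

capture_loop() {
    while true; do
        snapshot >> "$LOG"
        sleep "$INTERVAL"
    done
}

# Example (path is hypothetical): save this as /home/admin/memwatch.sh, then
#   nohup bash -c '. /home/admin/memwatch.sh; capture_loop' >/dev/null 2>&1 &
```

The log grows with every snapshot, so stop the background job once enough data around an incident has been captured.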

_____________

See the results for SMEM failures:

_____________

Node 1 (active) kept handling connections even with less than 1 GB of free physical memory!

No failover to Node 2 (standby), and CPU usage stayed at 35%!

_____________

I've noticed this too, even on Take 30. I think there is some sort of memory leak. I have not opened a case yet.

_____________

Should I check something in this directory?

/var/log/files_repository/Python/A00E6A3D-9EE2-4ADB-8022-DDB302981D54/2

```
[Expert@Node1:0]# ls -la
total 17832
dr-xr-sr-x 3 admin bin 125 Feb 9 19:43 .
drwx------ 3 admin root 15 Oct 5 20:04 ..
-rw-rw---- 1 admin bin 204 Feb 9 19:43 Persistency_conf
dr-xr-sr-x 4 admin bin 28 Nov 20 2017 PythonPack
-r-xr-xr-x 1 admin bin 18244620 Oct 5 20:04 python3.6.2.tar.gz
-r-xr-xr-x 1 admin bin 41 Oct 5 20:04 python3.6.2.tar.gz.sh1
-r-xr-xr-x 1 admin bin 19 Oct 5 20:04 revision_data
```

_____________

The memory leak occurred again yesterday between 17:00 and 19:00 (GMT+1)!

With help from our registered support partner, we had a Zoom meeting with an engineer (Kumar).

He will send us a procedure for making some changes to our configuration and for deploying a diagnostic script to run as soon as the problem occurs again.

We will apply this to both nodes of our cluster next Wednesday, since that is our change window.

Meanwhile, my colleague thinks the problem could be linked to the activation of the NGTX-ICAP module on 15 December 2021, since ICAP doesn't work properly...

But we cannot tell when the first memory leak was detected.

_____________

Hi @dipisitrese!

Hope you are doing well!

Were you able to solve this issue with Support? I'm having the same issue with a customer (R81.10 Take 30), and in their case it is happening daily!

_____________

We can confirm this problem at another customer, in this case on R81.10 Take 38.

The problem is related to the Python script "ifi_server" (as already mentioned). For some reason it starts respawning rapidly, and because the old instances never exit, they all stay in memory.

```
# ps -ef | grep ifi_server | wc -l
9062
```

...so in our case we have 9062 copies of this script in memory.
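One caveat when counting with ps -ef | grep ... | wc -l: the grep process can show up in the ps listing itself, because its own command line contains the search text (as in the earlier listing, where "grep --color=auto ifi" appears as the last line), so the count may be one too high. A common shell idiom avoids this by writing the pattern as [i]fi_server. The helper name count_ifi below is hypothetical.

```bash
#!/bin/bash
# Sketch: count ifi_server processes in "ps -ef" output read from stdin.
# '[i]fi_server' is a regex that matches "ifi_server", while the grep process's
# own command line shows the literal text "[i]fi_server" and is therefore skipped.
count_ifi() {
    grep -c '[i]fi_server'
}

# On the gateway:
#   ps -ef | count_ifi
# pgrep never reports itself, so "pgrep -f ifi_server | wc -l" is an alternative.
```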

"hcp -r all" shows it nicely, too

```
| The top user space table helps to determine which process consumes high memory.
|
| +----------------------------------------------------------------------------------------------+
| | Top user-space processes (aggregated by name)                                                |
| +-----------+----------------+-----------------------------------------+-----------+-----------+
| | Process   | # of processes | Command                                 | RAM used  | Swap used |
| +===========+================+=========================================+===========+===========+
| | python3.6 | 9062           | /tmp/ifiPython3 /tmp/ifi_server restart | 33.1 GiB  | 24.8 GiB  |
| +-----------+----------------+-----------------------------------------+-----------+-----------+
| | fwk       | 9              | fwk                                     | 11.2 GiB  | 0.0 KiB   |
| +-----------+----------------+-----------------------------------------+-----------+-----------+
| | pdpd      | 6              | pdpd 0 -t                               | 4.0 GiB   | 5.0 GiB   |
| +-----------+----------------+-----------------------------------------+-----------+-----------+
| | fw_full   | 8              | fwd                                     | 1.1 GiB   | 312.9 MiB |
| +-----------+----------------+-----------------------------------------+-----------+-----------+
```

It is exactly the same IFI package version as yours:

```
# tecli show downloads ifi
TEDIFI Package
==============================================
UID: 48B68893-20D0-45B3-A6E9-426FE4259EDF
Revision: 243
Status: Ready
Size: 52.56MB
Start Download Time: Sun Dec 26 15:59:25 2021
```

We have an open case with TAC, with no outcome so far.

_____________

You can do a "pkill -9 ifiPython3" to kill all those processes and regain your memory.

We also have cases open (I don't have the case numbers to hand right now), so hopefully this issue will get more attention...

Cheers

_____________

I can confirm the issue is also present in R81.10 Take 44 (currently the Ongoing take).

_____________

I am not sure this problem is related to a particular Jumbo HF, because on our side it started when the latest IFI package was (automatically) updated on Dec. 26, 2021:

```
# tecli show downloads ifi
TEDIFI Package
==============================================
UID: 48B68893-20D0-45B3-A6E9-426FE4259EDF
Revision: 243
Status: Ready
Size: 52.56MB
Start Download Time: Sun Dec 26 15:59:25 2021
```
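Since several posters here correlate the problem with IFI package revision 243 (downloaded on 26 Dec 2021), one quick way to check other gateways is to pull those two fields out of the tecli show downloads ifi output. The sketch below assumes the output format shown in this thread, and the function name is hypothetical. A match only means the gateway has the same package, not that it is affected.

```bash
#!/bin/bash
# Sketch: report the IFI package revision and download time from
# "tecli show downloads ifi" output on stdin (format as shown in this thread).
ifi_revision_check() {
    awk -F': ' '
        { sub(/^[ \t]+/, ""); sub(/[ \t\r]+$/, "") }   # trim; changing $0 re-splits fields
        $1 == "Revision"            { rev = $2 }
        $1 == "Start Download Time" { when = $2 }
        END {
            if (rev == "") { print "no IFI revision found"; exit 1 }
            note = (rev == "243") ? " (same revision as reported in this thread)" : ""
            printf "IFI revision %s, downloaded %s%s\n", rev, when, note
        }'
}

# On the gateway:
#   tecli show downloads ifi | ifi_revision_check
```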

_____________

I can confirm the issue is also present in R81 Take 58.

_____________

Open an SR with our TAC and ask them to look at internal SK176104.


©1994-2025 Check Point Software Technologies Ltd. All rights reserved.
