It takes a long time for VS's to start

Hi All,

We have a customer with three VSX clusters on 6200, 6900 and 16200 hardware. All managed by the same SmartCenter.

Software version is R81.10 on the 6200 and 6900 hardware. Management and 16200 hardware is R81.20.

Hardware was installed about 1.5 years ago and it was a fresh install (re-image) of the software with R81.10 ISO and USB stick.

We are facing reboot / start-up issues with the 16200 VSX cluster. The appliance itself and VS0 are OK after a couple of minutes, but the five VS's on this cluster take 15 minutes to start. When we look at resources, the appliance is not very busy.

We do not see this issue on the two other VSX clusters (with less powerful hardware). They are back online with all VS's after a couple of minutes.

We have involved TAC and they advise us to re-install the appliances and perform a VSX reconfigure. But that is not something the customer is looking forward to.

So has anyone on CheckMates seen this issue before? If so, did you find the cause and a solution?

So to re-cap:

After rebooting a 16200 VSX cluster member, the appliance itself is back after a couple of minutes. And VS0 is Active/Standby depending on the member we are rebooting.

It takes 15 minutes for the VS's to reach the Active/Standby state (depending on the member we are rebooting).

We see the same on both appliances.

Regards,

Martijn

Accepted Solutions

PRJ-45520 included in:

- R81.20 JHF T43 and above

- R81.10 JHF T131 and above

CCSM R77/R80/ELITE

29 Replies

How many VS are hosted on the impacted cluster?

@Magnus-Holmberg & @Kaspars_Zibarts each flagged an issue with something similar to this before and saw improvement with subsequent JHFs:

https://community.checkpoint.com/t5/Security-Gateways/VSX-boot-time/td-p/159666

CCSM R77/R80/ELITE

Chris,

Unfortunately there is no HFA to install because we are on the latest version.

I have worked on many VSX clusters at different customers, but I have never seen a start-up time of 15 minutes for only five VS's.

Anything I can check?

Martijn

Clearly sounds like a bug perhaps related to the combination of R81.20 and 16000 appliance.

I'll do some digging and come back to you.

To help:

- What blades are enabled for the VS?

- Any dynamic routing used?

- How many virtual systems?

- Are there many virtual switches / routers?

CCSM R77/R80/ELITE

I'm with Chris and Jozko here - we would need a little more info to see what the best next step is.

Watching the console during boot is definitely a good idea, to see if any strange errors pop up.

Dynamic routing is often a suspect.

Think of the functional differences between the 6000 appliances you have and the 16000; maybe you can figure out something that stands out, e.g. routing, blades that are enabled on the VSes, or whether all VSes have good connectivity to CP resources.

What does cphaprob stat report on each VS during these 15mins?
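One way to capture what a status command reports during the slow start-up window is to poll it and record a timestamp whenever its output changes. The following is only a minimal sketch: the helper names `run_command` and `watch_changes` are made up for illustration, the polling interval is arbitrary, and how to run `cphaprob stat` inside each VS context is deliberately left out because it depends on the environment.

```python
import subprocess
import time
from datetime import datetime
from typing import Callable, Iterator, List, Tuple


def run_command(cmd: List[str]) -> str:
    """Run a command and return its combined stdout/stderr as text."""
    result = subprocess.run(
        cmd,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        text=True,
        check=False,
    )
    return result.stdout


def watch_changes(
    sample: Callable[[], str],
    interval_s: float,
    max_samples: int,
    sleep: Callable[[float], None] = time.sleep,
    now: Callable[[], datetime] = datetime.now,
) -> Iterator[Tuple[datetime, str]]:
    """Yield (timestamp, output) each time sample() returns something new."""
    previous = None
    for i in range(max_samples):
        current = sample()
        if current != previous:
            yield now(), current
            previous = current
        if i < max_samples - 1:
            sleep(interval_s)


# Hypothetical usage on a cluster member (not executed here):
#
# for stamp, output in watch_changes(
#     lambda: run_command(["cphaprob", "stat"]), interval_s=10, max_samples=120
# ):
#     print(stamp.isoformat(), output, sep="\n")
```

Only state changes are recorded, so a 15 to 20 minute window produces a short timeline rather than hundreds of identical samples.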

Chris,

I got the following information from our customer:

Blades:

Firewall / IPS

Dynamic Routes:

No

Number of VS's:

6

Number of virtual switches:

3

There are static routes configured:

Vs1= 8

Vs2= 33

Vs3= 69

Vs4= 25

Vs5= 323

Vs6= 186

Regards,

Martijn

This certainly sounds like you may need a TAC case. I also found the post Chris mentioned, but it does not appear that a solution was posted there.

Best,

Andy

Did you reboot over the console? Did you notice whether the console is stuck while the VSs are in Lost/Down state and responds again once the VSs are starting?

Kind regards,

Jozko Mrkvicka

Hey,

We have the exact same issue on clusters running everything from R80.40 to R81.20.

We host upwards of 100 VSs on one cluster. The boot time seems to get exponentially worse the more VSs reside on the cluster.

VS0 loads quickly and then the system is idle for 20+ minutes.

Tailing all the elg files I can find, the only relevant info is in routed.elg for each VS, stating something like "VS is not ready".

Hardware: open servers, Lenovo 850 / 32-48 cores

Blades: FW+IPS

No dynamic routing - latest HF

1-4 virtual switches.

There could be a relation when 'propagate to adjacent devices' is used, but I am not sure.

/Henrik
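When hunting through many elg files during the idle window, it can help to list only the ones that were written to recently. Below is a rough sketch of that idea; the function name `recent_elg_files` is invented for illustration, and the directory to scan is a parameter because the log locations on a given system are not stated in this thread.

```python
import os
from typing import List, Tuple


def recent_elg_files(root: str, since_epoch: float) -> List[Tuple[float, str]]:
    """Return (mtime, path) for every *.elg file under root modified at or
    after since_epoch, sorted from oldest to newest modification time."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(".elg"):
                continue
            path = os.path.join(dirpath, name)
            try:
                mtime = os.path.getmtime(path)
            except OSError:
                # File rotated or removed while scanning; skip it.
                continue
            if mtime >= since_epoch:
                hits.append((mtime, path))
    return sorted(hits)
```

Calling it with the boot time as `since_epoch` shows which logs were touched after the reboot, which narrows down where to look while the VSs are not yet up.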

VSX has always been slow to start. Recent experience with R80.40, R81, and R81.20 is at least 10-15 minutes from power on to a cluster member coming up as standby. That's on a range of hardware from open server to massively over-provisioned appliances and a small number of VS'. We had one environment where the upgrade required the base upgrade, a JHF, and a custom hotfix. 30-45 minutes of change window just for reboots on each device.

Despite the efficiency of license/hardware utilisation, it's one of the (many) reasons that I'd be unlikely to recommend VSX unless there is significant scale (service provider environments) to justify the pain.

Just after writing this, have a look at the R82 EA section on VSX:

- Improves VSX provisioning performance and provisioning experience - creating, modifying, and deleting Virtual Gateways and Virtual Switches in Gaia Portal, Gaia Clish, or with Gaia REST API.

https://community.checkpoint.com/t5/Product-Announcements/R82-EA-Program-Production/ba-p/198695

That is a real problem. We have a customer with a VSLS cluster of 15600 with 64 GB RAM, running R81.10. There are 8 VSes in addition to VS0 and 4 virtual switches. No dynamic routing.

It takes between 15 and 20 minutes to reboot one node. We noticed that the load on the systems rises to above 30 one or two minutes after reboot, before falling back to its normal value below 10. That is when the different VSes come to life.

Our customer runs production 24/7, so it is a real problem to get maintenance windows. When each reboot of the cluster alone takes between 45 and 60 minutes, this problem gets even bigger.

I think the problem started with the upgrade from R80.30 to R81.10 and the switch from HA to VSLS. Memory usage rose as well. R80.30 ran with 32 GB of memory; for R81.10, 64 GB is a must in the current configuration. (You do not want to see VSX swapping. Really not.)

@Oliver_Fink - you know that you still can run HA in R81? Changing-VSX-Cluster-Type

But that's beside the point of long boot times 🙂

We did use VSX HA with R81.10 in the beginning. After a long escalation process following the upgrade, which took about six months until we got everything running stably again, we were advised to change to VSLS.

And this note makes me even less confident about continuing to use HA with VSX on R81.10:

| Mode | Description |
|---|---|
| High Availability | Ensures continuous operation by means of transparent VSX Cluster Member failover. All VSX Cluster Members and Virtual Systems function in an the Active/Standby mode and are continuously synchronized. (!) Note - This mode is available only if you upgrade a VSX Cluster from R81 or lower to R81.10. |

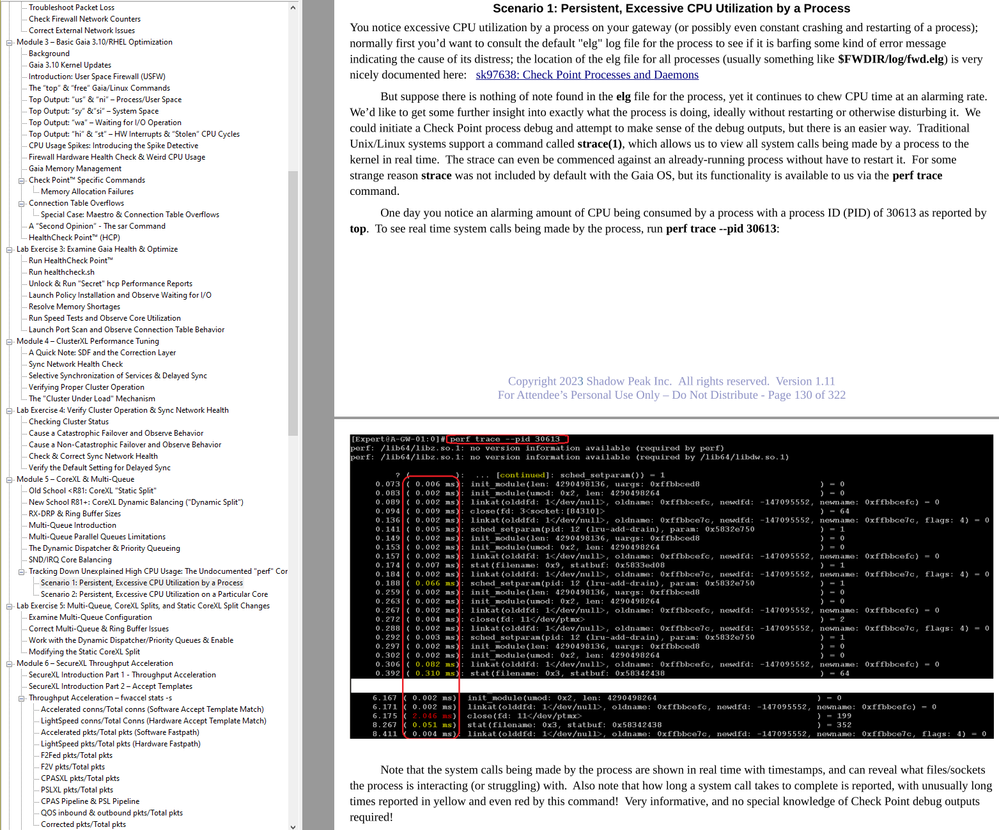

VSX is not really my area, but check these things:

1) DNS servers throughout the VSX system - test with nslookup and make sure all configured DNS servers are reachable and fast...this can cause a lot of startup delays

2) Single-core limits - I've noticed that the vast majority of unaccelerated policy installation time to a R81.20 cluster is spent with process fw_full (fwd) on the gateway pounding a single core to 100% and having to wait for it. I realize processes like this are legacy and single-threaded, but seeing this type of thing on a 32+ core system is maddening.

On your 16200's try running top with all individual core utilizations displaying. While overall CPU usage may look low, watch the individual core utilizations while a single VS is starting up. Is there one CPU sitting at 100% constantly that goes back to idle once the VS is finished? What process was causing that? You can do the equivalent of an strace against the process as described in my Gateway Performance Optimization Course, which may give you insight on what resource (file, socket, etc) was causing the process to get stuck:
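As a rough illustration of the per-core check described above, the sketch below computes per-core busy percentages from two snapshots of the Linux `/proc/stat` counters, so a single saturated core is not hidden by a low overall average. It assumes a Linux host with the standard `/proc/stat` layout; the function names and the 90% threshold are arbitrary choices for this example, and it is not a replacement for `top`.

```python
from typing import Dict, List, Tuple

Snapshot = Dict[str, Tuple[int, int]]  # core name -> (total ticks, idle ticks)


def parse_proc_stat(text: str) -> Snapshot:
    """Parse per-core lines (cpu0, cpu1, ...) from /proc/stat content."""
    cores: Snapshot = {}
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        name = fields[0]
        # Skip the aggregate "cpu" line and non-CPU lines.
        if not (name.startswith("cpu") and name[3:].isdigit()):
            continue
        # user nice system idle iowait irq softirq steal
        values = [int(v) for v in fields[1:9]]
        idle = values[3] + (values[4] if len(values) > 4 else 0)
        cores[name] = (sum(values), idle)
    return cores


def busy_percent(before: Snapshot, after: Snapshot) -> Dict[str, float]:
    """Busy percentage per core between two snapshots."""
    result: Dict[str, float] = {}
    for name, (total_after, idle_after) in after.items():
        if name not in before:
            continue
        total_before, idle_before = before[name]
        delta_total = total_after - total_before
        delta_idle = idle_after - idle_before
        if delta_total <= 0:
            result[name] = 0.0
        else:
            result[name] = 100.0 * (delta_total - delta_idle) / delta_total
    return result


def hot_cores(percentages: Dict[str, float], threshold: float = 90.0) -> List[str]:
    """Names of cores at or above the threshold, in core-number order."""
    hot = [name for name, pct in percentages.items() if pct >= threshold]
    return sorted(hot, key=lambda name: int(name[3:]))
```

Reading `/proc/stat` twice, a second or so apart, and passing both snapshots to `busy_percent` gives the same kind of per-core view as the individual-core display in `top`; identifying which process owns the hot core still requires `top` or a similar tool.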

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Hi Tim,

Was onsite today and we rebooted the standby VSX member and monitored the processes.

After the reboot it took 3 minutes and 40 seconds before the appliance could be reached again and VS0 was standby.

When logging in, we did not see any high load on the cores. This appliance has 48 cores and only 2 or 3 were busy, at around 20%. The processes we noticed were mainly:

cpcgroup

fwget_pdm_interf

fwget_mq_interf

With 'cpwd_admin list' we could only see the processes for CTX started and 'cphaprob stat' did not show any VS's.

Dynamic Split is enabled on the appliances and we noticed this process starts initializing 17 minutes after the reboot. We can see this in 'top'. Once Dynamic Split is initialized and enabled, the VS's are in the correct state after 45 seconds. So 17 minutes and 45 seconds after reboot.

Maybe Dynamic Split has something to do with this?
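To make the timeline from this reboot explicit, the short calculation below uses only the figures reported in this post (all measured from the reboot) and works out how long the box sits between VS0 being up and Dynamic Split starting to initialize. The variable names are just labels for this example.

```python
from datetime import timedelta

# Figures reported for this reboot, all measured from the reboot itself.
vs0_standby = timedelta(minutes=3, seconds=40)   # appliance reachable, VS0 standby
dynamic_split_init = timedelta(minutes=17)       # Dynamic Split starts initializing
vs_ready = dynamic_split_init + timedelta(seconds=45)  # VSs reach the correct state


def fmt(delta: timedelta) -> str:
    """Format a duration as minutes:seconds."""
    total = int(delta.total_seconds())
    return f"{total // 60}:{total % 60:02d}"


# Time between VS0 being up and Dynamic Split starting to initialize.
unexplained_gap = dynamic_split_init - vs0_standby

print("VS0 standby after:       ", fmt(vs0_standby))
print("Dynamic Split init after:", fmt(dynamic_split_init))
print("VSs ready after:         ", fmt(vs_ready))
print("Gap before Dynamic Split:", fmt(unexplained_gap))
```

So roughly 13 minutes and 20 seconds pass with low CPU load before Dynamic Split even begins, while the Dynamic Split phase itself accounts for only about 45 seconds.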

Regards,

Martijn

Hi,

I'd be glad to review any logs you have from the boot procedure, specifically dsd.elg & dynamic_split.elg.

Pls contact me offline at amitshm@checkpoint.com

Thanks,

Amit

Definitely possible, setting manual affinities or tampering with the default Multi-Queue configuration (probably related to the fwget_mq_interf process you are seeing) under VSX with Dynamic Split enabled can cause a variety of issues:

sk179573: Dynamic Balancing is stuck at the initialization state

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Hi Tim,

Thanks for your responses. Appreciate it.

I have sent some files to Amit. Maybe he finds something.

Dynamic Split does initialize, and once that starts, it is enabled quickly. It just takes 17 minutes after the reboot before Dynamic Split starts initializing.

Two years ago this was a green field installation, so we did not change anything in the affinity and MQ settings. We just enabled Dynamic Split, because in R81.10 it was disabled by default for VSX.

Let's see what Amit finds.

Martijn

Hi,

Dynamic Split init duration seems OK.

The delay probably happens somewhere before that. There have been some improvements to boot duration which should be integrated in the upcoming JHF. I've added the relevant people to our offline thread and will let you update accordingly from here on.

Thanks,

Amit

Hi,

Amit and I exchanged some information outside CheckMates and he contacted one of his colleagues.

There will be a fix for this in the upcoming JHF.

For R81.10, the fix number is: PRJ-45520

For R81.20, the fix number is: PRJ-49177

Great to see Check Point engineers read this post and try to help whenever they can.

The power of CheckMates!!

Regards,

Martijn

That sounds great Martijn!

Can you share some details on the fix? Furthermore, are your boxes running xfs or ext3? I saw an sk saying that the latter could cause slow operation on VSX. On a recent greenfield install, this would of course be xfs by default.

/Henrik

Henrik,

I do not have any details on the fix. Got this information from a Check Point engineer. I think we need to wait for the JHF and the release notes.

Our customer is green field. Clean install of R81.10.

Regards,

Martijn

Did you get a private hotfix from Check Point to install on the problematic VSX cluster, in order to confirm that it fixes the issue?

Kind regards,

Jozko Mrkvicka

Hi,

No, we did not ask for a private fix. The Check Point engineer told me the fix will be in the next JHF, and the customer would like to wait for it.

Regards,

Martijn

Hi,

Perfect news, right? 🙂 I hope it will be really fixed and integrated into "next" JHF. The question is just which "next" JHF it will be...

As of 20.12.2023, the latest JHF for R81.10 is 130.

As of 20.12.2023, the latest JHF for R81.20 is 41.

Kind regards,

Jozko Mrkvicka

Let's hope the fix is included in the next recommended jumbo.

Best,

Andy

PRJ-45520 included in:

- R81.20 JHF T43 and above

- R81.10 JHF T131 and above

CCSM R77/R80/ELITE
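For anyone checking a fleet against the takes listed above, a small lookup like the following could flag members that still need the Jumbo Hotfix. This is only an illustrative sketch: the minimum takes are copied from this post, the function name `fix_included` is made up, and how the installed take number is obtained is left to the reader.

```python
from typing import Optional

# Minimum Jumbo Hotfix take that contains the fix, per the post above.
MIN_TAKE_WITH_FIX = {
    "R81.20": 43,
    "R81.10": 131,
}


def fix_included(version: str, take: int) -> Optional[bool]:
    """Return True/False if the take is at or above the listed minimum,
    or None when the version is not covered by the list above."""
    minimum = MIN_TAKE_WITH_FIX.get(version)
    if minimum is None:
        return None
    return take >= minimum


# The latest takes mentioned earlier in the thread (as of 20.12.2023)
# predate the fix.
print(fix_included("R81.10", 130))  # False
print(fix_included("R81.20", 41))   # False
print(fix_included("R81.20", 43))   # True
```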
