Installation of Gaia / Partitioning of disks on 16000/23000 appliances during a fresh installation

Dears,

We would like to know whether it is possible or not to change the size of the partitions on a 16000 and a 23800 appliance.

Both the 16000 and 23800 appliances have 2 SSDs of 480 GB, configured in RAID 1.

When we start the installation of Gaia on the 16000 and 23800 appliances, using the R80.40 ISO, we don't have the option to change the size of the partitions. This is very unfortunate when you would like to use VSX.

When the unattended installation is finished, we see:

- For the 23800 appliance: 32 GB for lv_current, 192 GB for lv_log (file system: xfs).

- For the 16000 appliance: 32 GB for lv_current, 292 GB for lv_log (file system: xfs).

On the 23800 appliances (VSX/VSLS) we found out in 2019 (the hard way) that 32 GB is not enough to host 25 virtual systems; we used lvm_manager to extend lv_current to 64 GB; at the moment 58 GB is consumed.

Our plan is to host 15 virtual systems on the 16000 appliances (VSX/VSLS). Because the lv_log is 292 GB in size, we can't expand the lv_current partition to 64 GB (it is expanded to 48 GB). Taking a snapshot is barely possible. Please note that reducing the size of lv_log is not supported.

Questions:

- How does the Gaia installer calculate/determine the size of the partitions?

- Why is the size of lv_log different on the 16000 and 23800 appliances, even though both use SSDs of the same size (i.e. 480 GB)?

- Is it possible to define the size of the partitions on the 16000/23800 appliances during the installation of GAIA?

- Any other suggestions?

Notes:

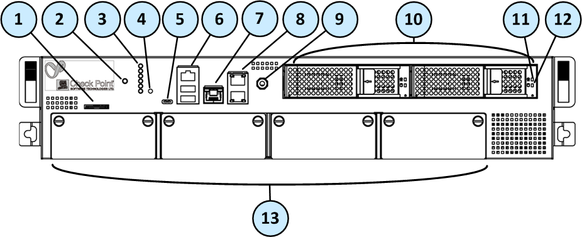

- Check Point TAC and R&D suggested adding an additional disk to the system. We are not sure how that can be done on the 16000/23800 appliances, where RAID 1 is configured and both slots (see the picture, label 10) are occupied by 480 GB SSDs.

- Sizing of appliances was done by Check Point and NTT. They were aware that we had to host 15 virtual systems.

- Attached you'll find the overview of the partition sizes (partitions.txt) for Check Point 16000 and 23800 appliances.

Thank you in advance for your feedback.

Kind regards,

Kris Pellens

15 Replies


I haven't done it on a physical appliance in a while, but you should be able to, with an interactive install, set the partition sizes to your specifications.

Offhand, I don’t know if this can be specified with a non-interactive installation, which I assume was done with a USB drive prepared with ISOmorphic.


1. They are set by our Check Point design team per appliance, taking into account usage (Mgmt/GW), disk space, maximum supported memory (memory affects both the swap partition and the kernel core dump size), and other factors. The sizes are hard-coded per disk size per appliance.

2. Different HW specs (memory support and other aspects). The sizes can always be modified via lvm_manager after installation (with xfs, a volume can only be increased).

3. No, it is not supported (only on VMs, open servers, Smart-1 3050, Smart-1 3150, Smart-1 5050, and Smart-1 5150).


Very interesting discussion.

Can someone please explain the root partition limitation with virtual systems?

What is the problem: the number of VSes, the number of interfaces per VS, or something else?

Is there an official statement available from Check Point?

Maybe some of the VSX guys here have had a similar experience. @Kaspars_Zibarts @Maarten_Sjouw @HeikoAnkenbrand @Danny

Wolfgang


Thank you.

On the R80.40 ISO there's indeed a file (appliance_configuration.xml) where per appliance the sizes for the partitions (volumes) for lv_current and lv_log are defined. E.g. for a 16000 T with a RAID 1:

    <appliance_partitioning>
      <layout min_disksize="150528M" max_disksize="491520M">
        <volume name="lv_current">32768M</volume>
        <volume name="lv_log">299008M</volume>
        <volume name="lv_fcd">8192M</volume>
        <volume name="hwdiag">1024M</volume>
        <volume name="max_swap">32768M</volume>
      </layout>
      <layout min_disksize="491520M">
        <volume name="lv_current">32768M</volume>
        <volume name="lv_log">364544M</volume>
        <volume name="lv_fcd">8192M</volume>
        <volume name="hwdiag">1024M</volume>
        <volume name="max_swap">32768M</volume>
      </layout>
    </appliance_partitioning>

Because lv_current is only 32 GB (on a 480 GB RAID 1), and because we can't increase it by much, the number of virtual systems on which you can enable some software blades is very limited, i.e. fewer than 15. As soon as you increase the size of lv_current, there is no longer enough space to create a snapshot.

Any suggestions on how to provision 15 virtual systems on a 48 GB lv_current volume?
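To make the layout definition quoted above easier to reason about, here is a minimal Python sketch that parses it, picks the layout matching a given disk size, and sums the volume sizes. It is only an illustration: the helper names (`mib`, `pick_layout`, `volume_sizes`) are made up for this example, the 480 GB to MiB conversion is a rough estimate of the usable RAID 1 size, treating `max_disksize` as an exclusive upper bound is an assumption, and counting `max_swap` as fully allocated may overstate the real allocation. How the Gaia installer actually evaluates this file is not documented in this thread.

```python
import xml.etree.ElementTree as ET

# Layout definition copied from the post above (R80.40 ISO, 16000 T, RAID 1).
LAYOUT_XML = """
<appliance_partitioning>
  <layout min_disksize="150528M" max_disksize="491520M">
    <volume name="lv_current">32768M</volume>
    <volume name="lv_log">299008M</volume>
    <volume name="lv_fcd">8192M</volume>
    <volume name="hwdiag">1024M</volume>
    <volume name="max_swap">32768M</volume>
  </layout>
  <layout min_disksize="491520M">
    <volume name="lv_current">32768M</volume>
    <volume name="lv_log">364544M</volume>
    <volume name="lv_fcd">8192M</volume>
    <volume name="hwdiag">1024M</volume>
    <volume name="max_swap">32768M</volume>
  </layout>
</appliance_partitioning>
"""


def mib(text):
    """Convert a size string such as '32768M' to an integer number of MiB."""
    return int(text.strip().rstrip("M"))


def pick_layout(root, disk_mib):
    """Return the first <layout> whose size range contains disk_mib.

    Assumption: min_disksize is inclusive and max_disksize is exclusive.
    """
    for layout in root.findall("layout"):
        low = mib(layout.get("min_disksize", "0M"))
        high = layout.get("max_disksize")
        if disk_mib >= low and (high is None or disk_mib < mib(high)):
            return layout
    return None


def volume_sizes(layout):
    """Map each volume name in a layout to its size in MiB."""
    return {v.get("name"): mib(v.text) for v in layout.findall("volume")}


root = ET.fromstring(LAYOUT_XML)
disk_mib = 480 * 10**9 // 2**20  # rough size of a "480 GB" disk in MiB (assumption)
sizes = volume_sizes(pick_layout(root, disk_mib))
allocated = sum(sizes.values())  # counts max_swap as fully allocated (assumption)

print(sizes)
print(f"allocated by layout: {allocated} MiB ({allocated / 1024:.0f} GiB)")
```

Under these assumptions the first layout is selected, which matches the 292 GB lv_log reported for the 16000 appliance (299008M is 292 GiB). The real unallocated space should be read from the appliance itself, since boot partitions and volume-group overhead are not covered by this file.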


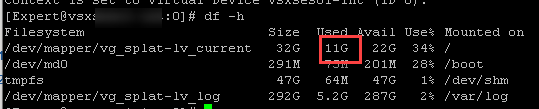

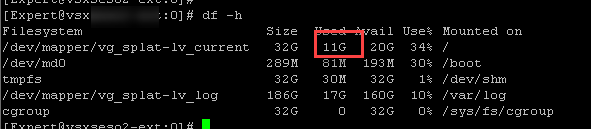

Interesting - we never had any issues with root partition on our appliances. This is screenshot from 26000T running R80.30 T219 and 20 VSes

and 23800 running R80.40 T78 with only 6 VSes

Can it be you are writing a lot in your user directories with some scripts? /home/xxx? Place your stuff then in /var/log/ instead


@Kaspars_Zibarts thanks.

We see the same on all of our customers' VSX deployments. We have never had a problem with this.

@Kris_Pellens you wrote "On the 23800 appliances (VSX/VSLS) we found out in 2019 (the hard way) that 32 GB is not enough to host 25 virtual systems; we used lvm_manager to extend lv_current to 64 GB; at the moment 58 GB is consumed."

Which directories are consuming the space? Is there any statement from TAC or R&D?

Wolfgang


@Kris_Pellens Looking at your other article (Check Point 23800 appliances / VSX/VSLS / Files in /tmp folder) about the /tmp directory being large, that seems to be your problem. You need to check what's abusing /tmp 🙂 17 GB is way too much.
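One way to find out what is filling /tmp is to total the file sizes under each top-level entry and sort the result. The sketch below does that in plain Python; the function names `dir_sizes` and `top_consumers` are made up for this example, and it reports apparent file sizes rather than allocated disk blocks, so the numbers can differ slightly from what `du` shows. Whether a suitable Python interpreter is available on a given Gaia version is not something this thread confirms.

```python
import os


def dir_sizes(top):
    """Return {entry name: total bytes} for each immediate child of top.

    Symbolic links are skipped so nothing outside top is counted.
    """
    totals = {}
    for entry in os.scandir(top):
        if entry.is_symlink():
            continue
        try:
            if entry.is_file():
                totals[entry.name] = entry.stat().st_size
            elif entry.is_dir():
                size = 0
                for root, _dirs, files in os.walk(entry.path):
                    for name in files:
                        path = os.path.join(root, name)
                        try:
                            if not os.path.islink(path):
                                size += os.path.getsize(path)
                        except OSError:
                            pass  # file vanished or is unreadable
                totals[entry.name] = size
        except OSError:
            pass  # entry vanished or is unreadable
    return totals


def top_consumers(top, n=10):
    """Return the n largest immediate children of top as (name, bytes) pairs."""
    sizes = dir_sizes(top)
    return sorted(sizes.items(), key=lambda kv: kv[1], reverse=True)[:n]
```

Calling `top_consumers("/tmp")` and printing the pairs gives a quick ranking of the biggest files and directories, which is usually enough to spot the process or script that is leaving data behind.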


Hello Kaspars,

Thank you for your feedback. The /tmp directory does indeed require a cleanup. 😁

May I ask you how many blades you have turned on, on the appliance where you have 20 virtual systems?

On our 23800 appliances, we have 25 virtual systems:

- On all of them we have turned on the following blades: Firewall, Monitoring, IPS, Anti-Virus, Anti-Bot.

- Additionally, on many of them we have turned on: Application Control, URL Filtering, Identity Awareness, and Content Awareness.

/tmp is 17 GB, /opt is 8.6 GB (see du.txt).

One day we had an outage because lv_current had no space anymore. Then Check Point TAC expanded lv_current from 32 GB to 64 GB.

Our concern: we may face the same in the future on 16000 T appliances.

Kind regards,

Kris Pellens


Hello Wolfgang,

Thank you for your feedback. /tmp is consuming the most, followed by /opt. I included the du output in the other reply.

Also, the more blades we turn on, the more disk space is consumed on lv_current, obviously.

May I ask you which blades you have turned on, on those virtual systems?

Thank you.

Regards,

Kris


I'm afraid we're only using FW and IA on those. But I'm sure /tmp can be cleaned up; you just need to find what's causing the most pain.


Did you try addressing this with a TAC case?


ADMIN NOTE: some confidential info has been removed.

Hi Val,

Thank you for your feedback. In March 2019 for the 23800s (VSX with 25 virtual systems): SR #**********. Here TAC resized lv_current from 32 GB to 64 GB. In November 2020 for the 16000s (VSX with 15 virtual systems): SR#***************. Here I decided to increase to 48 GB in order to make sure that one day I don't have to write an outage report to the director. 😁 Because of the resize, I will soon not be able to take snapshots (it's sometimes good to have snapshots ... remember Schrödinger's cat).

We have a lot of blades enabled on the virtual systems. On the 23800s with 25 virtual systems, lv_current usage never dropped below 32 GB again.

Today, I received from relevant group manager (SR#**************) the following message:

"********************.

This currently has to be taken up with the local office to understand the best recommended setup for hosting the number of Virtual Systems as the recommendations on the disk space required."

In the afternoon I have a discussion with the sales team to see what can be done in the long run.

Many thanks. And also thank you for the great sessions you give.

Kind regards,

Kris Pellens


I will check and get back to you


Dear Val,

Trust you are doing well.

Have you received any feedback from R&D?

We are no longer able to create a snapshot on the 16000T appliances, even though we have plenty of space left on lv_log.

In the end, it boils down to someone at Check Point modifying the appliance partitioning definition, see below.

Your promised feedback is highly appreciated.

Kind regards,

Kris

    <appliance_partitioning>
      <layout min_disksize="150528M" max_disksize="491520M">
        <volume name="lv_current">32768M</volume>
        <volume name="lv_log">299008M</volume>
        <volume name="lv_fcd">8192M</volume>
        <volume name="hwdiag">1024M</volume>
        <volume name="max_swap">32768M</volume>
      </layout>
      <layout min_disksize="491520M">
        <volume name="lv_current">32768M</volume>
        <volume name="lv_log">364544M</volume>
        <volume name="lv_fcd">8192M</volume>
        <volume name="hwdiag">1024M</volume>
        <volume name="max_swap">32768M</volume>
      </layout>
    </appliance_partitioning>
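The trade-off described in this reply (growing lv_current eats into the space needed for snapshots, while free space inside lv_log does not help) can be sketched as simple arithmetic. The Python below assumes that a snapshot needs unallocated volume-group space proportional to the size of the root volume; the default factor of 1.15 and the 70000 MiB of unallocated space are illustrative assumptions, not values confirmed in this thread, and the function names are made up for this example. The real unallocated figure should be read from the appliance (for instance from what lvm_manager reports).

```python
def snapshot_fits(unallocated_mib, root_lv_mib, factor=1.15):
    """True if the unallocated space covers the assumed snapshot requirement."""
    return unallocated_mib >= root_lv_mib * factor


def max_root_size(unallocated_mib, root_lv_mib, factor=1.15):
    """Largest root volume size (MiB) that still leaves room for one snapshot.

    Growing the root volume from R0 to R consumes (R - R0) MiB of unallocated
    space, so the condition  U - (R - R0) >= factor * R  gives
    R <= (U + R0) / (1 + factor).
    """
    return int((unallocated_mib + root_lv_mib) / (1 + factor))


# Hypothetical starting point: 32 GiB root volume, 70000 MiB unallocated.
unallocated = 70000
root = 32768

print(snapshot_fits(unallocated, root))                    # before resizing
grown = 49152                                              # root grown to 48 GiB
print(snapshot_fits(unallocated - (grown - root), grown))  # after resizing
print(max_root_size(unallocated, root))
```

With these made-up numbers a snapshot fits before the resize but not after it, regardless of how much free space lv_log has, because lv_log is already allocated and xfs volumes cannot be shrunk.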


There is an internal thread in progress, led by your account manager. I understand the gravity of the situation. Let me check again.
