/var/log/messages keeps showing "Stopping CUL mode"

I keep seeing the messages below in /var/log/messages. We have also noticed that our CPU utilization at times peaks above 80%. For the last 24 hours we have been experiencing degraded network performance and latency for the devices sitting behind the firewall. Please advise.

Apr 8 10:33:13 2021 cor-fw02 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 17 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Apr 8 10:33:19 2021 cor-fw02 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-02 usage (84%) on the local member increased above the configured threshold (80%).

Apr 8 10:33:30 2021 cor-fw02 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 11 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Apr 8 10:33:35 2021 cor-fw02 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-02 usage (82%) on the local member increased above the configured threshold (80%).

Apr 8 10:33:49 2021 cor-fw02 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 14 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Apr 8 10:33:50 2021 cor-fw02 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-02 usage (81%) on the local member increased above the configured threshold (80%).

Apr 8 10:34:00 2021 cor-fw02 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Apr 8 10:34:04 2021 cor-fw02 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-02 usage (85%) on the local member increased above the configured threshold (80%).

Apr 8 10:34:15 2021 cor-fw02 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Apr 8 10:34:18 2021 cor-fw02 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-02 usage (89%) on the local member increased above the configured threshold (80%).

Apr 8 10:34:28 2021 cor-fw02 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Apr 8 10:34:44 2021 cor-fw02 kernel: [fw4_0];[10.1.3.230:28091 -> 10.45.44.112:25] [ERROR]: fileapp_parser_smtp_process_content_type: invalid state sequence

Apr 8 10:34:44 2021 cor-fw02 kernel: [fw4_0];[10.1.3.230:28091 -> 10.45.44.112:25] [ERROR]: fileapp_parser_execute_ctx: context 68 func failed

Apr 8 10:34:44 2021 cor-fw02 kernel: [fw4_0];[10.1.3.230:28091 -> 10.45.44.112:25] [ERROR]: fileapp_parser_print_execution_failure_info: encountered internal error on context 68

Apr 8 10:34:44 2021 cor-fw02 kernel: [fw4_0];[10.1.3.230:28091 -> 10.45.44.112:25] [ERROR]: fileapp_parser_print_execution_failure_info: parser_ins: flags = 1, state = 7

Apr 8 10:34:44 2021 cor-fw02 kernel: [fw4_0];[10.1.3.230:28091 -> 10.45.44.112:25] [ERROR]: fileapp_parser_print_execution_failure_info: parser_opq: type = 3, filename = NULL, uuid = 190975, flags = 93, handler type = -1

Apr 8 10:34:44 2021 cor-fw02 kernel: [fw4_0];[10.1.3.230:28091 -> 10.45.44.112:25] [ERROR]: fileapp_parser_print_execution_failure_info: failed for conn_key: <dir 0, 10.1.3.230:28091 -> 10.45.44.112:25 IPP 6>
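A quick way to see how often CUL mode is triggered, and by which CPU, is to summarize the `Starting CUL mode` lines. The following is a minimal sketch, not a Check Point tool: the function name `cul_summary` is made up for illustration, and it assumes the message format shown in the CUL lines of the excerpt above.

```shell
# Hypothetical helper: reads syslog lines on stdin and prints, per CPU,
# how many times CUL mode was started and the highest usage reported.
cul_summary() {
  awk '
    /Starting CUL mode because CPU-/ {
      # Pull out e.g. "CPU-02 usage (84%)" from the message
      if (match($0, /CPU-[0-9]+ usage \([0-9]+%\)/)) {
        s = substr($0, RSTART, RLENGTH)
        split(s, parts, " ")
        cpu = parts[1]
        pct = parts[3]
        gsub(/[^0-9]/, "", pct)          # "(84%)" becomes "84"
        count[cpu]++
        if (pct + 0 > max[cpu]) max[cpu] = pct + 0
      }
    }
    END {
      for (cpu in count) printf "%s starts=%d max=%d%%\n", cpu, count[cpu], max[cpu]
    }
  ' | sort
}
```

It could be run as `cul_summary < /var/log/messages`. A single CPU dominating the summary points toward whatever is pinned to that core; an even spread points toward overall load.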

We are running the following OS version:

[Expert@cor-fw02:0]# clish -c "show version all"

Product version Check Point Gaia R80.40

OS build 294

OS kernel version 3.10.0-957.21.3cpx86_64

OS edition 64-bit

1 Solution

Accepted Solutions


Spike detective logs are attached.

30 Replies


CUL stands for Cluster Under Load. It is not the cause of the slowness; rather, it is an additional indication that your firewall appliances are experiencing performance issues. You need to analyse what is causing CPU-02 to spike above 80% and rectify the root cause.


Hello,

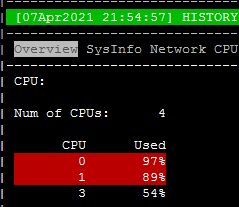

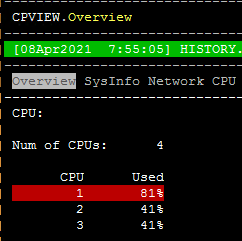

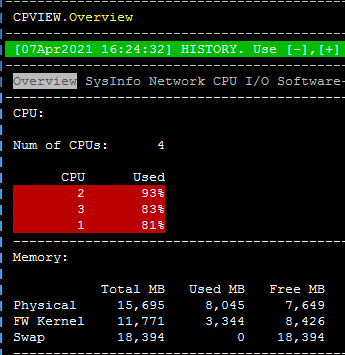

Thanks for the response. It is not just CPU 2 that is peaking. I pulled some history from cpview -t (see the screenshots): all of the CPUs are peaking. Could you point me to where I should start checking?


Fine, but this does not change the recommendation. You need to investigate the root cause before anything else.


Agree with Val: CUL is just a symptom of the underlying problem, not the cause. If you have at least Jumbo HFA 69 loaded, Spike Detective might be helpful.

The next step is to determine which execution mode (user or kernel) the heavy CPU utilization is occurring in. Try running the expert-mode command sar, which shows that day's CPU statistics. Beyond that, please provide the output of the Super Seven commands for further analysis:

S7PAC - Super Seven Performance Assessment Command.
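To see whether the load is mostly user-space or kernel time, the per-CPU rows of `cpstat os -f multi_cpu` (one of the Super Seven commands) can be reduced to a one-line verdict per core. This is a rough sketch that assumes the pipe-delimited table layout that command prints in this thread; the function name `cpu_mode_split` is hypothetical.

```shell
# Hypothetical helper: reads `cpstat os -f multi_cpu` output on stdin and
# reports, for each CPU row, whether system (kernel) or user time is larger.
cpu_mode_split() {
  awk -F'|' '
    # Data rows look like: | 1| 0| 40| 60| 40| ?| 302|
    # Header and separator lines have no number in the CPU# column and are skipped.
    $2 ~ /^ *[0-9]+ *$/ {
      cpu = $2 + 0; user = $3 + 0; sys = $4 + 0
      mode = (sys > user) ? "kernel" : "user"
      printf "cpu=%d user=%d system=%d dominant=%s\n", cpu, user, sys, mode
    }
  '
}
```

Feeding it a saved copy of the output is the simplest use, for example `cpu_mode_split < cpstat.txt`. High system time on the firewall worker cores generally means the load is in kernel-level traffic inspection rather than in a user-space daemon.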

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course


Hi Timothy and Val, thanks for the quick response. Please see the Super Seven output below.

[Expert@cor-fw02:0]# fwaccel stat

+---------------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+---------------------------------------------------------------------------------+

|0 |SND |enabled |eth1,eth5,eth2,eth4,Sync |Acceleration,Cryptography |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,NULL,3DES,DES,AES-128, |

| | | | |AES-256,ESP,LinkSelection, |

| | | | |DynamicVPN,NatTraversal, |

| | | | |AES-XCBC,SHA256,SHA384 |

+---------------------------------------------------------------------------------+

Accept Templates : enabled

Drop Templates : enabled

NAT Templates : enabled

[Expert@cor-fw02:0]# fwaccel stats -s

Accelerated conns/Total conns : 310/16733 (1%)

Accelerated pkts/Total pkts : 2767685456/2828887261 (97%)

F2Fed pkts/Total pkts : 61201805/2828887261 (2%)

F2V pkts/Total pkts : 12077955/2828887261 (0%)

CPASXL pkts/Total pkts : 94279305/2828887261 (3%)

PSLXL pkts/Total pkts : 2413309861/2828887261 (85%)

CPAS pipeline pkts/Total pkts : 0/2828887261 (0%)

PSL pipeline pkts/Total pkts : 0/2828887261 (0%)

CPAS inline pkts/Total pkts : 0/2828887261 (0%)

PSL inline pkts/Total pkts : 0/2828887261 (0%)

QOS inbound pkts/Total pkts : 1190521646/2828887261 (42%)

QOS outbound pkts/Total pkts : 1352420783/2828887261 (47%)

Corrected pkts/Total pkts : 0/2828887261 (0%)

[Expert@cor-fw02:0]# grep -c processor /proc/cpuinfo

4

[Expert@cor-fw02:0]# fw ctl affinity -l -r

CPU 0: eth1 eth5 eth2 eth4 Sync

CPU 1: fw_2

in.emaild.mta cp_file_convertd fwd scrub_cp_file_convertd scanengine_s mpdaemon rad vpnd pdpd scanengine_b in.acapd rtmd scrubd pepd in.geod mta_monitor wsdnsd lpd usrchkd watermark_cp_file_convertd fgd50 cprid cpd

CPU 2: fw_1

in.emaild.mta cp_file_convertd fwd scrub_cp_file_convertd scanengine_s mpdaemon rad vpnd pdpd scanengine_b in.acapd rtmd scrubd pepd in.geod mta_monitor wsdnsd lpd usrchkd watermark_cp_file_convertd fgd50 cprid cpd

CPU 3: fw_0

in.emaild.mta cp_file_convertd fwd scrub_cp_file_convertd scanengine_s mpdaemon rad vpnd pdpd scanengine_b in.acapd rtmd scrubd pepd in.geod mta_monitor wsdnsd lpd usrchkd watermark_cp_file_convertd fgd50 cprid cpd

All:

[Expert@cor-fw02:0]# netstat -ni

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

Sync 1500 0 3056044 0 0 0 16189862 0 0 0 BMRU

eth1 1500 0 277465925 0 0 0 277025714 0 0 0 BMRU

eth2 1430 0 166346 0 0 0 173374 0 0 0 BMRU

eth4 1500 0 1406127355 0 702 702 1233970185 0 0 0 BMRU

eth5 1500 0 1214968288 0 0 0 1398640000 0 0 0 BMRU

lo 65536 0 5776315 0 0 0 5776315 0 0 0 LMNRU

vpnt110 1500 0 0 0 0 0 0 0 0 0 MOPRU

vpnt130 1500 0 0 0 0 0 0 0 0 0 MOPRU

[Expert@cor-fw02:0]# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 3 | 6016 | 22288

1 | Yes | 2 | 5957 | 22284

2 | Yes | 1 | 6019 | 22274

[Expert@cor-fw02:0]# cpstat os -f multi_cpu -o 1

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 40| 60| 40| ?| 302|

| 2| 9| 37| 54| 46| ?| 290|

| 3| 7| 38| 55| 45| ?| 290|

| 4| 7| 39| 54| 46| ?| 291|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 40| 60| 40| ?| 302|

| 2| 9| 37| 54| 46| ?| 290|

| 3| 7| 38| 55| 45| ?| 290|

| 4| 7| 39| 54| 46| ?| 291|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 1| 41| 59| 41| ?| 222479|

| 2| 5| 37| 58| 42| ?| 222487|

| 3| 7| 32| 61| 39| ?| 222487|

| 4| 6| 38| 56| 44| ?| 111244|

---------------------------------------------------------------------------------


Question: is this something new, or have you had this issue before? Is just one member showing this, or both? Have you tried failing over? Also, running ps -auxw shows per-process CPU and memory utilization, so you can see what is actually "eating" resources.

Best,

Andy
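The `ps` suggestion above is easier to read when the output is reduced to the heaviest processes. A minimal sketch, assuming the standard `ps aux` column order (USER, PID, %CPU, ..., COMMAND); the function name `top_cpu_procs` is made up for illustration.

```shell
# Hypothetical helper: reads `ps auxw` output on stdin and prints the
# top N processes by %CPU as "<%CPU> <PID> <COMMAND>". N defaults to 5.
top_cpu_procs() {
  n="${1:-5}"
  # Skip the header row, keep %CPU (col 3), PID (col 2), command name (col 11)
  awk 'NR > 1 { printf "%s %s %s\n", $3, $2, $11 }' | sort -rn | head -n "$n"
}
```

For example, `ps auxw | top_cpu_procs 10`. Note that `ps` reports CPU averaged over each process lifetime, so a short spike may not stand out; running it while CUL messages are being logged gives the best chance of catching the culprit.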


Hmm, everything looks pretty well balanced; the firewall is just quite busy. It looks like you have a large number of blades enabled, so please provide the output of the enabled_blades command.

One slightly unusual thing is that you have the QoS blade enabled which will cause a fair amount of CPU overhead. I assume you are relying on the QoS blade for prioritization of voice/video? If not you should turn it off. Also be careful about applying QoS between internal high-speed LANs, normally you'll just want QoS for traffic traversing your Internet-facing network interface.

Your PSLXL traffic % is pretty high, make sure that rules enforcing APCL/URLF are doing so only for traffic to and from the Internet, and not between high-speed internal LAN networks. Next step would be to look over your Threat Prevention policy and identify situations where it is being asked to scan traffic between high-speed internal LAN networks, and try to minimize that if possible with a "null" TP profile (which has all five blades unchecked) applied to high-speed internal traffic at the top of the TP policy. TP exceptions will not help in this case.
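To track how the path distribution changes after a policy adjustment, the `fwaccel stats -s` counters can be recomputed with more precision than the rounded percentages in the output. A small sketch, assuming the `name pkts/Total pkts : count/total (pct%)` line format shown in this thread; the function name `path_share` is hypothetical.

```shell
# Hypothetical helper: reads `fwaccel stats -s` output on stdin and prints
# each packet path with its share of total packets to one decimal place.
path_share() {
  awk -F':' '
    /pkts\/Total pkts/ {
      name = $1
      sub(/ *pkts\/Total pkts *$/, "", name)   # keep only the path name
      n = $2
      sub(/^ +/, "", n)                        # drop leading spaces
      sub(/ .*/, "", n)                        # drop the rounded "(85%)" part
      split(n, f, "/")                         # f[1] = path pkts, f[2] = total pkts
      if (f[2] + 0 > 0) printf "%s %.1f%%\n", name, 100 * f[1] / f[2]
    }
  '
}
```

Run as `fwaccel stats -s | path_share` before and after a change. Keep in mind the counters are cumulative, so a drop in the PSLXL share will only show up gradually unless the statistics are reset or the deltas between two runs are compared.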


Do you have a high number of VPN connections, especially site-to-site? What is the model of your gateway?

During the pandemic, with many users working from home, I have seen CPU usage trend upward for my customers, particularly those with 5200 or 5400 gateways. These models do not support AES-NI, which means more CPU interrupts are required for VPN traffic.

An interesting read is the "Best Practices - VPN Performance" article (sk105119).


Hello, we are facing the same issue: no performance impact, but steady CUL messages in the messages file on 4 clusters running R80.40 on open servers. Were you able to find the root cause?


Which JHF take and how many cores are licensed on those machines out of interest?

CCSM R77/R80/ELITE


JHF 180; 4 cores are licensed, but 8 are present.


It just means your CPUs are running hot and the ClusterXL dead timer has been tripled from roughly 2 seconds to ~6 seconds while CUL is in effect. If the active member catastrophically fails during that time, you will experience a longer delay/outage before the standby transitions to active. I would need to see the Super Seven outputs and the output of enabled_blades to figure out why you are having CPU issues: S7PAC - Super Seven Performance Assessment Command
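Since the longer failover detection applies only while CUL is in effect, it can be useful to know how much time the cluster actually spends in that state. A minimal sketch that adds up the durations from the `Stopping CUL mode after N sec` messages; the function name `cul_total_seconds` is made up for illustration, and the message format is assumed to match the logs posted in this thread.

```shell
# Hypothetical helper: reads syslog lines on stdin and prints the total
# number of seconds spent in CUL mode, based on the "Stopping" messages.
cul_total_seconds() {
  awk '
    /Stopping CUL mode after [0-9]+ sec/ {
      match($0, /after [0-9]+ sec/)
      # "after " is 6 characters and " sec" is 4, so the number sits between them
      total += substr($0, RSTART + 6, RLENGTH - 10)
    }
    END { print total + 0 }
  '
}
```

For example, `cul_total_seconds < /var/log/messages`. Dividing the result by the time span the log covers gives a rough fraction of time during which failover would be slower than normal.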


Thank you for the information. Please find the Super Seven output below.

[Expert@:0]# fwaccel stat

+---------------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+---------------------------------------------------------------------------------+

|0 |SND |enabled |eth0,eth5,eth1,eth3 |Acceleration,Cryptography |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,NULL,3DES,DES,AES-128, |

| | | | |AES-256,ESP,LinkSelection, |

| | | | |DynamicVPN,NatTraversal, |

| | | | |AES-XCBC,SHA256,SHA384 |

+---------------------------------------------------------------------------------+

Accept Templates : disabled by Firewall

Layer erv_rz2fwc1 Security disables template offloads from rule #118

Throughput acceleration still enabled.

Drop Templates : enabled

NAT Templates : disabled by Firewall

Layer erv_rz2fwc1 Security disables template offloads from rule #118

Throughput acceleration still enabled.

[Expert@rz2fwg2:0]#

[Expert@rz2fwg2:0]# fwaccel stats -s

Accelerated conns/Total conns : 52/4330 (1%)

Accelerated pkts/Total pkts : 48513062758/49294910628 (98%)

F2Fed pkts/Total pkts : 781847870/49294910628 (1%)

F2V pkts/Total pkts : 201423049/49294910628 (0%)

CPASXL pkts/Total pkts : 0/49294910628 (0%)

PSLXL pkts/Total pkts : 30167845360/49294910628 (61%)

CPAS pipeline pkts/Total pkts : 0/49294910628 (0%)

PSL pipeline pkts/Total pkts : 0/49294910628 (0%)

CPAS inline pkts/Total pkts : 0/49294910628 (0%)

PSL inline pkts/Total pkts : 0/49294910628 (0%)

QOS inbound pkts/Total pkts : 0/49294910628 (0%)

QOS outbound pkts/Total pkts : 0/49294910628 (0%)

Corrected pkts/Total pkts : 0/49294910628 (0%)

[Expert@rz2fwg2:0]#

[Expert@rz2fwg2:0]# grep -c ^processor /proc/cpuinfo

8

[Expert@]#

[Expert@]# lscpu | grep Thread

Thread(s) per core: 1

[Expert@]# fw ctl affinity -l -r

CPU 0: eth0 eth5 eth1 eth3

CPU 1: fw_2 (active)

mpdaemon fwd dtlsd rtmd in.asessiond lpd cprid in.acapd wsdnsd vpnd core_uploader dtpsd cprid cpd

CPU 2: fw_1 (active)

mpdaemon fwd dtlsd rtmd in.asessiond lpd cprid in.acapd wsdnsd vpnd core_uploader dtpsd cprid cpd

CPU 3: fw_0 (active)

mpdaemon fwd dtlsd rtmd in.asessiond lpd cprid in.acapd wsdnsd vpnd core_uploader dtpsd cprid cpd

CPU 4:

CPU 5:

CPU 6:

CPU 7:

All:

The current license permits the use of CPUs 0, 1, 2, 3 only.

[Expert@]#

[Expert@# netstat -ni

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

eth0 1500 0 6417265213 0 1686 0 7640613836 0 0 0 BMRU

eth1 1500 0 22646157052 0 17873 0 18174559309 0 0 0 BMRU

eth1.501 1500 0 17112194 0 0 0 16984648 0 0 0 BMRU

eth1.502 1500 0 2929008696 0 101 0 1594666941 0 153 0 BMRU

eth1.503 1500 0 707269728 0 8802 0 580288418 0 1290 0 BMRU

eth1.504 1500 0 2540551997 0 0 0 1820584865 0 178 0 BMRU

eth1.505 1500 0 2364126961 0 0 0 1620479898 0 5 0 BMRU

eth1.506 1500 0 12431712046 0 0 0 10989433852 0 378 0 BMRU

eth1.516 1500 0 111916444 0 0 0 113450039 0 0 0 BMRU

eth1.517 1500 0 136776410 0 3031 0 83500625 0 0 0 BMRU

eth1.518 1500 0 1353667603 0 6009 0 1355179448 0 82 0 BMRU

eth3 1500 0 349918471 0 0 0 329105077 0 0 0 BMRU

eth5 1500 0 20593367636 0 1320 0 23887869851 0 0 0 BMRU

lo 65536 0 51553642 0 0 0 51553642 0 0 0 ALMdNRU

[Expert@]#

[Expert@]# netstat -ni

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

eth0 1500 0 6417323737 0 1686 0 7640679315 0 0 0 BMRU

eth1 1500 0 22647018911 0 17873 0 18174795894 0 0 0 BMRU

eth1.501 1500 0 17112225 0 0 0 16984679 0 0 0 BMRU

eth1.502 1500 0 2929775379 0 101 0 1594829824 0 153 0 BMRU

eth1.503 1500 0 707271488 0 8802 0 580289748 0 1290 0 BMRU

eth1.504 1500 0 2540577663 0 0 0 1820603087 0 178 0 BMRU

eth1.505 1500 0 2364149383 0 0 0 1620495454 0 5 0 BMRU

eth1.506 1500 0 12431749187 0 0 0 10989469260 0 378 0 BMRU

eth1.516 1500 0 111916910 0 0 0 113450502 0 0 0 BMRU

eth1.517 1500 0 136776764 0 3031 0 83500854 0 0 0 BMRU

eth1.518 1500 0 1353671309 0 6009 0 1355182853 0 82 0 BMRU

eth3 1500 0 349919026 0 0 0 329105811 0 0 0 BMRU

eth5 1500 0 20593626411 0 1320 0 23888743000 0 0 0 BMRU

lo 65536 0 51553739 0 0 0 51553739 0 0 0 ALMdNRU

[Expert@]# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 3 | 1642 | 24965

1 | Yes | 2 | 1841 | 22201

2 | Yes | 1 | 1527 | 22883

[Expert@]#

[Expert@# cpstat os -f multi_cpu -o 1

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 26| 74| 26| ?| 45961|

| 2| 1| 11| 87| 13| ?| 45960|

| 3| 2| 19| 80| 20| ?| 45961|

| 4| 0| 50| 50| 50| ?| 45960|

| 5| 0| 0| 100| 0| ?| 45960|

| 6| 0| 0| 100| 0| ?| 45960|

| 7| 0| 0| 100| 0| ?| 45960|

| 8| 0| 0| 100| 0| ?| 45960|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 26| 74| 26| ?| 45961|

| 2| 1| 11| 87| 13| ?| 45960|

| 3| 2| 19| 80| 20| ?| 45961|

| 4| 0| 50| 50| 50| ?| 45960|

| 5| 0| 0| 100| 0| ?| 45960|

| 6| 0| 0| 100| 0| ?| 45960|

| 7| 0| 0| 100| 0| ?| 45960|

| 8| 0| 0| 100| 0| ?| 45960|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 26| 74| 26| ?| 93577|

| 2| 3| 11| 86| 14| ?| 93584|

| 3| 2| 22| 76| 24| ?| 93588|

| 4| 1| 48| 52| 48| ?| 93593|

| 5| 0| 0| 100| 0| ?| 93599|

| 6| 0| 0| 100| 0| ?| 46802|

| 7| 0| 1| 100| 0| ?| 46802|

| 8| 0| 0| 100| 0| ?| 46801|

---------------------------------------------------------------------------------

^C

[Expert@rz2fwg2:0]#

[Expert@rz2fwg2:0]# cpstat os -f multi_cpu -o 1

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 26| 74| 26| ?| 50654|

| 2| 2| 10| 88| 12| ?| 50654|

| 3| 1| 42| 57| 43| ?| 50654|

| 4| 1| 44| 55| 45| ?| 50653|

| 5| 0| 0| 100| 0| ?| 50653|

| 6| 0| 0| 100| 0| ?| 50653|

| 7| 0| 0| 100| 0| ?| 50653|

| 8| 0| 0| 100| 0| ?| 50653|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 26| 74| 26| ?| 50654|

| 2| 2| 10| 88| 12| ?| 50654|

| 3| 1| 42| 57| 43| ?| 50654|

| 4| 1| 44| 55| 45| ?| 50653|

| 5| 0| 0| 100| 0| ?| 50653|

| 6| 0| 0| 100| 0| ?| 50653|

| 7| 0| 0| 100| 0| ?| 50653|

| 8| 0| 0| 100| 0| ?| 50653|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 25| 75| 25| ?| 97618|

| 2| 2| 13| 85| 15| ?| 97619|

| 3| 1| 61| 39| 61| ?| 48813|

| 4| 1| 41| 59| 41| ?| 48813|

| 5| 0| 0| 100| 0| ?| 97627|

| 6| 0| 0| 100| 0| ?| 48813|

| 7| 0| 0| 100| 0| ?| 48813|

| 8| 0| 0| 100| 0| ?| 48812|

---------------------------------------------------------------------------------

[Expert@]# enabled_blades

fw vpn ips mon

[Expert@]# free -m

total used free shared buff/cache available

Mem: 128110 13806 106169 7 8134 113238

Swap: 32765 0 32765

[Expert@:0]# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 3 | 1606 | 24965

1 | Yes | 2 | 1699 | 22201

2 | Yes | 1 | 1694 | 22883

[Expert@]# fw ver

This is Check Point's software version R80.40 - Build 152


[Expert@]# tailf /var/log/messages

Feb 14 12:22:25 2023 rz2fwg2 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-00 usage (84%) on the local member increased above the configured threshold (80%).

Feb 14 12:22:35 2023 rz2fwg2 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Feb 14 12:24:27 2023 rz2fwg2 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-00 usage (83%) on the local member increased above the configured threshold (80%).

Feb 14 12:24:37 2023 rz2fwg2 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Feb 14 12:25:43 2023 rz2fwg2 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-00 usage (86%) on the local member increased above the configured threshold (80%).

Feb 14 12:25:53 2023 rz2fwg2 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Feb 14 12:28:20 2023 rz2fwg2 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-00 usage (84%) on the local member increased above the configured threshold (80%).

Feb 14 12:28:31 2023 rz2fwg2 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.

Feb 14 12:30:29 2023 rz2fwg2 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-00 usage (83%) on the local member increased above the configured threshold (80%).

Feb 14 12:30:39 2023 rz2fwg2 kernel: [fw4_1];CLUS-120202-2: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.


Your firewall is doing fine based on those outputs, as you said you aren't experiencing any noticeable issues. If you are experiencing a high connections rate (cpview...Overview screen and look at connections/sec) it might help to move rule 118 (which probably has a DCE/RPC object in it) further down your rule base to improve templating and save some rulebase lookup overhead. Other than that I'd suggest obtaining an 8-core license container.
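The rule that stops templating can be pulled straight out of the `fwaccel stat` output rather than read by eye. A small sketch, assuming the `Layer <name> disables template offloads from rule #<n>` wording shown in this thread; the function name `template_blockers` is hypothetical.

```shell
# Hypothetical helper: reads `fwaccel stat` output on stdin and prints each
# distinct layer/rule pair that disables template offloads.
template_blockers() {
  sed -n 's/^ *Layer \(.*\) disables template offloads from rule #\([0-9][0-9]*\).*$/rule \2 in layer "\1"/p' | sort -u
}
```

Run as `fwaccel stat | template_blockers`. The same rule is usually reported once for Accept Templates and once for NAT Templates, which is why the output is de-duplicated.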


Thank you for the response. I did not understand this statement:

"It just means your CPUs are running hot and the ClusterXL dead timer has been tripled from roughly 2 seconds to ~6 seconds while CUL is in effect"

e.g.

Feb 14 12:30:29 2023 rz2fwg2 kernel: [fw4_1];CLUS-120200-2: Starting CUL mode because CPU-00 usage (83%) on the local member increased above the configured threshold (80%).

I understand that CPU0 is peaking above the 80% threshold. However, cpview does not display this peak, yet something triggers CUL to start for a while. Is this expected behaviour?

Moreover, does this mean that as long as CPU0 (which is the SND) is not under load most of the time, there is no need to tune anything and the CUL messages are working as expected?

The proposal to purchase an 8-core license is intended to make the additional 4 cores usable. Is that right?

Thank you for clarification.


Depending on how frequently CPU usage is polled, cpview may not see a very short-term CPU spike.

However, ClusterXL is fairly sensitive to this, which is why there are CUL messages when it does.

Given that you only have a 4-core license, your tuning options are fairly limited.

An 8 core license would allow for a 2/6 split of SND/Workers and give you a bit more headroom.
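One way to catch short-lived spikes that a coarser polling interval can miss is to compare two snapshots of `/proc/stat` taken a second or so apart. This is a generic Linux technique rather than a Check Point tool, and the function name `cpu_busy_delta` is made up for illustration; it assumes the standard `/proc/stat` field order (user, nice, system, idle, iowait, irq, softirq, steal).

```shell
# Hypothetical helper: $1 and $2 are two saved copies of /proc/stat.
# Prints the busy percentage of each CPU over the interval between them.
cpu_busy_delta() {
  awk '
    /^cpu[0-9]+ / {
      total = 0
      for (i = 2; i <= 9 && i <= NF; i++) total += $i   # user .. steal
      idle = $5 + $6                                    # idle + iowait
      if (NR == FNR) { t0[$1] = total; i0[$1] = idle; next }   # first snapshot
      dt = total - t0[$1]; di = idle - i0[$1]
      if (dt > 0) printf "%s %.0f%%\n", $1, 100 * (dt - di) / dt
    }
  ' "$1" "$2"
}
```

A possible use is `cat /proc/stat > /tmp/s1; sleep 1; cat /proc/stat > /tmp/s2; cpu_busy_delta /tmp/s1 /tmp/s2`, repeated in a loop while CUL messages are appearing. Whether this matches the sampling ClusterXL itself uses is not something the thread confirms, so treat the numbers as indicative only.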


Where are you with this issue?

I have 8 cores and am encountering the same logs.

I don't think CPU count is the issue.


Please provide the Super Seven outputs as well as the output of command enabled_blades, ideally while the CUL issue is occurring. It is difficult to form recommendations without knowing the specifics of your implementation, which may vary significantly from that of the original poster. Thanks!

S7PAC - Super Seven Performance Assessment Command...


I have been working with Check Point on this issue for three weeks now, with no solution yet.

All logs and requested info have been provided to Check Point.


What have you gotten from TAC so far? Any movement on the issue? Please keep us posted how it goes.

Best,

Andy


Nothing yet: a few meetings with no progress, and three different clusters are impacted by this issue.

We are on R81.10 Take 81. Will keep you posted.


OK, I'm sure you are sick of repeating the issue all over again (who isn't?), but just briefly, what exactly is happening in your case? When you say you have the issue on 3 clusters, that tells me it is not a cluster-specific issue but possibly something to do with the traffic, or who knows what else.

Best,

Andy


This is what I am seeing every day, at random times:

kernel: [fw4_1];CLUS-120200-1: Starting CUL mode because CPU-03 usage (83%) on the local member increased above the configured threshold (80%).

kernel: [fw4_1];CLUS-120202-1: Stopping CUL mode after 10 sec (short CUL timeout), because no member reported CPU usage above the configured threshold (80%) during the last 10 sec.
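When these messages recur at random times, it can help to tally which CPU core trips the threshold and how far above it the usage goes. The following is a minimal sketch in Python, assuming the message wording matches the two lines quoted above; the regular expression and the function name `summarize_cul_starts` are illustrative, not part of any Check Point tooling, and other versions may word the message differently.

```python
import re
from collections import Counter

# Pattern modeled on the "Starting CUL mode" line quoted in this thread.
# Assumption: other Gaia versions may use different wording.
CUL_START = re.compile(
    r"CLUS-120200-\d+: Starting CUL mode because CPU-(?P<cpu>\d+) usage "
    r"\((?P<usage>\d+)%\).*?threshold \((?P<threshold>\d+)%\)"
)


def summarize_cul_starts(lines):
    """Count CUL start events per CPU core and record the peak usage seen."""
    counts = Counter()
    peak = {}
    for line in lines:
        match = CUL_START.search(line)
        if match is None:
            continue  # not a "Starting CUL mode" message
        cpu = int(match.group("cpu"))
        usage = int(match.group("usage"))
        counts[cpu] += 1
        peak[cpu] = max(peak.get(cpu, 0), usage)
    return counts, peak


# The two log lines from this post, used as sample input.
sample = [
    "kernel: [fw4_1];CLUS-120200-1: Starting CUL mode because CPU-03 usage "
    "(83%) on the local member increased above the configured threshold (80%).",
    "kernel: [fw4_1];CLUS-120202-1: Stopping CUL mode after 10 sec (short CUL "
    "timeout), because no member reported CPU usage above the configured "
    "threshold (80%) during the last 10 sec.",
]

counts, peak = summarize_cul_starts(sample)
for cpu in sorted(counts):
    print(f"CPU-{cpu:02d}: {counts[cpu]} CUL start(s), peak usage {peak[cpu]}%")
```

Feeding it the lines of /var/log/messages instead of `sample` would show whether the same core is always the one crossing the threshold, which is the kind of detail that helps narrow down the underlying CPU load.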

Right, but what comes afterwards? Are you seeing traffic issues, a firewall lockup, or something else?

Best,

Andy

@cdelcarmen ... @Timothy_Hall is 100% right, as usual. Trust me, if he tells you to gather that info, you should do it, mate. And by the way, what he said is totally logical: this is indeed a symptom of another problem, most likely CPU issues.

Best,

Andy

I also had a support case (6-0003441182) open, and the recommended action plan was:

#####

After further investigating the files you have provided, we could see that a large number of connections do not accelerate, which might cause high CPU and cause our CUL messages.

Therefore I have created an action plan according to the files you have provided in order to optimize our environment and ease the load on each core.

Accelerate Trusted Connections:

Looking at the files, we could see that there are connections that are not fully accelerated. If we trust those connections, we can bypass payload inspection on them to fully accelerate them.

Please refer to sk156672: SecureXL Fast Accelerator (fw fast_accel) for R80.20 and above for more information on accelerating trusted connections.

Rule Base Optimization:

The creation of templates is disabled from rule 115.

This means that connections that are matched on rules located below 115 are not being accelerated, which can impact the system.

Please review Section 1 (Acceleration of packets) of sk32578: SecureXL Mechanism for more information about which packets will not get accelerated.

Drop Templates:

Enable Drop Templates according to sk90861 - "Optimized Drops feature".

Add the kernel parameter to enable drop templates immediately, regardless of the drop rate:

# fw ctl set -f int activate_optimize_drops_support_now 1

* The "-f" flag should insert the kernel parameter into the fwkern.conf file. Please make sure that the parameter is set there as well.
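The "make sure that the parameter is set there as well" step can be checked by looking for the parameter in the contents of fwkern.conf. Below is a minimal Python sketch, assuming the file uses one `name=value` entry per line; the helper name `fwkern_has_param` and the sample text are hypothetical, and skipping `#` lines is only a defensive assumption about the file format.

```python
def fwkern_has_param(conf_text, name, expected):
    """Return True if conf_text contains a `name=expected` entry.

    Assumes one `name=value` entry per line. Blank lines and lines
    starting with '#' are skipped defensively (assumption).
    """
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep and key.strip() == name and value.strip() == str(expected):
            return True
    return False


# Hypothetical file contents, for illustration only.
sample_conf = "activate_optimize_drops_support_now=1\n"

print(fwkern_has_param(sample_conf, "activate_optimize_drops_support_now", 1))
```

On a gateway, the text would typically be read from $FWDIR/boot/modules/fwkern.conf and passed in as `conf_text`. If the function returns False, the setting would probably not survive a reboot.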

IPS Blade:

Measure the CPU time consumed by PM according to sk43733 - "How to measure CPU time consumed by PM"; the outputs should show you which protections have a critical impact. If they are not relevant to you, or if your security policy allows you to disable them, it would be preferable to disable them.

Avoid setting IPS protections to run in "Detect" mode, as it might increase CPU consumption without increasing security.

Monitoring Blade:

Please note that the traffic history reports may have performance implications. You can find these settings in the gateway object in SmartConsole under "Monitoring Software Blade":

- Traffic connections

- Traffic throughput (bytes per second)

####

We disabled only the traffic history, but this did not change anything. The other suggestions have not been implemented yet and can only partly be performed. In the end, I provided the information in this CheckMates community, and based on what Timothy_Hall stated on 2023-02-14, I suppose the CUL messages can be ignored.

I am still interested in what is going on, but because no real issues have come up in our environment, I have not investigated further so far.
