
CPU Core Usage Imbalance

We have been trialing a 4-core open server Check Point install to potentially replace our existing PFSense units. We have made reasonable progress after solving a couple of early issues, but are now faced with a significant imbalance in CPU usage.

From the output of the super7 commands, we can see that 99% of our traffic is accelerated, which makes sense given what we currently use the FW for.

Initially, with the default configuration of 3 CoreXL worker cores, the unit started dropping packets under heavy load because the 1 remaining core was pegged at 100%. We have now changed this to a 2/2 split, which is the best we can do on a 4-core FW, but 2 of the cores still sit at circa 50% usage while the other 2 are at 2%.

It seems mad to me to have those additional cores sat there, paid for with licenses, doing no work.

Any ideas on this?

+-----------------------------------------------------------------------------+

| Super Seven Performance Assessment Commands v0.5 (Thanks to Timothy Hall) |

+-----------------------------------------------------------------------------+

| Inspecting your environment: OK |

| This is a firewall....(continuing) |

| |

| Referred pagenumbers are to be found in the following book: |

| Max Power: Check Point Firewall Performance Optimization - Second Edition |

| |

| Available at http://www.maxpowerfirewalls.com/ |

| |

+-----------------------------------------------------------------------------+

| Command #1: fwaccel stat |

| |

| Check for : Accelerator Status must be enabled (R77.xx/R80.10 versions) |

| Status must be enabled (R80.20 and higher) |

| Accept Templates must be enabled |

| Message "disabled" from (low rule number) = bad |

| |

| Chapter 9: SecureXL throughput acceleration |

| Page 278 |

+-----------------------------------------------------------------------------+

| Output: |

+---------------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+---------------------------------------------------------------------------------+

|0 |SND |enabled |eth0,eth1,eth2,eth3 |Acceleration,Cryptography |

| | | | | |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,3DES,DES,AES-128,AES-256,|

| | | | |ESP,LinkSelection,DynamicVPN, |

| | | | |NatTraversal,AES-XCBC,SHA256, |

| | | | |SHA384,SHA512 |

+---------------------------------------------------------------------------------+

Accept Templates : enabled

Drop Templates : disabled

NAT Templates : enabled

+-----------------------------------------------------------------------------+

| Command #2: fwaccel stats -s |

| |

| Check for : Accelerated conns/Totals conns: >25% good, >50% great |

| Accelerated pkts/Total pkts : >50% great |

| PXL pkts/Total pkts : >50% OK |

| F2Fed pkts/Total pkts : <30% good, <10% great |

| |

| Chapter 9: SecureXL throughput acceleration |

| Page 287, Packet/Throughput Acceleration: The Three Kernel Paths |

+-----------------------------------------------------------------------------+

| Output: |

Accelerated conns/Total conns : 53603/53613 (99%)

Accelerated pkts/Total pkts : 30935196276/32079584842 (96%)

F2Fed pkts/Total pkts : 1144388566/32079584842 (3%)

F2V pkts/Total pkts : 133017283/32079584842 (0%)

CPASXL pkts/Total pkts : 0/32079584842 (0%)

PSLXL pkts/Total pkts : 1422288/32079584842 (0%)

CPAS pipeline pkts/Total pkts : 0/32079584842 (0%)

PSL pipeline pkts/Total pkts : 0/32079584842 (0%)

CPAS inline pkts/Total pkts : 0/32079584842 (0%)

PSL inline pkts/Total pkts : 0/32079584842 (0%)

QOS inbound pkts/Total pkts : 0/32079584842 (0%)

QOS outbound pkts/Total pkts : 0/32079584842 (0%)

Corrected pkts/Total pkts : 0/32079584842 (0%)

+-----------------------------------------------------------------------------+

| Command #3: grep -c ^processor /proc/cpuinfo && /sbin/cpuinfo |

| |

| Check for : If number of cores is roughly double what you are excpecting, |

| hyperthreading may be enabled |

| |

| Chapter 7: CoreXL Tuning |

| Page 239 |

+-----------------------------------------------------------------------------+

| Output: |

4

HyperThreading=disabled

+-----------------------------------------------------------------------------+

| Command #4: fw ctl affinity -l -r |

| |

| Check for : SND/IRQ/Dispatcher Cores, # of CPU's allocated to interface(s) |

| Firewall Workers/INSPECT Cores, # of CPU's allocated to fw_x |

| R77.30: Support processes executed on ALL CPU's |

| R80.xx: Support processes only executed on Firewall Worker Cores|

| |

| Chapter 7: CoreXL Tuning |

| Page 221 |

+-----------------------------------------------------------------------------+

| Output: |

CPU 0:

CPU 1:

CPU 2: fw_1

mpdaemon fwd pepd in.asessiond pdpd cprid core_uploader lpd vpnd cprid cpd

CPU 3: fw_0

mpdaemon fwd pepd in.asessiond pdpd cprid core_uploader lpd vpnd cprid cpd

All:

Interface eth0: has multi queue enabled

Interface eth1: has multi queue enabled

Interface eth2: has multi queue enabled

Interface eth3: has multi queue enabled

+-----------------------------------------------------------------------------+

| Command #5: netstat -ni |

| |

| Check for : RX/TX errors |

| RX-DRP % should be <0.1% calculated by (RX-DRP/RX-OK)*100 |

| TX-ERR might indicate Fast Ethernet/100Mbps Duplex Mismatch |

| |

| Chapter 2: Layers 1&2 Performance Optimization |

| Page 28-35 |

| |

| Chapter 7: CoreXL Tuning |

| Page 204 |

| Page 206 (Network Buffering Misses) |

+-----------------------------------------------------------------------------+

| Output: |

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

eth0 1500 0 15533331031 2 0 0 15221099885 0 0 0 BMRU

eth0.229 1500 0 15533334245 0 0 0 15221101840 0 3 0 BMRU

eth1 1500 0 15605607013 2 0 0 15270990974 0 0 0 BMRU

eth1.230 1500 0 15605603229 0 0 0 15270990983 0 0 0 BMRU

eth2 1500 0 717582608 2 0 0 540297021 0 0 0 BMRU

eth2.232 1500 0 134680581 0 8 0 166903228 0 18 0 BMRU

eth2.236 1500 0 23795039 0 0 0 39453839 0 0 0 BMRU

eth2.238 1500 0 28396758 0 0 0 51591299 0 0 0 BMRU

eth2.426 1500 0 377210454 0 6 0 217280203 0 0 0 BMRU

eth2.430 1500 0 26420369 0 10 0 28392175 0 0 0 BMRU

eth2.431 1500 0 126674327 0 0 0 36232270 0 0 0 BMRU

eth2.432 1500 0 405677 0 4 0 444286 0 16 0 BMRU

eth3 1500 0 7396905 2 0 0 58545011 0 0 0 BMRU

eth3.433 1500 0 7399011 0 0 0 58545797 0 3706 0 BMRU

lo 65536 0 1103435 0 0 0 1103435 0 0 0 ALMPNORU

interface eth0: There were no RX drops in the past 0.5 seconds

interface eth0 rx_missed_errors : 0

interface eth0 rx_fifo_errors : 0

interface eth0 rx_no_buffer_count: 0

no stats available

no stats available

no stats available

interface eth0.229: There were no RX drops in the past 0.5 seconds

interface eth0.229 rx_missed_errors :

interface eth0.229 rx_fifo_errors :

interface eth0.229 rx_no_buffer_count:

interface eth1: There were no RX drops in the past 0.5 seconds

interface eth1 rx_missed_errors : 0

interface eth1 rx_fifo_errors : 0

interface eth1 rx_no_buffer_count: 0

no stats available

no stats available

no stats available

interface eth1.230: There were no RX drops in the past 0.5 seconds

interface eth1.230 rx_missed_errors :

interface eth1.230 rx_fifo_errors :

interface eth1.230 rx_no_buffer_count:

interface eth2: There were no RX drops in the past 0.5 seconds

interface eth2 rx_missed_errors : 0

interface eth2 rx_fifo_errors : 0

interface eth2 rx_no_buffer_count: 0

no stats available

no stats available

no stats available

interface eth2.232: There were no RX drops in the past 0.5 seconds

interface eth2.232 rx_missed_errors :

interface eth2.232 rx_fifo_errors :

interface eth2.232 rx_no_buffer_count:

no stats available

no stats available

no stats available

interface eth2.236: There were no RX drops in the past 0.5 seconds

interface eth2.236 rx_missed_errors :

interface eth2.236 rx_fifo_errors :

interface eth2.236 rx_no_buffer_count:

no stats available

no stats available

no stats available

interface eth2.238: There were no RX drops in the past 0.5 seconds

interface eth2.238 rx_missed_errors :

interface eth2.238 rx_fifo_errors :

interface eth2.238 rx_no_buffer_count:

no stats available

no stats available

no stats available

interface eth2.426: There were no RX drops in the past 0.5 seconds

interface eth2.426 rx_missed_errors :

interface eth2.426 rx_fifo_errors :

interface eth2.426 rx_no_buffer_count:

no stats available

no stats available

no stats available

interface eth2.430: There were no RX drops in the past 0.5 seconds

interface eth2.430 rx_missed_errors :

interface eth2.430 rx_fifo_errors :

interface eth2.430 rx_no_buffer_count:

no stats available

no stats available

no stats available

interface eth2.431: There were no RX drops in the past 0.5 seconds

interface eth2.431 rx_missed_errors :

interface eth2.431 rx_fifo_errors :

interface eth2.431 rx_no_buffer_count:

no stats available

no stats available

no stats available

interface eth2.432: There were no RX drops in the past 0.5 seconds

interface eth2.432 rx_missed_errors :

interface eth2.432 rx_fifo_errors :

interface eth2.432 rx_no_buffer_count:

interface eth3: There were no RX drops in the past 0.5 seconds

interface eth3 rx_missed_errors : 0

interface eth3 rx_fifo_errors : 0

interface eth3 rx_no_buffer_count: 0

no stats available

no stats available

no stats available

interface eth3.433: There were no RX drops in the past 0.5 seconds

interface eth3.433 rx_missed_errors :

interface eth3.433 rx_fifo_errors :

interface eth3.433 rx_no_buffer_count:

+-----------------------------------------------------------------------------+

| Command #6: fw ctl multik stat |

| |

| Check for : Large # of conns on Worker 0 - IPSec VPN/VoIP? |

| Large imbalance of connections on a single or multiple Workers |

| |

| Chapter 7: CoreXL Tuning |

| Page 241 |

| |

| Chapter 8: CoreXL VPN Optimization |

| Page 256 |

+-----------------------------------------------------------------------------+

| Output: |

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 3 | 33063 | 194418

1 | Yes | 2 | 33249 | 192480

+-----------------------------------------------------------------------------+

| Command #7: cpstat os -f multi_cpu -o 1 -c 5 |

| |

| Check for : High SND/IRQ Core Utilization |

| High Firewall Worker Core Utilization |

| |

| Chapter 6: CoreXL & Multi-Queue |

| Page 173 |

+-----------------------------------------------------------------------------+

| Output: |

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 33| 67| 33| ?| 184972|

| 2| 0| 33| 67| 33| ?| 184973|

| 3| 2| 5| 93| 7| ?| 184974|

| 4| 3| 5| 92| 8| ?| 184973|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 33| 67| 33| ?| 184972|

| 2| 0| 33| 67| 33| ?| 184973|

| 3| 2| 5| 93| 7| ?| 184974|

| 4| 3| 5| 92| 8| ?| 184973|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 26| 74| 26| ?| 388493|

| 2| 0| 26| 74| 26| ?| 388498|

| 3| 1| 3| 96| 4| ?| 388495|

| 4| 1| 4| 96| 4| ?| 388497|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 26| 74| 26| ?| 388493|

| 2| 0| 26| 74| 26| ?| 388498|

| 3| 1| 3| 96| 4| ?| 388495|

| 4| 1| 4| 96| 4| ?| 388497|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 37| 63| 37| ?| 307033|

| 2| 0| 37| 63| 37| ?| 307033|

| 3| 0| 4| 96| 4| ?| 153517|

| 4| 1| 3| 97| 3| ?| 153516|

---------------------------------------------------------------------------------

+-----------------------------------------------------------------------------+

| Thanks for using s7pac |

+-----------------------------------------------------------------------------+
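As a rough cross-check of the s7pac figures above, the percentages can be recomputed from the raw counters. This is only a minimal sketch and not part of the s7pac script: the helper name `pct` is made up for illustration, the numbers are copied by hand from the output above, and treating cpstat CPU# 1-2 as the SND cores and 3-4 as the workers is an assumption based on the affinity listing.

```python
# Counters copied by hand from the `fwaccel stats -s` output above.
TOTAL_PKTS = 32079584842
ACCEL_PKTS = 30935196276
F2F_PKTS = 1144388566


def pct(part, whole):
    """Return part/whole as a percentage (0.0 if whole is zero)."""
    return 100.0 * part / whole if whole else 0.0


accel_pct = pct(ACCEL_PKTS, TOTAL_PKTS)
f2f_pct = pct(F2F_PKTS, TOTAL_PKTS)

# RX-DRP % per the s7pac hint: (RX-DRP / RX-OK) * 100, should stay below 0.1%.
# eth2.430 shows the most RX drops in the `netstat -ni` table above.
rx_drp_pct = pct(10, 26420369)

# Usage(%) column from the five `cpstat` samples, keyed by cpstat CPU#.
usage = {
    1: [33, 33, 26, 26, 37],
    2: [33, 33, 26, 26, 37],
    3: [7, 7, 4, 4, 4],
    4: [8, 8, 4, 4, 3],
}
avg_usage = {cpu: sum(samples) / len(samples) for cpu, samples in usage.items()}

print(f"accelerated: {accel_pct:.1f}%  F2F: {f2f_pct:.1f}%")
print(f"eth2.430 RX-DRP: {rx_drp_pct:.5f}%")
print("average usage per core:", avg_usage)
```

Over these five samples the first two cores average about 31% while the other two sit around 5%, which is the imbalance described in the question.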


13 Replies


These are really good acceleration values (99% of connections, 96% of packets). I think you are using only the firewall blade and none of the others like IPS, AntiBot, AntiVirus, URL-Filter, ApplicationControl, ThreatPrevention... Do you know the throughput of this system?

Given your peak of nearly 200,000 connections, I have a feeling you need more CPUs and more SNDs to do the work for your type of traffic.

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 3 | 33063 | 194418

1 | Yes | 2 | 33249 | 192480

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

If you are looking for a solution with only the firewall blade enabled, have a look at the newly introduced Lightspeed appliances: https://www.checkpoint.com/quantum/next-generation-firewall/lightspeed/


Thanks for the reply.

We are only using the FW blade with a little bit of Remote Access VPN. However, as you have noticed, the number of connections through the system is pretty intense!

In terms of actual throughput, we are looking at a fairly steady 1.5Gbps which can sometimes spike up to 2.5Gbps.

The Lightspeed boxes look good, just not in our budget.

I am aware that more cores would help, but my main concern is having 2 of them sat there doing nothing. Is there any way we can get it to use all cores for SND?


From what I see, you need a bigger box. Your SecureXL cores are very busy, since practically all traffic is accelerated. With 4 cores, 2 of them being SNDs, you are still in the danger zone, with the SNDs running at 50% and up. You need 8 cores to balance this properly.


OK, so ignoring the licensing aspect for one moment, the boxes this runs on have a maximum of 4 physical cores. For the moment I have disabled hyper-threading, but I could enable it to get 8 "cores". Would this be sufficient, or are we looking at needing to replace the boxes as well?

Also, I have seen a few different answers on this, but am I correct that hyper-threaded cores all count towards the license count?


No, do not enable hyperthreading. It does not help on systems that spend most of their time in SND functions due to high levels of acceleration, and enabling it can actually degrade performance under high load.

You can get to a 3/1 split by disabling CoreXL from cpconfig, which will also save some CoreXL coordination overhead. You can't use all four cores for SND unless you set 4 instances, which will cause all cores to pull double duty as both SND and worker; that is really not a good idea performance-wise.

Whether licenses count hyperthreaded cores on open hardware depends on the code version, but I wouldn't recommend SMT in your case anyway.
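The core allocations mentioned so far in this thread for a 4-core gateway can be laid out side by side. This is just a sketch of what the posts describe: the `Split` type and its labels are made up for illustration, and nothing here reads from or configures a real gateway.

```python
from dataclasses import dataclass

TOTAL_CORES = 4  # the open server discussed in this thread


@dataclass(frozen=True)
class Split:
    label: str
    snd_cores: int     # cores handling SND/SecureXL work
    worker_cores: int  # cores running firewall worker instances
    shared: bool       # True if every core does both jobs at once


# Allocations as described in the thread (not read from a gateway).
SPLITS = [
    Split("default, 3 worker instances", 1, 3, False),
    Split("2 worker instances", 2, 2, False),
    Split("CoreXL disabled via cpconfig", 3, 1, False),
    Split("4 worker instances (double duty)", 4, 4, True),
]


def is_consistent(s: Split) -> bool:
    """Dedicated splits must add up to the core count; shared ones reuse every core."""
    if s.shared:
        return s.snd_cores == s.worker_cores == TOTAL_CORES
    return s.snd_cores + s.worker_cores == TOTAL_CORES


for s in SPLITS:
    print(f"{s.label:35} SND={s.snd_cores} workers={s.worker_cores} shared={s.shared}")
```

With dedicated cores the most SND capacity available on this box is 3 cores; only the shared layout puts SND work on all 4.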

Attend my Gateway Performance Optimization R81.20 course

CET (Europe) Timezone Course Scheduled for July 1-2



Thanks for the detail. I'm wondering, though, considering that basically all of the traffic is accelerated, whether having all 4 cores pulling double duty as you mention would harm performance that much. I guess this is one of those things that needs to be tested to be sure.

At the moment, 95% of this traffic also requires NAT. At present this is handled by different units, but the goal was to bring it all onto the Check Point. Do you think NAT'ing this amount of traffic will cause much of an increase in load?

Finally, I am still a little puzzled as to why this is causing so much stress on the boxes. The Quantum 6400 GW has 4 cores and states that it can deal with 12 Gbps of standard FW traffic, 90k connections per second, and 8M concurrent connections with max memory; this unit has 32GB of RAM.

Our numbers are way below these, yet the boxes are stressed. What have I missed?
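To put "way below" into numbers, the observed load can be expressed as a share of the quoted 6400 figures. This is a rough sketch using only values mentioned in this thread; the helper name is illustrative, and summing the two per-instance peaks assumes both peaks happened at the same time, which the output does not confirm.

```python
# Figures quoted in this thread (datasheet numbers as cited by the poster).
RATED_GBPS = 12.0             # 6400 firewall throughput, as quoted
RATED_CONCURRENT = 8_000_000  # 6400 concurrent connections with max memory, as quoted

OBSERVED_PEAK_GBPS = 2.5      # "can sometimes spike up to 2.5Gbps"
# Peak column from `fw ctl multik stat`; summing assumes both peaks coincided.
OBSERVED_PEAK_CONNS = 194418 + 192480


def fraction_of_rated(observed, rated):
    """Observed load as a percentage of the rated figure."""
    return 100.0 * observed / rated


tput_pct = fraction_of_rated(OBSERVED_PEAK_GBPS, RATED_GBPS)
conn_pct = fraction_of_rated(OBSERVED_PEAK_CONNS, RATED_CONCURRENT)
print(f"throughput: {tput_pct:.1f}% of rated, connections: {conn_pct:.1f}% of rated")
```

On these numbers the peak throughput is roughly a fifth of the quoted figure and the connection count is under 5% of it. The connection rate (quoted as 90k per second) is not compared because no observed rate appears in the thread.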


You can try 4 instances and see what happens with double duty. Such a low percentage of non-accelerated traffic might not cause as many CPU fast cache misses as usual. Those misses are why you don't normally want to mix SND and workers on the same core, since main memory access is roughly 10 times slower than the CPU fast caches.

NAT will cause some additional load, but I'd think it would be pretty negligible as long as NAT templates are enabled; SecureXL can perform NAT operations independently.

What kind of processors is your open hardware using (cat /proc/cpuinfo)? I would need to compare it to the 6400's CPU model to provide a meaningful answer about the CPU load relative to the performance you are seeing.
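The cache argument above can be illustrated with the usual weighted-average estimate of memory access cost. This is a back-of-the-envelope sketch only: the 10x penalty is the rough figure quoted in the post, and the miss rates are invented for illustration, not measured on any gateway.

```python
CACHE_COST = 1.0       # cost of a cache hit, in arbitrary units
MEMORY_PENALTY = 10.0  # main memory "roughly 10 times slower", per the post above


def average_access_cost(miss_rate: float) -> float:
    """Weighted average cost of a memory access for a given cache miss rate."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return (1.0 - miss_rate) * CACHE_COST + miss_rate * MEMORY_PENALTY


# Hypothetical miss rates: a dedicated core vs. one juggling SND and worker code.
for label, miss_rate in [("dedicated core", 0.02), ("double duty", 0.10)]:
    cost = average_access_cost(miss_rate)
    print(f"{label:15} miss rate {miss_rate:.0%} -> cost {cost:.2f}")
```

Even a modest rise in the miss rate raises the average cost noticeably, which is why the effect of double duty depends on how much non-accelerated traffic actually competes for the caches.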


Thanks for the info, will see what 4 instances runs like.

CPU Info below:

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 94

model name : Intel(R) Xeon(R) CPU E3-1270 v5 @ 3.60GHz

stepping : 3

microcode : 0xdc

cpu MHz : 3600.000

cache size : 8192 KB

physical id : 0

siblings : 4

core id : 0

cpu cores : 4

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 22

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch epb intel_pt ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm mpx rdseed adx smap clflushopt xsaveopt xsavec xgetbv1 dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp md_clear spec_ctrl intel_stibp flush_l1d

bogomips : 7200.00

clflush size : 64

cache_alignment : 64

address sizes : 39 bits physical, 48 bits virtual

power management:


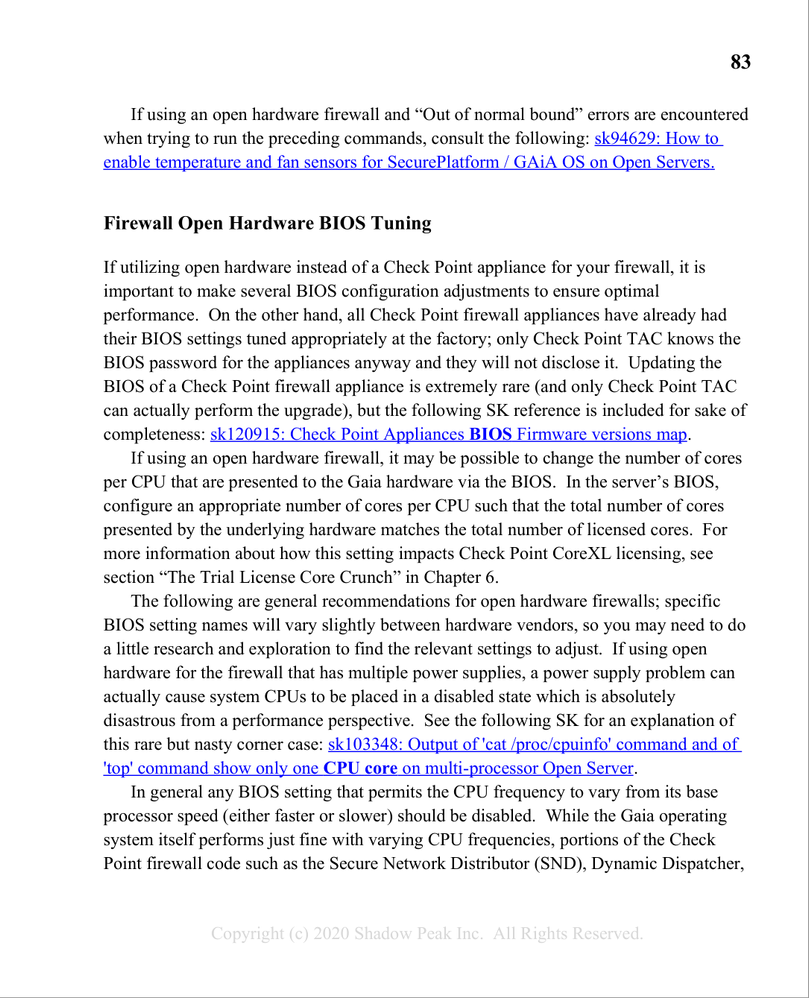

Looks like the 6400 uses an Intel(R) Core(TM) i3-8100 CPU @ 3.60GHz quad-core processor, which appears pretty evenly matched against an Intel(R) Xeon(R) CPU E3-1270 v5. Have you optimized the BIOS settings of this open server as mentioned in the third edition of my book? The power saving settings in particular can make a huge difference:


In a similar case in the past, we saw a performance decrease of 30% with the wrong power saving settings on open server hardware. I fully agree with @Timothy_Hall: setting all cores for SND is worth a try but is not a solution for production. You will have no reserve for other traffic and high peaks. If you are using 3 cores for SND and still have connectivity issues, you need to look at other hardware or more cores. But maybe changing the BIOS settings will make a difference.

Out of interest, what are the performance and utilization of your current solution (pfsense), which you want to replace?


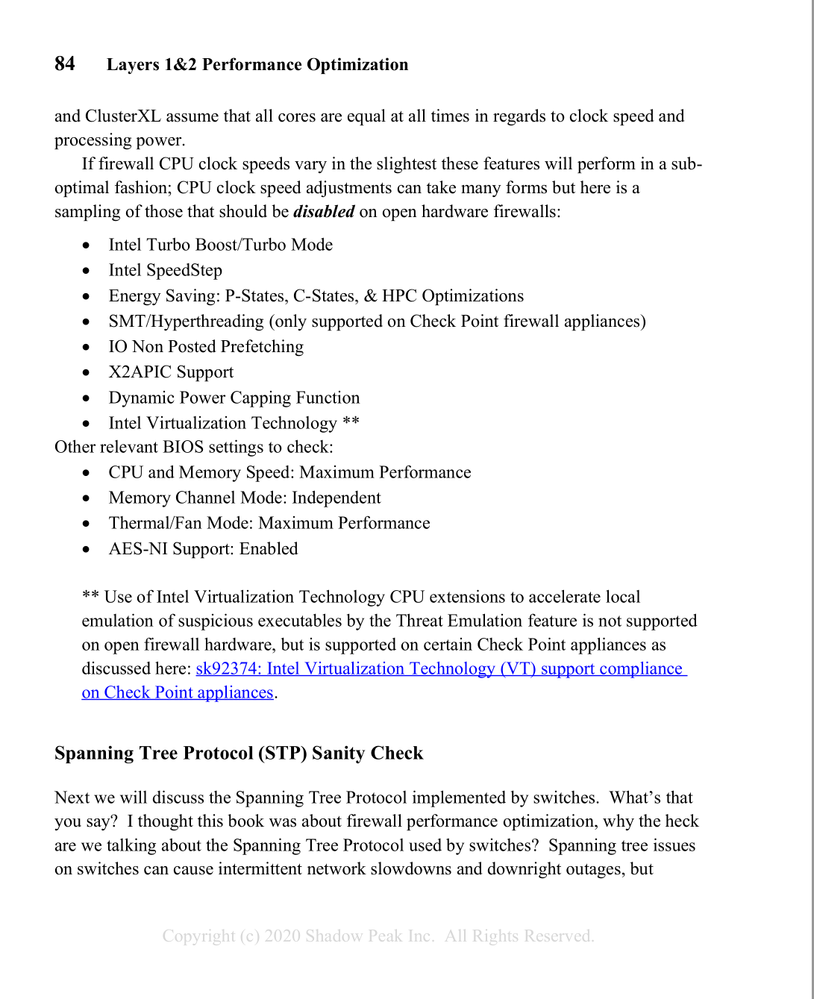

Sorry for the late reply, I needed to find a suitable time to take 1 device offline to get into the BIOS.

The settings were on performance mode, which I have tweaked in line with the recommendations above. There has not been much of a noticeable difference in overall performance.

I have not changed to using all 4 cores on double duty as of yet.

PFSense screenshot also attached. I appreciate that it has more cores, albeit lower-powered ones.


@Greatsamps BIOS settings look fine.

Your pfsense is running with 16 CPUs at a displayed utilization of 17% across all CPUs. It is hardly fair to expect the same results from a 4-core open server. I really suggest using more cores and setting most of them as SNDs because of your traffic profile. You can use a trial license with more cores licensed to run a test and see the results.

Yes, the pfsense cores run at lower MHz, but I think this is not the main problem. With only 4 cores, even at higher MHz, I don't think you will get the same performance.
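The comparison above can be made concrete by converting the pfsense utilization into "busy core-equivalents". This is a deliberately naive sketch: it uses only the 16 CPU and 17% figures mentioned above, the function name is illustrative, and it assumes every core is equally fast, which is not the case here since the pfsense cores are lower clocked.

```python
PFSENSE_CORES = 16
PFSENSE_UTILIZATION = 0.17  # 17% across all CPUs, per the screenshot discussed above
OPEN_SERVER_CORES = 4


def busy_core_equivalents(cores: int, utilization: float) -> float:
    """Number of fully busy cores the reported utilization corresponds to."""
    return cores * utilization


busy = busy_core_equivalents(PFSENSE_CORES, PFSENSE_UTILIZATION)
# Naive projection: assumes every core is equally fast, which is NOT true here.
projected = busy / OPEN_SERVER_CORES
print(f"{busy:.2f} busy core-equivalents -> {projected:.0%} of a {OPEN_SERVER_CORES}-core box")
```

Under that simplification the same work would already occupy about two thirds of a 4-core box before any peak, and it could not all be spread across SND cores.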


Agree with Wolfgang, BIOS settings look good but you'd be surprised how aggressive some hardware vendors get with power saving settings in their BIOS by default. That is acceptable for most normal servers that are relatively idle most of the time, but not good for a device constantly moving large amounts of traffic through it.

Also agree that your PFSense server has around 2-3X the CPU power of the 6400 so it is unlikely you are going to be able to squeeze the same amount of performance out of your 6400.
