Bond interfaces go down after cluster member enters STANDBY / ACTIVE after reboot

Hi! Just wondering if anyone else has seen this very strange cluster behaviour after a reboot.

We are running R80.40 T120 on regular gateways, non-VSX.

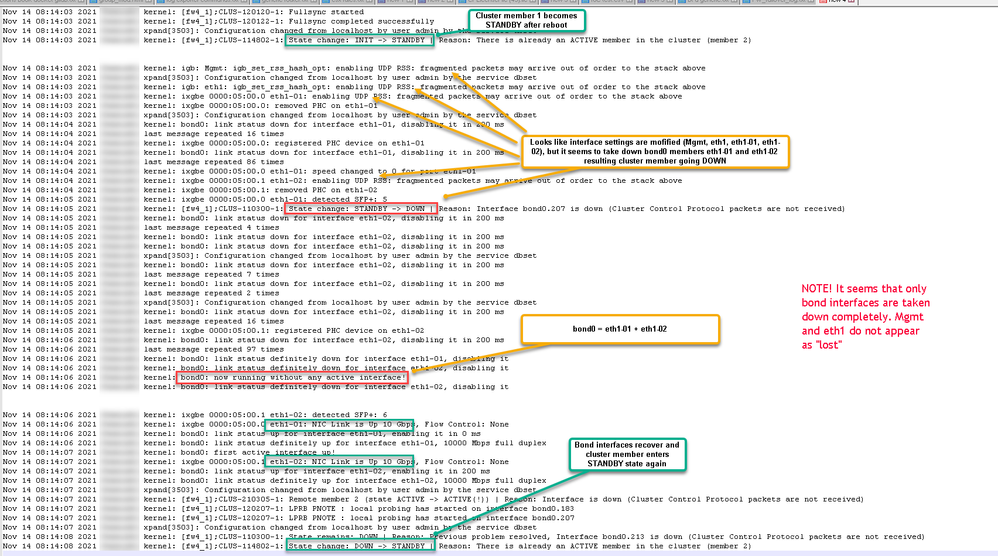

In short, the sequence is as follows:

- reboot a cluster member (e.g. FW1, which is STANDBY)

- FW1 recovers and synchronises connections

- FW1 cluster state enters STANDBY

- a few seconds later all bond members report lost link

- FW1 cluster state goes DOWN

- the interface driver seems to be reloaded and the interfaces become available again

- FW1 cluster state enters STANDBY again

Below I have the full message log with comments. It seems that interface settings are modified after the cluster member has entered STANDBY state, and that causes all bond members to go down. Regular interfaces seem to survive without a link down.

The problem isn't that big if you maintain the cluster state as is. But if you use "switch to higher priority member", you may end up in a situation where FW1 goes ACTIVE > DOWN > ACTIVE after a reboot, and that has caused heaps of problems in our production network.

This is present on all our clusters and with different bond member types (10 Gbps with the ixgbe or i40e driver, or 1 Gbps with igb).

- Tags:

- kz

11 Replies


Interesting. Thanks for the detailed description. As these things happen within just a few seconds at system start it might not be easy to monitor the bond status closely via cphaprob show_bond and awk '{print FILENAME ":" $0}' /proc/net/bonding/bond*.
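
One way to catch such a short-lived state is to poll the bonding files in a tight loop and keep only the per-member fields. The following is a minimal sketch, assuming the usual Linux bonding driver layout of /proc/net/bonding/bond* (fields "Slave Interface", "MII Status", "Link Failure Count"); the function name `bond_summary` and its directory argument are made up for illustration.

```shell
#!/bin/sh
# Print one line per bond member: <bond> <member> <MII status> <link failure count>.
# The directory defaults to the real procfs path; pass another one for off-box testing.
bond_summary() {
    bond_dir="${1:-/proc/net/bonding}"
    for f in "$bond_dir"/bond*; do
        [ -r "$f" ] || continue
        awk -v bond="$(basename "$f")" '
            /^Slave Interface:/    { member = $3 }
            # The bond-level "MII Status" line comes before any member and is skipped.
            /^MII Status:/         { if (member != "") status = $3 }
            /^Link Failure Count:/ { if (member != "") { print bond, member, status, $4; member = "" } }
        ' "$f"
    done
}

# Example polling loop (run manually from a console right after boot):
# while :; do bond_summary | sed "s/^/$(date '+%H:%M:%S') /"; sleep 1; done
```

A rising "Link Failure Count" between two polls shows that a member dropped even if its status is already back to up.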

While I have no solution at the moment, I'd like to propose a workaround:

- Create a permanent faildevice with status "problem", so that a starting gateway does not become active automatically so quickly

- Set the status of the faildevice to OK via a scheduled job 30 seconds after system start
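
The two steps above could be sketched roughly as follows. This is not a tested procedure: the device name `BootHold`, the `DRY_RUN` switch and both function names are hypothetical, and the `-p` (permanent) flag and the `-s ok report` form are assumed from the ClusterXL `set_pnote` syntax, so check them against the documentation for your version first.

```shell
#!/bin/sh
# Hypothetical helper: hold a booting member DOWN with a custom pnote, release it later.
# PNOTE_NAME and DRY_RUN are illustrative names, not Check Point defaults.
PNOTE_NAME="${PNOTE_NAME:-BootHold}"

run() {
    # With DRY_RUN=1 only print the command, so the sketch can be checked off-box.
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

hold_member_down() {
    # Assumed flags: -t 0 (no periodic report expected), -s problem, -p (survives reboot).
    run cphaconf set_pnote -d "$PNOTE_NAME" -t 0 -s problem -p register
}

release_member() {
    # $1: seconds to wait before letting the member back into the cluster (default 30).
    run sleep "${1:-30}"
    run cphaconf set_pnote -d "$PNOTE_NAME" -s ok report
}
```

`hold_member_down` would be run once, and `release_member 30` from whatever job runs after system start; with `DRY_RUN=1` both only print the commands they would execute.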

---

Very clever actually, Danny! Since it's not a major drama and we only noticed it on one cluster that was set to "switch to higher priority member", the current workaround will be to change the mode to keep the current active member, so we avoid unnecessary issues with traffic. A case is open with TAC, so hopefully we can get to the bottom of it 🙂 Obviously I'll post any findings / fixes here.

---

I actually suspect it's at the physical interface level and related to the PHC function, as the interface goes down straight after that modification.

But that's a wild guess hehe

    Nov 14 08:14:03 2021 fw1 kernel: ixgbe 0000:05:00.0 eth1-01: enabling UDP RSS: fragmented packets may arrive out of order to the stack above
    Nov 14 08:14:03 2021 fw1 kernel: ixgbe 0000:05:00.0: removed PHC on eth1-01 <<<<<
    Nov 14 08:14:03 2021 fw1 xpand[3503]: Configuration changed from localhost by user admin by the service dbset
    Nov 14 08:14:04 2021 fw1 kernel: bond0: link status down for interface eth1-01, disabling it in 200 ms
    Nov 14 08:14:04 2021 fw1 last message repeated 16 times
    Nov 14 08:14:04 2021 fw1 kernel: ixgbe 0000:05:00.0: registered PHC device on eth1-01
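
To check whether this pattern repeats on other members, the messages log can be scanned for a "removed PHC" line followed by a bond link-down on the same interface. A rough sketch, assuming the log layout shown in the excerpt above (time in the third field); the function name `phc_then_link_down` is made up:

```shell
#!/bin/sh
# Report interfaces whose bond link went down after their PHC was removed.
# Reads the log files given as arguments, or stdin when none are given.
phc_then_link_down() {
    awk '
        /removed PHC on / {
            # Remember the time at which the PHC was removed, per interface.
            for (i = 2; i < NF; i++)
                if ($i == "on" && $(i - 1) == "PHC") removed[$(i + 1)] = $3
        }
        /link status down for interface / {
            for (i = 1; i < NF; i++)
                if ($i == "interface") {
                    ifc = $(i + 1)
                    sub(/,$/, "", ifc)   # strip the trailing comma after the name
                    if (ifc in removed)
                        print ifc ": PHC removed at " removed[ifc] ", link down at " $3
                }
        }
    ' "$@"
}
```

On a gateway this would be called as `phc_then_link_down /var/log/messages`. It only shows that the two events occur in this order; it does not prove that one causes the other.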

---

What is the CPU usage while this is happening? Are the SNDs fully utilized?

A while back with R77.30 I had a similar issue with a cluster, where the bond would suddenly go down and cause a cluster fail-over (the other way around) due to a failed check for a bond interface.

In the end it turned out that the gateway was under high load at certain moments, because Application Control was being overwhelmed by an untuned Application Control policy. Under that load, Gaia OS could not process the interface queue fast enough and the bond went down.

If the load on your gateway is too high after the fail-over, this might also be a reason for your issue.

---

Thanks @Markus_Genser, but the CPUs are not utilised at all. Remember that the cluster member has just booted and entered STANDBY state 🙂 But it's always good to know such tricks!

---

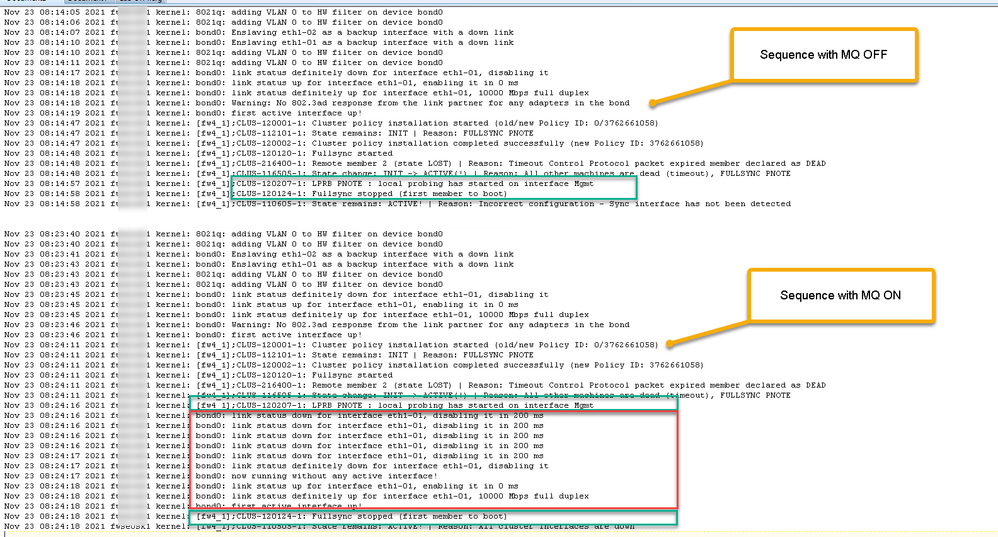

Interesting find! I tried the workaround and it didn't help, but it feels like our case is actually related, as we see UDP RSS being enabled (= MQ) just before the interfaces go down. I have a feeling that there is a connection between MQ being configured during the boot sequence and the bond interfaces.

Cause

The Security Gateway uses fwstarts to initiate the modules loading sequence. A change was made to shorten the OS boot loading time, due to this, the multi-queue configuration does not have enough time to load before the next sequence starts, which causes the interfaces to not load properly.

---

OK, I think I am on to something! Whilst TAC was chasing me to get HW diagnostics done on the box (despite the fact that we had seen exactly the same symptoms in multiple clusters on different continents), I managed to pinpoint the issue to MQ. If MQ is enabled on a bond interface, the bond will go down one extra time during the boot sequence. The tests below were done in the lab, where I had only one cluster member available, so the clustering state will look odd, but they clearly show the difference between MQ OFF and ON:

---

Thanks for keeping us updated on your findings! 👍

---

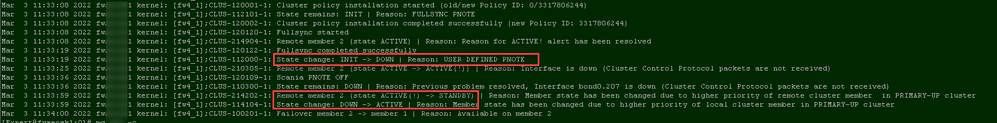

Still waiting for R&D to come up with the goods 😞 It has taken way too long, so I took matters into my own hands. Basically, I used @Danny's idea about a custom PNOTE.

Modified parts of $FWDIR/bin/fwstart, first in the middle:

    if ($?highavail && ! $?IS_VSW) then
        if ($fw1_vsx == 0) then
            $FWDIR/bin/cphaconf set_pnote -f $FWDIR/conf/cpha_global_pnotes.conf -g register
        endif
    endif

    # Test manual cluster DOWN till MQ reconf is applied at the end
    echo "*** TEST cluster DOWN ***"
    $FWDIR/bin/cphaconf set_pnote -d ForceDown -t 1 -s init register

    # load sim settings (affinity)
    if ((! $?VS_CTX) && ($linux == 1)) then
        if ($?PPKDIR) then
            $FWDIR/bin/fwaccel on
            $FWDIR/bin/sxl_stats update_ac_name
            if ($fw1_ipv6) then
                $FWDIR/bin/fwaccel6 on
            endif
    --
        endif
    endif

and then remove the PNOTE after MQ is reconfigured.

(The $FWDIR/bin/mq_mng_reconf_all_vs command is actually the one that causes the problem.)

    # Kernel 3.10 - apply MQ settings for all VS
    ##release lock of mq_mng
    rm /tmp/mq_reconf_lock >& /dev/null
    if ("$linuxver" != "2.6") then
        $FWDIR/bin/mq_mng_reconf_all_vs >& /dev/null
    endif

    # Test manual cluster DOWN till bond MQ is applied - finish
    echo "*** TEST cluster DOWN finish ***"
    sleep 15
    $FWDIR/bin/cphaconf set_pnote -d ForceDown unregister

    if ((! $?VS_CTX) && ($linux == 1)) then
        # Apply Backplane Ethernet affinity settings
        if ( -e /dev/adp0) then
            /etc/ppk.boot/bin/sam_mq.sh
        endif
    endif

So startup sequence looks like this now:

And clustering messages confirm that there are no unwanted failovers:

---

After a 4 or 5 month battle with TAC and R&D, I finally have the answer! It turns out I was right from the start: the changes made to the startup sequence mentioned in sk173928 are causing the problems! You basically need to do the opposite of what the SK suggests and move the MQ reconfiguration upwards instead of to the end of the script.
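
Before and after editing the script, it can help to see where the relevant calls sit relative to each other. The sketch below only prints the first line number of each search string in a given file. The function name `call_positions` is made up, the search strings come from the fwstart excerpts earlier in this thread, and the exact position MQ should move to is not stated here, so that remains something to confirm with TAC.

```shell
#!/bin/sh
# Print "<first line number> <pattern>" for each fixed-string pattern, sorted by position.
# Patterns that do not occur in the script are reported as "absent".
call_positions() {
    script="$1"
    shift
    for pattern in "$@"; do
        line=$(grep -n -F -- "$pattern" "$script" | head -n 1 | cut -d: -f1)
        echo "${line:-absent} $pattern"
    done | sort -n
}
```

For example, `call_positions $FWDIR/bin/fwstart 'mq_mng_reconf_all_vs' 'fwaccel on' 'ForceDown'` would list those three calls in the order they appear in the start-up script.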

KZ 1:0 CP 8)

---

Hmmmm, the SK says, reach out for a fix. Why did you try to apply a workaround instead?
