Postgres + IPS issues

Hi all,

I have the following issue at a customer, for which a TAC case has been open for almost 5 months with no meaningful progress so far.

Full Mgmt/HA cluster.

At the beginning of September 2022, we did an in-place upgrade from R80.40 to R81.10. So far so good.

Some time later, we began to notice significant CPU spikes every hour on the active member, and much worse ones on the standby.

That is when we realized that, since R81.10, the Mgmt sync schedule (from primary to secondary) is no longer a user-configurable setting, and that the sync runs every hour.

(terrible idea imho, for several reasons)

Over time, the duration of those CPU spikes on both members has kept increasing steadily.

This also has a direct impact when a member has to be restarted, as it can take as long as 10 minutes after a restart to reach a "normal" state (due to the heavy Postgres processing).

Long story short, I discovered that the Postgres DB at this customer is far larger than at my other customers.

Specifically, it is the system table "pg_largeobject" that is much larger.

While that table is approximately 850 MB elsewhere, at this customer it is currently at almost 4 GB (and growing).

Information about how Check Point specifically uses that largeobject table is very scarce.

But it would seem that this is where the different IPS revisions are stored, and that they account for most, if not all, of the size of that table.

More recently, I found out that, for some reason, IPS revisions were never purged at this customer, although IPS revisions older than 30 days are supposed to be purged automatically.

That at least explains the size of the DB and its steady increase.

The 117 revisions accumulated at this customer since early September, at ~35 MB per revision, add up to about 4 GB.
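As a quick sanity check of that arithmetic, here is a rough sketch. The function name is made up for illustration, and the 35 MB average is only the approximate figure quoted above.

```python
def estimated_size_mb(revisions: int, mb_per_revision: float) -> float:
    """Rough total size of the stored IPS revisions, in MB."""
    return revisions * mb_per_revision


# 117 revisions at roughly 35 MB each (figures from the post above)
total_mb = estimated_size_mb(117, 35)
print(f"{total_mb:.0f} MB, about {total_mb / 1024:.1f} GB")
# prints: 4095 MB, about 4.0 GB
```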

In Mgmt-HA, the replicated DB on the secondary seems to be about twice the size of the primary's, even when everything is working normally.

(I asked, but never got an answer as to whether this is by design / expected.)

Because of the unstable situation caused by the issue on the primary, the DB on the secondary frequently grows to 3 or even 4 times the size of the primary's.

Currently, we have to restore the secondary from backup several times a week, because its root partition fills up due to the DB size.

1 Solution

Accepted Solutions


Hi,

I'd like to elaborate a bit on the Postgres disk space consumption on standby machines and on the IPS purge issue that @jgar encountered.

Postgres disk space consumption on standby machines

Roughly speaking, when a record is deleted or updated in Postgres, Postgres does not release the freed disk space to the OS, but keeps it for internal use. In some cases, Postgres "recycles" these bytes for later record updates or creations (through "autovacuum", for instance), but in other cases, due to internal calculations, it just keeps consuming more disk space and eventually requires manual cleaning. This additional disk space that can be cleaned up is called "bloat".

The Postgres DB owner, in this case us :), is supposed to maintain the health of the DB.

Full sync is a Management operation that creates a lot of bloat. Put simply, a full sync deletes all the records on the machine being synchronized and copies them over from the synchronizing machine.

Before R81.10, this was a heavy manual operation.

In R81.10, thanks to a major improvement in full sync duration, we run an automatic full sync (in every domain apart from the System domain) every 5 minutes, instead of the incremental sync, which wasn't stable enough.

The bottom line is that we create bloat every 5 minutes, and in some cases the automatic cleanup is not fast enough to keep up with it.
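To make the "cleanup can't keep up" point concrete, here is a deliberately simplified toy model. It is not how Postgres or the Management server actually accounts for space. The function name, the row counts and the per-cycle cleanup capacity are all illustrative assumptions.

```python
def simulate_bloat(cycles: int, rows_per_sync: int, reclaimed_per_cycle: int) -> list[int]:
    """Return the number of dead rows left after each sync cycle.

    Toy model: every full sync deletes and re-inserts `rows_per_sync` rows,
    leaving that many dead rows behind. Cleanup then reclaims at most
    `reclaimed_per_cycle` dead rows before the next sync starts.
    """
    dead = 0
    history = []
    for _ in range(cycles):
        dead += rows_per_sync                   # full sync: old copies become dead rows
        dead -= min(dead, reclaimed_per_cycle)  # cleanup between two syncs
        history.append(dead)
    return history


# Cleanup keeps up: dead rows never accumulate.
print(simulate_bloat(5, 1000, 1000))  # [0, 0, 0, 0, 0]
# Cleanup too slow: the backlog grows with every cycle.
print(simulate_bloat(5, 1000, 600))   # [400, 800, 1200, 1600, 2000]
```

In the second run the backlog grows by the same amount every cycle, which matches the steady growth described in this thread.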

This behavior has the most impact on MDSs with many standby CMAs, and on SMC standby machines.

A solution to this problem is in progress.

- For MDSs, a solution is in development. It will not require a code change and should be released in an updatable way in the next few months.

- For SMCs (and SAs), several customers have already received HFs with a fix (@jgar, you should receive an HF next week).

Once the fix is proven to solve the problem, it will be released via Jumbo HF (PRHF-29307).

IPS purge not running on SA in Full HA environment

The issue described above with the IPS purge not running is simply a bug. The IPS purge has a mechanism that avoids deleting IPS packages when they are suspected to be in use by other domains/machines in the environment. In the SMC/SA HA case, this call failed, even though it was not required in the first place. This was fixed under PRHF-29565 and will be released in the next Jumbo HFs.

Thanks,

Natan

12 Replies


We do not generally document the internal tables we use in Postgres.

pg_largeobject does seem to be related to IPS: https://support.checkpoint.com/results/sk/sk117012

Pretty sure the database growing on the secondary is not expected behavior.

Have you escalated the issue with TAC yet?

Please send me the SR privately.


Did you ever get a fix for this? We have exactly the same problem. The root partition on our standby manager filled up because the Postgres database is much larger there.

On the active:

| schema_name | relname | size | table_size |
|---|---|---|---|
| pg_catalog | pg_largeobject | 16 GB | 16877387776 |

On the standby:

| schema_name | relname | size | table_size |
|---|---|---|---|
| pg_catalog | pg_largeobject | 53 GB | 57105711104 |
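For comparison, the raw table_size values can be converted by hand. This is a small sketch using only the two byte counts shown above. The variable and function names are illustrative.

```python
GIB = 1024 ** 3

active_bytes = 16877387776   # table_size reported on the active
standby_bytes = 57105711104  # table_size reported on the standby


def to_gib(size_bytes: int) -> float:
    """Convert a byte count to GiB."""
    return size_bytes / GIB


ratio = standby_bytes / active_bytes
print(f"active:  {to_gib(active_bytes):.1f} GiB")
print(f"standby: {to_gib(standby_bytes):.1f} GiB")
print(f"standby is {ratio:.1f}x the active")
```

With these numbers the standby copy of pg_largeobject comes out at a bit more than three times the size of the active one.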


You didn't mention whether you have the same issue that I described on the primary.

That is, are the IPS updates continuously accumulating because they fail to be purged once they are older than 30 days?

Do you know how to check that?

And is it also R81.10?


It is R81.10.

I do not know whether it is the IPS updates, but that seems like a likely candidate. The database is only growing on the standby.


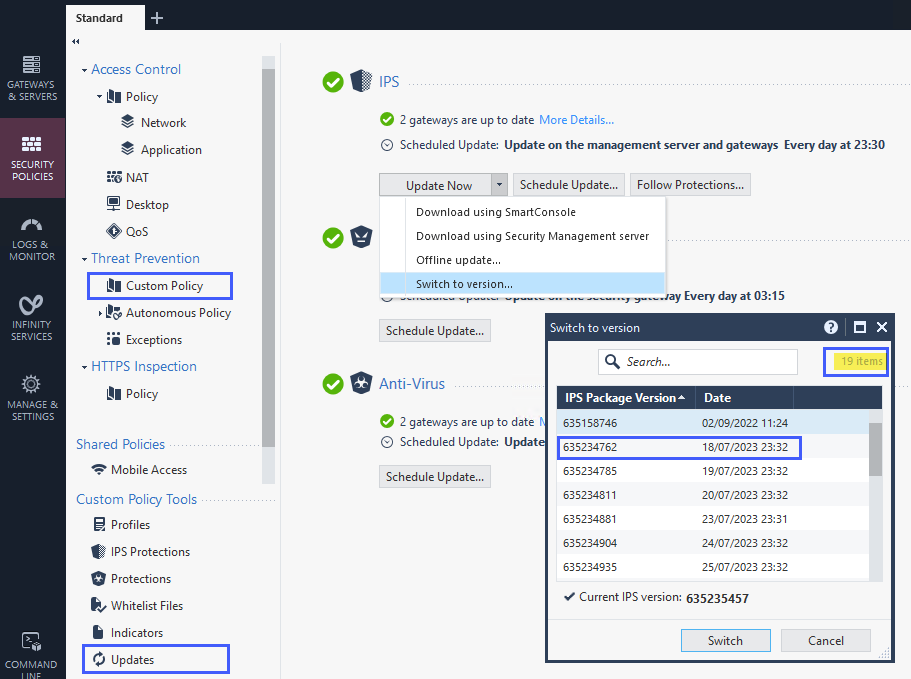

Here's how you can check whether your IPS revisions older than 30 days are purged.

As you can see, now that the issue is fixed for us, the oldest revision kept is from July 18th, so we have only 19 revs in total.

(The much older one, which corresponds to the date on which the major version was either installed or upgraded to, stays present by design.)


That does indeed seem to be the problem.

With 327 revs, at an average of 40 MB each, that's approx. 13 GB for the "pg_largeobject" table alone, which is by far the largest one inside the Postgres DB.

Once the issue is fixed, you can expect it to drop to, and stay at, around 800 MB.

Now regarding the secondary:

This one, at least as of today, will always be at least twice the size of the primary, and in some cases 3 times (which seems to be your case).

But once the root cause is fixed on the primary, this doubling or tripling will be much less of a problem, I guess.

You will still need enough free space on it to debloat/vacuum the DB once the issue on the primary is fixed.
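A rough way to reason about "enough free space" is sketched below. It assumes a rewrite-style cleanup such as PostgreSQL's VACUUM FULL, which builds a new copy of the table before dropping the old one and so temporarily needs extra disk space. Whether that is the procedure TAC applies is not stated in this thread. The function name, the 20% margin and the 800 MB estimate are illustrative assumptions.

```python
import shutil


def enough_room_for_rewrite(free_bytes: int, live_data_bytes: int, margin: float = 1.2) -> bool:
    """True if the free space covers a fresh copy of the live data plus a safety margin."""
    return free_bytes >= live_data_bytes * margin


# Hypothetical estimate: about 800 MB of live data once old revisions are purged.
live_estimate = 800 * 1024 ** 2

# Free bytes on the root partition of the machine this runs on.
free_now = shutil.disk_usage("/").free
print(enough_room_for_rewrite(free_now, live_estimate))
```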

Last but not least, and to spare you the 9 months that our case took, you can reference SR#6-0003437115 and bug ID PRHF-29565 when you contact TAC.


Many thanks


Did TAC eventually solve the ticket for you? Can you share what they did or instructed?




I'm just a bit confused regarding the 5-minute interval that you mention between full syncs.

In the case of my customer, and also at another one with the same Full Mgmt/HA cluster config, I've never seen anything but a sync every hour.

Unless what I'm seeing every hour is something else.

But it really seems to be exactly that, with high network traffic from primary to secondary at that moment, and several Postgres processes putting a high load on the CPUs.

Nothing of this kind happens between those hourly intervals.

Also, regarding your last paragraph's title, shouldn't that be "IPS purge not running on SA primary"?

Thanks.


@jgar, thank you for the correction to the heading of the second section.

Regarding the full sync happening every hour and not every 5 minutes, there are 2 possible explanations:

1. One of the improvements made to full sync is that, unlike in the past, when it did a full copy-paste, it now checks each table in the DB for differences between the source machine and the target machine, and only copies the table when there is a difference. It's possible that the number of full syncs the customer sees reflects the amount and frequency of the changes they make.

Note: this applies to the automatic full sync operation and not to the manual one. The manual full sync copies and pastes everything.

2. It's possible that some full syncs are skipped due to system load (usually FWM), which is normal. If more than a few automatic full sync requests are skipped, you'll see a yellow triangle in SmartConsole reporting an issue. So if the customer doesn't see it, I don't see a reason to be concerned.
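The per-table check described in option 1 can be pictured with the following sketch. It is only an illustration of the "compare first, copy only what differs" idea and not the actual Management implementation. The digest approach, the function names, the table names and the sample rows are all assumptions.

```python
import hashlib


def table_digest(rows: list[tuple]) -> str:
    """Order-independent digest of a table's rows."""
    h = hashlib.sha256()
    for row in sorted(rows):
        h.update(repr(row).encode("utf-8"))
    return h.hexdigest()


def tables_to_copy(source: dict[str, list[tuple]], target: dict[str, list[tuple]]) -> list[str]:
    """Names of the tables whose content differs between source and target."""
    return sorted(
        name
        for name, rows in source.items()
        if table_digest(rows) != table_digest(target.get(name, []))
    )


def automatic_full_sync(source: dict[str, list[tuple]], target: dict[str, list[tuple]]) -> list[str]:
    """Copy only the differing tables and return the names that were copied."""
    changed = tables_to_copy(source, target)
    for name in changed:
        target[name] = list(source[name])
    return changed


# Made-up sample data: only one table differs between the two machines.
primary = {"policies": [(1, "allow"), (2, "drop")], "ips_revisions": [(117, "rev-b")]}
standby = {"policies": [(1, "allow"), (2, "drop")], "ips_revisions": [(116, "rev-a")]}

print(automatic_full_sync(primary, standby))  # ['ips_revisions']
print(automatic_full_sync(primary, standby))  # [] because nothing changed since the last run
```

Under this model, a run that finds no differences copies nothing, which would be consistent with seeing heavy sync activity less often than every 5 minutes.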
