
Newbie question - Exporting logs from old log files

Hi all,

I am somewhat new to Check Point. I am looking to export data for specific dates from log files that are a few months old. I tried using SmartView, but it only has data for the past 29 days.

I was able to use the R80.30 SmartConsole, which let me choose File > Open Log File and select the log files for the required date, but when I choose File > Export to CSV, it only exports the data on screen and in the screen buffer. I read a posting from 2017 which stated that the ability to open older log files in SmartView was expected in "one of the next versions".

Is there any way to do what I need? The extract could contain several thousand entries.

Thanks in advance

---

BUMP - can anyone please confirm?

---

You still need to use SmartView to do this as far as I know.

---

The easiest way to do that is to use the web log viewer (available from R80.20 at https://<log_server_IP>/smartview).

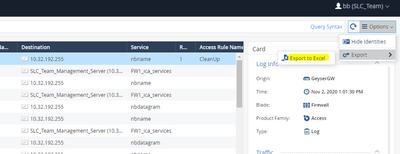

There you can export up to 1 million logs at a time. Open the timeframe selector, pick the required date and time, and then choose Option -> Export -> Export to Excel.

If you need to export more than a million logs, you might need to split the export across narrower timeframes. I recommend filtering the data down to what you need in that timeframe first, so you won't need to export the logs more than once.

I also recommend choosing a column profile with the data you need and exporting only the visible data rather than all data, if you have no use for the rest.

Kind regards, Amir Senn

---

Hi @Amir_Senn

Can I export all logs with it?

---

Only in chunks of a million.

What is the actual task you're trying to accomplish?

If you can provide more details about why you're trying to do this, there may be alternate solutions available.

---

The unit is running R80.30, and so it says that I cannot run the R80.20 console against this.

Is there any other way to retrieve the logs, please?

---

1) All R80.20 features are available in R80.30.

2) You don't need to open SmartConsole for this. The web app needs no installed GUI client; it runs in your browser at the IP of the log server (https://<log_server_IP>/smartview).

Kind regards, Amir Senn

---

OK, let me start again. I start by going to Options > File > Open Log File... and selecting the old log file for the date I need.

I then select my subset of records.

I then click Options > File > Export to Excel CSV, but this only exports what is displayed on screen (and maybe a bit more).

Please clarify how to export the whole filtered selection of records (my subset of the whole log file).

---

1. Go to the web app at https://<log_server_IP>/smartview, not SmartConsole(!) - see picture 1.

2. Log in with the same credentials as in SmartConsole.

3. Open the Logs view. By default, only 3 tabs are shown when you first open the web app: click the '+' sign to open the catalog, and open Logs view from there - picture 3.

4. Select the desired timeframe - picture 4.

5. Add extra filters in the query line if needed, and check that the expected results are shown.

6. Option -> Export -> Export to Excel.

Kind regards, Amir Senn

---

When I enter the date I need (6th August), it says "No Data Found", because it is not within the last 29 days. I tested by selecting earlier dates until I got data, and the data only goes back 29 days. So the only way I could retrieve the data I need was with the client's Open Log File option.

Have I missed something?

---

I see, that means those log files are no longer indexed. Either your index retention is set to 29-30 days, or emergency maintenance was triggered.

You can verify this with "ls -lh $RTDIR/log_indexes/". In any case, I recommend reviewing the log retention settings on the log server object.
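A minimal sketch of the checks above, assuming a shell in Expert mode on the log server with $RTDIR and $FWDIR already set. The function name check_log_storage is hypothetical: it lists the index directory (to see how far back indexes go) and shows free space on the log partition, since emergency maintenance is driven by a free-space threshold.

```shell
# Hypothetical helper (sketch): assumes Expert mode, with $RTDIR and $FWDIR
# set by the Check Point environment. Both directories can be overridden.
check_log_storage() {
  local index_dir="${1:-$RTDIR/log_indexes}"
  local log_dir="${2:-$FWDIR/log}"

  # List existing indexes to see how far back indexing currently goes.
  echo "== Indexes in $index_dir =="
  ls -lh "$index_dir"/

  # Show free space on the log partition; emergency maintenance deletes the
  # oldest logs and indexes when free space reaches its threshold.
  echo "== Free space for $log_dir =="
  df -h "$log_dir"
}
```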

I suggest one of the following actions:

1) If you only want to keep the log file, you can always copy it back to the server and open it. Log files are located in "$FWDIR/log/". Make sure to copy all files with the same date: each log file comes with a few smaller companion files that hold relevant data and are needed as well.

2) Use SmartConsole as you suggested: a) open the log file, b) filter the logs as much as you can, c) scroll down until all the results you want are loaded, and then export.

3) Try running CPlgv.exe from the SmartConsole directory. This is the old Tracker. You can open the log file you want and export it to text (Menu -> File -> Export). This might not work, depending on your version.
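A minimal sketch of option 1, assuming Expert mode and that the archived files' names start with the log date in YYYY-MM-DD form (check this against your archive first). The function restore_logs and its archive-directory argument are hypothetical. It copies every file for that date, so the smaller companion files come along with the .log file.

```shell
# Hypothetical helper (sketch). Assumptions: file names begin with the log
# date (YYYY-MM-DD), and the archive directory is wherever you kept copies.
restore_logs() {
  local log_date="$1"                  # date prefix, format YYYY-MM-DD
  local archive_dir="$2"               # hypothetical archive location
  local dest_dir="${3:-$FWDIR/log}"    # Check Point log directory

  # Copy the .log file *and* its companion files: the glob matches every
  # file whose name starts with the date.
  local found=0
  for f in "$archive_dir"/"$log_date"*; do
    [ -e "$f" ] || continue            # the glob matched nothing
    cp -p -- "$f" "$dest_dir"/
    found=$((found + 1))
  done

  echo "Copied $found file(s) for $log_date to $dest_dir"
  [ "$found" -gt 0 ]                   # non-zero exit status if nothing was copied
}
```

For example: restore_logs YYYY-MM-DD /path/to/archive (both placeholders). The files can then be opened with Open Log File, but as noted later in the thread they are not indexed.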

Kind regards, Amir Senn

---

Many thanks. Option 2 will not work, because I need many thousands of lines.

So to clarify, for Option 1, I just need to copy the relevant files back from the archive directory to $FWDIR/log/ and then do the extract as though the date were within the indexed window (by selecting a specific date, namely the date of the files I copied) - correct? Or do I need to force some form of indexing?

---

After putting the log files back in $FWDIR/log/ you will be able to open them with open log file as you do now.

These files won't be indexed, though. Apart from online logs, the indexer by default only creates indexes for today's and yesterday's log files. This is determined by the log file's creation date, which is set when the file was originally opened. We could change the "days to index" value to include August, but that would also include all newer files, meaning you would need to index around 60 days, which I don't recommend without more details.

Kind regards, Amir Senn

---

So what is the advantage of putting them back into $FWDIR/log/? I can already use Open Log File and select a log file, but then I can't export several thousand records. Does this mean the logs are already in $FWDIR/log/?

---

This will keep your logs stored so they won't be erased.

You probably don't have the indexes because of log maintenance, which keeps or removes the log files and the indexes according to your settings.

There are 2 kinds of maintenance:

1) Emergency - if free disk space reaches the configured threshold, it starts deleting the oldest log files and the oldest indexes.

2) Planned - each log server has a setting for the number of days to keep logs and indexes.

You don't have the indexes for that date because of one of these maintenance types. If you didn't perform an upgrade, it's probably the second one, since you still have the log files.

So keeping a copy on another computer or server preserves the files even if maintenance is triggered. If you need to access them later and they were erased by maintenance, you can copy them back and open them.

Kind regards, Amir Senn

---

Sorry, but I am still confused.

Right now, in the GUI, I cannot open any files other than the indexed ones, so this does not seem to be an option for me. In the R80.30 console, I can click Options > File > Open Log File..., and the log files I want are in the list.

So, simply: how do I extract all of the records I need, given that there will be several thousand lines?

---

I may have one more solution for you.

Connect to the log server over SSH and run "CPLogFilePrint -m su -indent -join <log_file_name>.log | grep <argument_to_filter> > <output_file>.txt"

(-m su -indent -join is optional, but it formats the logs nicely.)

This writes the logs that match your grep argument to a file.

I suggest running it once without writing to a file, to check that the filter works properly.
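The preview-then-export workflow described above, sketched as two hypothetical shell functions (preview_logs and export_logs) that wrap the same CPLogFilePrint command. This assumes Expert mode on the log server; the 20-line preview limit is an arbitrary choice.

```shell
# Hypothetical wrappers (sketch) around the CPLogFilePrint command above.
# Assumes Expert mode on the log server.

# Preview: print only the first matches, to check the filter on screen.
preview_logs() {
  local log_file="$1" pattern="$2"
  CPLogFilePrint -m su -indent -join "$log_file" | grep -- "$pattern" | head -n 20
}

# Export: write every matching line to a file and report how many there were.
export_logs() {
  local log_file="$1" pattern="$2" out_file="$3"
  CPLogFilePrint -m su -indent -join "$log_file" | grep -- "$pattern" > "$out_file"
  echo "$(wc -l < "$out_file") matching line(s) written to $out_file"
}
```

For example: preview_logs <log_file_name>.log <argument_to_filter>, and once the output looks right, export_logs <log_file_name>.log <argument_to_filter> <output_file>.txt.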

Kind regards, Amir Senn

---

OK, so I am looking for all records with a specific destination, so it would be: CPLogFilePrint -m su -indent -join <log_file_name>.log | grep 12.34.56.78 > <output_file>.txt

Do I need to put the destination IP address in quotes (and if so, single or double)? And can I run this from clish? I don't have Expert access right now.

---

Try: grep "'dst': <IP>"
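A minimal sketch addressing the quoting question, assuming Expert mode and that each matching record contains the text 'dst': <IP> as suggested in this reply. Wrapping the pattern in double quotes passes the single quotes through to grep literally. The hypothetical grep_dst function also escapes the dots (in a regular expression an unescaped "." matches any character) and requires a non-digit or end of line after the address, so 10.0.0.1 does not also match 10.0.0.15. Check a preview first, since the exact field format may differ between versions.

```shell
# Hypothetical helper (sketch): filter CPLogFilePrint output on stdin for an
# exact destination IP. Assumes the field is printed as 'dst': <IP>.
grep_dst() {
  local ip="$1"
  local ip_re
  # Escape the dots so they match literally instead of "any character".
  ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
  # Require a non-digit or end of line after the IP (10.0.0.1 != 10.0.0.15).
  grep -E -- "'dst': ${ip_re}([^0-9]|\$)"
}
```

For example: CPLogFilePrint -m su -indent -join <log_file_name>.log | grep_dst 12.34.56.78 > <output_file>.txt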

Kind regards, Amir Senn
