
Upgrading from R81.10 to R81.20

I am upgrading the firewall appliance members of a cluster from R81.10 T87 to R81.20.

I am following the R81.20 Installation and Upgrade Guide, page 417, "Zero Downtime Upgrade of a Security Gateway Cluster", and it is not accurate!

Procedure:

1. On each Cluster Member, change the CCP mode to Broadcast.

Important - This step does not apply to R80.30 with Linux kernel 3.10 (run the "uname -r" command).

Best Practice - To avoid possible problems with switches around the cluster during the upgrade, we recommend changing the Cluster Control Protocol (CCP) mode to Broadcast.

Step 1 - Connect to the command line on each Cluster Member.

Step 2 - Log in to the Expert mode.

Step 3 - Change the CCP mode to Broadcast:

    [Expert@FW1:0]# cphaconf set_ccp broadcast

Notes:

- This change does not require a reboot.

- This change applies immediately and survives reboot.

Actual output:

1) First issue:

    [Expert@FW1:0]# cphaconf set_ccp broadcast
    CCP mode is only unicast
    This command is obsolete
    [Expert@FW1:0]#

That is OK, if it is not a problem, since the CCP mode is now unicast only.

2) Do we need to remove the standby member from the cluster and add it back in? If so, what are the CLI commands to remove/add a member?

Running "clusterxl_admin down" gives:

    [Expert@FW1:0]# clusterxl_admin down
    bash: clusterxl_admin: command not found
    [Expert@FW1:0]#

Step 4 - Make sure the CCP mode is set to Broadcast:

    cphaprob -a if

2. On the Cluster Member M2, upgrade to R81.20 with CPUSE, or perform a Clean Install of R81.20.

Important - You must reboot the Cluster Member after the upgrade or clean install.

Given all of the above, what is the exact procedure, since it is not listed?

Regards

Dave F

Accepted Solution

OK, not to exaggerate now, but I had done this probably 50 times and every time I did it, below is what I followed and NEVER had an issue (forget about the versions, process was literally the same even 20 years ago lol)

I will list steps I do:

-generate backups on both cluster members (I dont bother with snapshots, but thats your choice)

-push policy before the upgrade, just to make sure all is good

-download say R81.20 (latest recommended jumbo) and verify it can be upgraded

-install R81.20 on BACKUP member and reboot

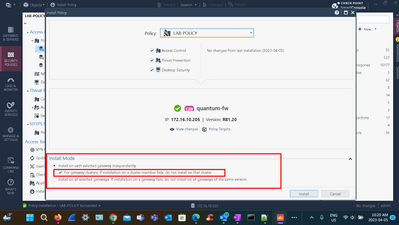

-once rebooted, change cluster version in dashboard to R81.20 and uncheck that option for cluster "dont install policy..." and once policy is done, it will ONLY apply on upgraded member

-current master is STILL master, one on R81.10

-issue failover by running clusterXL_admin down on R81.10 member, so everything goes to upgraded member and verify this by running cphaprob roles and cphaprob state commands

-if all good, upgrade R81.10 member to R81.20, install policy after reboot, just recheck option "dont install policy..." (its also indicated what option that is in zero downtime upgrade)

-once done, verify cluster state and if all good, you can fail back to original master member (just dont forget to run clusterXL_admin up) : - )

-once done, verify all...routing, nat, vpn etc

I am 100% POSITIVE in these steps, because they never failed for me.


Best,

Andy
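The member-side CLI steps of the procedure above can be collected into a small Bash sketch. It only wraps the commands named in the post (clusterXL_admin down/up, cphaprob roles, cphaprob state); the run wrapper, the DRY_RUN switch and the function names are hypothetical, and the SmartConsole steps (changing the cluster version, the policy-install option) are not covered. By default it only prints what it would run.

```bash
#!/usr/bin/env bash
# Hypothetical helper for the member-side steps of the procedure above.
# Only clusterXL_admin and cphaprob come from the post; the function
# names and the DRY_RUN switch are illustrative assumptions.
# Run the functions in Expert mode on the member named in each comment.

DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = execute them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# On the R81.10 (still active) member, after the backup member has been
# upgraded, rebooted and has received policy: move traffic to the
# upgraded member, then check roles and state.
fail_over_to_upgraded_member() {
  run clusterXL_admin down
  run cphaprob roles
  run cphaprob state
}

# On the original master, once both members run R81.20 and policy is
# installed: bring it back into service and check the cluster state.
return_member_to_service() {
  run clusterXL_admin up
  run cphaprob state
}
```

For example, on the R81.10 member, source the file and call fail_over_to_upgraded_member to review the commands, then run DRY_RUN=0 fail_over_to_upgraded_member to execute them. Keeping the dry run as the default is a deliberate choice: it makes it harder to fail over the wrong member by accident.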


34 Replies

---

On our last upgrade, from R80.40 to R81.10, these commands were accurate. I'm also curious about the new process for Zero Downtime upgrades.

---

I would use "set cluster member mvc on" on the R81.20 device, then, once both members are running R81.20, turn it off.

---

Thanks for the reply. I do have a question: both nodes are at R81.10 T87, so neither is at R81.20 yet. Does "set cluster member mvc on" work on R81.10? My only worry is the upgraded device taking control while the Active node is still on R81.10.

Thanks, Dave F

---

I just recently did a cluster upgrade from R81.10 to R81.20 exactly the same way I have done it for years: I never changed the CCP mode, MVC, nothing. I upgraded the current backup, rebooted it, changed the cluster version in SmartConsole to R81.20, unchecked "dont push to cluster...", and after the policy install, failed over from the current master to the upgraded member by running clusterXL_admin down on the R81.10 member. Then I upgraded that firewall, rebooted it, rechecked the option I mentioned when pushing policy, made sure all was good, and failed back to the original master member.

That's it, plain and simple, no issues :-)

I have a perfectly working cluster in the lab on R81.10 Jumbo 94 and am happy to do any testing for you. Those VMs are currently off because my colleague needed more resources for Aruba training, but I can always power them on after work hours and test.

Best,

Andy

---

I was once on a call with a guy from R&D about something unrelated, and I told him that the line about changing the CCP mode should either be removed or fixed. He said they would do it; it's been 6 years now and it's still there. I can't even count how many cluster upgrades I have done, and I never changed the CCP mode at all, and everything worked fine.

Best,

Andy

---

Thanks for the reply. What I am concerned about: after I upgrade the standby node first (R81.10 -> R81.20), it becomes the active node while the Active node running R81.10 also thinks it is the active node.

Regards, Dave F

---

Thanks, Rock. Well, I guess I do not have your luck: recently I was upgrading from R80.40 (latest Take) to R81.10, and I had 2 active nodes, one on R80.40 and one on R81.10, fighting to be the one. The not-so-quick fix was to power down and unplug the R81.10 FW, blow it away, and reinstall it as a new RMA'd replacement device.

Regards, Dave F

---

Not sure what to tell you, Dave, sorry. Every time I did this, after the current backup was upgraded and rebooted, the current master was ALWAYS still master. That makes perfect sense: even though it had the lower software version, rebooting the backup member with the higher version would never make it become master.

Best,

Andy

---

By the way, this is the option I was referring to in my steps; I forgot to attach a screenshot of it.

Best,

Andy

---

Hey,

I like the method you use to upgrade your clusters.

But I am curious about 2 things.

What package do you use in your upgrade method?

Do you simply use the CPUSE package + Hotfix (2 packages to install on each GW in total), or do you use the Blink image on each GW (where, as I understand it, you would install only one package)?

The other thing: at some point during your cluster upgrade, do you use the "cphaconf mvc on" command?

Is that command necessary?

---

Buddy,

In your upgrades, do you use the CPUSE package + the Hotfix package?

Or just the Blink image?

At least in your experience.

---

I always use the Blink image.

Best,

Andy

---

Hello, Andy.

To use the Blink image, you must have a minimum amount of free space in /var/log for upgrades, right?

I guess this minimum might be 10 GB. Is this correct?

At any point in your upgrades, have you needed to use the "cphaconf mvc on" command?

Regards

---

From my own experience, I would say /var/log should have a minimum of 20 GB free for the upgrade. As far as MVC goes, I never found it useful... again, just my own experience.

Best,

Andy

---

Andy,

Once the version has been upgraded with the Blink image package, can this package be deleted, to free up disk space again?

Could you tell me the path where the CPUSE and Blink image packages are stored once they have been installed, please?

---

Buddy, we have been through this many times before, but since I like you, I will say it again lol. But PLEASE make sure you bookmark this community post, so you can refer to it later when needed.

See the post below from smart man @G_W_Albrecht:

https://community.checkpoint.com/t5/Security-Gateways/Disk-Space-issues-on-Gateway/m-p/161537#M28601

In that post, you will also see my response:

I always do something like this. First, run df -h and see which directory is the "fullest". Then, if it shows, for argument's sake, /var/log at 90% capacity, do something like this:

    find /var/log -size +500000000c

That will look for ANY files bigger than 500 MB in /var/log. You can apply the same method to any directory and any file size.

Andy

*********************************************

Now, to help you even further, also refer to the helpful SKs below:

https://support.checkpoint.com/results/sk/sk60080

https://support.checkpoint.com/results/sk/sk33997

https://support.checkpoint.com/results/sk/sk63361

Finally, to answer your question: YES, it's safe to delete the upgrade package, and it is always in /var/log/CPda/repository.

Best,

Andy
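Andy's quoted method (look for files above a size threshold in the directory that df -h shows as fullest) can be wrapped in a small Bash function. This is a sketch under stated assumptions: the function name list_large_files is hypothetical, it assumes a standard find as available in Expert mode, and it only lists files, so deciding what is safe to delete stays with you and the SKs above.

```bash
#!/usr/bin/env bash
# Hypothetical helper generalising the command from the post:
#   find /var/log -size +500000000c
# Lists regular files under a directory that are larger than a given
# number of bytes. Listing only; nothing is deleted.

list_large_files() {
  local dir="$1"
  local min_bytes="$2"   # e.g. 500000000 for roughly 500 MB
  # -type f: regular files only (the original command did not filter)
  # -size +Nc: strictly more than N bytes ("c" means bytes)
  find "$dir" -type f -size +"${min_bytes}c"
}
```

For example, run df -h first, then list_large_files /var/log 500000000, which matches the original find command except that directories and other non-regular files are skipped. To review what is in the CPUSE repository mentioned above, ls -lh /var/log/CPda/repository is enough.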

---

If you have a separate management server, just upload the R81.20 CPUSE package into its repository and upgrade using SmartConsole. It handles all of the steps for you. Seriously, it's great. Saves so much headache.

For your question about removing a member from the cluster and adding it back, no, you absolutely don't remove a member from the cluster.

For your question about forcing a failover, it's clusterXL_admin. The XL is uppercase.

Be aware that a "zero-downtime upgrade" is probably not what you think it is. It means there is no point at which new connections cannot be formed, but it drops ongoing connections. You probably want a multi-version cluster upgrade, which involves 'cphaconf mvc on'.
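For the multi-version cluster (MVC) upgrade mentioned above, the member-side commands can be sketched the same way. This is a hedged dry-run sketch, not the documented procedure: it follows the earlier reply in this thread (enable MVC on the member already running R81.20, turn it off once both members run R81.20), uses the Expert-mode form 'cphaconf mvc on' named here, and assumes 'cphaconf mvc off' as its counterpart. The function names and the DRY_RUN switch are hypothetical; check the R81.20 Installation and Upgrade Guide for where MVC fits in the sequence before relying on it.

```bash
#!/usr/bin/env bash
# Hypothetical dry-run sketch of the MVC steps discussed in the thread.
# "cphaconf mvc on" appears in the thread; "cphaconf mvc off" is assumed
# to be its counterpart. Verify against the upgrade guide first.

DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = execute them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# On the member that has just been upgraded to R81.20 and rebooted.
enable_mvc_on_upgraded_member() {
  run cphaconf mvc on
  run cphaprob state
}

# On the member where MVC was enabled, once BOTH members run R81.20
# (the earlier reply says to turn MVC off at that point).
disable_mvc_after_upgrade() {
  run cphaconf mvc off
  run cphaprob state
}
```

As with any dry-run wrapper, review the printed commands first and only then re-run with DRY_RUN=0 on the intended member.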

---

Personally, I never found it any faster to do the process from SmartConsole.

Best,

Andy

---

Agreed 🙂 Things are always slower when you are watching 😄

---

I heard that LOL

Best,

Andy

---

For me, it's faster both in terms of wall-clock time for one upgrade (doesn't rely on a person watching stuff to trigger the next step) and in terms of how many upgrades we can do in a month. Only a handful of people on my team have ever installed Gaia from scratch. If every upgrade requires somebody who knows what 'cphaconf mvc on' does, then one of the leads needs to be personally involved in a lot of upgrades, making them the limiting factor.

---

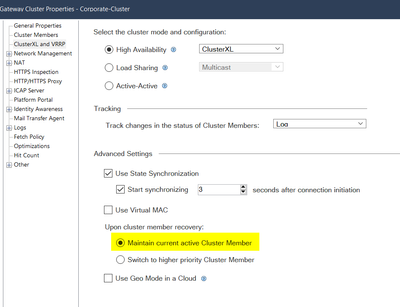

Like @Bob_Zimmerman said, I would prefer using SmartConsole; it is the best and easiest way to have all the steps of an upgrade done for you. One thing to mention: before you do the upgrade, you have to check the cluster recovery settings, which must be set to "Maintain current active Cluster Member". With the other setting, there are some connectivity effects during the upgrade if you start the process with the wrong member. But with "Maintain current active Cluster Member" you are safe.

---

Good point about that screenshot, @Wolfgang. It's just me, but personally I prefer to stick with the method that I know works 100%, which is upgrading from the web UI. I'm not saying the SmartConsole method is worse, I know it works, but I just don't find it any faster or more useful. Again, that's just me.

Cheers,

Andy

---

Thanks, Wolfgang. I double-checked my ClusterXL settings, and it is set as you mentioned.

Regards, Dave F

---

Thanks, Bob_Zimmerman, noted. I will add it to my memory banks, as I do forget to type commands exactly as documented.

Regards,

Dave F

---

Hello,

Is this way of upgrading, from SmartConsole, feasible on R80.30 and R80.40?

I have clusters on both versions, and we need to bring them to R81.10, but I am not sure whether the upgrade from SmartConsole works with those versions.

I understand that with this upgrade method, the package to be installed is downloaded only to the SMS, correct?

Are there any disk space considerations on the appliances?

Thank you.

---

You need to upgrade the management to R81, R81.10, or R81.20 first. Older versions don't support major version upgrades from SmartConsole. As long as the management can do major version upgrades, it's just like doing the upgrade via CPUSE directly on the boxes. As I recall, you should step R80.30 up to R80.40 first, then go to R81+.

As for the package, you can put it on the management and have the management push it to the firewall, or you can have the management tell the firewall to download it for itself. Either way, the package ends up on the firewall in the CPUSE repository before it is installed.

There are the same disk space concerns as any other CPUSE upgrade. You need to have the space available in /var/log to import the package, and you need to have the unallocated space available in vg_splat for a snapshot.
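As a rough pre-check for the /var/log point above, the Bash sketch below tests whether the filesystem holding a directory has a given amount of space available. It assumes POSIX df -P -k output; the function name has_free_gib is hypothetical, and the threshold is yours to choose (Andy's rule of thumb earlier in the thread was about 20 GB free, which is one user's experience, not an official figure). It does not check the unallocated space in vg_splat needed for a snapshot.

```bash
#!/usr/bin/env bash
# Hypothetical pre-check: does the filesystem holding a directory have at
# least N GiB available? Relies on POSIX df output (-P, 1024-byte blocks).

has_free_gib() {
  local dir="$1"
  local min_gib="$2"
  local avail_kib
  # Line 2, column 4 of "df -P -k" is the available space in KiB.
  avail_kib="$(df -P -k "$dir" | awk 'NR == 2 { print $4 }')"
  [ -n "$avail_kib" ] || return 2        # df failed or printed no data
  [ "$avail_kib" -ge $(( min_gib * 1024 * 1024 )) ]
}
```

For example, has_free_gib /var/log 20 || echo "less than 20 GiB free in /var/log" before importing a package. The function returns a status instead of printing, so it can gate other steps in a script.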

---

How much free space should the appliances (SMS or GW) have in /var/log to be able to upgrade versions with CPUSE?

Is there a recommended amount of free space?


©1994-2026 Check Point Software Technologies Ltd. All rights reserved.
