Background:

Current install base:

- Site 1 (actively processing production traffic)
  - 1X Smart-1 5050 (MDS)
  - 2X 23500 (VSX)
- Site 2 (not yet deployed; being used as a testbed)
  - 1X Smart-1 5050 (MDS)
  - 2X 23500 (VSX)

In preparation for an upgrade from R80.30 to R80.40 at Site 1, we have been simulating the process using the devices at Site 2. We have gone through a couple of iterations of the upgrade process in order to catch any issues and to document the steps.

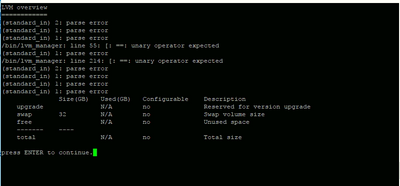

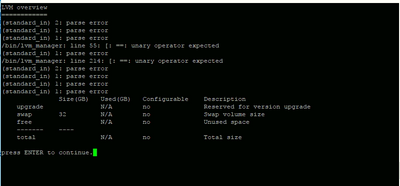

On the gateway appliances specifically, I encountered an issue after doing a couple of mock upgrades. I got the message below:

Result: Install of package Check_Point_R80.40_T294_Fresh_Install_and_Upgrade.tgz Failed

Failed to create new partition under /mnt

Contact Check Point Technical Services for further assistance.

I then rebooted the appliance in order to try again. It has been stuck in a reboot loop ever since. The last messages in the loop are:

Reading all physical volumes. This may take a while...

Volume group "vg_splat" not found

mount: could not find filesystem

Kernel panic - not syncing: Attempted to kill init!

Entering kdb (current=0xffff81011dd0f7b0, pid 1) on processor 20 due to KDB_ENTER()

We opened an SR, and support asked us to try diagnostics from the boot menu. That option, as well as the others, had no effect on the reboot loop (we never got a prompt to enter any commands or select any options). We also tried a fresh install, both from ISOmorphic and from a USB DVD drive, but the console shows drivers starting to load and not much happens after that.

Thinking this was a bug or a hardware issue on the original appliance, I attempted an upgrade on the second box, only to hit the same message about failing to create the partition under /mnt. At that point I realized it had something to do with the process I was following.

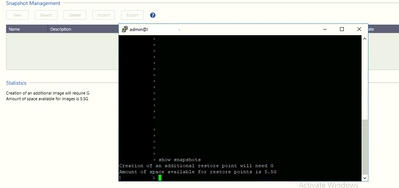

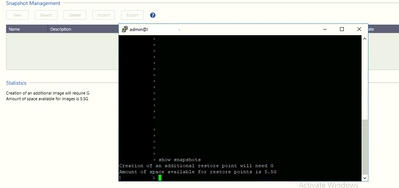

Has anyone experienced this? What was the resolution? I'm attaching some screenshots of the various output received during this issue.