Latency on Links

Hello,

We have been experiencing latency on our bonded links. It has had a major impact on production and caused us to move our bonded gateway back to our core switch. I am simply not familiar enough with the different core configurations to make an assessment here. Originally I set up our bond with multi-queuing. Can someone assist? Here is the Super Seven output:

[Expert@CSBFW-PROD-A:0]# /usr/bin/s7pac

+-----------------------------------------------------------------------------+

| Super Seven Performance Assessment Commands v0.3 (Thanks to Timothy Hall) |

+-----------------------------------------------------------------------------+

| Inspecting your environment: OK |

| This is a firewall....(continuing) |

| |

| Referred pagenumbers are to be found in the following book: |

| Max Power: Check Point Firewall Performance Optimization - Second Edition |

| Available at http://www.maxpowerfirewalls.com/ |

| |

+-----------------------------------------------------------------------------+

| Command #1: fwaccel stat |

| |

| Check for : Accelerator Status must be enabled (R77.xx/R80.10 versions) |

| Status must be enabled (R80.20) |

| Accept Templates must be enabled |

| Message "disabled" from (low rule number) = bad |

| |

| Chapter 9: SecureXL throughput acceleration |

| Page 278 |

+-----------------------------------------------------------------------------+

| Output: |

Accelerator Status : on

Accept Templates : enabled

Drop Templates : disabled

NAT Templates : disabled by user

Accelerator Features : Accounting, NAT, Cryptography, Routing,

HasClock, Templates, Synchronous, IdleDetection,

Sequencing, TcpStateDetect, AutoExpire,

DelayedNotif, TcpStateDetectV2, CPLS, McastRouting,

WireMode, DropTemplates, NatTemplates,

Streaming, MultiFW, AntiSpoofing, Nac,

ViolationStats, AsychronicNotif, ERDOS,

NAT64, GTPAcceleration, SCTPAcceleration,

McastRoutingV2

Cryptography Features : Tunnel, UDPEncapsulation, MD5, SHA1, NULL,

3DES, DES, CAST, CAST-40, AES-128, AES-256,

ESP, LinkSelection, DynamicVPN, NatTraversal,

EncRouting, AES-XCBC, SHA256

+-----------------------------------------------------------------------------+

| Command #2: fwaccel stats -s |

| |

| Check for : Accelerated conns/Totals conns: >25% good, >50% great |

| Accelerated pkts/Total pkts : >50% great |

| PXL pkts/Total pkts : >50% OK |

| F2Fed pkts/Total pkts : <30% good, <10% great |

| |

| Chapter 9: SecureXL throughput acceleration |

| Page 287, Packet/Throughput Acceleration: The Three Kernel Paths |

+-----------------------------------------------------------------------------+

| Output: |

Accelerated conns/Total conns : 0/35 (0%)

Accelerated pkts/Total pkts : 0/3983956 (0%)

F2Fed pkts/Total pkts : 3983956/3983956 (100%)

PXL pkts/Total pkts : 0/3983956 (0%)

QXL pkts/Total pkts : 0/3983956 (0%)

+-----------------------------------------------------------------------------+

| Command #3: grep -c ^processor /proc/cpuinfo && /sbin/cpuinfo |

| |

| Check for : If number of cores is roughly double what you are excpecting, |

| hyperthreading may be enabled |

| |

| Chapter 7: CorexL Tuning |

| Page 239 |

+-----------------------------------------------------------------------------+

| Output: |

6

HyperThreading=disabled

+-----------------------------------------------------------------------------+

| Command #4: fw ctl affinity -l -r |

| |

| Check for : SND/IRQ/Dispatcher Cores, # of CPU's allocated to interface(s) |

| Firewall Workers/INSPECT Cores, # of CPU's allocated to fw_x |

| R77.30: Support processes executed on ALL CPU's |

| R80.xx: Support processes only executed on Firewall Worker Cores|

| |

| Chapter 7: CoreXL Tuning |

| Page 221 |

+-----------------------------------------------------------------------------+

| Output: |

CPU 0: eth1-04

CPU 1: Sync Mgmt

CPU 2:

CPU 3:

CPU 4:

CPU 5:

All:

+-----------------------------------------------------------------------------+

| Command #5: netstat -ni |

| |

| Check for : RX/TX errors |

| RX-DRP % should be <0.1% calculated by (RX-DRP/RX-OK)*100 |

| TX-ERR might indicate Fast Ethernet/100Mbps Duplex Mismatch |

| |

| Chapter 2: Layers 1&2 Performance Optimization |

| Page 28-35 |

| |

| Chapter 7: CoreXL Tuning |

| Page 204 |

+-----------------------------------------------------------------------------+

| Output: |

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

Mgmt 1500 0 43600162 2 0 0 36013528 0 0 0 BMRU

Sync 1500 0 61606193 0 0 0 283637876 0 0 0 BMRU

eth1-04 1500 0 5426765033 0 495 495 4930747640 0 0 0 BMRU

lo 16436 0 8398237 0 0 0 8398237 0 0 0 LRU

interface eth1-04: There are no RX drops

+-----------------------------------------------------------------------------+

| Command #6: fw ctl multik stat |

| |

| Check for : Large # of conns on Worker 0 - IPSec VPN/VoIP? |

| Large imbalance of connections on a single or multiple Workers |

| |

| Chapter 7: CoreXL Tuning |

| Page 241 |

| |

| Chapter 8: CoreXL VPN Optimization |

| Page 256 |

+-----------------------------------------------------------------------------+

| Output: |

fw: CoreXL is disabled

+-----------------------------------------------------------------------------+

| Command #7: cpstat os -f multi_cpu -o 1 -c 5 |

| |

| Check for : High SND/IRQ Core Utilization |

| High Firewall Worker Core Utilization |

| |

| Chapter 7: CoreXL Tuning |

| Page 197 |

+-----------------------------------------------------------------------------+

| Output: |

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 8| 8| 84| 16| ?| 4|

| 2| 5| 6| 89| 11| ?| 4|

| 3| 4| 5| 91| 9| ?| 4|

| 4| 4| 4| 92| 8| ?| 4|

| 5| 4| 4| 92| 8| ?| 4|

| 6| 4| 4| 93| 7| ?| 4|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 8| 8| 84| 16| ?| 4|

| 2| 5| 6| 89| 11| ?| 4|

| 3| 4| 5| 91| 9| ?| 4|

| 4| 4| 4| 92| 8| ?| 4|

| 5| 4| 4| 92| 8| ?| 4|

| 6| 4| 4| 93| 7| ?| 4|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 14| 16| 70| 30| ?| 2726|

| 2| 4| 11| 86| 14| ?| 2726|

| 3| 5| 8| 87| 13| ?| 5452|

| 4| 5| 6| 89| 11| ?| 2726|

| 5| 8| 9| 84| 16| ?| 2726|

| 6| 10| 9| 81| 19| ?| 5452|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 14| 16| 70| 30| ?| 2726|

| 2| 4| 11| 86| 14| ?| 2726|

| 3| 5| 8| 87| 13| ?| 5452|

| 4| 5| 6| 89| 11| ?| 2726|

| 5| 8| 9| 84| 16| ?| 2726|

| 6| 10| 9| 81| 19| ?| 5452|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 10| 26| 64| 36| ?| 694|

| 2| 3| 9| 89| 11| ?| 694|

| 3| 5| 8| 87| 13| ?| 694|

| 4| 5| 10| 86| 14| ?| 694|

| 5| 6| 14| 80| 20| ?| 695|

| 6| 7| 13| 80| 20| ?| 697|

---------------------------------------------------------------------------------

+-----------------------------------------------------------------------------+

| Thanks for using s7pac |
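As a rough cross-check of the netstat -ni figures in the output above, the rule of thumb printed by s7pac, (RX-DRP/RX-OK)*100 < 0.1%, can be evaluated with a few lines of awk. This is only a sketch: the function name rx_drop_pct is made up for illustration, and the column positions are assumed from the header row shown in this thread. It works on pasted text and does not call netstat itself.

```shell
# Print "<iface> <RX-DRP percentage>" for netstat -ni style text on stdin.
# Assumed columns (from the header in this thread): 1=Iface, 4=RX-OK, 6=RX-DRP.
rx_drop_pct() {
  awk '$4 ~ /^[0-9]+$/ && $4 > 0 { printf "%s %.6f\n", $1, ($6 / $4) * 100 }'
}

# Sample rows copied from the Super Seven output above.
sample='Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg
Mgmt 1500 0 43600162 2 0 0 36013528 0 0 0 BMRU
eth1-04 1500 0 5426765033 0 495 495 4930747640 0 0 0 BMRU'

printf '%s\n' "$sample" | rx_drop_pct
```

For the eth1-04 counters quoted above this works out to roughly 0.000009%, far below the 0.1% threshold.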

1 Solution

Accepted Solutions


There are a number of things that can be improved here. For one, remove all the virtual switches that only extend a single VLAN from an external interface to a virtual firewall. They consume already scarce system resources without adding any benefit. The only VSW you could keep is 14, which is connected to both VS10 and VS15.

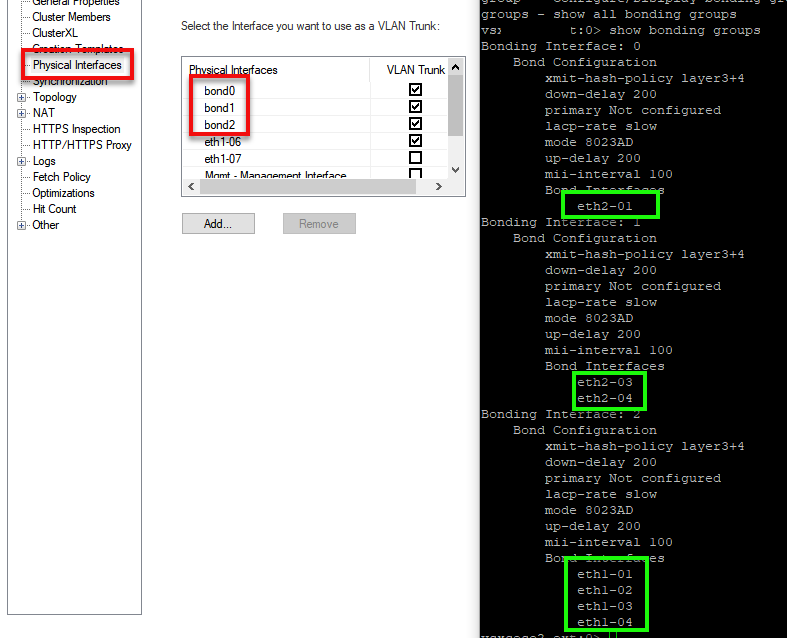

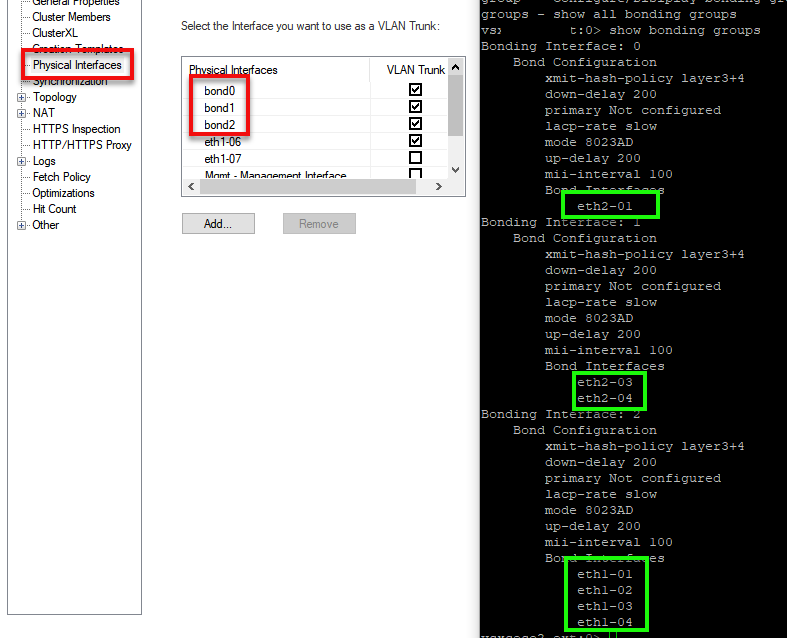

It also appears that bonding is not set up correctly: bond members (the physical interfaces) should not be defined in the VSX topology. For example, I only define bondX under Physical Interfaces in the VSX object, not ethX-Y.

This is an incorrect configuration and could potentially cause issues.

In your case you would need to remove eth1-05 and eth1-08 from VS14, as they appear to be forming bond0.

The same goes for eth1-02 and eth1-03 forming bond1 on VS16: remove them.

Then remove all four (eth1-02, eth1-03, eth1-05, eth1-08) from the VSX Physical Interfaces. VSX should only see the bond name, not its members.

We can continue privately to avoid sharing too many details publicly.
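The check described here can be roughly automated against the per-VS interface listing posted later in this thread (the "--- VS-n ---" blocks). The sketch below is an assumption, not a Check Point tool: the function name find_exposed_bond_members is hypothetical, and it simply treats any plain ethX-Y interface that appears in the same virtual device as a bondN interface as a suspected bond member, which mirrors the inference made in this reply.

```shell
# Read "--- VS-n ---" blocks followed by one interface name per line on stdin.
# Report virtual devices that list both a bondN interface and plain ethX-Y interfaces.
find_exposed_bond_members() {
  awk '
    function report() { if (has_bond && members != "") print vs ":" members }
    /^--- VS-/           { report(); vs = $2; has_bond = 0; members = ""; next }
    /^bond[0-9]+$/       { has_bond = 1; next }
    /^eth[0-9]+-[0-9]+$/ { members = members " " $1 }   # VLAN sub-interfaces (eth1-04.9) are ignored
    END                  { report() }
  '
}

# Sample blocks copied from the listing in this thread.
sample='--- VS-1 ---
eth1-01
wrpj646
--- VS-14 ---
bond0
eth1-05
eth1-08
wrpj648
wrpj962
--- VS-16 ---
bond1
eth1-02
eth1-03
wrpj965'

printf '%s\n' "$sample" | find_exposed_bond_members
```

On this sample it reports VS-14 (eth1-05, eth1-08) and VS-16 (eth1-02, eth1-03), the same four interfaces named above, while VS-1 is left alone because it has no bond.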

25 Replies


Question: Why is CoreXL disabled?

Everything seems to be going F2F/slowpath through only one core, not sure if that high F2F is due to CoreXL being off or if you are experiencing fragmentation issues. Not surprised at all that you are seeing high latency.

Please provide output of:

enabled_blades

fwaccel stats -p

--

CheckMates Break Out Sessions Speaker

CPX 2019 Las Vegas & Vienna - Tuesday@13:30

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course



I guess he should check HyperThreading too...


I'm reading that hyperthreading on the 12400 series needs to be enabled in the BIOS by support.


It appears that I have 5 physical cores, so I plan to enable CoreXL and set 2 of these cores for the FW instances if further research proves this to be the best path. This system has been a bit of a lesson in hard knocks, so I really appreciate your taking the time to assist.

[Expert@a:0]# vsenv 10

Context is set to Virtual Device a:10_PRODFW01 (ID 10).

[Expert@a:10]# enabled_blades

fw vpn cvpn urlf av appi identityServer

[Expert@a:10]# vsenv 15

Context is set to Virtual Device a:15_PRODFW02 (ID 15).

[Expert@a:15]# enabled_blades

fw identityServer

F2F packets:

--------------

Violation Packets Violation Packets

-------------------- --------------- -------------------- ---------------

pkt is a fragment 0 pkt has IP options 4335

ICMP miss conn 40 TCP-SYN miss conn 3578

TCP-other miss conn 39 UDP miss conn 1930086

other miss conn 3603 VPN returned F2F 0

ICMP conn is F2Fed 0 TCP conn is F2Fed 4831

UDP conn is F2Fed 51060 other conn is F2Fed 0

uni-directional viol 0 possible spoof viol 0

TCP state viol 0 out if not def/accl 0

bridge, src=dst 0 routing decision err 0

sanity checks failed 0 temp conn expired 0

fwd to non-pivot 0 broadcast/multicast 0

cluster message 0 partial conn 0

PXL returned F2F 0 cluster forward 782

chain forwarding 0 general reason 0


You have 6 cores. Based on your traffic path distribution I'd recommend that 4 of them be Firewall Workers and 2 be SND/IRQ cores. Doesn't look like the high F2F is caused by IP frags, so no concerns there.

I wouldn't worry about hyperthreading for the moment, I'd focus on getting CoreXL enabled & configured first, run production traffic for a few days, then run the Super Seven again. The only legit reasons you might have CoreXL disabled are:

- Still using the ancient Traditional Mode VPNs (encrypt actions will be present in the rulebase)

- Using route-based VPNs/VTIs (resolved in R80.10 gateway)

- Using QoS blade (resolved in R77.10 gateway)

There are a few other corner cases that require disabling CoreXL, but these are the big ones. See sk61701: CoreXL Known Limitations for the complete list. Since you are using VSX, please also see Kaspars Zibarts' great VSX tuning post; he will be presenting this VSX tuning material at CPX Vienna 2019 right after my presentation: https://community.checkpoint.com/message/29117-security-gateway-performance-optimization-vsx
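One way to see which violations dominate the F2F packets table posted above is to rank its counters. The helper below is only a sketch under stated assumptions: the name top_f2f_reasons is hypothetical, it relies solely on the two-column "reason counter" layout visible in this thread, and it parses pasted text rather than calling fwaccel.

```shell
# Read the two-column "F2F packets" violation table on stdin and print
# the three largest counters as "<count><TAB><reason>".
top_f2f_reasons() {
  awk '{
    name = ""
    for (i = 1; i <= NF; i++) {
      if ($i ~ /^[0-9]+$/) {            # a bare number closes the current reason
        if (name != "") print $i "\t" name
        name = ""
      } else {
        name = (name == "" ? $i : name " " $i)
      }
    }
  }' | sort -rn | head -n 3
}

# Sample rows copied from the table above.
sample='pkt is a fragment 0 pkt has IP options 4335
ICMP miss conn 40 TCP-SYN miss conn 3578
TCP-other miss conn 39 UDP miss conn 1930086
other miss conn 3603 VPN returned F2F 0
ICMP conn is F2Fed 0 TCP conn is F2Fed 4831
UDP conn is F2Fed 51060 other conn is F2Fed 0'

printf '%s\n' "$sample" | top_f2f_reasons
```

On these rows "UDP miss conn" is by far the largest counter while "pkt is a fragment" is 0, which is consistent with the remark that fragmentation is not behind the high F2F rate.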

--

CheckMates Break Out Sessions Speaker

CPX 2019 Las Vegas & Vienna - Tuesday@13:30

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course



Thank you. Considering that this is VSX, would we use the cpconfig method or assign new firewall instances via SmartDashboard?

Also, I have been looking for a way to assign additional cores to the SND. Could you point me in the right direction?

Finally, based on your post above, we should be OK to enable CoreXL. The one thing that confuses me is this output:

| Chapter 7: CoreXL Tuning |

| Page 221 |

+-----------------------------------------------------------------------------+

| Output: |

CPU 0: eth1-04

CPU 1: Sync Mgmt

CPU 2:

CPU 3:

CPU 4:

CPU 5:

All:

Does this mean that 2 of our cores are already in use? eth1-04 is a trunk.


OK, now I understand why we see 100% F2F: it's traffic originating from VS0 that isn't being accelerated, which is totally normal.

Can you share fw ctl affinity -l from the VS0 context, please?

In all honesty, it sounds like your system is underpowered if I see 15 virtual systems on 6 cores. That will be tough.
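To compare the packet path split between contexts, the per-path shares can be recomputed from the pkts lines of a pasted fwaccel stats -s summary. This is a minimal sketch that assumes the line layout shown in this thread; the function name path_share is hypothetical and the sample is the VS0 output from the original post.

```shell
# Read fwaccel stats -s style text on stdin and print "<path> <percent of packets>".
# Only the "... pkts/Total pkts : a/b (n%)" lines are used.
path_share() {
  awk '/pkts\/Total pkts/ {
    split($5, a, "/")                                  # $5 looks like "3983956/3983956"
    pct = (a[2] + 0 > 0) ? a[1] * 100 / a[2] : 0
    printf "%s %.1f\n", $1, pct
  }'
}

# Sample copied from the Super Seven output in the original post (VS0 context).
sample='Accelerated conns/Total conns : 0/35 (0%)
Accelerated pkts/Total pkts : 0/3983956 (0%)
F2Fed pkts/Total pkts : 3983956/3983956 (100%)
PXL pkts/Total pkts : 0/3983956 (0%)
QXL pkts/Total pkts : 0/3983956 (0%)'

printf '%s\n' "$sample" | path_share
```

Running the same function on output captured after vsenv into each virtual firewall would show the split for the contexts that actually carry production traffic.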


A:0> fw ctl affinity -l

Sync: CPU 1

Mgmt: CPU 1

eth1-04: CPU 0

VS_0 fwk: CPU 2 3 4 5

VS_1 fwk: CPU 2 3 4 5

VS_2 fwk: CPU 2 3 4 5

VS_4 fwk: CPU 2 3 4 5

VS_5 fwk: CPU 2 3 4 5

VS_6 fwk: CPU 2 3 4 5

VS_7 fwk: CPU 2 3 4 5

VS_8 fwk: CPU 2 3 4 5

VS_9 fwk: CPU 2 3 4 5

VS_10 fwk: CPU 2 3 4 5

VS_11 fwk: CPU 2 3 4 5

VS_12 fwk: CPU 2 3 4 5

VS_13 fwk: CPU 2 3 4 5

VS_14 fwk: CPU 2 3 4 5

VS_15 fwk: CPU 2 3 4 5

VS_16 fwk: CPU 2 3 4 5

VS_17 fwk: CPU 2 3 4 5

VS_18 fwk: CPU 2 3 4 5

VS_19 fwk: CPU 2 3 4 5

VS_20 fwk: CPU 2 3 4 5

VS_21 fwk: CPU 2 3 4 5

VS_22 fwk: CPU 2 3 4 5

VS_23 fwk: CPU 2 3 4 5

VS_24 fwk: CPU 2 3 4 5

Two of these are firewalls, the others are virtual switches.
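A quick way to summarise a listing like the one above is to count how many fwk instances are pinned to each CPU set. The sketch below assumes the "VS_n fwk: CPU ..." line format shown in this thread and uses a hypothetical function name, fwk_per_cpuset; the sample is truncated to three of the fwk lines for brevity.

```shell
# Read fw ctl affinity -l style text on stdin and count fwk instances per CPU set.
fwk_per_cpuset() {
  awk '/ fwk: CPU / {
    sub(/^.* fwk: CPU /, "")        # keep only the CPU list, e.g. "2 3 4 5"
    n[$0]++
  }
  END { for (c in n) printf "%d fwk instance(s) on CPU %s\n", n[c], c }'
}

# A few lines copied from the listing above.
sample='Sync: CPU 1
eth1-04: CPU 0
VS_0 fwk: CPU 2 3 4 5
VS_10 fwk: CPU 2 3 4 5
VS_15 fwk: CPU 2 3 4 5'

printf '%s\n' "$sample" | fwk_per_cpuset
```

Fed the full listing, every fwk line falls into the same "2 3 4 5" bucket, so all virtual devices compete for the same four cores.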


For the 2 virtual firewalls, change to the corresponding context with vsenv and post the output of:

fw ctl multik stat

fw ctl pstat

fw tab -t connections -s

So there's only one interface, which is 10G I assume?

Why do you have so many switches?


VS 10:

[Expert@B:10]# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 2-5 | 15185 | 33064

1 | Yes | 2-5 | 9421 | 31854

2 | Yes | 2-5 | 9476 | 30371

3 | Yes | 2-5 | 9271 | 33260

[Expert@B:10]# fw ctl pstat

Virtual System Capacity Summary:

Physical memory used: 23% (2235 MB out of 9679 MB) - below watermark

Kernel memory used: 25% (398 MB out of 1587 MB) - below watermark

Virtual memory used: 63% (1266 MB out of 1986 MB) - below watermark

Used: 722 MB by FW, 544 MB by zeco

Concurrent Connections: 42% (42096 out of 99900) - below watermark

Aggressive Aging is disabled

Hash kernel memory (hmem) statistics:

Total memory allocated: 445249932 bytes in 109023 (4084 bytes) blocks

Total memory bytes used: 333974208 unused: 111275724 (24.99%) peak: 527506424

Total memory blocks used: 108243 unused: 780 (0%) peak: 138212

Allocations: 574308181 alloc, 0 failed alloc, 569880037 free

System kernel memory (smem) statistics:

Total memory bytes used: 572596840 peak: 741113564

Total memory bytes wasted: 1662360

Blocking memory bytes used: 449015932 peak: 568585876

Non-Blocking memory bytes used: 123580908 peak: 172527688

Allocations: 5214856 alloc, 0 failed alloc, 5102620 free, 0 failed free

vmalloc bytes used: 0 expensive: no

Kernel memory (kmem) statistics:

Total memory bytes used: 457375052 peak: 1406784900

Allocations: 575986613 alloc, 0 failed alloc

571555656 free, 0 failed free

Cookies:

621606431 total, 0 alloc, 0 free,

1072073 dup, 2838224069 get, 40057397 put,

776547219 len, 1101469 cached len, 0 chain alloc,

0 chain free

Connections:

30983804 total, 20978640 TCP, 9554630 UDP, 408658 ICMP,

41876 other, 323 anticipated, 85822 recovered, 42096 concurrent,

98822 peak concurrent

Fragments:

1059899 fragments, 528442 packets, 257 expired, 0 short,

0 large, 0 duplicates, 0 failures

NAT:

30689449/0 forw, 27549777/0 bckw, 26317983 tcpudp,

522474 icmp, 16759410-56510809 alloc

Sync:

Version: new

Status: Able to Send/Receive sync packets

Sync packets sent:

total : 55006222, retransmitted : 974, retrans reqs : 849, acks : 13868

Sync packets received:

total : 248639624, were queued : 752507, dropped by net : 1350

retrans reqs : 748, received 34452 acks

retrans reqs for illegal seq : 0

dropped updates as a result of sync overload: 0

Callback statistics: handled 33092 cb, average delay : 1, max delay : 4

[Expert@B:10]# fw tab -t connections -s

HOST NAME ID #VALS #PEAK #SLINKS

localhost connections 8158 41830 128549 150211

VS15:

[Expert@B:15]# fw ctl multik stat

fw: CoreXL is disabled

[Expert@B:15]# fw ctl pstat

Virtual System Capacity Summary:

Physical memory used: 23% (2235 MB out of 9679 MB) - below watermark

Kernel memory used: 25% (398 MB out of 1587 MB) - below watermark

Virtual memory used: 34% (675 MB out of 1986 MB) - below watermark

Used: 131 MB by FW, 544 MB by zeco

Concurrent Connections: 2% (7484 out of 349900) - below watermark

Aggressive Aging is disabled

Hash kernel memory (hmem) statistics:

Total memory allocated: 27975400 bytes in 6850 (4084 bytes) blocks

Total memory bytes used: 23057976 unused: 4917424 (17.58%) peak: 40957040

Total memory blocks used: 6610 unused: 240 (3%) peak: 10393

Allocations: 1909400154 alloc, 0 failed alloc, 1909207018 free

System kernel memory (smem) statistics:

Total memory bytes used: 81773640 peak: 97879108

Total memory bytes wasted: 400312

Blocking memory bytes used: 28955788 peak: 43079544

Non-Blocking memory bytes used: 52817852 peak: 54799564

Allocations: 1230850 alloc, 0 failed alloc, 1223630 free, 0 failed free

vmalloc bytes used: 0 expensive: no

Kernel memory (kmem) statistics:

Total memory bytes used: 76612064 peak: 146028360

Allocations: 1909494711 alloc, 0 failed alloc

1909301299 free, 0 failed free

Cookies:

99580802 total, 0 alloc, 0 free,

870 dup, 523653081 get, 17350090 put,

140840608 len, 6666 cached len, 0 chain alloc,

0 chain free

Connections:

3454399 total, 2753386 TCP, 480241 UDP, 175747 ICMP,

45025 other, 5787 anticipated, 231 recovered, 7484 concurrent,

8449 peak concurrent

Fragments:

6664 fragments, 3332 packets, 0 expired, 0 short,

0 large, 0 duplicates, 0 failures

NAT:

8434507/0 forw, 16950416/0 bckw, 16931443 tcpudp,

59932 icmp, 69081-302234 alloc

Sync:

Version: new

Status: Able to Send/Receive sync packets

Sync packets sent:

total : 3948529, retransmitted : 85, retrans reqs : 40, acks : 1869

Sync packets received:

total : 17654161, were queued : 165113, dropped by net : 40

retrans reqs : 83, received 763 acks

retrans reqs for illegal seq : 0

dropped updates as a result of sync overload: 0

Callback statistics: handled 735 cb, average delay : 1, max delay : 8

[Expert@B:15]# fw tab -t connections -s

HOST NAME ID #VALS #PEAK #SLINKS

localhost connections 8158 7412 8449 22073


Do you have any graphs for CPU usage per core, and for total throughput? How many virtual systems are actually configured?


If you don't have those, could you share cpview from both virtual firewalls, plus fwaccel stats -s?


Sorry, I was trying to find a good view in the monitoring tool. Here are the other outputs:

VS10:

| CPU: |

| |

| CPU User System Idle I/O wait Interrupts |

| 0 27% 43% 29% 0% 41,511 |

| 1 22% 37% 41% 1% 41,513 |

| 2 19% 26% 55% 0% 41,514 |

| 3 23% 20% 57% 0% 83,031 |

| 4 25% 27% 48% 0% 83,033 |

| 5 20% 26% 53% 0% 41,517 |

[Expert@B:10]# fwaccel stats -s

Accelerated conns/Total conns : 755/44149 (1%)

Delayed conns/(Accelerated conns + PXL conns) : 397/42669 (0%)

Accelerated pkts/Total pkts : 1153863/110514208 (1%)

F2Fed pkts/Total pkts : 5581172/110514208 (5%)

PXL pkts/Total pkts : 103779173/110514208 (93%)

QXL pkts/Total pkts : 0/110514208 (0%)

VS15:

[Expert@B:15]# fwaccel stats -s

Accelerated conns/Total conns : 6664/7360 (90%)

Delayed conns/(Accelerated conns + PXL conns) : 1/6702 (0%)

Accelerated pkts/Total pkts : 9030680127/10905730520 (82%)

F2Fed pkts/Total pkts : 37660303/10905730520 (0%)

PXL pkts/Total pkts : 1837390090/10905730520 (16%)

QXL pkts/Total pkts : 0/10905730520 (0%)


Great stuff! I have a much better idea about the setup now.

VS10: most traffic is taking the medium path, with 4 cores allocated. The system has hit the ceiling of 100k concurrent connections at some point.

VS15: most traffic is accelerated and CoreXL is disabled, so it is running on one core. It is not too busy by the looks of it: 7k concurrent connections. I would reduce the maximum, currently set to 350k, to something more reasonable, as it will never handle that much with one core anyway.

Back to the original question: did you want to move eth1-04 to a bond? How much traffic are you pushing through now, and what do you want to achieve with the bond? Is this a 12400 box?
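The "hit the ceiling" observation for VS10 can be reproduced from the pasted fw ctl pstat text by comparing the peak concurrent connections with the configured limit. The following is a minimal sketch, assuming the two line formats shown earlier in this thread; the function name conn_peak_pct is hypothetical.

```shell
# Read fw ctl pstat style text on stdin and print peak concurrent connections
# as a share of the configured connection limit.
conn_peak_pct() {
  awk '
    /Concurrent Connections:/ {
      for (i = 1; i < NF; i++)
        if ($i == "of") { limit = $(i + 1); gsub(/[^0-9]/, "", limit) }   # "99900)" -> "99900"
    }
    /peak concurrent/ { peak = $1 }
    END {
      if (limit + 0 > 0)
        printf "peak %d of %d (%.1f%%)\n", peak, limit, peak * 100 / limit
    }
  '
}

# Lines copied from the VS10 output earlier in this thread.
sample='Concurrent Connections: 42% (42096 out of 99900) - below watermark
30983804 total, 20978640 TCP, 9554630 UDP, 408658 ICMP,
41876 other, 323 anticipated, 85822 recovered, 42096 concurrent,
98822 peak concurrent'

printf '%s\n' "$sample" | conn_peak_pct
```

For VS10 the peak of 98822 against a limit of 99900 is about 98.9%, which is why the limit looks like it was reached at some point.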


We want to bond the interface below. (currently eth1-06)

wrp965 Link encap:Ethernet HWaddr 00:12:C1:83:30:1E

inet addr:omitted Bcast:omitted Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:534457 errors:0 dropped:0 overruns:0 frame:0

TX packets:4349380 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:24415300 (23.2 MiB) TX bytes:944052433 (900.3 MiB)

All of our latency issues are on the VS15 context. We want to bond these interfaces (already set up and ready) and we may need to enable CoreXL. At this point I'm not sure how to do this since VS10 is already allocated the 4 available cores. What do you think?

This is a 12400 box P220, with one card of eight 1 Gb interfaces and 12 GB of RAM.


Also, we have quite a few interfaces on these firewalls which is the reason for the virtual switches I believe.


Physical interfaces can be attached directly to a virtual firewall; you don't need a switch in front of it. It feels like that's what you are doing? Can you run ifconfig | grep Link on VS0, VS10 and VS15, just to confirm the topology?


Sorry, and also sim affinity -l on VS0. Going to bed now!


Good night! Below is the output that you requested.

[Expert@B:0]# ifconfig | grep Link

Mgmt Link encap:Ethernet HWaddr 00:1C:7F:53:73:91

Sync Link encap:Ethernet HWaddr 00:1C:7F:53:73:90

eth1-04 Link encap:Ethernet HWaddr 00:1C:7F:53:81:AD

lo Link encap:Local Loopback

[Expert@B:0]# vsenv 10

Context is set to Virtual Device CSBFW-PROD-B_PRODFW01 (ID 10).

[Expert@B:10]# ifconfig | grep Link

lo10 Link encap:Local Loopback

wrp640 Link encap:Ethernet HWaddr 00:12:C1:83:30:00

wrp641 Link encap:Ethernet HWaddr 00:12:C1:83:30:02

wrp642 Link encap:Ethernet HWaddr 00:12:C1:83:30:04

wrp643 Link encap:Ethernet HWaddr 00:12:C1:83:30:06

wrp644 Link encap:Ethernet HWaddr 00:12:C1:83:30:08

wrp646 Link encap:Ethernet HWaddr 00:12:C1:83:30:0C

wrp647 Link encap:Ethernet HWaddr 00:12:C1:83:30:0E

wrp648 Link encap:Ethernet HWaddr 00:12:C1:83:30:0A

wrp649 Link encap:Ethernet HWaddr 00:12:C1:83:30:22

wrp650 Link encap:Ethernet HWaddr 00:12:C1:83:30:24

wrp651 Link encap:Ethernet HWaddr 00:12:C1:83:30:26

wrp652 Link encap:Ethernet HWaddr 00:12:C1:83:30:28

wrp653 Link encap:Ethernet HWaddr 00:12:C1:83:30:2A

wrp654 Link encap:Ethernet HWaddr 00:12:C1:83:30:1C

[Expert@B:10]# vsenv 15

Context is set to Virtual Device CSBFW-PROD-B_PRODFW02 (ID 15).

[Expert@B:15]# ifconfig | grep Link

lo15 Link encap:Local Loopback

wrp960 Link encap:Ethernet HWaddr 00:12:C1:83:30:12

wrp961 Link encap:Ethernet HWaddr 00:12:C1:83:30:14

wrp962 Link encap:Ethernet HWaddr 00:12:C1:83:30:1A

wrp963 Link encap:Ethernet HWaddr 00:12:C1:83:30:18

wrp964 Link encap:Ethernet HWaddr 00:12:C1:83:30:2E

wrp965 Link encap:Ethernet HWaddr 00:12:C1:83:30:1E

wrp966 Link encap:Ethernet HWaddr 00:12:C1:83:30:20

wrp967 Link encap:Ethernet HWaddr 00:12:C1:83:30:2C

[Expert@B:15]# vsenv 0

Context is set to Virtual Device CSBFW-PROD-B (ID 0).

[Expert@B:0]# sim affinity -l

Mgmt : 1

Sync : 1

eth1-01 : 0

eth1-04 : 0

eth1-05 : 1

eth1-06 : 1

eth1-07 : 0

eth1-08 : 0

Multi queue interfaces: eth1-02 eth1-03


You will share the same cores as VS10 since you only have 4 cores. You can enable it in SmartDashboard by editing the VS object; I can attach screenshots tomorrow.


I assume that it is here:

VS15:

VS10:

My question is: can we add one core to VS15 while 4 cores are assigned to VS10? Management is concerned that VS10 will be impacted by this change. All of our websites are on this VS.

Again, you guys are amazing. I really appreciate it.


Actually they both share the same cores already if you look at fw ctl affinity output earlier:

A:0> fw ctl affinity -l

VS_10 fwk: CPU 2 3 4 5

VS_15 fwk: CPU 2 3 4 5

The only difference is that VS15, with its single instance, will keep getting "kicked" around CPUs 2-5, using one of them at a time.

Could you share cpview first page for both VS15 and VS10 (the summary)

Then all interfaces summary:

for vsnbr in {1..24}; do vsenv $vsnbr 2>&1 > /dev/null; echo --- "VS-$vsnbr ---";ifconfig | grep Link | egrep -v "^lo|br" | awk '{print $1}'; done

Just so that I understand the usage of virtual switches better.

and cpmq get -v


VS 15:

VS10:

Interface output:

[Expert@B:10]# for vsnbr in {1..24}; do vsenv $vsnbr 2>&1 > /dev/null; echo --- "VS-$vsnbr ---";ifconfig | grep Link | egrep -v "^lo|br" | awk '{print $1}'; done

--- VS-1 ---

eth1-01

wrpj646

--- VS-2 ---

wrpj964

--- VS-3 ---

wrpj964

--- VS-4 ---

eth1-04.9

wrpj647

--- VS-5 ---

eth1-04.10

wrpj641

--- VS-6 ---

eth1-04.12

wrpj640

--- VS-7 ---

eth1-04.13

wrpj643

--- VS-8 ---

eth1-04.16

wrpj642

--- VS-9 ---

eth1-04.35

wrpj644

--- VS-10 ---

wrp640

wrp641

wrp642

wrp643

wrp644

wrp646

wrp647

wrp648

wrp649

wrp650

wrp651

wrp652

wrp653

wrp654

--- VS-11 ---

eth1-04.6

wrpj961

--- VS-12 ---

eth1-04.36

wrpj960

--- VS-13 ---

eth1-04.55

wrpj963

--- VS-14 ---

bond0

eth1-05

eth1-08

wrpj648

wrpj962

--- VS-15 ---

wrp960

wrp961

wrp962

wrp963

wrp964

wrp965

wrp966

wrp967

--- VS-16 ---

bond1

eth1-02

eth1-03

wrpj965

--- VS-17 ---

eth1-04.39

wrpj966

--- VS-18 ---

eth1-04.28

wrpj649

--- VS-19 ---

eth1-07

wrpj650

--- VS-20 ---

eth1-04.96

wrpj652

--- VS-21 ---

eth1-04.97

wrpj651

--- VS-22 ---

eth1-04.98

wrpj653

--- VS-23 ---

eth1-04.95

wrpj967

--- VS-24 ---

eth1-04.3

wrpj654

[Expert@B:0]# cpmq get -v

Active igb interfaces:

eth1-01 [Off]

eth1-02 [On]

eth1-03 [On]

eth1-04 [Off]

eth1-05 [Off]

eth1-07 [Off]

eth1-08 [Off]

The rx_num for igb is: 2 (default)

multi-queue affinity for igb interfaces:

eth1-02:

irq | cpu | queue

-----------------------------------------------------

91 0 TxRx-0

99 1 TxRx-1

eth1-03:

irq | cpu | queue

-----------------------------------------------------

115 0 TxRx-0

123 1 TxRx-1




Kaspars has been extremely helpful here. We have decided to add 4 cores to our internal VS, restructure our VSW interfaces and fix some bond issues. We believe that this will resolve our issues.

Thank you,

Terry
