IDLE CPU on 6 core appliance

I have a 12400 appliance with 6 cores and 8 interfaces. All 8 interfaces are in use, as well as the Sync and Mgmt interfaces. This appliance is part of a 2-node active/passive cluster.

Soft IRQ handling has been allocated to cpu0 and cpu1 on both nodes of the cluster.

When I run cpview on the active node A, I see that one CPU (cpu 0) is 100% idle. If I fail over to node B, there are no idle CPUs.

Looking at the CPU affinity (see below), I can see that the interface-to-CPU assignment differs between the two nodes.

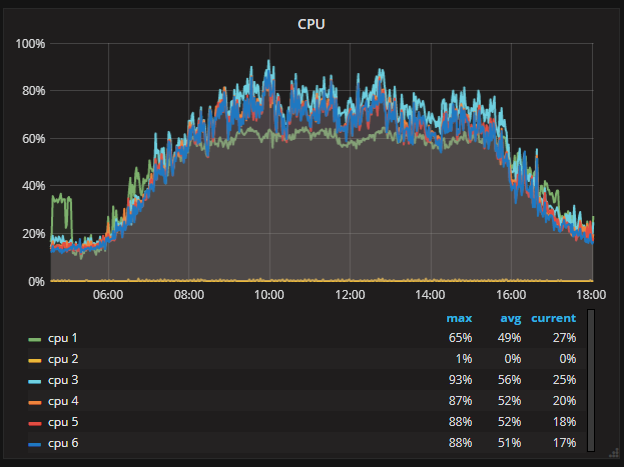

Performance charts for my active appliance show that cpu0 runs at 75-80% during peak time and cpu1 barely breaks a sweat at less than 1% max over 7 days.

Should I think about changing the CPU affinity to better apportion the load across the CPUs?

Node A

Interface eth1-05 irq 186 CPU 0

Interface eth1-06 irq 202 CPU 1

Interface eth1-07 irq 218 CPU 1

Interface eth1-08 irq 234 CPU 0

Interface eth1-01 irq 59 CPU 0

Interface eth1-02 irq 75 CPU 1

Interface eth1-03 irq 91 CPU 1

Interface eth1-04 irq 107 CPU 0

Interface Sync irq 171 CPU 1

Interface Mgmt irq 155 CPU 0

Kernel fw_0 CPU 5

Kernel fw_1 CPU 4

Kernel fw_2 CPU 3

Kernel fw_3 CPU 2

Daemon wsdnsd CPU 2 3 4 5

Daemon lpd CPU 2 3 4 5

Daemon pdpd CPU 2 3 4 5

Daemon usrchkd CPU 2 3 4 5

Daemon fwd CPU 2 3 4 5

Daemon mpdaemon CPU 2 3 4 5

Daemon pepd CPU 2 3 4 5

Daemon rad CPU 2 3 4 5

Daemon vpnd CPU 2 3 4 5

Daemon in.acapd CPU 2 3 4 5

Daemon in.asessiond CPU 2 3 4 5

Daemon cprid CPU 2 3 4 5

Daemon cpd CPU 2 3 4 5

Node B

Interface eth1-05 irq 186 CPU 0

Interface eth1-06 irq 202 CPU 1

Interface eth1-08 irq 234 CPU 1

Interface eth1-01 irq 67 CPU 0

Interface Sync irq 171 CPU 0

Interface eth1-02 irq 83 CPU 1

Interface eth1-03 irq 107 CPU 1

Interface Mgmt irq 155 CPU 0

Interface eth1-04 irq 123 CPU 0

Kernel fw_0 CPU 5

Kernel fw_1 CPU 4

Kernel fw_2 CPU 3

Kernel fw_3 CPU 2

Daemon in.acapd CPU 2 3 4 5

Daemon wsdnsd CPU 2 3 4 5

Daemon pepd CPU 2 3 4 5

Daemon rad CPU 2 3 4 5

Daemon in.asessiond CPU 2 3 4 5

Daemon usrchkd CPU 2 3 4 5

Daemon fwd CPU 2 3 4 5

Daemon pdpd CPU 2 3 4 5

Daemon lpd CPU 2 3 4 5

Daemon mpdaemon CPU 2 3 4 5

Daemon vpnd CPU 2 3 4 5

Daemon cprid CPU 2 3 4 5

Daemon cpd CPU 2 3 4 5
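To make the difference between the two listings above easier to see, here is a minimal Python sketch that parses the `Interface ... CPU n` lines and reports only the interfaces whose assignment differs. The interface lines are copied from the post; the helper names `parse_affinity` and `diff_affinity` are hypothetical, and the sketch assumes the line layout shown above.

```python
import re

# Interface-to-CPU assignments copied from the Node A and Node B listings.
NODE_A = """
Interface eth1-05 irq 186 CPU 0
Interface eth1-06 irq 202 CPU 1
Interface eth1-07 irq 218 CPU 1
Interface eth1-08 irq 234 CPU 0
Interface eth1-01 irq 59 CPU 0
Interface eth1-02 irq 75 CPU 1
Interface eth1-03 irq 91 CPU 1
Interface eth1-04 irq 107 CPU 0
Interface Sync irq 171 CPU 1
Interface Mgmt irq 155 CPU 0
"""

NODE_B = """
Interface eth1-05 irq 186 CPU 0
Interface eth1-06 irq 202 CPU 1
Interface eth1-08 irq 234 CPU 1
Interface eth1-01 irq 67 CPU 0
Interface Sync irq 171 CPU 0
Interface eth1-02 irq 83 CPU 1
Interface eth1-03 irq 107 CPU 1
Interface Mgmt irq 155 CPU 0
Interface eth1-04 irq 123 CPU 0
"""

# Assumed line layout: "Interface <name> irq <number> CPU <number>".
LINE = re.compile(r"^Interface\s+(\S+)\s+irq\s+\d+\s+CPU\s+(\d+)$")


def parse_affinity(text):
    """Return {interface: cpu} for every interface line in the listing."""
    mapping = {}
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if match:
            mapping[match.group(1)] = int(match.group(2))
    return mapping


def diff_affinity(a, b):
    """Return {interface: (cpu_on_a, cpu_on_b)} where the nodes disagree.

    None means the interface is not present in that node's listing.
    """
    return {
        iface: (a.get(iface), b.get(iface))
        for iface in sorted(set(a) | set(b))
        if a.get(iface) != b.get(iface)
    }


node_a = parse_affinity(NODE_A)
node_b = parse_affinity(NODE_B)
differences = diff_affinity(node_a, node_b)

for iface, (cpu_a, cpu_b) in differences.items():
    print(f"{iface}: node A CPU {cpu_a}, node B CPU {cpu_b}")
```

With the listings as posted, only Sync, eth1-07 and eth1-08 differ, and eth1-07 differs only because it does not appear in the Node B listing at all.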

5 Replies


Automatic interface affinity will dynamically spread interface traffic processing across your SND/IRQ cores (cores 0 & 1) based on traffic loads, so it is not unusual to see the interfaces assigned to slightly different SND/IRQ cores on the two cluster members.

Whether you should adjust the default split between SND/IRQ cores (cores 0 & 1) and Firewall Workers (cores 2-5) depends mainly on how much traffic is accelerated on your system. Please provide the following command outputs run on the active cluster member for recommendations:

fw ver

fwaccel stats -s

netstat -ni

fwaccel stat

enabled_blades

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course



Thanks for your help. Also note that the interfaces are configured as 4 bonded interfaces, each bond being a high availability pair. On node A all the standby interfaces are assigned to cpu 1, which would indicate that the split across CPUs is not particularly "load balanced."

Further info below.

# fw ver

This is Check Point's software version R80.20 - Build 047

# fwaccel stats -s

Accelerated conns/Total conns : 1202/33715 (3%)

Accelerated pkts/Total pkts : 21963285170/50752857993 (43%)

F2Fed pkts/Total pkts : 7415368470/50752857993 (14%)

F2V pkts/Total pkts : 777807714/50752857993 (1%)

CPASXL pkts/Total pkts : 3519180973/50752857993 (6%)

PSLXL pkts/Total pkts : 17855023380/50752857993 (35%)

QOS inbound pkts/Total pkts : 0/50752857993 (0%)

QOS outbound pkts/Total pkts : 0/50752857993 (0%)

Corrected pkts/Total pkts : 0/50752857993 (0%)
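As a sanity check on the packet counters above, here is a minimal Python sketch that recomputes each path's share of the total. The counters are copied from the output; the `share` helper and its truncating integer division are assumptions about how the percentages were derived, chosen because they reproduce the printed values.

```python
# Packet counters copied from the `fwaccel stats -s` output above.
TOTAL_PKTS = 50752857993
PATH_PKTS = {
    "Accelerated": 21963285170,
    "F2Fed": 7415368470,
    "F2V": 777807714,
    "CPASXL": 3519180973,
    "PSLXL": 17855023380,
}


def share(count, total):
    """Percentage of total, truncated (not rounded) to a whole number."""
    return count * 100 // total


shares = {name: share(count, TOTAL_PKTS) for name, count in PATH_PKTS.items()}

# Sum of every path except F2V, to compare against the reported total.
sum_without_f2v = sum(
    count for name, count in PATH_PKTS.items() if name != "F2V"
)

for name, pct in shares.items():
    print(f"{name}: {pct}%")
print("Paths other than F2V add up to the total:", sum_without_f2v == TOTAL_PKTS)
```

In this output the Accelerated, F2Fed, CPASXL and PSLXL counters add up exactly to the total, which suggests the F2V packets are also counted inside one of those four. That reading is an inference from the arithmetic, not something stated in the output itself.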

# netstat -ni

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

Mgmt 1500 0 0 0 0 0 0 0 0 0 BMU

Sync 1500 0 122563045 0 0 0 0 0 0 0 BMsRU

bond0 1500 0 243962102 0 0 0 566693973 0 0 0 BMmRU

bond1 1500 0 23406903897 0 0 0 38342643499 0 0 0 BMmRU

bond2 1500 0 25627339818 0 0 0 20356887097 0 0 0 BMmRU

bond3 1500 0 17683706652 468 0 0 12772979254 0 0 0 BMmRU

bond3.856 1500 0 7785013865 0 0 0 3492034026 0 0 0 BMmRU

bond3.857 1500 0 1713417867 0 0 0 2115165381 0 0 0 BMmRU

bond3.861 1500 0 38266829 0 0 0 49687477 0 0 0 BMmRU

bond3.879 1500 0 8120683060 0 0 0 7098512060 0 0 0 BMmRU

bond3.880 1500 0 22082896 0 0 0 17580305 0 0 0 BMmRU

eth1-01 1500 0 23406272534 0 0 0 38342641573 0 0 0 BMsRU

eth1-02 1500 0 631584 0 0 0 2068 0 0 0 BMsRU

eth1-03 1500 0 261428 0 0 0 0 0 0 0 BMsRU

eth1-04 1500 0 25627078484 0 0 0 20356887296 0 0 0 BMsRU

eth1-05 1500 0 17679464530 468 0 0 12772979265 0 0 0 BMsRU

eth1-06 1500 0 4242135 0 0 0 0 0 0 0 BMsRU

eth1-07 1500 0 5846443 0 0 0 6610995 0 0 0 BMRU

eth1-08 1500 0 121399058 0 0 0 566693976 0 0 0 BMsRU

lo 16436 0 172125635 0 0 0 172125635 0 0 0 LRU
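To see how the received traffic lines up with the two SND/IRQ cores, here is a rough Python sketch that groups the RX-OK counters of the physical interfaces above by the CPU each one is assigned to. It assumes this `netstat -ni` output was taken on node A and therefore uses the Node A affinity from the first post; that pairing, and the variable names, are assumptions. The counters are cumulative since boot, so this is only an indication of where packets arrive, not a measurement of CPU load.

```python
from collections import defaultdict

# RX-OK per physical interface, copied from the `netstat -ni` output above.
RX_OK = {
    "Mgmt": 0,
    "Sync": 122563045,
    "eth1-01": 23406272534,
    "eth1-02": 631584,
    "eth1-03": 261428,
    "eth1-04": 25627078484,
    "eth1-05": 17679464530,
    "eth1-06": 4242135,
    "eth1-07": 5846443,
    "eth1-08": 121399058,
}

# Interface-to-CPU affinity copied from the Node A listing in the first post.
# Assumption: the netstat output was collected on node A.
NODE_A_AFFINITY = {
    "eth1-05": 0, "eth1-06": 1, "eth1-07": 1, "eth1-08": 0,
    "eth1-01": 0, "eth1-02": 1, "eth1-03": 1, "eth1-04": 0,
    "Sync": 1, "Mgmt": 0,
}

# Add up the received packets handled by each SND/IRQ core.
rx_by_cpu = defaultdict(int)
for iface, packets in RX_OK.items():
    rx_by_cpu[NODE_A_AFFINITY[iface]] += packets
rx_by_cpu = dict(rx_by_cpu)

total_rx = sum(rx_by_cpu.values())
for cpu in sorted(rx_by_cpu):
    pct = rx_by_cpu[cpu] / total_rx * 100
    print(f"CPU {cpu}: {rx_by_cpu[cpu]} packets received ({pct:.2f}% of total)")
```

Under those assumptions nearly all received packets land on interfaces assigned to CPU 0, because every active bond member happens to be assigned there while the backup members sit on CPU 1.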

# fwaccel stat

+-----------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+-----------------------------------------------------------------------------+

|0 |SND |enabled |eth1-05,eth1-06,eth1-07, |

| | | |eth1-08,eth1-01,eth1-02, |

| | | |eth1-03,eth1-04,Sync, |

| | | |Mgmt |Acceleration,Cryptography |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,NULL,3DES,DES,CAST, |

| | | | |CAST-40,AES-128,AES-256,ESP, |

| | | | |LinkSelection,DynamicVPN, |

| | | | |NatTraversal,AES-XCBC,SHA256 |

+-----------------------------------------------------------------------------+

Accept Templates : disabled by Firewall

Layer FWINT01_Production Security disables template offloads from rule #323

Throughput acceleration still enabled.

Drop Templates : disabled

NAT Templates : disabled by Firewall

Layer FWINT01_Production Security disables template offloads from rule #323

Throughput acceleration still enabled.

# enabled_blades

fw vpn urlf av appi ips identityServer SSL_INSPECT anti_bot mon vpn

# cphaprob show_bond bond0

Bond name: bond0

Bond mode: High Availability

Bond status: UP

Configured slave interfaces: 2

In use slave interfaces: 2

Required slave interfaces: 1

Slave name | Status | Link

----------------+-----------------+-------

Sync | Backup | Yes

eth1-08 | Active | Yes

# cphaprob show_bond bond1

Bond name: bond1

Bond mode: High Availability

Bond status: UP

Configured slave interfaces: 2

In use slave interfaces: 2

Required slave interfaces: 1

Slave name | Status | Link

----------------+-----------------+-------

eth1-01 | Active | Yes

eth1-02 | Backup | Yes

# cphaprob show_bond bond2

Bond name: bond2

Bond mode: High Availability

Bond status: UP

Configured slave interfaces: 2

In use slave interfaces: 2

Required slave interfaces: 1

Slave name | Status | Link

----------------+-----------------+-------

eth1-03 | Backup | Yes

eth1-04 | Active | Yes

# cphaprob show_bond bond3.880

Bond name: bond3.880

Bond mode: High Availability

Bond status: UP

Configured slave interfaces: 2

In use slave interfaces: 2

Required slave interfaces: 1

Slave name | Status | Link

----------------+-----------------+-------

eth1-05 | Active | Yes

eth1-06 | Backup | Yes


Here is a representation of the peak period CPU usage. Five CPUs average above 50%, and cpu2 is very lazy.

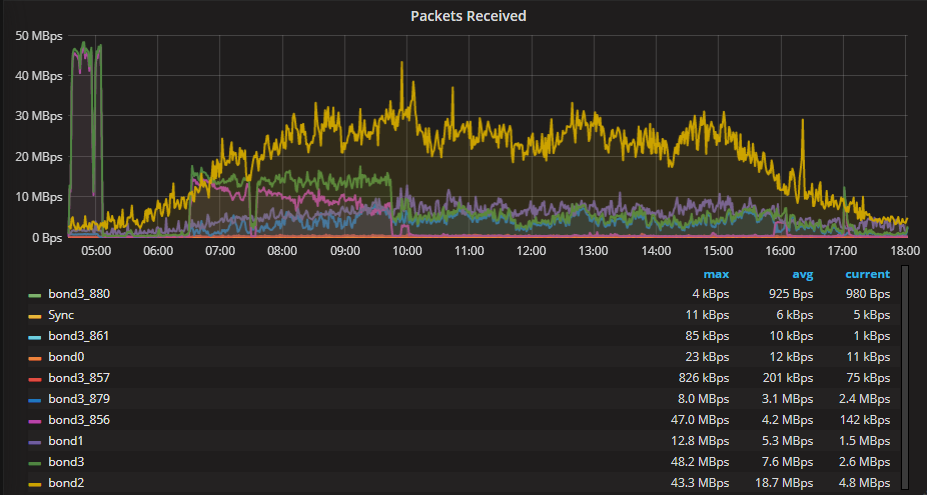

Network throughput for the same period


Based on everything you provided it looks like the system is handling your traffic load just fine. Are you having performance problems? The load may not be high enough for automatic interface affinity to move things around enough to make the distribution more even in your case. You don't seem to be hitting the wall at all, so in that case it doesn't matter so much if everything is perfectly balanced.


We are not having any performance issues. We are considering moving the functions of a different gateway cluster (Juniper SRX) onto this one and are wondering whether it has enough capacity. This raised the question of why one CPU is doing nothing, and whether it is possible to make it share the load and thus reduce overall CPU load. I believe changing the affinity for the various interfaces will only affect 2 of the CPUs and thus will not really reduce the overall CPU load.

You don't see an issue with the CPU running at >70%?

The unit is over 3 years old and due for replacement shortly so most likely a higher spec'd unit will replace it in the next 12-18 months.

What I am hearing is that it is most likely best to not try to fix something that isn't broken.
