centralizing AWS VPC endpoint/privatelink inspection in a Transit Gateway-centered architecture

I want to quickly share some steps for implementing the following scenario:

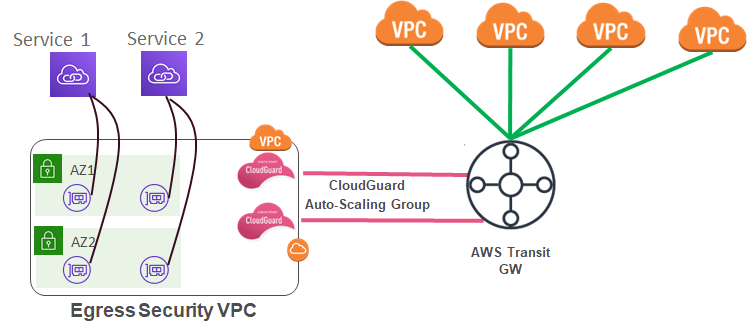

1) you are interconnecting your VPCs using a transit gateway (TGW)

2) you're using a Check Point TGW-integrated autoscaling group to inspect egress, inter-VPC and/or AWS<->on-prem traffic

3) you want to consume a service through an interface VPC endpoint (AKA PrivateLink) and want to inspect traffic flowing between your resources and that service

How do you achieve that?

Here's an outline of the steps.

The easy part:

0) Make sure that, if the VPC that has the Check Point gateways (the Egress Security VPC, in the pic above) has a VPC-attachment to the TGW, then that VPC attachment is NOT propagating routes to TGW route tables associated with any spoke VPCs with resources that need access to the service. (that was a fun sentence to write!)

1) create the VPC endpoint (VPCe) in a subnet dedicated to that purpose, inside the VPC where the Check Point CloudGuard (CGI) Autoscaling Group (ASG) is deployed. We'll call that VPC the "CGI VPC".

2) In SmartConsole, create the appropriate access rules allowing traffic from the relevant resources within your spoke VPCs to the IP addresses of the VPC endpoints you created for the service

3) If this is not already configured (as it should be if you followed the steps in the user guide), make sure that traffic to this service is "hide" source NATed by the gateways
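Step 0 can be checked programmatically. Here is a minimal sketch, assuming you have already fetched the propagation entries of each spoke TGW route table (for example with the boto3 EC2 call `get_transit_gateway_route_table_propagations`, whose entries carry a `TransitGatewayAttachmentId`). The function name and all IDs below are made up for illustration.

```python
def leaking_route_tables(propagations_by_route_table, security_attachment_id):
    """Return spoke TGW route tables that receive routes from the security VPC attachment.

    propagations_by_route_table maps a TGW route table ID to its list of
    propagation entries; each entry is a dict with a
    'TransitGatewayAttachmentId' key.
    """
    return sorted(
        rt_id
        for rt_id, propagations in propagations_by_route_table.items()
        if any(
            p["TransitGatewayAttachmentId"] == security_attachment_id
            for p in propagations
        )
    )


# Hypothetical IDs, for illustration only.
spoke_tables = {
    "tgw-rtb-spoke-a": [{"TransitGatewayAttachmentId": "tgw-attach-spoke-b"}],
    "tgw-rtb-spoke-b": [
        {"TransitGatewayAttachmentId": "tgw-attach-spoke-a"},
        {"TransitGatewayAttachmentId": "tgw-attach-security"},
    ],
}
print(leaking_route_tables(spoke_tables, "tgw-attach-security"))
# prints ['tgw-rtb-spoke-b']
```

Any route table this returns is one where the CGI VPC attachment is still propagating and should be cleaned up.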

The non-trivial part (all having to do with DNS!):

In general, the AWS DNS resolver inside the CGI VPC itself (where the VPCe lives) can easily be configured to resolve the native name of the service you're consuming through the VPCe to the private IP addresses of that VPCe (which is implemented as ENIs on the subnets).

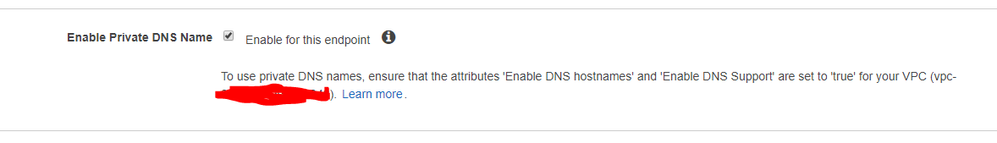

This is where you enable it in the AWS VPC console when you create the VPCe.

So, for example, if you created an SNS endpoint in a VPC in us-east-1, then after the deployment sns.us-east-1.amazonaws.com will resolve to the IP addresses of the ENIs that implement this VPCe.
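If you script the endpoint instead of using the console, the same setting is the `PrivateDnsEnabled` parameter of the EC2 `CreateVpcEndpoint` API. The sketch below only builds the request; the helper name and every ID are hypothetical, and the `com.amazonaws.<region>.<service>` naming is an assumption that holds for SNS and most AWS services but should be checked for yours.

```python
def interface_endpoint_request(vpc_id, region, service, subnet_ids, security_group_ids):
    """Build keyword arguments for an interface-type CreateVpcEndpoint call."""
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        # Assumed naming scheme; verify the exact service name for your service.
        "ServiceName": f"com.amazonaws.{region}.{service}",
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
        # Makes the service's native name resolve to the endpoint ENIs in this VPC.
        "PrivateDnsEnabled": True,
    }


# Hypothetical IDs, for illustration only.
request = interface_endpoint_request(
    vpc_id="vpc-cgi",
    region="us-east-1",
    service="sns",
    subnet_ids=["subnet-vpce-a", "subnet-vpce-b"],
    security_group_ids=["sg-vpce"],
)
print(request["ServiceName"])
# prints com.amazonaws.us-east-1.sns

# To actually create the endpoint (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("ec2", region_name="us-east-1").create_vpc_endpoint(**request)
```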

The challenge is making this true for the spoke VPCs as well, i.e., making sns.us-east-1.amazonaws.com resolve to the same private IP addresses in the CGI VPC when it is queried from the spokes.

Here's one way to do it:

a) in the AWS Route53 console, create an "inbound endpoint" in the CGI VPC (you can use the same 2 subnets that are used for the VPCe). Record the IP addresses assigned to this inbound endpoint
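A quick way to see whether a given host already gets the private answer is to resolve the name and check the address ranges. This is a small standard-library sketch meant to be run on an instance in a spoke VPC; the function names are my own.

```python
import ipaddress
import socket


def resolve_ipv4(hostname):
    """Resolve a hostname to its sorted, de-duplicated IPv4 addresses via the system resolver."""
    infos = socket.getaddrinfo(
        hostname, 443, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    return sorted({info[4][0] for info in infos})


def all_private(addresses):
    """True if the list is non-empty and every address is non-public.

    Note: ipaddress.is_private also covers loopback and link-local ranges,
    not only the RFC 1918 blocks.
    """
    return bool(addresses) and all(
        ipaddress.ip_address(a).is_private for a in addresses
    )


# Usage on a spoke instance (needs working DNS, so it is left commented out):
#   addresses = resolve_ipv4("sns.us-east-1.amazonaws.com")
#   print(addresses, "private" if all_private(addresses) else "NOT private")
```

Before the DNS setup described here, a spoke instance should get public addresses back; afterwards it should get the VPCe's private ones.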

b) in the AWS Route53 console, create an "outbound endpoint" in the CGI VPC (you can use the same 2 subnets as above).

c) In AWS Route53 console, create a rule with the following details

c1) type: forward

c2) domain name: put here the FQDN of the service you're trying to provide access to

c3) VPCs: here you'll have to enter all the spoke VPCs. Note that whenever a new VPC is created that needs the service, you'll have to add it to this rule

c4) Outbound Endpoint: here you pick the outbound endpoint you created in step b.

c5) Target IP addresses: here you enter, individually, the IP addresses of the ENIs that were created for the inbound endpoint in step a.

d) do a sanity check on all the security groups:

d1) on the ENIs of the outbound endpoint, only an outbound rule is really required, allowing DNS to the inbound endpoint's IP addresses

d2) on the ENIs of the inbound endpoint, an inbound rule is required that allows DNS from the addresses of the outbound endpoint's ENIs. I believe that an outbound rule is also required for DNS to the native AWS resolver in the VPC

d3) on the ENIs of the VPCe (the service itself), inbound rules are required on the port of the service (usually 443) from the subnets where the Check Point CloudGuard gateways live.
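For anyone who prefers to script steps a) through d), here is a rough sketch that builds the request parameters for the corresponding boto3 calls (`create_resolver_endpoint`, `create_resolver_rule` and `associate_resolver_rule` on the `route53resolver` client, plus the `IpPermissions` structure used by the EC2 security group calls). It only builds dictionaries and does not call AWS; the helper names, IDs and IP addresses are all hypothetical.

```python
def resolver_endpoint_request(name, direction, subnet_ids, security_group_id):
    """Keyword arguments for create_resolver_endpoint (steps a and b)."""
    return {
        "CreatorRequestId": f"{name}-request",
        "Name": name,
        "Direction": direction,  # "INBOUND" or "OUTBOUND"
        "SecurityGroupIds": [security_group_id],
        "IpAddresses": [{"SubnetId": s} for s in subnet_ids],
    }


def forward_rule_request(name, domain, outbound_endpoint_id, inbound_ips):
    """Keyword arguments for create_resolver_rule (steps c1, c2, c4, c5)."""
    return {
        "CreatorRequestId": f"{name}-request",
        "Name": name,
        "RuleType": "FORWARD",
        "DomainName": domain,
        "ResolverEndpointId": outbound_endpoint_id,
        "TargetIps": [{"Ip": ip, "Port": 53} for ip in inbound_ips],
    }


def rule_association_requests(rule_id, spoke_vpc_ids):
    """One associate_resolver_rule request per spoke VPC (step c3)."""
    return [{"ResolverRuleId": rule_id, "VPCId": v} for v in spoke_vpc_ids]


def dns_permissions(peer_ips):
    """IpPermissions allowing DNS over UDP and TCP to or from the given IPs (steps d1, d2)."""
    ranges = [{"CidrIp": f"{ip}/32"} for ip in peer_ips]
    return [
        {"IpProtocol": proto, "FromPort": 53, "ToPort": 53, "IpRanges": ranges}
        for proto in ("udp", "tcp")
    ]


# Hypothetical IDs and addresses, for illustration only.
subnets = ["subnet-vpce-a", "subnet-vpce-b"]
inbound_ips = ["10.0.1.10", "10.0.2.10"]
inbound = resolver_endpoint_request("cgi-inbound", "INBOUND", subnets, "sg-inbound")
outbound = resolver_endpoint_request("cgi-outbound", "OUTBOUND", subnets, "sg-outbound")
rule = forward_rule_request(
    "sns-forward", "sns.us-east-1.amazonaws.com", "rslvr-out-example", inbound_ips
)
associations = rule_association_requests("rslvr-rr-example", ["vpc-spoke-a", "vpc-spoke-b"])
outbound_egress = dns_permissions(inbound_ips)
print(rule["TargetIps"])
```

Keeping the spoke VPC list as data makes step c3 easier to maintain: adding a new spoke means adding one ID and re-running the association step.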

And that's it!

Please let me know if I missed something...

Y

Hello,

How would this design accommodate gateway VPC endpoints (e.g., S3), which are route-based?

Many thanks for your time and support,

andrei

Never tried, but I think it should work pretty much the same way, except that you don't need to play with DNS at all (e.g., DNS queries for S3 would still resolve to the same public IP addresses).

The trick is to:

1) only enable the S3 gateway endpoint on the security VPC, where the gateways live, and not on the spoke VPCs, and

2) make sure that the CloudGuard gateways hide (source) NAT outbound traffic (which they need to be configured to do anyhow)
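As an untested sketch of point 1: a gateway endpoint is created with the same EC2 `CreateVpcEndpoint` API, but with `VpcEndpointType` set to `Gateway` and a list of route tables instead of subnets. I am assuming the route tables to pass are the ones used by the subnets the gateways send outbound traffic from; the helper name and IDs are hypothetical.

```python
def s3_gateway_endpoint_request(vpc_id, region, route_table_ids):
    """Build keyword arguments for a gateway-type CreateVpcEndpoint call for S3."""
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        # Assumption: route tables of the subnets holding the gateways' outbound interfaces.
        "RouteTableIds": list(route_table_ids),
    }


# Hypothetical IDs, for illustration only.
s3_request = s3_gateway_endpoint_request("vpc-cgi", "us-east-1", ["rtb-cgi-gateways"])
print(s3_request["ServiceName"])
# prints com.amazonaws.us-east-1.s3

# To actually create the endpoint (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("ec2", region_name="us-east-1").create_vpc_endpoint(**s3_request)
```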