VSX Tuning Question

Hi Guys,

I have found many interesting articles about VSX tuning here in the forum:

https://community.checkpoint.com/t5/VSX/Interface-Affinity-with-VSX/td-p/51136

I understand that, but how exactly do I set this up under VSX and which CLI commands do I have to use?

Is there a sample file that allows me to set the SecureXL and CoreXL instances?

For example, how do I set Multi-Queue/SecureXL for cores 0,1,2,3,17,18,19,20 and CoreXL for VS1 to cores 4,5,21,22?

Which CLI commands do I have to use to make the settings permanent?

Regards

Christian

40 Replies

The knowledge base and documentation will be your friends for questions like this. You can find your answers here:

Multi-Queue Management for Check Point R80.30 with Gaia 3.10 kernel

CoreXL Dynamic Dispatcher in R77.30 / R80.10 and above

Best Practices - Security Gateway Performance (Part (6-2-B) Show / Hide CoreXL syntax - in VSX mode)

It depends a little on the software release, as the MQ commands changed in R80.30.

You would need to provide more details about the interfaces to get exact commands for SXL and MQ: which interfaces will use MQ, which will use SXL, and how loaded they are. The commands, in principle:

fw ctl affinity -s

mq_mng

cpmq

As for CoreXL, it's more straightforward:

fw ctl affinity -s -d -vsid 1 -cpu 4 5 21 22
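As a rough illustration of the core split asked about in the question (cores 0,1,2,3,17,18,19,20 for SecureXL/Multi-Queue and cores 4,5,21,22 for VS1), the Python sketch below checks that the two sets do not overlap and prints the CoreXL affinity line quoted above. The helper names are hypothetical, and the script only formats text; it does not run anything on a gateway.

```python
# Hypothetical helper: sanity-check a planned core split and render the
# CoreXL affinity command quoted in this thread. It only builds a string.

SND_CORES = [0, 1, 2, 3, 17, 18, 19, 20]   # SecureXL / Multi-Queue cores from the question
VS_CORES = {1: [4, 5, 21, 22]}             # VS ID -> CoreXL cores from the question


def overlapping_cores(snd_cores, vs_cores):
    """Return the sorted list of cores assigned to both SND and the VS."""
    return sorted(set(snd_cores) & set(vs_cores))


def affinity_command(vsid, cores):
    """Format the command in the same shape as the one shown above."""
    return "fw ctl affinity -s -d -vsid {} -cpu {}".format(
        vsid, " ".join(str(c) for c in cores)
    )


for vsid, cores in VS_CORES.items():
    clash = overlapping_cores(SND_CORES, cores)
    if clash:
        print("VS{}: cores {} are also used for SND".format(vsid, clash))
    else:
        print(affinity_command(vsid, cores))
```

Keeping the plan in one small table like this makes it easier to spot a core that was accidentally handed to both SND and a VS before any command is typed on the box.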

Guys,

Has anyone come across this which is related to R80.40 tuning and if so has anyone actually done this and seen any improvements?

The list of Microsoft domains that will be queried from the updatable objects for Microsoft Office365:

https://endpoints.office.com/endpoints/worldwide?clientrequestid=b10c5ed1-bad1-445f-b386-b919946339a...

The domains being queried are on this list.

Requests are doubled by adding a www. prefix to each query, causing a lot of NXDOMAIN results.

This can be fixed with a kernel parameter that prevents these lookups.

To prevent the NXDOMAIN results, set the kernel parameter add_www_prefix_to_domain_name to 0 on the fly:

fw ctl set int add_www_prefix_to_domain_name 0

And to make the change permanent (survive reboot) add a line to

$FWDIR/boot/modules/fwkern.conf:

add_www_prefix_to_domain_name=0

To reduce the queries further, you can consider setting

rad_kernel_domain_cache_refresh_interval to double its current value.

By doing these changes you could reduce the queries related to FQDN domain objects + updatable objects, to approximately 25% of their current level.
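The 25% figure can be reproduced with simple arithmetic, under two assumptions that the post does not confirm: that dropping the www. prefix removes exactly half of the lookups, and that the query rate is inversely proportional to the refresh interval. A minimal Python sketch (the function name is made up for illustration):

```python
def relative_query_rate(www_prefix_enabled, interval_factor):
    """Query rate relative to the current level (1.0 = today's rate).

    Assumptions: with the www. prefix enabled each domain is looked up
    twice, and the rate scales as 1 / refresh interval.
    """
    lookups_per_domain = 2 if www_prefix_enabled else 1
    return (lookups_per_domain / 2) / interval_factor


print(relative_query_rate(True, 1))    # current settings
print(relative_query_rate(False, 1))   # www. prefix disabled
print(relative_query_rate(False, 2))   # prefix disabled and interval doubled
```

Under those assumptions each change halves the rate, and the two together give one quarter of the current level.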

I actually feel fairly happy now since DNS requests are sent only from one CoreXL core instead of all. So the 25% saving isn't a major problem atm for us.

Finally rolled out T91 on our VSX two nights ago and currently verifying efficiency of passive DNS learning that's used to improve wildcard domains in updatable objects. So far looking good!

Since going to R80.40 with T91 we have seen better core utilisation, and the majority of the issues seen in R80.20 have gone. However, we are still plagued with bursts of latency that we have not yet got to the bottom of.

Hence looking at all avenues of tuning.

How do you observe latency btw? We had lots of issues with our 26k VSX due to RX ring buffer size and also our Cisco core honoring TX pause frames sent by FW. We ended up turning off flow control and increasing RX buffer size

At the moment we have a couple of physical boxes, in DMZs on two different VSs on the same appliance, doing health-check pings. We have seen the response times go from xx to xxx and even xxxx, and there is no real pattern to it.

I'm convinced it's related to load and not enough CPU power to move the traffic through fast enough. The traffic is coming across our 10G links and the RX ring size is 3072.

Very odd ring size btw, shouldn't it be a power of 2, i.e. 1024, 2048, 4096?

check your switch port counters for tx/rx pause frames

Can't really see an issue in the switchport stats:

Port-channelx is up, line protocol is up (connected)

Hardware is EtherChannel, address is 70db.987c.cf02 (bia 70db.987c.cf02)

MTU 1500 bytes, BW 20000000 Kbit/sec, DLY 10 usec,

reliability 255/255, txload 3/255, rxload 6/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 10Gb/s, media type is N/A

input flow-control is on, output flow-control is unsupported

Members in this channel: Te3/3 Te4/3

ARP type: ARPA, ARP Timeout 04:00:00

Last input never, output never, output hang never

Last clearing of "show interface" counters 4w2d

Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 2

Queueing strategy: fifo

Output queue: 0/40 (size/max)

30 second input rate 518857000 bits/sec, 112327 packets/sec

30 second output rate 265545000 bits/sec, 100090 packets/sec

104738914441 packets input, 74668261892153 bytes, 0 no buffer

Received 90219610 broadcasts (84792897 multicasts)

0 runts, 0 giants, 0 throttles

6 input errors, 5 CRC, 0 frame, 0 overrun, 0 ignored

0 input packets with dribble condition detected

93610942452 packets output, 52207415240111 bytes, 0 underruns

0 output errors, 0 collisions, 1 interface resets

0 unknown protocol drops

0 babbles, 0 late collision, 0 deferred

0 lost carrier, 0 no carrier

0 output buffer failures, 0 output buffers swapped out

--------------------------

TenGigabitEthernet3/3 is up, line protocol is up (connected)

Hardware is Ten Gigabit Ethernet Port, address is 70db.987c.cf02 (bia 70db.987c.cf02)

MTU 1500 bytes, BW 10000000 Kbit/sec, DLY 10 usec,

reliability 255/255, txload 3/255, rxload 7/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 10Gb/s, link type is auto, media type is 10GBase-SR

input flow-control is on, output flow-control is on

ARP type: ARPA, ARP Timeout 04:00:00

Last input 00:00:09, output never, output hang never

Last clearing of "show interface" counters 4w2d

Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 10

Queueing strategy: fifo

Output queue: 0/40 (size/max)

30 second input rate 300241000 bits/sec, 66998 packets/sec

30 second output rate 142683000 bits/sec, 51599 packets/sec

59057703735 packets input, 42452892002095 bytes, 0 no buffer

Received 65049344 broadcasts (59840885 multicasts)

0 runts, 0 giants, 0 throttles

0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored

0 input packets with dribble condition detected

49083158628 packets output, 28965234988365 bytes, 0 underruns

0 output errors, 0 collisions, 1 interface resets

0 unknown protocol drops

0 babbles, 0 late collision, 0 deferred

0 lost carrier, 0 no carrier

0 output buffer failures, 0 output buffers swapped out

-------------------------------

TenGigabitEthernet4/3 is up, line protocol is up (connected)

Hardware is Ten Gigabit Ethernet Port, address is 70db.987c.cf0a (bia 70db.987c.cf0a)

MTU 1500 bytes, BW 10000000 Kbit/sec, DLY 10 usec,

reliability 255/255, txload 3/255, rxload 6/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 10Gb/s, link type is auto, media type is 10GBase-SR

input flow-control is on, output flow-control is on

ARP type: ARPA, ARP Timeout 04:00:00

Last input 00:00:01, output never, output hang never

Last clearing of "show interface" counters 4w2d

Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 15

Queueing strategy: fifo

Output queue: 0/40 (size/max)

30 second input rate 256706000 bits/sec, 48327 packets/sec

30 second output rate 147684000 bits/sec, 50826 packets/sec

45697398443 packets input, 32224952791572 bytes, 0 no buffer

Received 25182232 broadcasts (24962680 multicasts)

0 runts, 0 giants, 0 throttles

6 input errors, 5 CRC, 0 frame, 0 overrun, 0 ignored

0 input packets with dribble condition detected

44543786394 packets output, 23247419066906 bytes, 0 underruns

0 output errors, 0 collisions, 1 interface resets

0 unknown protocol drops

0 babbles, 0 late collision, 0 deferred

0 lost carrier, 0 no carrier

0 output buffer failures, 0 output buffers swapped out

I also checked flow control on the Check Point appliance:

# ethtool -a eth3-01

Pause parameters for eth3-01:

Autonegotiate: off

RX: on

TX: on

# ethtool -a eth3-02

Pause parameters for eth3-02:

Autonegotiate: off

RX: on

TX: on

# ethtool -S eth3-02 | grep -i flow_control

tx_flow_control_xon: 5

rx_flow_control_xon: 0

tx_flow_control_xoff: 949

rx_flow_control_xoff: 0

# ethtool -S eth3-01 | grep -i flow_control

tx_flow_control_xon: 4

rx_flow_control_xon: 0

tx_flow_control_xoff: 726

rx_flow_control_xoff: 0

# netstat -ni

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

Mgmt 1500 0 81334370 0 0 0 220791303 0 0 0 BMsRU

Sync 1500 0 89840333 0 0 0 1214076035 0 0 0 BMsRU

bond0 1500 0 45908035350 0 104892 0 53128920091 0 0 0 BMmRU

bond1 1500 0 160720368 0 0 0 223132821 0 0 0 BMmRU

bond2 1500 0 489526962 0 0 0 1368598249 0 0 0 BMmRU

eth1-07 1500 0 399676323 0 0 0 154522202 0 0 0 BMsRU

eth1-08 1500 0 79384560 0 0 0 2341413 0 0 0 BMsRU

eth3-01 1500 0 22531934214 0 45033 0 26259792806 0 0 0 BMsRU

eth3-02 1500 0 23376087366 0 59859 0 26869129491 0 0 0 BMsRU

lo 65536 0 7158451 0 0 0 7158451 0 0 0 LNRU
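To put the RX-DRP counters in proportion, the Python sketch below computes the ratio of dropped to received packets per interface from `netstat -ni` style output. The sample rows are copied from the output above; the function name is hypothetical, and the parser assumes the column layout shown here.

```python
# Sketch: compute the RX drop ratio per interface from "netstat -ni" output.
# The sample rows are copied from the output in this post.

SAMPLE = """\
Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg
bond0 1500 0 45908035350 0 104892 0 53128920091 0 0 0 BMmRU
eth3-01 1500 0 22531934214 0 45033 0 26259792806 0 0 0 BMsRU
eth3-02 1500 0 23376087366 0 59859 0 26869129491 0 0 0 BMsRU
"""


def rx_drop_ratios(text):
    """Return {interface: RX-DRP / RX-OK} for every row with RX-OK > 0."""
    lines = [ln.split() for ln in text.splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    ok_col = header.index("RX-OK")
    drp_col = header.index("RX-DRP")
    ratios = {}
    for row in rows:
        rx_ok = int(row[ok_col])
        if rx_ok > 0:
            ratios[row[0]] = int(row[drp_col]) / rx_ok
    return ratios


for iface, ratio in rx_drop_ratios(SAMPLE).items():
    print("{}: {:.5f}% of received packets dropped".format(iface, ratio * 100))
```

On these figures the ratios work out to roughly two to three dropped packets per million received. The counters are cumulative, though, so a low average does not rule out short bursts of drops.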

I would start with RX ring buffer increase to 4096 and/or turning off flow control

ethtool -A INTERFACE_NAME rx off tx off

As I said, we had extremely bursty traffic on our main bond to the core (more or less all VLANs are connected over that bond), and rx pause frames caused a total stop in traffic that showed up as, for example, ping packets delayed by up to 2 seconds...

How's the CPU load for MQ for those interfaces?

Although I didn't state this explicitly in my book, a power of 2 should always be used to size the ring buffers. While it is not a strict requirement and will obviously work if a power of 2 sizing is not used, it will increase the overhead required to maintain and access the elements of the ring buffer. See here for the rather technical explanation:

https://stackoverflow.com/questions/10527581/why-must-a-ring-buffer-size-be-a-power-of-2
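The general idea behind the power-of-2 recommendation can be sketched in a few lines of Python. This only illustrates the index arithmetic discussed in the linked answer; it is not how the NIC driver is implemented, and the function names are made up.

```python
def is_power_of_two(n):
    """True if n is a positive power of two (exactly one bit set)."""
    return n > 0 and (n & (n - 1)) == 0


def wrap_index(i, size):
    """Wrap a running index into a ring of `size` slots.

    For power-of-two sizes a single bitwise AND is enough; other sizes
    need the more expensive modulo operation.
    """
    if is_power_of_two(size):
        return i & (size - 1)
    return i % size


for size in (1024, 2048, 3072, 4096):
    print(size, is_power_of_two(size))
```

A ring size of 3072 (3 x 1024) fails the check, which is why 4096 was suggested in this thread.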

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Thanks Tim

Thanks Kaspars.

Will certainly increase the ring size to 4096 and will try disabling flow control. SND load is between 35 and 40%; we currently have 4 cores assigned to this.

Just to confirm: we saw a massive improvement with R80.40 (we were on R80.30 before), and the MQ improvements in particular were massive. So make sure your MQ cores are not struggling too.

I put the suggestions forward to TAC, and they advised me not to turn off flow control on the interfaces. At this point they want to increase the core count on every VS to 22 via SmartConsole (we are not using manual affinity).

Now this led me to think about Tim's rule, "Thou must not allocate cores on a VS across physical cores"....This is the way 😉

So my thoughts on this are: when allocating CPU cores via SmartConsole we are allocating virtual cores in user mode, whereas when doing manual affinity we are allocating cores in kernel mode. Is my thinking correct?

If not, why on earth would TAC tell me to allocate 22 cores per VS? To me this looks like it could do more harm than good.

Thus far TAC have not answered my question regarding the below either:

add_www_prefix_to_domain_name=0 (Default = 1)

rad_kernel_domain_cache_refresh_interval=120 (Default=60)

The R80.40 VSX Administration Guide (CoreXL for Virtual Systems) indicates that assigning a number of cores per VS in SmartConsole actually creates an equal number of instances of that VS, without being limited by the number of CPUs, but at the cost of increased memory usage.

So TAC are basically saying: just allow all VSs to utilise all the cores as required by spawning an equal number of fwk processes? (Note that we are purposely only using 22 cores out of the 31 total so we can allocate more SNDs.)

Any ideas on the additional parameters I've suggested?

I don't know about the RAD parameters, but my understanding is as follows: CoreXL for a VS other than VS0 is mainly there to increase parallel handling capacity at the expense of memory, and the instances will use whatever CPUs are available on the machine, hence the recommendation not to go over the number of physical cores.

The guide also states that allocating too many instances can have a performance impact, I suppose due to resource mapping being spread over a lot of instances.

Since you're engaged with TAC, I would think they're the best positioned to provide you a definitive answer.

I understand why TAC is not recommending turning off flow control. It all depends on your network design. Since we have our FW connected to the core in one big bond (80Gb), sending TX pause requests from the FW to the core caused a full traffic stop on ALL VLANs configured in that bond, I'm afraid. That resulted in massive ping delays approximately every 5 minutes.

We do have a similar setup: a 20Gb bond with the majority of the VLANs going through it. I guess at this point I need to go with what TAC are saying and see what happens.

It's fairly straightforward: if you disable flow control, your switch/router won't honour TX pause requests and will keep sending packets towards the FW interface. Once the ring buffer is full, packets will get discarded. For us it was a "better" solution than having short "blackouts" caused by flow control.

It was all to do with an extremely bursty traffic profile.

At least it's an option on the table to try.

Kaspars,

Have you figured out how to monitor a VSW via SNMP? Our internet-facing interface is attached to a VSW and the VSs are then connected to this. So far I can't find anything that tells you how to discover and monitor this. Lots of finger pointing between SolarWinds and Check Point, which does not help us.

What exactly did you want to monitor? Throughput?

Yes, so that we have a clear picture of all the throughput and the history data for it. Ideally we would also like to monitor the actual CPU utilisation per VS, but at the moment this seems to just pull the generic CPU value for the appliance.

For interfaces - I just pull combined throughput on whole interface from VS0. I don't collect stats per VLAN if that's what you were thinking. But will check.

As for CPU, it depends how you have done CPU allocation - I have dedicated CPU cores for VSWs so it's easy to see with a generic CPU load polling 🙂

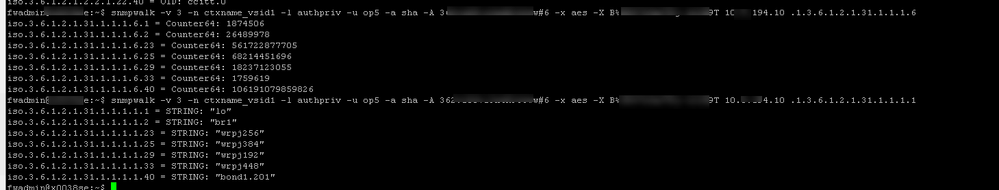

It seems like you can pull stats using SNMP v3 directly from VSW as well

Got it working

snmpwalk -v 3 -n vsid<x> -l authPriv -u <userid> -a sha -A <shapassword> -x aes -X <aespassword> <MGMT IP of appliance>

PRTG works with this, as I can specify the context ID. I have not looked at SolarWinds yet.
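For scripting the same query against several VSs, the command above could be assembled along the following lines. This is only a sketch: the function name, user name, passwords and IP address are placeholders, and the optional OID argument is an addition because the command in the post does not name one.

```python
import shlex


def snmpwalk_args(vsid, user, auth_pass, priv_pass, host, oid=None):
    """Build an argument list matching the snmpwalk command shown above.

    All values passed in are placeholders; `oid` is optional because the
    command in the post does not name one.
    """
    args = [
        "snmpwalk", "-v", "3",
        "-n", "vsid{}".format(vsid),      # context name, as in the post
        "-l", "authPriv",
        "-u", user,
        "-a", "sha", "-A", auth_pass,
        "-x", "aes", "-X", priv_pass,
        host,
    ]
    if oid:
        args.append(oid)
    return args


# Placeholder values only; 192.0.2.10 is a documentation address.
args = snmpwalk_args(2, "monitor", "auth-secret", "priv-secret", "192.0.2.10")
print(" ".join(shlex.quote(a) for a in args))
```

Building the command as a list rather than a single string means it can be handed to `subprocess.run` without shell quoting problems in the passwords.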

Million dollar question: do you know if this picks up the right number of cores, so we can tell how much CPU the VS is actually using?
