Hi

Our customer is using a 6500 appliance, and we're observing uneven load across the MultiQueue cores. They're running R80.40 with JHF Take 78.

Affinity:

[Expert@FW01:0]# fw ctl affinity -l -r

CPU 0:

CPU 1: fw_5

in.asessiond lpd topod fwucd pepd in.acapd mpdaemon usrchkd pdpd vpnd wsdnsd rtmd in.geod rad fwd cprid cpd

CPU 2: fw_3

in.asessiond lpd topod fwucd pepd in.acapd mpdaemon usrchkd pdpd vpnd wsdnsd rtmd in.geod rad fwd cprid cpd

CPU 3: fw_1

in.asessiond lpd topod fwucd pepd in.acapd mpdaemon usrchkd pdpd vpnd wsdnsd rtmd in.geod rad fwd cprid cpd

CPU 4:

CPU 5: fw_4

in.asessiond lpd topod fwucd pepd in.acapd mpdaemon usrchkd pdpd vpnd wsdnsd rtmd in.geod rad fwd cprid cpd

CPU 6: fw_2

in.asessiond lpd topod fwucd pepd in.acapd mpdaemon usrchkd pdpd vpnd wsdnsd rtmd in.geod rad fwd cprid cpd

CPU 7: fw_0

in.asessiond lpd topod fwucd pepd in.acapd mpdaemon usrchkd pdpd vpnd wsdnsd rtmd in.geod rad fwd cprid cpd

All:

Interface eth1: has multi queue enabled

Interface eth2: has multi queue enabled

Interface eth3: has multi queue enabled

Interface eth4: has multi queue enabled

Interface Sync: has multi queue enabled

Interface Mgmt: has multi queue enabled

Multiqueue:

[Expert@FW01:0]# mq_mng -o -vv

Total 8 cores. Multiqueue 2 cores: 4,0

i/f type state mode cores

------------------------------------------------------------------------------------------------

Mgmt igb Up Dynamic (2/2) 0(63),4(64)

eth1 igb Up Dynamic (2/2) 0(45),4(65)

eth2 igb Up Dynamic (2/2) 0(49),4(66)

eth3 igb Up Dynamic (2/2) 0(53),4(67)

eth4 igb Up Dynamic (2/2) 0(57),4(68)

------------------------------------------------------------------------------------------------

Mgmt <igb> max 2 cur 2

0e:00.0 Ethernet controller: Intel Corporation I211 Gigabit Network Connection (rev 03)

------------------------------------------------------------------------------------------------

eth1 <igb> max 2 cur 2

03:00.0 Ethernet controller: Intel Corporation I211 Gigabit Network Connection (rev 03)

------------------------------------------------------------------------------------------------

eth2 <igb> max 2 cur 2

05:00.0 Ethernet controller: Intel Corporation I211 Gigabit Network Connection (rev 03)

------------------------------------------------------------------------------------------------

eth3 <igb> max 2 cur 2

07:00.0 Ethernet controller: Intel Corporation I211 Gigabit Network Connection (rev 03)

------------------------------------------------------------------------------------------------

eth4 <igb> max 2 cur 2

09:00.0 Ethernet controller: Intel Corporation I211 Gigabit Network Connection (rev 03)

core interfaces queue irq rx packets tx packets

------------------------------------------------------------------------------------------------

0 eth4 eth4-TxRx-0 57 25769 23193

0 eth3 eth3-TxRx-0 53 1545150 904071

0 eth2 eth2-TxRx-0 49 433865741 466353128

0 eth1 eth1-TxRx-0 45 911210633 1500606188

0 Mgmt Mgmt-TxRx-0 63 9716772 2463400

4 eth4 eth4-TxRx-1 68 622046 662303

4 eth3 eth3-TxRx-1 67 952119 1662619

4 eth2 eth2-TxRx-1 66 390618898 446742240

4 eth1 eth1-TxRx-1 65 942002645 425528973

4 Mgmt Mgmt-TxRx-1 64 1238135 17131300
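
To make the per-core split easier to read, here is a rough Python sketch that adds up the rx/tx packet counters from the table above for core 0 and core 4. The numbers are copied by hand from the `mq_mng -o -vv` output; the names `QUEUES`, `totals_per_core` and `share` are just made up for this illustration and are not part of any Check Point tooling. I'm also assuming these counters are cumulative, so they won't necessarily line up with a one-day CPU graph.

```python
# Per-queue counters copied by hand from the "mq_mng -o -vv" output above:
# (core, interface, rx_packets, tx_packets)
QUEUES = [
    (0, "eth4", 25769, 23193),
    (0, "eth3", 1545150, 904071),
    (0, "eth2", 433865741, 466353128),
    (0, "eth1", 911210633, 1500606188),
    (0, "Mgmt", 9716772, 2463400),
    (4, "eth4", 622046, 662303),
    (4, "eth3", 952119, 1662619),
    (4, "eth2", 390618898, 446742240),
    (4, "eth1", 942002645, 425528973),
    (4, "Mgmt", 1238135, 17131300),
]


def totals_per_core(queues):
    """Sum the rx and tx packet counters for each core."""
    totals = {}
    for core, _iface, rx, tx in queues:
        rx_sum, tx_sum = totals.get(core, (0, 0))
        totals[core] = (rx_sum + rx, tx_sum + tx)
    return totals


def share(value, total):
    """Return value as a percentage of total (0.0 if total is zero)."""
    return 100.0 * value / total if total else 0.0


if __name__ == "__main__":
    totals = totals_per_core(QUEUES)
    all_rx = sum(rx for rx, _ in totals.values())
    all_tx = sum(tx for _, tx in totals.values())
    for core in sorted(totals):
        rx, tx = totals[core]
        print(
            f"core {core}: rx {rx} ({share(rx, all_rx):.1f}%), "
            f"tx {tx} ({share(tx, all_tx):.1f}%)"
        )
```

Going by these counters, rx is split almost evenly between the two cores, while tx is skewed towards core 0 (roughly 69% of all transmitted packets), mostly because of eth1-TxRx-0.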

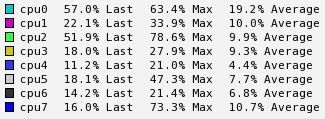

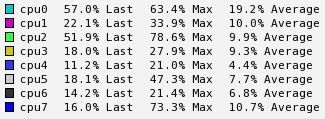

These graphs show CPU usage over one day. CPU 4 is utilised far less than CPU 0, even though both are the MultiQueue cores listed by mq_mng above.

@Timothy_Hall probably has the best idea why this could be happening 🙂