The root cause has been found.

Maybe somebody will encounter a similar problem in the future, so I will tell the story in more detail.

The customer was complaining that sometimes, only during weekends, they were losing connections towards their servers. We are still running VSX R77.30 with the latest hotfix and no IPS blade running. Later on, the customer provided the times when connections had been lost (exact dates omitted):

Monday 00:07 - 01:42

Sunday 00:16 - 00:25

Monday 00:05 - 01:33

Saturday 15:25 - 15:56

Sunday 00:20 - 01:33

Monday 00:10 - 01:48

Sunday 00:19 - 00:31

Monday 00:05 - 02:11

With such information and such a striking recurrence, we first checked the traffic logs. They appeared "business as usual": no unexpected drops, nothing else suspicious. Because of the number of affected servers, we picked one source and destination combination, and for a few weekends we set up tcpdumps:

vsenv 10

fwaccel off

nohup tcpdump -s 96 -w /var/log/TCPDUMPoutside -C 200 -W 100 -Z root -i bond0.123 host 20.30.40.50 and host 10.20.30.40

nohup tcpdump -s 96 -w /var/log/TCPDUMPinside -C 200 -W 100 -Z root -i bond1.456 host 20.30.40.50 and host 10.20.30.40

nohup tcpdump -s 96 -w /var/log/TCPDUMParp -C 200 -W 100 -Z root -i bond1.456 arp

So we had dumps from the weekends. It was clearly visible that some connections were not able to get through: retransmissions, pings with missing replies and so on. Surprisingly, each outage lasted many seconds, 30 or more. Then traffic was visible for a minute, then drops again, then visible again.

We reviewed the traffic logs again: nothing. We tried to review the cpview history. As it is VSX, history is available for the main gateway, not for a particular virtual system (or am I wrong?). The only suspicious thing was a sudden increase in interrupts, but those were evenly distributed among all cores, so we assumed that no elephant session had occurred. The CPU history also showed a minor increase, but nothing to worry about. We even opened a support case, believing that we had missed something. Sure, we had!

That weekend we also ran: "nohup fw ctl zdebug -vs 10 drop | grep --line-buffered '20.30.40.50|10.20.30.40' | tee /var/log/fw_ctl_zdebug_drop_LOG.txt", which came back empty. So far I have had no time to review why. We also did not figure out why the debug switched itself off after a few hours of running, even though there was no rulebase update, no IPS update and so on.
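One possible explanation for the empty file, not verified on the gateway: without -E, grep uses basic regular expressions, where '|' is a literal character and not "or". The pattern above would then only match a line containing both addresses joined by a pipe character. A minimal sketch on a made-up sample line (the sample text is illustrative, not real debug output):

```shell
# Sample line, loosely modeled on the drop message quoted in this post (illustrative only).
line='dropped by fwconn_memory_check 10.20.30.40:1720'

# Default grep (basic regex): '|' is literal, so nothing matches.
bre_hits=$(printf '%s\n' "$line" | grep -c '20.30.40.50|10.20.30.40' || true)

# grep -E (extended regex): '|' means "or"; dots are escaped to match literally.
ere_hits=$(printf '%s\n' "$line" | grep -c -E '20\.30\.40\.50|10\.20\.30\.40' || true)

echo "basic regex matches: $bre_hits, extended regex matches: $ere_hits"
```

If that was indeed the cause, adding -E to the grep in the original pipeline (or using two -e patterns) should be enough.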

So I took a strong coffee around midnight and manually ran "fw ctl zdebug -vs 10 drop", just watching the text scroll by. It is an internal firewall: an occasional rulebase drop, otherwise very quiet. Then, around the reported time, real havoc started, a stream of:

;[vs_10];[tid_0];[fw4_0];fw_log_drop_ex: Packet proto=6 10.1.2.3:37995 -> 10.20.30.50:1720 dropped by fwconn_memory_check Reason: full connections table;

So the customer was right: we were dropping traffic without any log.

From the output we found that the source server is a testing server running a product called Nessus, scanning every single TCP/UDP port on every server behind that firewall. Furthermore, the customer had specifically demanded (about a year ago) a rule "src server, any, any", so all traffic from that server was permitted by the rulebase and written to the connection table. It opened 3000 new connections per second, exhausting the connection table in less than a minute. Some already established connections survived; many new connections were dropped, with neither an accept nor a drop in the traffic logs.
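As a back-of-the-envelope check of how fast this happens, here is a rough sketch using only the two numbers from this story (25 000 connection limit, 3000 new connections per second). It assumes an empty table and that no scan entries expire in the meantime, so it is only a lower bound:

```shell
limit=25000   # connection limit of the virtual system (from this post)
rate=3000     # new connections per second opened by the scanner (from this post)

# Round up: seconds until an initially empty table is full, ignoring entry expiry.
secs=$(( (limit + rate - 1) / rate ))
echo "At $rate conn/s a table of $limit entries fills in about $secs seconds"
```

In practice scan entries time out and existing connections already occupy part of the table, so the real figure differs, but it fits with the table being exhausted well within a minute.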

What we missed in this case is one important hint (we were using cpview, where it is not visible):

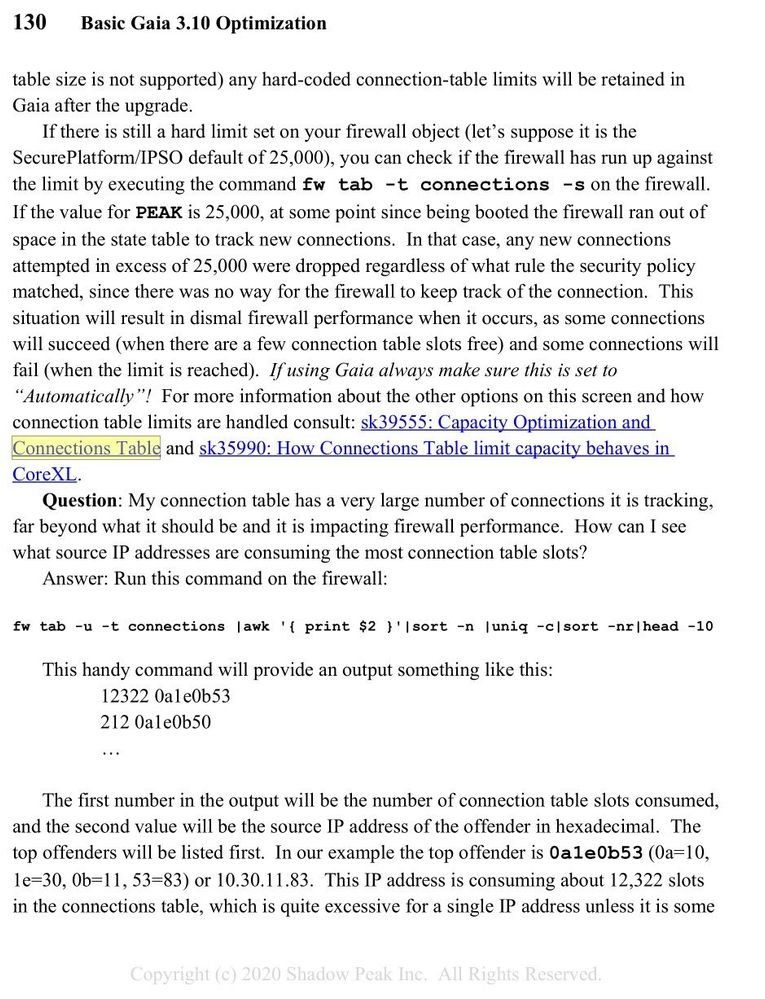

[Expert@FW:10]# fw tab -t connections -s

HOST NAME ID #VALS #PEAK #SLINKS

localhost connections 8158 5130 24901 15333

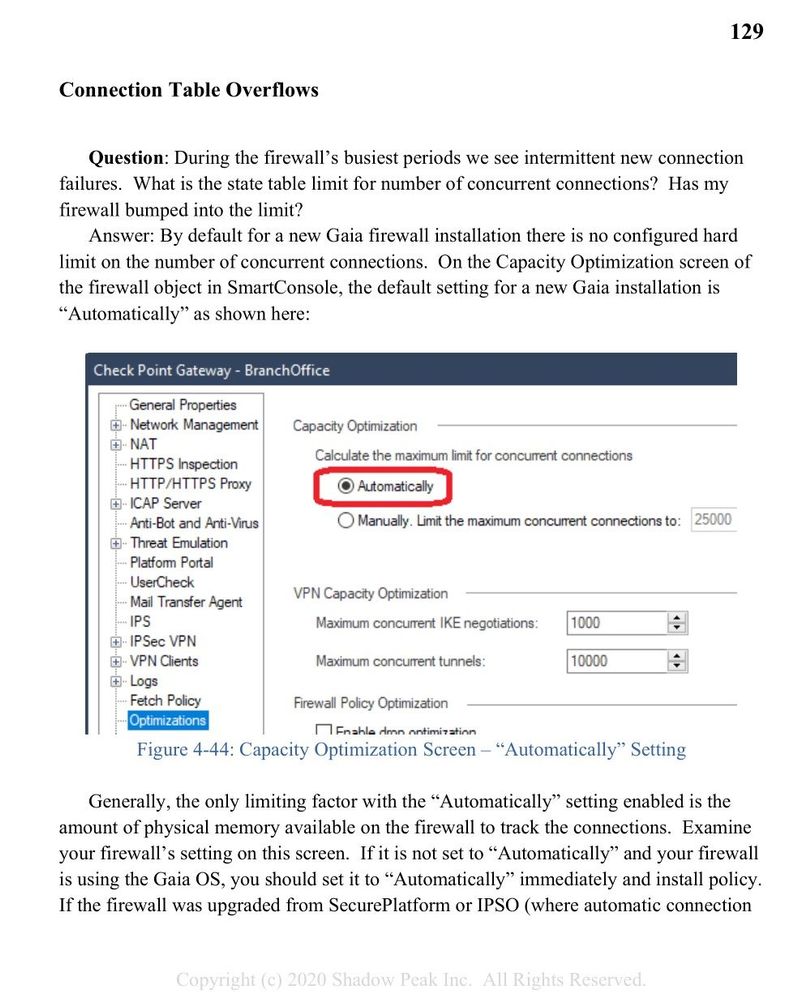

We know that the customer's virtual system has a maximum of 25 000 connections, so a #PEAK of 24901 is a hint that should not have been missed. Also, from past experience I had the "knowledge" that a full connection table is visible in the traffic logs, but in that past case it was the IPS blade complaining about connection table capacity, and this customer has no IPS blade activated.

The case is solved and the root cause has been found. I hope it might help somebody else in the future.
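To avoid missing this hint again, the peak value could be checked automatically. Below is a minimal sketch, assuming the summary output has the same column layout as shown above. The function name check_conn_peak and the 90% threshold are my own assumptions, and the sketch reads the sample text from this post on stdin, not the gateway itself:

```shell
# Hypothetical helper: reads "fw tab -t connections -s" style output on stdin.
# $1 = connection limit of the virtual system (25000 in this story).
check_conn_peak() {
    awk -v limit="$1" '
        $2 == "connections" {
            vals = $4; peak = $5
            pct = int(peak * 100 / limit)
            # 90 percent is an arbitrary warning threshold chosen for this sketch
            status = (pct >= 90) ? "WARNING" : "OK"
            printf "%s: vals=%d peak=%d limit=%d (peak is %d%% of limit)\n", status, vals, peak, limit, pct
        }'
}

# Sample input copied from the output quoted in this post.
sample='HOST NAME ID #VALS #PEAK #SLINKS
localhost connections 8158 5130 24901 15333'

result=$(printf '%s\n' "$sample" | check_conn_peak 25000)
echo "$result"
```

On the gateway the idea would be to pipe the real command output into the function from the context of the virtual system, for example "fw tab -t connections -s | check_conn_peak 25000".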