Site to Site Route Based VPN in Checkpoint

Hi,

I understand that in Check Point we can configure site-to-site VPN as policy-based, and that this is the recommended approach. But many other vendors work with route-based site-to-site VPN. Is there a (simple) way to configure a route-based site-to-site VPN with Check Point? Please share the steps or relevant docs.

Thanks,

CSR

24 Replies


The official Check Point documentation does a pretty good job of guiding you through route-based VPN planning and setup using VPN Tunnel Interfaces (VTIs):

Gateway Performance Optimization R81.20 Course

now available at maxpowerfirewalls.com



Yes, I already went through this doc, but the configuration is quite complex. I tried it but it didn't work. I was looking for some simple steps that can be used to configure it.

Thanks


Hi,

I could not open the link. Could you please share the correct one?


I fixed the link in Tim's post.


Saying you tried the documentation and it didn’t work doesn’t help us help you.

What precise steps did you take?

What is the precise setup in question?

What version/JHF of the gateways and management?

Screenshots will probably help also.


You want to configure route based VPN just because other vendors are usually configured using that?

From my perspective that makes no sense.

Yes, there are use cases for route-based VPN, but I never had the need.

and now to something completely different - CCVS, CCAS, CCTE, CCCS, CCSM elite


What you are saying is absolutely right... I never required this configuration as Policy based VPN works absolutely fine on Checkpoint regardless of other side configuration method...

Actually I'm doing a POC for a customer and they want to evaluate Policy based VPN as well... That's why I reached out to this portal....

Thanks


I tried these steps on R81 with latest hotfixes and Smart Cloud management R81 version.

Sure will try again and share the screenshots...

I was wondering if it can be configured in a simple way, like we configure policy-based VPNs.

Thanks


Perhaps someone could proofread the documentation: the very first two commands are both outdated and reference the wrong IPs in the diagram.

Diagram:

Herewith some speed notes on creating route based IPSec tunnels between two ClusterXL gateways. What we also see often is that the management server will be internal to one ClusterXL whilst then being external to another. Remember to set a NAT address for the management server, so that implied rules are created to get the CRL requests through to the management server from the remote gateway. You may also need to temporarily create a local host entry for 'management-server' to map to the public IP, so that it can retrieve the CRL list as part of the first connection. Once the VPN tunnels are up you can change the remote gateway to use your AD DNS servers for resolution.

IPSec VTI between gateways:

Create a mesh community 'Routed VPN' and add the clustered gateways; set one tunnel per gateway pair and make the tunnels permanent. Gateways may need to resolve the public NAT IP of the management server to retrieve the certificate revocation list (CRL) and may otherwise log 'invalid certificate' errors.

Carve up a /29 subnet for the VTIs (route based IPSec): 10.150.166.24/29

jb1-cluster 10.150.166.25 10.150.166.30 db1-cluster

jb1-fw01 10.150.166.26 10.150.166.29 db1-fw01

jb1-fw02 10.150.166.27 10.150.166.28 db1-fw02
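Before typing the addresses into clish, it can help to sanity-check the plan. The following is only a minimal sketch in Python (not a Check Point tool): the addresses are copied from the table above, while the dict layout and the `check_plan` helper are made up for illustration.

```python
import ipaddress

# Address plan copied from the post; the dict layout is only for illustration.
VTI_SUBNET = ipaddress.ip_network("10.150.166.24/29")
VTI_ADDRESSES = {
    "jb1-cluster": "10.150.166.25",
    "jb1-fw01": "10.150.166.26",
    "jb1-fw02": "10.150.166.27",
    "db1-fw02": "10.150.166.28",
    "db1-fw01": "10.150.166.29",
    "db1-cluster": "10.150.166.30",
}


def check_plan(subnet, plan):
    """Return a list of problems found in the plan (empty list means OK)."""
    problems = []
    usable = set(subnet.hosts())  # excludes network and broadcast addresses
    seen = {}
    for name, addr in plan.items():
        ip = ipaddress.ip_address(addr)
        if ip not in usable:
            problems.append(f"{name}: {ip} is not a usable host in {subnet}")
        if ip in seen:
            problems.append(f"{name}: {ip} already assigned to {seen[ip]}")
        seen[ip] = name
    return problems


if __name__ == "__main__":
    print(check_plan(VTI_SUBNET, VTI_ADDRESSES) or "plan OK")
```

A /29 has exactly six usable host addresses, so this plan (two cluster VIPs plus four members) uses the subnet completely.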

jb1-fw01:

clish

add vpn tunnel 1 type numbered local 10.150.166.26 remote 10.150.166.30 peer db1-cluster

set interface vpnt1 state on

set interface vpnt1 mtu 1500

jb1-fw02:

clish

add vpn tunnel 1 type numbered local 10.150.166.27 remote 10.150.166.30 peer db1-cluster

set interface vpnt1 state on

set interface vpnt1 mtu 1500

db1-fw01:

clish

add vpn tunnel 1 type numbered local 10.150.166.29 remote 10.150.166.25 peer jb1-cluster

set interface vpnt1 state on

set interface vpnt1 mtu 1500

db1-fw02:

clish

add vpn tunnel 1 type numbered local 10.150.166.28 remote 10.150.166.25 peer jb1-cluster

set interface vpnt1 state on

set interface vpnt1 mtu 1500
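Since the four command sets above differ only in the local address, the remote VIP and the peer name, they can be generated from a small table to avoid copy-paste mistakes. This is a rough sketch: the command strings are taken verbatim from this post, while the `MEMBERS` list and the `vti_commands` helper are hypothetical names used only here.

```python
# (member, local VTI address, remote cluster VIP on the VTI, peer object name)
# Values copied from the post; the tuple layout is an assumption for this sketch.
MEMBERS = [
    ("jb1-fw01", "10.150.166.26", "10.150.166.30", "db1-cluster"),
    ("jb1-fw02", "10.150.166.27", "10.150.166.30", "db1-cluster"),
    ("db1-fw01", "10.150.166.29", "10.150.166.25", "jb1-cluster"),
    ("db1-fw02", "10.150.166.28", "10.150.166.25", "jb1-cluster"),
]


def vti_commands(local, remote, peer, tunnel_id=1, mtu=1500):
    """Build the clish lines for one numbered VTI, as used in the post."""
    ifname = f"vpnt{tunnel_id}"
    return [
        f"add vpn tunnel {tunnel_id} type numbered local {local} remote {remote} peer {peer}",
        f"set interface {ifname} state on",
        f"set interface {ifname} mtu {mtu}",
    ]


if __name__ == "__main__":
    for member, local, remote, peer in MEMBERS:
        print(f"{member}:")
        for line in vti_commands(local, remote, peer):
            print(line)
        print()
```

Note that each member points its remote address at the other cluster's VIP, not at an individual remote member.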

Update security policy:

Update the network interfaces on the ClusterXL objects so that you can set the floating VIP on the vpnt1 interfaces: 10.150.166.25 for 'jb1-cluster' and 10.150.166.30 for 'db1-cluster'. Remember to install policy...

You should now be able to ping the remote cluster IP on each of the gateways, for example in expert mode:

[Expert@jb1-fw01:0]# ifconfig vpnt1

vpnt1 Link encap:IPIP Tunnel HWaddr

inet addr:10.150.166.26 P-t-P:10.150.166.30 Mask:255.255.255.255

UP POINTOPOINT RUNNING NOARP MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

[Expert@jb1-fw01:0]# ping 10.150.166.30

PING 10.150.166.30 (10.150.166.30) 56(84) bytes of data.

64 bytes from 10.150.166.30: icmp_seq=1 ttl=64 time=12.7 ms

64 bytes from 10.150.166.30: icmp_seq=2 ttl=64 time=11.0 ms

^C

--- 10.150.166.30 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 11.073/11.917/12.761/0.844 ms

Now enable dynamic routing over the VTIs:

jb1-fw01 & jb1-fw02:

set router-id 196.1.1.6 # external VIP

set ospf interface vpnt1 area backbone on

set ospf interface vpnt1 hello-interval 1

set ospf interface vpnt1 dead-interval 10

set ospf interface vpnt1 cost 10

set ospf interface vpnt1 priority 1

set ospf interface vpnt1 authtype cryptographic key 1 algorithm md5 key 1 secret xxxxxxxxxxxxxxxx

set ospf instance default area backbone range 10.150.166.25/32 restrict on

set inbound-route-filter ospf2 instance default restrict-all-ipv4

set inbound-route-filter ospf2 instance default route 10.0.0.0/8 between 8 and 31 on

set inbound-route-filter ospf2 instance default route 172.16.0.0/12 between 12 and 31 on

set inbound-route-filter ospf2 instance default route 192.168.0.0/16 between 16 and 31 on

set route-redistribution to ospf2 instance default from interface all on

set route-redistribution to ospf2 instance default from static-route all-ipv4-routes on

db1-fw01 & db1-fw02:

set router-id 41.1.1.26 # external VIP

set ospf interface vpnt1 area backbone on

set ospf interface vpnt1 hello-interval 1

set ospf interface vpnt1 dead-interval 10

set ospf interface vpnt1 cost 10

set ospf interface vpnt1 priority 1

set ospf interface vpnt1 authtype cryptographic key 1 algorithm md5 key 1 secret xxxxxxxxxxxxxxxx

set ospf instance default area backbone range 10.150.166.30/32 restrict on

set inbound-route-filter ospf2 instance default restrict-all-ipv4

set inbound-route-filter ospf2 instance default route 10.0.0.0/8 between 8 and 31 on

set inbound-route-filter ospf2 instance default route 172.16.0.0/12 between 12 and 31 on

set inbound-route-filter ospf2 instance default route 192.168.0.0/16 between 16 and 31 on

set route-redistribution to ospf2 instance default from interface all on

set route-redistribution to ospf2 instance default from static-route all-ipv4-routes on
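To reason about what the inbound route filters above let through, here is a small Python sketch. It assumes that `route X between A and B` accepts any prefix that falls inside X with a mask length from A to B inclusive, and that `restrict-all-ipv4` rejects everything else; that reading is an assumption, so check the Gaia documentation for your version before relying on it. The `accepted` helper is a made-up name.

```python
import ipaddress

# (covering network, minimum mask length, maximum mask length), from the post.
FILTERS = [
    ("10.0.0.0/8", 8, 31),
    ("172.16.0.0/12", 12, 31),
    ("192.168.0.0/16", 16, 31),
]


def accepted(prefix, filters=FILTERS):
    """True if the prefix matches one of the filters (assumed semantics)."""
    net = ipaddress.ip_network(prefix)
    for base, low, high in filters:
        inside = net.subnet_of(ipaddress.ip_network(base))
        if inside and low <= net.prefixlen <= high:
            return True
    return False  # assumed effect of restrict-all-ipv4: no match, no import


if __name__ == "__main__":
    for candidate in ("10.20.0.0/16", "10.150.166.25/32", "0.0.0.0/0", "172.32.0.0/16"):
        print(candidate, "accepted" if accepted(candidate) else "rejected")
```

Under this reading, RFC 1918 networks learned from the peer are imported, while a default route, public prefixes and /32 host routes are not.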

CRL retrieval:

Enable NAT on management server to construct implied rules and switch all gateways to use external IPs

May be needed temporarily to cache the CRL:

add host name checkpoint-management ipv4-address 196.1.1.4 (or whatever IP is natted to the management-server)

https://supportcenter.checkpoint.com/supportcenter/portal?eventSubmit_doGoviewsolutiondetails=&solut...

Debug IPSec:

1st session:

vpn debug trunc

vpn debug on TDERROR_ALL_ALL=5

fwaccel off

fw monitor -e "accept;" -o /var/log/fw_mon_traffic.cap

end:

<ctrl>-c

fwaccel on

vpn debug off

vpn debug ikeoff

2nd session:

fw ctl debug 0

fw ctl debug -buf 32000

fw ctl debug -m fw + conn drop vm crypt

fw ctl debug -m VPN all

fw ctl kdebug -T -f > /var/log/kernel_debug.txt

end:

<ctrl>-c

fw ctl debug 0

archive:

cd /;

tar -czf /root/sk63560.tgz /var/log/fw_mon_traffic.cap /var/log/kernel_debug.txt $FWDIR/log/ike.elg* $FWDIR/log/ikev2.xml* $FWDIR/log/vpnd.elg*;

rm -f /var/log/fw_mon_traffic.cap /var/log/kernel_debug.txt;

View $FWDIR/log/ike.elg* (IKEv1) or $FWDIR/log/ikev2.xml* (IKEv2) using IKEView utility to debug in seconds what's going on, then find the actual reason in the logs. IKEView may complain about an invalid certificate, although we're using the built-in SIC certs, due to a new remotely managed gateway perhaps not having the ability to resolve the management server name to the required IP, hence not being able to retrieve the CRL and cache it. Bit of a chicken and egg story...


@Uri_Lewitus for the documentation update 🙂


Do I need to add routing for a VTI IPsec tunnel?


When using a route-based VPN, traffic that goes through the VPN must have a route through the VTI interface (either statically defined or learned through a dynamic routing protocol).
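As a rough illustration of why the route matters, the sketch below does a longest-prefix-match lookup over a tiny routing table. The table is entirely hypothetical (10.20.0.0/16 stands in for the remote site's network and eth0 for the external interface); only the interface name vpnt1 comes from this thread.

```python
import ipaddress

# Hypothetical routing table: (destination prefix, egress interface).
ROUTES = [
    ("0.0.0.0/0", "eth0"),       # default route out of the external interface
    ("10.20.0.0/16", "vpnt1"),   # assumed remote network, routed via the VTI
]


def egress_interface(dst, routes=ROUTES):
    """Pick the egress interface by longest prefix match, or None if no route."""
    ip = ipaddress.ip_address(dst)
    matches = []
    for prefix, ifname in routes:
        net = ipaddress.ip_network(prefix)
        if ip in net:
            matches.append((net.prefixlen, ifname))
    if not matches:
        return None
    return max(matches)[1]  # highest prefix length wins


if __name__ == "__main__":
    print(egress_interface("10.20.1.5"))
    print(egress_interface("8.8.8.8"))
```

Without the 10.20.0.0/16 entry, the same destination would follow the default route and never enter the tunnel.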


Thanks, it worked.

But why can't I see logs on the VTI tunnel interface? The policy was created without the VPN community added; the VPN column is set to Any. The tunnel is working fine.


Not clear what the question is.

What precisely do you expect to see?

What do you see instead?

Screenshots (with sensitive details redacted) would be helpful.


Can I create multiple tunnels with one VTI interface?


For example, I have configured VTI 15 on an SMB gateway and the same VTI 15 on two other gateways. Will that work or not?


As far as I know you can.


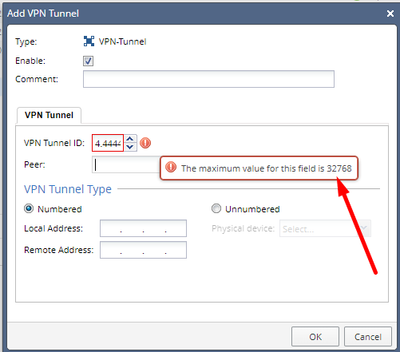

Can I create and use more than 99 VTIs? How many VTIs can I use? For testing purposes, I created 255 VTIs and imported them into the topology in SmartConsole. Will VTIs work with numbers over 99?


I wanted to know the answer to this question because on the other (third-party) site the Tunnel ID is 1008. However, the admin guide states that Tunnel IDs must be identical and must be between 1 and 99.


I had that problem once with a customer whose partner was using PAN on the other end; they simply got rid of their tunnel ID and that fixed the issue. Honestly, I have no clue where that setting even is on the CP side or how to modify it; I've never seen it myself. UNLESS it's referring to the VTI tunnel ID, in which case that has to be it...

Andy


I learnt something new today. Though I must have set up probably dozens of these tunnels before, I honestly NEVER paid much attention to that setting, but that's probably because I've never done one to Palo Alto, always to Azure and AWS.

But it shows the max value can be up to 32768 for the tunnel ID.

Andy


Hi all,

Can we create route-based VPN tunnels in a VS (virtual system) in a VSLS setup?

I'm trying to build a route-based IPsec L2L tunnel for a VS (in a VSLS setup running R80.40) to an Azure VPN gateway and use BGP route advertisements. Is this achievable? I've seen in the documentation that VTIs are not supported in a VSX environment. Is that still the case?

Is there any other way to build a route based VPN with BGP advertisements?

Appreciate your comments and findings.


VTIs are not supported with VSX in R80.40, but support for this was introduced in R81. See this very informative SK: sk79700: VSX supported features
