RX out of buffer drops on 25/40/100G (Mellanox) interfaces

After having an SR open for quite a while and doing a loop on same questions and suggestions for a few times, I’m slowly losing hope in getting anywhere near a logical explanation.

I would kindly ask if anyone is/was having the same “problem” as I am.

I’ll try to be short in the explanation:

After migrating from an older open server HW (minor SW change R81.10 JHF82 to JHF94) from a 10G Intel i340-t2 copper to a 25G Mellanox Connect-X4, we started to observe RX drops on both bond slave interfaces (on the previous hardware it was 0). There’s not really a big amount of them (100 – 200 frames every 30s) but they are constantly increasing. (RX packets:710147729181 errors:0 dropped:11891177)

Details:

Connection: Logical Bond (LACP) 2x 25G

Implementation type: datacentre FW (only FW blade currently) with a 10/6 CoreXL/SND split. Most of the traffic is accelerated.

Accelerated pkts/Total pkts : 1452240020060/1468567378332 (98%)

6 RX queues on the mentioned interfaces.

RX-ring size: 1024

Traffic rates: average: 1G - 2G, PPS 200 – 300K; Bond traffic balance is in average a 10% difference. Most of the traffic is on those 25G data interfaces.

CPU load: SND CPU cores: 10 – 25%

CoreXL CPU cores: 5 – 15%

No CPU spikes, not even near 100%. Drops are observed at 10 – 25% CPU load.

Interrupt distribution is more or less normal:

68: 2121940581 0 0 0 0 0 eth4-0

69: 1 1614573236 2 0 0 0 eth4-1

70: 1 0 351831748 0 2 0 eth4-2

71: 1 0 0 2941469923 0 0 eth4-3

72: 1 0 0 0 1388895963 0 eth4-4

73: 1 0 0 0 0 761200896 eth4-5

112: 3360525067 0 0 0 0 0 eth6-0

113: 1 4282830995 2 0 0 0 eth6-1

114: 1 0 3228875557 0 2 0 eth6-2

115: 1 0 0 330241849 0 0 eth6-3

116: 1 0 0 0 687642450 0 eth6-4

117: 1 0 0 0 0 1837144760 eth6-5

Driver version:

driver: mlx5_core

version: 5.5-1.0.3 (07 Dec 21)

firmware-version: 14.32.2004 (DEL2810000034)

Jumbo frames enabled (no change from previous HW)

RX drop type: rx_out_of_buffer: 11891177

Which should translate to …. CPU or OS was too lazy or overloaded to process all the frames listed in the RX ring buffer.

Default coalesce parameters:

Coalesce parameters for eth4:

Adaptive RX: on TX: on

rx-usecs: 32

rx-frames: 64

rx-usecs-irq: 0

rx-frames-irq: 0

In desperation tried to optimize those without any noticeable difference:

Coalesce parameters for eth4:

Adaptive RX: off TX: off

rx-usecs: 8

rx-frames: 32

rx-usecs-irq: 0

rx-frames-irq: 0

I could be wrong with the following: a “dummy” calculation of the maximum possible number of frames in the RX ring buffer (if the CPU is coping with its tasks): traffic rate 300K PPS / 2 per bond slave = 150K PPS

Converted to microseconds: 0.15 packets per µs

rx-usecs=8 / rx-frames=32 …. a frame descriptor in the RX buffer should wait at most 8 µs before an interrupt is generated and sent to one of the 6 available CPUs, and a maximum of 32 descriptors should be written before an interrupt.

With my traffic rate:

8 µs x 0.15 packets/µs = 1.2 frames, which is far fewer than 32, so in theory the 8 µs timer fires first and each interrupt finds only one or two new descriptors (collecting 32 frames would take 32 / 0.15 ≈ 213 µs). More than a handful of descriptors should not be present in the RX buffer (of course, there are also other parameters that affect that) … far from the current 1024 ….
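The estimate above is easier to check with the units written out. The following Python sketch redoes it with the figures from this post. It assumes perfectly evenly spaced arrivals on one bond slave, which real (bursty) traffic will not respect, so it only gives an average-case picture and is not a model of the mlx5 driver.

```python
# Figures taken from the post; even packet spacing is an assumption.
total_pps = 300_000        # peak packets per second on the bond
bond_slaves = 2            # traffic is split roughly evenly over 2 slaves
rx_usecs = 8               # coalescing timer in microseconds
rx_frames = 32             # coalescing frame threshold
ring_size = 1024           # RX ring descriptors per queue

pps_per_slave = total_pps / bond_slaves
packets_per_usec = pps_per_slave / 1_000_000

# Frames arriving while the coalescing timer is running.
frames_per_timer_window = rx_usecs * packets_per_usec

# Time needed to collect rx_frames frames at this rate.
usecs_to_reach_rx_frames = rx_frames / packets_per_usec

# Time an unserviced ring would need to fill completely at this rate.
usecs_to_fill_ring = ring_size / packets_per_usec

print(f"packets per microsecond:        {packets_per_usec:.2f}")
print(f"frames per {rx_usecs} us window:         {frames_per_timer_window:.1f}")
print(f"microseconds to reach {rx_frames} frames: {usecs_to_reach_rx_frames:.0f}")
print(f"microseconds to fill the ring:  {usecs_to_fill_ring:.0f}")
```

Under these assumptions the timer, not the frame threshold, triggers the interrupt, and an evenly spaced stream would need several milliseconds without any servicing to fill 1024 descriptors. That is why the drops look more like short bursts or servicing gaps than sustained overload.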

OS weight and budget are fixed values (64, 300)

I’m probably missing something here 😊

So that this is not seen as just an “open server” thing: I’m observing the same behavior on Maestro backplane 100G interfaces (same Mellanox driver) on 16600 modules. The CPU load there is not much different from the one mentioned here.

The obvious solution: increase the RX ring size. I’m trying to find a logical explanation for this.

Would appreciate any thoughts or experience on that matter.

28 Replies

I assume you started with the default RX buffer value of 256? Sometimes 1024 is not enough; you can double it to 2048 or even 4096.

Please be aware that the counters only reset after a reboot. So make a note of the drop count before the change and check whether it still increases after the change. Give it a couple of hours during peak time.
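Because the counters are cumulative, the comparison comes down to subtracting two snapshots. A minimal Python sketch of that bookkeeping follows. It parses text in the `name: value` layout that `ethtool -S` prints; the "before" value is the one quoted in this thread, while the "after" value is invented purely for illustration.

```python
import re


def parse_ethtool_stats(text):
    """Turn `ethtool -S <interface>` text into a {counter_name: int} dict."""
    stats = {}
    for line in text.splitlines():
        match = re.match(r"\s*(\S+):\s*(\d+)\s*$", line)
        if match:  # skips the "NIC statistics:" header and blank lines
            stats[match.group(1)] = int(match.group(2))
    return stats


def counter_increase(before, after, name):
    """Return how much one counter grew between two snapshots."""
    return after.get(name, 0) - before.get(name, 0)


# Snapshot before the ring size change (value quoted in the thread).
before = parse_ethtool_stats("NIC statistics:\n     rx_out_of_buffer: 11891177\n")
# Snapshot taken later; this value is made up for the example.
after = parse_ethtool_stats("NIC statistics:\n     rx_out_of_buffer: 11891377\n")

increase = counter_increase(before, after, "rx_out_of_buffer")
print(f"rx_out_of_buffer grew by {increase}")
```

Taking both snapshots over comparable peak-hour windows keeps the before/after numbers meaningful.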

Also, the output of this command on the relevant interfaces may show some more relevant information:

ethtool -S <IF name>

-------

Please press "Accept as Solution" if my post solved it 🙂

Thanks for the reply. Well, on the new HW I already started with 1024; on the previous one I had the default 256 (on the 10G interfaces). With the same traffic and the same number of RX queues, I would suppose 1024 should be enough, given that the old HW didn't have any RX drops.

ethtool -S shows only "out of buffer" drops.

Please provide output of netstat -ni counters for the relevant interface along with its full ethtool -S (interface) output. Not all RX-DRPs are traffic getting lost that you actually care about; starting in Gaia 3.10 receipt of unknown EtherTypes and improper VLAN tags will cause RX-DRP to increment. sk166424: Number of RX packet drops on interfaces increases on a Security Gateway R80.30 and higher ...

If RX-DRP is <0.1% of RX-OK for an interface, generally that is OK and you should not increase ring buffer size in a quest to make RX-DRP absolutely zero. Such a quest can lead you to Bufferbloat and actually make performance worse, and in some cases much worse. We can discuss some other options after seeing the command outputs.
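To put the 0.1% rule of thumb next to the numbers from the original post, here is a small Python sketch. It treats the `RX packets` figure as RX-OK and the `dropped` figure as RX-DRP; the helper name `drop_percentage` is just an illustrative choice.

```python
def drop_percentage(rx_ok, rx_drp):
    """RX-DRP expressed as a percentage of RX-OK."""
    if rx_ok == 0:
        return 0.0
    return 100.0 * rx_drp / rx_ok


# Counters quoted in the original post for one bond slave.
rx_ok = 710_147_729_181
rx_drp = 11_891_177

THRESHOLD_PERCENT = 0.1  # rule of thumb from this reply

pct = drop_percentage(rx_ok, rx_drp)
verdict = "below" if pct < THRESHOLD_PERCENT else "above"
print(f"RX-DRP is {pct:.5f}% of RX-OK, {verdict} the {THRESHOLD_PERCENT}% guideline")
```

For these counters the ratio is well under the guideline, which is consistent with nobody noticing the drops in production.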

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Thank you for the reply also....

I have added a file with the cmd outputs. My type of drops seems not to be related to the sk166424. I agree regarding the really small percentage of them - they are not affecting the production traffic, or at least no one is noticing them 🙂 I'm just trying to find some logic in them. Eventually I'll increase the RX buffer.

Some time ago I had a similar problem on a 21k appliance where in the end a NIC driver update solved the problem...but it was a really long time ago.

Those are legit ring buffer drops but the RX-DRP rate is so low I wouldn't worry about it.

Are the RX-DRPs incrementing slowly & continuously? Or do you get a clump of them during a policy installation? sar -n EDEV can help reveal if the RX-DRPs are continuous or only happening en masse during policy installations.

I was under the impression that the default ring buffer size is 256 for Intel 1GbE, 512 for Intel 10GbE, and 1024 for Mellanox/NVIDIA 25GbE+, so you may already be at the default ring buffer setting.

If you still want to increase it I'd suggest doubling the default size (do not crank it to the maximum) and going from there, but both before and after doing so I'd strongly advise running this bufferbloat test through the interface with the enlarged ring buffer to validate you haven't made jitter substantially worse:

https://www.waveform.com/tools/bufferbloat

RX-DRPs are incrementing continuously...but in clumps of i.e. 500 - 1000 packets get dropped every 60s. They are not related to policy installs. (attached log and short drop statistics)

Regarding the defaults; my bad, I have gone over the configuration again and apparently while I have increased the RX-buffer on the 1G interfaces to 1024, I did the same for 25G without checking the default, which is as you said 1024....so, I'm on defaults on the 25G. Sorry for the misleading info.

But still, why do you think those drops happen? If we look at the CPU usage, it should easily handle the current packet rate and never go over the current RX ring size with the current coalesce timings. Unless my bond split over two physical NICs (2 x (2x 25G NIC)) is making things worse.

Tasks: 423 total, 2 running, 421 sleeping, 0 stopped, 0 zombie

%Cpu0 : 0.0 us, 0.0 sy, 0.0 ni, 88.0 id, 0.0 wa, 2.0 hi, 10.0 si, 0.0 st

%Cpu1 : 0.0 us, 0.0 sy, 0.0 ni, 85.9 id, 0.0 wa, 2.0 hi, 12.1 si, 0.0 st

%Cpu2 : 0.0 us, 0.0 sy, 0.0 ni, 87.9 id, 0.0 wa, 2.0 hi, 10.1 si, 0.0 st

%Cpu3 : 0.0 us, 0.0 sy, 0.0 ni, 84.2 id, 0.0 wa, 3.0 hi, 12.9 si, 0.0 st

%Cpu4 : 0.0 us, 0.0 sy, 0.0 ni, 89.0 id, 0.0 wa, 2.0 hi, 9.0 si, 0.0 st

%Cpu5 : 0.0 us, 1.0 sy, 0.0 ni, 90.1 id, 0.0 wa, 2.0 hi, 6.9 si, 0.0 st

%Cpu6 : 1.0 us, 4.9 sy, 0.0 ni, 94.1 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu7 : 0.0 us, 3.1 sy, 0.0 ni, 96.9 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu8 : 0.0 us, 4.0 sy, 0.0 ni, 95.0 id, 0.0 wa, 0.0 hi, 1.0 si, 0.0 st

%Cpu9 : 2.0 us, 3.0 sy, 0.0 ni, 95.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu10 : 0.0 us, 6.0 sy, 0.0 ni, 94.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu11 : 1.0 us, 4.0 sy, 0.0 ni, 95.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu12 : 0.0 us, 3.0 sy, 0.0 ni, 95.0 id, 0.0 wa, 1.0 hi, 1.0 si, 0.0 st

%Cpu13 : 1.0 us, 4.0 sy, 0.0 ni, 95.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu14 : 0.0 us, 6.0 sy, 0.0 ni, 93.0 id, 0.0 wa, 1.0 hi, 0.0 si, 0.0 st

%Cpu15 : 1.0 us, 3.0 sy, 0.0 ni, 95.0 id, 0.0 wa, 1.0 hi, 0.0 si, 0.0 st

KiB Mem : 64903508 total, 46446860 free, 12609436 used, 5847212 buff/cache

KiB Swap: 33551748 total, 33551748 free, 0 used. 51006384 avail Mem

I don't want to waste your time on a thing that is actually not critical. As I said, I'm just trying to make some logic out of those drops.

I agree the SND CPUs should be able to keep up given their low load. Once again the RX-DRP rate is negligible and fixing it won't make anywhere close to a noticeable difference.

However since you are on open server I assume you are using a static CoreXL split. You could try increasing the number of SNDs, as doing so will create additional ring buffer queues to help spread out the traffic more and prevent RX-DRPs. However if the issue is being caused by elephant/heavy flows (fw ctl multik print_heavy_conn), the packets of a single elephant flow will always be "stuck" to the same SND core and its single ring buffer for that interface, so adding more SNDs and queues for an interface will probably not help.

So there are two things that may be happening:

1) When a SoftIRQ run starts on the dispatcher, it is able to completely empty all ring buffers and does not have to stop short. However the ring buffer completely fills up and overflows before the next SoftIRQ run starts. This is the less likely of the two scenarios and if it is occurring I don't think there is much you can do about it, other than perhaps adjusting something to make SoftIRQ runs happen more often.

2) The SoftIRQ run is being stopped short due to the maximum allowable number of frames having been processed or two jiffies going by. Because the emptying was incomplete, an overflow of the ring buffer is more likely before the next SoftIRQ run can start. See the following 2 pages from my book below, in the age of 25Gbps+ interfaces perhaps the default value of net.core.netdev_budget is insufficient and should be increased. But be warned that doing so could upset the delicate balance of CPU utilization between SoftIRQ runs and CPU utilization by the sim (SecureXL) driver on a SND core.
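The two scenarios above can be pictured with a deliberately crude toy model in Python. It is not how the kernel or the mlx5 driver is implemented: it assumes a single queue, treats each list element as the frames arriving between two SoftIRQ runs, and lets every run remove at most `budget` frames. The ring size of 1024 and the budget of 300 are the values mentioned in this thread; the arrival patterns are invented.

```python
def simulate_ring(arrivals, ring_size=1024, budget=300):
    """Count frames dropped because the ring was full.

    arrivals: frames arriving in each interval between SoftIRQ runs.
    budget:   maximum frames one SoftIRQ run may take off the ring.
    """
    queued = 0
    dropped = 0
    for arriving in arrivals:
        free_slots = ring_size - queued
        accepted = min(arriving, free_slots)
        dropped += arriving - accepted   # no free descriptor: frame is lost
        queued += accepted
        queued -= min(queued, budget)    # one SoftIRQ run drains the ring
    return dropped


# Scenario 1: runs always empty the ring, but one burst overflows it first.
burst = [200] * 10 + [1500] + [200] * 10
drops_burst_1024 = simulate_ring(burst, ring_size=1024)
drops_burst_2048 = simulate_ring(burst, ring_size=2048)

# Scenario 2: each run stops at the budget, so a backlog slowly builds up.
sustained = [350] * 100
drops_budget_300 = simulate_ring(sustained, budget=300)
drops_budget_400 = simulate_ring(sustained, budget=400)

print(drops_burst_1024, drops_burst_2048, drops_budget_300, drops_budget_400)
```

In this toy model a larger ring absorbs the isolated burst, while a larger budget removes the slow backlog. On a real gateway both knobs have side effects (latency, and CPU time taken from the sim driver), as warned above.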

I have already checked the softnet_stats but was unable to correlate the output to my drops. Although the third column is showing that some SoftIRQ stopped early those numbers do not change in correlation to the increasing number of RX-DRP:

i.e.:

when I first checked a few days ago:

526d9b30 00000000 0000001b

2373b998 00000000 00000046

ffb1af7e 00000000 00000039

9623181c 00000000 0000003c

27ed8578 00000000 00000037

260510b2 00000000 00000039

and from today:

ab2bae59 00000000 0000001b

58b6c52c 00000000 00000046

757e1d0b 00000000 0000003a

ff2fa192 00000000 0000003e

b58f086d 00000000 00000037

d51737f5 00000000 00000039

00b951bc 00000000 00000000

00a862d8 00000000 00000000

00bb74b7 00000000 00000000

00cc18e3 00000000 00000000

00de0e4b 00000000 00000000

00d1e151 00000000 00000000

00c31fc0 00000000 00000000

00bcc946 00000000 00000000

00ac045d 00000000 00000000

00c0c86b 00000000 00000000

I would interpret this output as follows: although some SoftIRQ runs are eventually terminated early, no packets are getting dropped. Is this correct?
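For reference, the first three hexadecimal columns of `/proc/net/softnet_stat` are, per CPU, packets processed, packets dropped because the per-CPU backlog queue was full, and "time squeeze" events (a SoftIRQ run ending with work still pending). The second column does not count frames the NIC discarded for lack of ring descriptors, so it can stay at zero while `rx_out_of_buffer` grows. The Python sketch below decodes the two snapshots pasted above for the six SND cores; the first column is skipped in the comparison because it is a 32-bit counter that has visibly wrapped between the snapshots.

```python
def parse_softnet_stat(text):
    """Decode the first three hex columns of /proc/net/softnet_stat."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        processed, dropped, time_squeeze = (int(f, 16) for f in fields[:3])
        rows.append(
            {"processed": processed, "dropped": dropped, "time_squeeze": time_squeeze}
        )
    return rows


# Rows for the six SND cores, copied from the two snapshots in this post.
earlier = parse_softnet_stat("""\
526d9b30 00000000 0000001b
2373b998 00000000 00000046
ffb1af7e 00000000 00000039
9623181c 00000000 0000003c
27ed8578 00000000 00000037
260510b2 00000000 00000039
""")
today = parse_softnet_stat("""\
ab2bae59 00000000 0000001b
58b6c52c 00000000 00000046
757e1d0b 00000000 0000003a
ff2fa192 00000000 0000003e
b58f086d 00000000 00000037
d51737f5 00000000 00000039
""")

squeeze_delta = [t["time_squeeze"] - e["time_squeeze"] for e, t in zip(earlier, today)]
backlog_drop_delta = [t["dropped"] - e["dropped"] for e, t in zip(earlier, today)]

print("time_squeeze increase per core:", squeeze_delta)
print("backlog drop increase per core:", backlog_drop_delta)
```

Across several days only a handful of additional early terminations show up here, which is far too few to line up with hundreds of `rx_out_of_buffer` drops per minute.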

Adding more CPUs to SND, and thereby lowering the number of packets in each RX buffer, would make sense if I had reasonably high SND CPU usage. As you wrote, it wouldn't help with elephant flows, which are the usual reason for CPU spikes...but fortunately I'm not in elephant flow territory.

I wouldn't even be digging into those drops if I had had them on the previous hardware with 10G interfaces as well (I must correct myself: as you said, the default buffer on 10G Intel was 512 and not 256)....

Thanks a lot for your opinions/suggestions. I'll probably go and increase the RX buffer to 2048 and stop digging into this matter.

Right, some SoftIRQ runs are ending early based on that output, but those are very low numbers. However, even though a few runs ended early, that does not necessarily mean that RX-DRPs will occur as a result, as the next SoftIRQ run may commence and start emptying the ring buffer again before it fills up completely. I guess if you want to increase the ring buffer size by a reasonable amount for peace of mind, that will be fine.

After thinking about this some more, I believe the reason you started seeing this when you moved from Intel to Mellanox/NVIDIA adapters is due to the difference in how these adapters handle a full ring buffer. Allow me to explain.

When certain advanced Intel NIC hardware/drivers attempt to DMA a frame from the NIC hardware buffer into a Gaia memory socket buffer (referenced with descriptors contained in the ring buffer), if the ring buffer is full at that time the frame is "pulled back", the NIC waits a moment, then tries to transfer it again hoping a slot has opened up. However suppose that the ring buffer is perennially full and frames are constantly being pulled back and having to try again. Eventually enough incoming frames pile up in the NIC hardware buffer that it too overruns and incoming frames from the wire begin to be lost. The NIC indicates this condition by incrementing both RX-DRP and RX-OVR in "lock step" for each lost frame, in other words these two counters always have exactly the same value for that interface. Even though technically the loss was due to an overrun of the NIC's hardware buffer, it was actually caused by backpressure from the perennially full ring buffer.

On older Intel NIC hardware/drivers the ability to "pull back" the frame and try again did not exist, and the frame would simply be lost if the ring buffer was full with RX-DRP incremented, along with the appropriate fifo/missed error counter. I would speculate that the Mellanox/NVIDIA adapters do not have the "pull back" capability which I find a bit surprising considering how supposedly advanced these NICs are, or perhaps they have some kind of limit on how many times a single frame can be pulled back, and when they eventually give up and drop the frame RX-DRP is incremented.

That theory would explain why there are no RX-OVR drops. Could be, thanks. I would also find it strange if Mellanox did not have the "pull back" function implemented, or if it really reaches the "retry count"....

But every reason for those drops points to the system not being able to pull the packets out of the ring buffer in time - but this is in contradiction with softnet_stats which are saying that the NAPI poll did not leave any packets behind - at least from the stats point of view.

I'm still having trouble imagining this: after the NIC writes the frames into RAM via DMA, in my case (at least from my understanding) a NIC with RSS (6 queues) has 6 x 1024 frames of space to write to and read from, and yet CPUs with a really low load are not able to cope with that. Unless there's something with ring buffer space reservation for each queue: I would suppose that the RX memory reservation size is in line with the maximum accepted frame size on the interface, which in my case is jumbo 9216. It does not really make much sense 🙂

Unless the counter rx_out_of_buffer is named inappropriately and also means something other than just the RX buffer on a queue being exhausted.

Thanks Timothy for your explanations. I will stop digging deeper here, as after all the articles read and talks, I'm starting to find myself in a loop.

As there are only RX-DRPs but 0 RX-OVR, it's IMHO not a CPU/HW-limit problem. The network is sending packets to the system that are not relevant to it. Maybe VLAN(s) that are not configured on Gaia....

Yes, indeed I thought about those drops, but they should still be shown under different stats rather than my "rx_out_of_buffer", which at least by name should not point to "unrelated packets". I also should have had them on the previous hardware, as there was no change on the network side; the drops appeared right after I switched to the new servers.

We have very similar problems with the 25G NICs from Mellanox.

We have had the high error rate since migrating to these systems.

However, the situation became significantly worse with the update to R81.20 and the associated update of the NIC drivers.

https://network.nvidia.com/pdf/firmware/ConnectX4-FW-12_22_1002-release_notes.pdf

Since then, after a policy push, we have had the problem of losing a number of applications in monitoring.

Case is pending.

Exactly, the impact of NIC driver updates is covered in my Gateway Performance Optimization Class as something to watch out for upon an upgrade or Jumbo HFA application. It does not look like the mlx* driver version shipped with R81.20 GA has been updated yet, at least as of Take 24. Here is the relevant course content which you may find useful:

I'm not entirely sure if I understood the difference between version and firmware (ethtool -i) correctly, but it's correct, we also have the version from your table (kernel driver?).

However, the firmware has changed, as announced in the release notes of R81.20.

https://downloads.checkpoint.com/fileserver/SOURCE/direct/ID/125378/FILE/CP_R81.20_ReleaseNotes.pdf

driver: mlx5_core

version: 5.5-1.0.3 (13 May 22)

firmware-version: 12.22.1002 (CP_0000000001)

Unfortunately, we did not analyze the version or parameters in detail before the update.

But it could also be that other parameters have changed. For example, flow control is now activated on the 25G Mellanox cards, but it is off on the 10G Intel cards.

(ethtool -a):

RX: on

TX: on

Unhappily, after the last push we deleted the counters with a reboot, so we are not sure whether a pause flag was sent to the switch during the push (or shortly after) (flow control is switched off here).

Regrettably, we are currently not allowed to carry out any further tests.

The SK for permanently switching off Flow Control also sounds a bit strange.

https://support.checkpoint.com/results/sk/sk61922

Especially since it is also disabled for all other cards in the system.

So we are currently relatively unsure what caused our problem and what is the best way to fix it.

Back to R81.10? Actually unfortunate and the firmware of the SFP remains up to date. Because of the same driver, shouldn't this be a problem?

Use an old SFP (with old firmware)? Update to the R81.20 Take26 (from 24)?

Are there other parameters like ring buffer or something similar?

"Version" is the version of the Gaia kernel driver used to access the NIC hardware. "firmware-version" is the level of software in the BIOS on the NIC hardware itself. Actually it does appear that the firmware can be downgraded, see here:sk141812: Installing and Configuring Dual 40GbE and Dual 100/25GbE I/O card in Applicable Check Poin...

However I have found it a bit distressing that the Mellanox/NVIDIA cards have required firmware updates on the NIC card itself after being shipped to a customer, and that the mlx driver seems to be constantly getting major updates as shown in my table above. I can't recall ever needing to update the firmware on any Intel-based NICs for a gateway, and the kernel driver versions for Intel-based NICs are rarely updated, and when they are it is very minor. I understand that the Mellanox cards are on the bleeding edge as far as speed & capabilities, but whatever that firmware update fixed must have been pretty major, as firmware updates always run the risk of bricking the card if anything goes wrong.

Generally pause frames (Ethernet Flow Control) are disabled by default for Intel-based NICs but enabled for Mellanox NICs. Not really a fan of pause frames myself as they can to some degree interfere with TCP's congestion control mechanism and degrade performance in certain situations; would be very interested to hear the justification for why they are enabled by default for Mellanox cards. However even if pause frames are enabled on the NIC, the switch will ignore them unless it is configured to honor them.

As far as where to go from here, please post the output of the following expert mode command so we can see what Multi-Queue is doing:

mq_mng -o -v

It looks like Multi-Queue is doing a pretty good job of balancing the traffic between the various dispatcher cores.

One thing that is slightly unusual: there are 42 queues (dispatcher cores) available for Multi-Queue but the mlx driver is only using 30 cores, while the Intel i40e driver is utilizing 38 (supposedly i40e can use up to 64, while igb can only use 2-16 depending on NIC hardware and has 8 in use). I was under the impression that the Mellanox cards could utilize up to 60 queues/dispatchers, but on your system it is stopping short at 30. This may be a limitation of the 25Gbps cards you are using, whereas perhaps the Mellanox 40/100 Gbps cards can utilize 60. That would be something to ask TAC about.

Only other thing is that Multi-Queue thinks it can utilize 42 cores, are you sure you have that many dispatchers in your CoreXL split? Are you sure that a Firewall Worker Instance is not also accidentally assigned to one of these 42 dispatcher cores? This will cause issues and is a common problem on VSX systems.

Hello Timothy,

thank you very much for these tips.

We had a maintenance window yesterday where we tried out a lot.

Checkpoint itself pointed us to this SK: https://support.checkpoint.com/results/sk/sk180437

Since this unfortunately didn't work, we updated to the latest take (26), also without any improvement.

Then we looked at the balancing again and quickly reset it to default, which unfortunately didn't help either.

We also took another close look at the rx_err rate and considered whether a ring buffer change would help.

Since the error rate is well below 1%, we think that's fine.

However, we have noticed that the error rate on the passive is always higher.

So today we're going to investigate whether the switches are sending things that Checkpoint doesn't support or something like that.

Unfortunately, we still have our actual problem, which is that some applications fail after a policy push.

The fact that it is due to the bonds with the 25G cards is just a theory because we have not seen any problems on the bonds with the 10G cards so far (here the RX_ERR rate is always 0).

Best regards

Lars

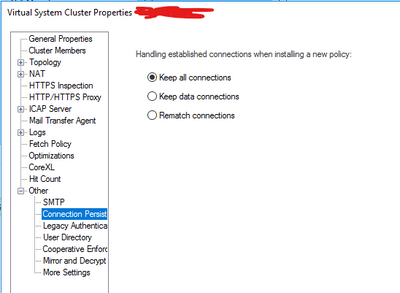

Unrelated to NICs, but what is your connection persistence setting?

We have connection issues with 'rematch' when IPS is enabled and a policy is installed.

Thank you. We have this enabled too. Unfortunately, that hasn't changed anything either.

When you say error rate do you mean RX-DRP, RX-OVR or RX-ERR? Can you please post the output of netstat -ni and ethtool -S (interface) for the problematic Mellanox interface(s). If it is truly RX-ERRs you are taking that is usually some kind of physical issue. Are they clumping up during policy installs or constantly incrementing? sar -n EDEV

If they are clumping during a policy install that might cause enough packet loss for sensitive applications to abort. The suggestion above to set "keep all" will help rule out a rematch issue where the existing connection is not allowed to continue by stateful inspection after a policy install, but I doubt that is directly related to your Mellanox NIC issue. Can you see if the dying application connection survives in the connections table after a policy install (fw ctl conntab)? If it does but stops working anyway that could be something going on at the NIC level. I also wonder whether the NIC is trying to do some kind of "helpful" hardware acceleration/offloading for certain connections that gets broken by a policy install?

What Transmit Hash balancing are you using for your Mellanox-based bonds? Another thing to try is drop all but one physical interface from that bond (leaving a "bond of one") and see if that impacts the problem as I suppose it could be something with LACP/802.3ad.

Unfortunately, we have not yet checked whether they remain in the connections table during a policy push.

We also haven't checked the RX statistics during policy installation.

We'll keep an eye on it during next maintenance window.

Do you mean the mode? We configured this:

set bonding group 1 mode 8023AD

set bonding group 1 lacp-rate slow

set bonding group 1 mii-interval 100

set bonding group 1 down-delay 200

set bonding group 1 up-delay 200

set bonding group 1 xmit-hash-policy layer2

set bonding group 2 mode 8023AD

set bonding group 2 lacp-rate slow

set bonding group 2 mii-interval 100

set bonding group 2 down-delay 200

set bonding group 2 up-delay 200

set bonding group 2 xmit-hash-policy layer2

Bond1 is eth5-01 and eth6-01 and Bond2 is eth5-02 and eth6-02 (Both 25G Mellanox Cards)

Thanks. Here are a few observations but it is unlikely that they are related to applications dying when you push policy.

1) Based on the ethtool and sar outputs, something in your network is regularly sending an Ethernet frame (probably some kind of broadcast) that contains more payload data than the 1500 MTU you are using. This frame will be discarded by the NIC and increment the RX-ERR/rx_jabbers_phy/rx_oversize_pkts_phy/rxfram error counters. Unfortunately, there is no way to see what this frame is via tcpdump/fw monitor/cppcap, as it is discarded at the NIC hardware level and there is no way to change that behavior on most NICs. You'll need a specialized sniffer appliance with customized NIC firmware to see these errored frames. For an amusing (but highly traumatizing at the time) story about how I found this out the hard way, see here: It's Not the firewall. It is possible that Intel NICs don't consider this kind of frame an error and happily accept it; I doubt this is traffic you actually care about.

2) You are using the Layer 2 XOR transmit hash policy for balancing between the multiple physical interfaces of your two bonds. For Bond1, this default algorithm is working well and keeping the physical interfaces relatively balanced; you must have a lot of diverse MAC addresses on those networks other than only the firewall and some kind of core/perimeter router. However, it is NOT working well for Bond2, which must not have a diverse set of MAC addresses present and/or be some kind of transit network, and the underlying physical interface load is imbalanced enough to cause problems. I would highly recommend setting Layer 3+4 on Bond2 on both the firewall and switch side; it probably wouldn't hurt to do it for Bond1 as well, just to standardize your configuration.

3) The RX-DRP/rx_out_of_buffer counters appear to be legitimate full ring buffer drops and not unknown EtherTypes. The drop rate is really low (0.044%), but the drops seem to be happening in clumps (not regularly), which may indicate big spikes in traffic load or a policy installation event. I would suggest grabbing a copy of the netstat -ni counters prior to a policy installation, installing policy, and checking them again. Did any applications die, and was it correlated with an RX-DRP on a relevant interface the application is using? Given how low the overall rate is I would find this highly unlikely, but it is worth investigating. If this does indeed appear to be the case, this is one of the very few cases where increasing the interface ring buffer size is justified, as you appear to have plenty of dispatcher/SND cores. But the fact that Multi-Queue is stopping short at 30 queues for these 25Gbps interfaces, when it should be able to go much higher, should be investigated first; however, that may just be a hardware limit of the specific NIC, similar to the Intel I211 interface's limit of 2 queues when using the igb driver, which supports up to 16 queues.
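The before/after comparison of netstat -ni counters suggested in 3) could be scripted roughly as follows. This is only a sketch: it assumes the usual net-tools column layout (Iface, RX-OK, RX-ERR, RX-DRP, ...), the helper names are hypothetical, and the two snapshots are invented sample values, not output from the system discussed in this thread.

```python
def parse_netstat_ni(text: str) -> dict:
    """Map interface name -> RX counters, using the header row to locate columns."""
    rows = [line.split() for line in text.strip().splitlines()]
    header = next(cols for cols in rows if cols and cols[0] == "Iface")
    counters = {}
    for cols in rows[rows.index(header) + 1:]:
        if len(cols) != len(header):
            continue  # skip lines that do not match the header layout
        row = dict(zip(header, cols))
        counters[row["Iface"]] = {
            name: int(row[name]) for name in ("RX-OK", "RX-ERR", "RX-DRP")
        }
    return counters


def rx_deltas(before: str, after: str) -> dict:
    """Per-interface counter increase between two snapshots."""
    old, new = parse_netstat_ni(before), parse_netstat_ni(after)
    return {
        iface: {name: new[iface][name] - old[iface][name] for name in new[iface]}
        for iface in new
        if iface in old
    }


# Invented sample snapshots (taken before and after a policy installation).
BEFORE = """
Kernel Interface table
Iface       MTU    RX-OK RX-ERR RX-DRP RX-OVR    TX-OK TX-ERR TX-DRP TX-OVR Flg
eth5-01    1500  1000000     10    400      0   900000      0      0      0 BMsRU
eth5-02    1500  2000000     20    900      0  1800000      0      0      0 BMsRU
"""

AFTER = """
Kernel Interface table
Iface       MTU    RX-OK RX-ERR RX-DRP RX-OVR    TX-OK TX-ERR TX-DRP TX-OVR Flg
eth5-01    1500  1050000     12    400      0   940000      0      0      0 BMsRU
eth5-02    1500  2100000     25   1150      0  1890000      0      0      0 BMsRU
"""

deltas = rx_deltas(BEFORE, AFTER)
for iface, delta in deltas.items():
    print(iface, delta)
```

In practice the two strings would come from saving the netstat -ni output to files just before and just after the policy installation; an interface whose RX-DRP delta is non-zero during that window is the one to correlate with the failing application.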

Wow, first of all, thank you for going into such an in-depth analysis with us. We had also already considered moving Bond1 to a new bond with 10G cards to test whether the problems would then go away.

Doubling the RX ring buffer brought the RX-DRP counter to a standstill.

The RX-ERR counter continues to climb steadily.

During the policy push it actually climbed faster than usual.

Switching one bond to 2 x 10G Intel interfaces (same Cisco Nexus switch) as a test brought no change.

Unfortunately, the drops and errors occur there too.

At least for us, the Mellanox SFP can now be removed from the list of suspects.

Check Point is currently analyzing the recorded data.

I will post here if there is a solution for us, even if it is a fallback to an R81.10 snapshot.

We are currently looking deeper at: https://support.checkpoint.com/results/sk/sk115963

Update:

After we installed a hotfix on October 3rd, we realized yesterday that it had nothing to do with the URL Filtering blade at all. The problem lies somewhere in the advanced networking with SecureXL. The problem does not occur with SecureXL switched off.

Hi,

Did you get any feedback or outcome from Check Point?

We are seeing the same here, even on a ClusterXL standby member.

Regards
