I'm seeing interesting appliance behaviour with the increased traffic caused by the work-from-home situation.

Traffic through the gateway has grown considerably, but no core exceeds 60% load.

We have five 10Gbps interfaces configured in three bonds, all using MQ. Since the traffic increase we have started seeing rx_missed_errors on 4 out of 5 interfaces. I ran this one-liner to get the discard percentage:

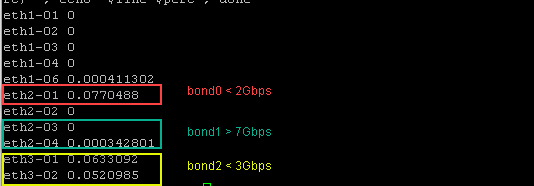

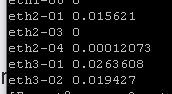

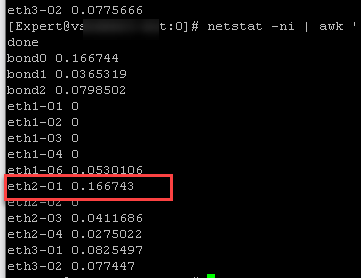

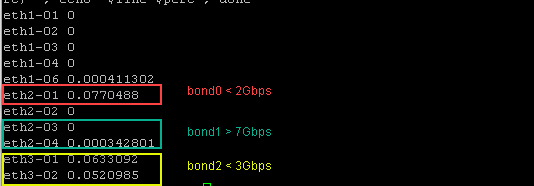

```shell
ifconfig | awk '/Link /{print $1}' | grep ^eth | while read -r line; do perc=$(ethtool -S "$line" | awk '/rx_packets/{tot=$2} /rx_missed_errors/{err=$2; tot = tot + 1; perc = err * 100 / tot; print perc}'); echo "$line $perc"; done
```
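The one-liner relies on `rx_packets` appearing before `rx_missed_errors` in the `ethtool -S` output (otherwise `tot` is still empty when the percentage is computed), and `/rx_packets/` is a substring match that could also hit other counters on some drivers. A slightly more defensive sketch, assuming the usual `name: value` layout of `ethtool -S`, matches both counters by exact name and only divides once the whole output has been read. `discard_pct` and `report_discards` are hypothetical helper names, and listing interfaces via `/sys/class/net/eth*` instead of parsing `ifconfig` is an assumption about how this box names its NICs.

```shell
#!/usr/bin/env bash
# Sketch: rx_missed_errors as a percentage of rx_packets, from `ethtool -S` output on stdin.
# Counters are matched by exact name, so their order in the output does not matter.
discard_pct() {
  awk '
    $1 == "rx_packets:"       { tot = $2 }
    $1 == "rx_missed_errors:" { err = $2 }
    END {
      # Avoid dividing by zero on an interface that has not received anything yet.
      if (tot + 0 == 0) { print "0.0000"; exit }
      printf "%.4f\n", err * 100 / tot
    }'
}

# Hypothetical wrapper: print "<interface> <discard %>" for every eth* interface.
report_discards() {
  local path dev
  for path in /sys/class/net/eth*; do
    [ -e "$path" ] || continue   # the glob matched nothing
    dev=${path##*/}
    printf '%s %s\n' "$dev" "$(ethtool -S "$dev" | discard_pct)"
  done
}
```

Calling `report_discards` gives the same two-column listing as the original one-liner, with four decimal places.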

We are getting close to the magic 0.1% threshold recommended by @Timothy_Hall, and that makes me nervous, as we are still far from the appliance's performance limits.
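Bash has no floating-point comparison, so a small awk filter is one way to flag interfaces that reach the 0.1% mark. This is a sketch assuming input lines in the `<interface> <percent>` form the one-liner prints; `flag_over` is a hypothetical name, and its default limit of 0.1 is simply the figure quoted above.

```shell
#!/usr/bin/env bash
# Sketch: read "<interface> <discard %>" lines on stdin and print those at or above a limit.
# The limit (in percent) defaults to 0.1; pass another value as the first argument.
flag_over() {
  local limit="${1:-0.1}"
  awk -v limit="$limit" '
    # "+ 0" forces a numeric comparison; an empty percentage counts as 0.
    $2 + 0 >= limit + 0 { print $1, $2, "OVER" }'
}
```

Piping the one-liner's output into `flag_over` lists only the offending interfaces; `flag_over 0.05` would give an earlier warning.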

What's peculiar is that it is not the busiest interface that has the most discards; it is actually the one with the least traffic (bond0 / eth2-01).

Of course I could chuck more CPU at it, but considering it runs at 60% now, I really shouldn't be seeing any discards, if you ask me.

MQ has 6 cores configured, and all of them seem to be running at roughly the same load.
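Even CPU load across the MQ cores does not necessarily mean the packets are spread evenly across the RX queues, so comparing the per-queue RX counters in `ethtool -S` is one way to check. The sketch below assumes ixgbe-style counter names (`rx_queue_<N>_packets`); other drivers name these counters differently, so the pattern may need adjusting. `queue_share` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: per-queue share of received packets from `ethtool -S` output on stdin.
# Assumes ixgbe-style counter names such as "rx_queue_0_packets:". The counters are
# cumulative since the driver last reset them, so this shows the long-run split.
queue_share() {
  awk '
    $1 ~ /^rx_queue_[0-9]+_packets:$/ {
      name = $1
      sub(/_packets:$/, "", name)   # "rx_queue_0_packets:" -> "rx_queue_0"
      n++; q[n] = name; p[n] = $2; total += $2
    }
    END {
      for (i = 1; i <= n; i++)
        printf "%s %.2f\n", q[i], (total > 0 ? p[i] * 100 / total : 0)
    }'
}
```

For example, `ethtool -S eth2-01 | queue_share`; a single queue carrying a much larger share than the rest could point at uneven flow hashing rather than a lack of CPU.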

I might try increasing the RX ring buffer on my standby node from 512 to 1024, just as a test.
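Before changing the ring size, it may be worth confirming the driver's maximum with `ethtool -g`. The sketch below pulls the current and maximum RX ring sizes out of that output; it assumes the standard "Pre-set maximums" / "Current hardware settings" layout, and `rx_ring` is a hypothetical helper name. Changes made with `ethtool -G` do not survive a reboot and, on many drivers, briefly reset the link, which is another reason to try this on the standby node first.

```shell
#!/usr/bin/env bash
# Sketch: print "<current RX ring> <maximum RX ring>" from `ethtool -g` output on stdin.
rx_ring() {
  awk '
    /^Pre-set maximums/          { sec = "max" }
    /^Current hardware settings/ { sec = "cur" }
    # Only the plain "RX:" line; "RX Mini:" and "RX Jumbo:" have "RX" as the first field.
    $1 == "RX:" {
      if (sec == "max") max = $2
      else if (sec == "cur") cur = $2
    }
    END { print cur, max }'
}
```

Running `ethtool -g eth2-01 | rx_ring` shows whether 1024 is within the NIC's limit, and `ethtool -G eth2-01 rx 1024` then applies the test value.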

But any other thoughts are welcome!

P.S. I have already tried disabling optimised drops, but it did not help.