Playing with benchmarking tools, is there a preferred direction???

Hello Check Pointers,

I have a question; maybe you can enlighten me with your experience in benchmarking and performance tuning ...

I have an old firewall: an open server with 4 CPUs and no blades, just FW enabled. During working hours it is totally overloaded; only SecureXL keeps it alive :-).

Outside working hours the load is of course very low and we achieve 1G wire speed, yes really!

But during working hours the speed from LAN to DMZ is horrible, while the other direction is "good".

Yes, sure, the firewall has reached its end of life; a replacement is planned!

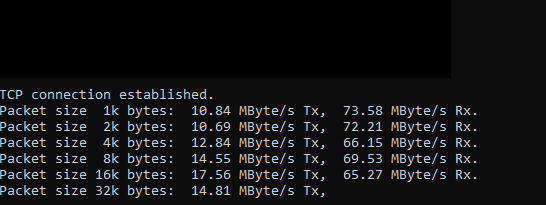

When we run a benchmark we use NetIO (not the best, I know, but pretty common in the German-speaking world), and we see that some connections are ALWAYS much faster than others.

LAN -> DMZ is always SLOW

DMZ -> LAN is always FAST

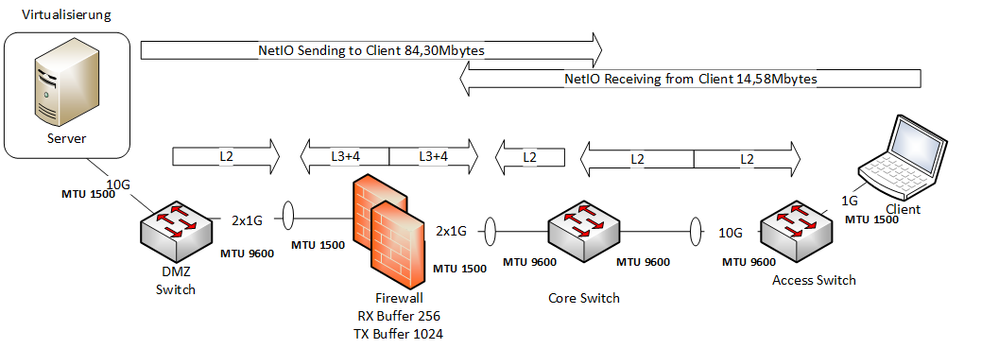

I have a quick drawing.

Overall question: why is a benchmark from LAN to DMZ bad, while a test from DMZ to LAN is good?

Is there a preferred direction?

The benchmark tool uses the same source and destination ports for both directions, for UDP and TCP.

As you can see, we have different MTUs and different load-sharing settings on the interfaces (the firewall uses L3+4 distribution, the switch L2).

Could the different port speeds be causing different window sizes?

The bond on the LAN interface combines an onboard NIC and a Broadcom NIC, a double no-go 🙂
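One way the load-sharing settings could matter: a bond picks a single member link per frame from a hash, so one benchmark stream never uses more than one link, and each end of the bond makes its own choice for the direction it transmits. The sketch below is a simplified model of the `layer2` and `layer3+4` formulas as described in older Linux bonding documentation. The MAC addresses, IP addresses, ports, and the two-link bond are made-up values, and the switch's real L2 algorithm is vendor specific, so treat this as an illustration only.

```python
import ipaddress

def mac_to_int(mac: str) -> int:
    """Turn 'aa:bb:cc:dd:ee:ff' into an integer."""
    return int(mac.replace(":", ""), 16)

def layer2_link(src_mac: str, dst_mac: str, links: int) -> int:
    """Simplified layer2 policy: (source MAC XOR destination MAC) mod link count."""
    return (mac_to_int(src_mac) ^ mac_to_int(dst_mac)) % links

def layer34_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, links: int) -> int:
    """Simplified layer3+4 policy from older bonding docs:
    ((sport XOR dport) XOR ((src IP XOR dst IP) AND 0xffff)) mod link count."""
    ip_bits = (int(ipaddress.ip_address(src_ip)) ^ int(ipaddress.ip_address(dst_ip))) & 0xFFFF
    return ((src_port ^ dst_port) ^ ip_bits) % links

LINKS = 2  # assumed bond size

# Hypothetical router and firewall MACs: every frame between them hashes the same way.
l2_links = {layer2_link("00:11:22:33:44:55", "00:aa:bb:cc:dd:01", LINKS) for _ in range(8)}

# Hypothetical hosts and ports: varying the source port spreads flows over both links.
l34_links = {
    layer34_link("10.0.0.10", "192.168.1.20", sport, 18767, LINKS)
    for sport in range(50000, 50008)
}

print("layer2 uses links:", sorted(l2_links))
print("layer3+4 uses links:", sorted(l34_links))
```

If the benchmark really reuses the same ports in both directions, each direction stays pinned to whichever link its sender's hash selects, so a busy or weaker member link could hurt one direction only.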

I know this is far too little information to get a precise answer ...

But besides replacing the firewall hardware, what are your thoughts on this?

best regards

Thomas.

10 Replies

The answer to your question will be highly dependent on your code level; what is it? If R80.10 or lower, fragmentation due to the differing MTUs is going to kill you, as fragmented traffic cannot be accelerated in R80.10 or lower. Another possible explanation for throughput that differs by direction is network interface errors, which will be revealed by netstat -ni. It looks like you may have changed ring buffer sizes from the defaults, which is almost never a good idea and can easily make things worse. Also, Broadcom NICs are terrible and should never be used to carry production traffic.
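To get a feel for what differing MTUs cost, here is a minimal sketch of the IPv4 fragmentation arithmetic. The 9000-byte ingress size is an assumed example (the thread does not state the actual MTU values), and the function name is made up for illustration.

```python
import math

def fragment_count(packet_len: int, egress_mtu: int, ip_header: int = 20) -> int:
    """Number of IPv4 packets needed to carry packet_len bytes over egress_mtu.

    Assumes a 20-byte IP header without options and that the DF bit is not set;
    with DF set the packet would be dropped and an ICMP error returned instead.
    """
    if packet_len <= egress_mtu:
        return 1
    payload = packet_len - ip_header
    # Every fragment except the last must carry a multiple of 8 payload bytes.
    per_fragment = (egress_mtu - ip_header) // 8 * 8
    return math.ceil(payload / per_fragment)

# Assumed example: jumbo frames on one side, standard MTU on the other.
print(fragment_count(9000, 1500))  # one large packet becomes several small ones
print(fragment_count(1500, 1500))  # fits, no fragmentation
```

Only the direction that goes from the larger MTU to the smaller one fragments, which is one way a purely directional slowdown can arise.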

Please provide the output of the "Super Seven", ideally taken when the firewall is under heavy load and I can give you a more definitive answer:

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Hello Timothy,

as always, the story is longer than my first explanation ...

We updated from R77.30 to R80.30 Take 155, and after some time we encountered the slowness.

I see NO network interface errors and no drops at all!

There is no indication of fragmentation.

I am looking forward to getting access and running your Super Seven to provide you with more details!

R80.30 has a known problem where the TLS parser is invoked inappropriately which causes performance issues, fixed in Jumbo HFA Take 219+ and you are running 155...does this apply to your scenario:

Hello,

So here is the Super7 output ...

+-----------------------------------------------------------------------------+

| Super Seven Performance Assessment Commands v0.4 (Thanks to Timothy Hall) |

+-----------------------------------------------------------------------------+

| Inspecting your environment: OK |

| This is a firewall....(continuing) |

| |

| Referred pagenumbers are to be found in the following book: |

| Max Power 2020: Check Point Firewall Performance Optimization - 3rd Edition |

| |

| or when specifically mentioned in |

| |

| Max Power: Check Point Firewall Performance Optimization - Second Edition |

| |

| Available at http://www.maxpowerfirewalls.com/ |

+-----------------------------------------------------------------------------+

| Command #1: fwaccel stat |

| |

| Check for : Accelerator Status must be enabled (R77.xx/R80.10 versions) |

| Status must be enabled (R80.20 and higher) |

| Accept Templates must be enabled |

| Message "disabled" from (low rule number) = bad |

| |

| Chapter 7: SecureXL throughput acceleration & SMT |

| Chapter 8: Access Control Policy Tuning |

| Page 286 |

+-----------------------------------------------------------------------------+

| Output: |

+-----------------------------------------------------------------------------+

|Id|Name |Status |Interfaces |Features |

+-----------------------------------------------------------------------------+

|0 |SND |enabled |eth8,eth9,eth0,eth1,eth3,|

| | | |eth4,eth5,eth7 |Acceleration,Cryptography |

| | | | |Crypto: Tunnel,UDPEncap,MD5, |

| | | | |SHA1,NULL,3DES,DES,CAST, |

| | | | |CAST-40,AES-128,AES-256,ESP, |

| | | | |LinkSelection,DynamicVPN, |

| | | | |NatTraversal,AES-XCBC,SHA256 |

+-----------------------------------------------------------------------------+

#### THIS IS NOT THE BEST ... THIS MUST BE FINE-TUNED, I KNOW! ###

Accept Templates : disabled by Firewall

Layer ---

Drop Templates : disabled

NAT Templates : disabled by Firewall

Layer ---

+-----------------------------------------------------------------------------+

| Command #2: fwaccel stats -s |

| |

| Check for : Accelerated conns/Totals conns: >50% desired, >75% ideal |

| Accelerated pkts/Total pkts : >50% great |

| PXL pkts/Total pkts : >50% OK |

| F2Fed pkts/Total pkts : <30% good, <10% great |

| |

| Chapter 7: SecureXL throughput acceleration & SMT |

| Chapter 8: Access Control Policy Tuning |

| Page 288 |

+-----------------------------------------------------------------------------+

| Output: |

Accelerated conns/Total conns : 6703/67259 (9%)

Accelerated pkts/Total pkts : 30103888360/44020630400 (68%)

F2Fed pkts/Total pkts : 451395237/44020630400 (1%)

F2V pkts/Total pkts : 119988419/44020630400 (0%)

CPASXL pkts/Total pkts : 0/44020630400 (0%)

PSLXL pkts/Total pkts : 13465346803/44020630400 (30%)

QOS inbound pkts/Total pkts : 0/44020630400 (0%)

QOS outbound pkts/Total pkts : 0/44020630400 (0%)

Corrected pkts/Total pkts : 0/44020630400 (0%)

+-----------------------------------------------------------------------------+

| Command #3: grep -c ^processor /proc/cpuinfo && /sbin/cpuinfo |

| |

| Check for : If number of cores is roughly double what you are excpecting, |

| hyperthreading may be enabled |

| |

| Chapter 6: CoreXL & Multi-Queue |

| Page 175 |

+-----------------------------------------------------------------------------+

| Output: |

4

#### THIS IS AN IBM OPEN SERVER, NOT AN APPLIANCE! ###

HyperThreading=disabled

+-----------------------------------------------------------------------------+

| Command #4: fw ctl affinity -l -r |

| |

| Check for : SND/IRQ/Dispatcher Cores, # of CPU's allocated to interface(s) |

| Firewall Workers/INSPECT Cores, # of CPU's allocated to fw_x |

| R77.30: Support processes executed on ALL CPU's |

| R80.xx: Support processes only executed on Firewall Worker Cores|

| |

| Chapter 6: CoreXL & Multi-Queue |

| Page 193 |

+-----------------------------------------------------------------------------+

| Output: |

CPU 0: eth8 eth9 eth0 eth1 eth3 eth4 eth5 eth7

CPU 1: fw_2

wsdnsd fwd vpnd mpdaemon lpd in.asessiond rtmd cpd cprid

CPU 2: fw_1

wsdnsd fwd vpnd mpdaemon lpd in.asessiond rtmd cpd cprid

CPU 3: fw_0

wsdnsd fwd vpnd mpdaemon lpd in.asessiond rtmd cpd cprid

All:

+-----------------------------------------------------------------------------+

| Command #5: netstat -ni |

| |

| Check for : RX/TX errors |

| RX-DRP % should be <0.1% calculated by (RX-DRP/RX-OK)*100 |

| TX-ERR might indicate Fast Ethernet/100Mbps Duplex Mismatch |

| |

| Chapter 2: Layers 1&2 Performance Optimization |

| Page 68-80 |

| |

| Chapter 6: CoreXL & Multi-Queue |

| Page 179 |

+-----------------------------------------------------------------------------+

| Output: |

Kernel Interface table

Iface MTU Met RX-OK RX-ERR RX-DRP RX-OVR TX-OK TX-ERR TX-DRP TX-OVR Flg

bond0 1500 0 10810656204 0 0 0 9770272262 0 0 0 BMmRU

bond0.1004 1500 0 6090717681 0 0 0 6012406754 0 0 0 BMmRU

bond0.1005 1500 0 4718182186 0 0 0 3836726057 0 0 0 BMmRU

bond0.1006 1500 0 2019 0 0 0 8153 0 0 0 BMmRU

bond0.1007 1500 0 25425 0 0 0 31600 0 0 0 BMmRU

bond0.1030 1500 0 46021 0 0 0 52089 0 0 0 BMmRU

bond0.1031 1500 0 1641519 0 0 0 2037836 0 0 0 BMmRU

bond1 1500 0 10840079092 0 159 0 10711067502 0 0 0 BMmRU

bond1.1001 1500 0 3165593126 0 0 0 2920683462 0 0 0 BMmRU

bond1.1002 1500 0 2273374428 0 0 0 2137544956 0 0 0 BMmRU

bond1.1008 1500 0 47222589 0 0 0 45028303 0 0 0 BMmRU

bond1.1010 1500 0 4168233 0 0 0 3964803 0 0 0 BMmRU

bond1.1014 1500 0 18936042 0 0 0 12233215 0 0 0 BMmRU

bond1.1015 1500 0 1676377650 0 0 0 608444189 0 0 0 BMmRU

bond1.1016 1500 0 26136974 0 0 0 27259574 0 0 0 BMmRU

bond1.1017 1500 0 1891577675 0 0 0 2182792432 0 0 0 BMmRU

bond1.1024 1500 0 2736033 0 0 0 2165633 0 0 0 BMmRU

bond1.1025 1500 0 0 0 0 0 5 0 0 0 BMmRU

bond1.1094 1500 0 1096857550 0 0 0 2263968718 0 0 0 BMmRU

bond1.1095 1500 0 344011256 0 0 0 126396012 0 0 0 BMmRU

bond1.1096 1500 0 57293602 0 0 0 59563484 0 0 0 BMmRU

bond1.1097 1500 0 3 0 0 0 8153 0 0 0 BMmRU

bond1.1098 1500 0 3 0 0 0 8153 0 0 0 BMmRU

bond1.1100 1500 0 111448482 0 0 0 123177644 0 0 0 BMmRU

bond1.3001 1500 0 2021 0 0 0 8151 0 0 0 BMmRU

bond1.3002 1500 0 102850256 0 0 0 194671619 0 0 0 BMmRU

bond1.3011 1500 0 21451673 0 0 0 46844978 0 0 0 BMmRU

bond2 1500 0 8794071237 0 26723 0 10068447949 0 0 0 BMmRU

bond2.1013 1500 0 8614527101 0 0 0 9666734887 0 0 0 BMmRU

bond2.1520 1500 0 32077120 0 0 0 26240130 0 0 0 BMmRU

bond2.1521 1500 0 17487131 0 0 0 19886502 0 0 0 BMmRU

bond2.1522 1500 0 7 0 0 0 8151 0 0 0 BMmRU

bond2.1550 1500 0 25819654 0 0 0 24048269 0 0 0 BMmRU

bond2.1580 1500 0 102690997 0 0 0 332519248 0 0 0 BMmRU

bond3 1500 0 430623279 0 0 0 212809864 0 0 0 BMmRU

bond3.1003 1500 0 414118512 0 0 0 157904849 0 0 0 BMmRU

bond3.1012 1500 0 16462730 0 0 0 54952999 0 0 0 BMmRU

eth0 1500 0 2311777931 0 0 0 4927868792 0 0 0 BMsRU

eth1 1500 0 4142939995 0 9 0 5349009299 0 0 0 BMsRU

eth3 1500 0 389943270 0 0 0 110072023 0 0 0 BMsRU

eth4 1500 0 8498878761 0 0 0 4842403951 0 0 0 BMsRU

eth5 1500 0 6697139520 0 150 0 5362058554 0 0 0 BMsRU

eth7 1500 0 40680011 0 0 0 102737841 0 0 0 BMsRU

eth8 1500 0 4456758570 0 0 0 4423256669 0 0 0 BMsRU

eth9 1500 0 4337312667 0 26723 0 5645191280 0 0 0 BMsRU

lo 16436 0 156204 0 0 0 156204 0 0 0 LRU

interface eth0: There are no RX drops

interface eth1: There are no RX drops

interface eth3: There are no RX drops

interface eth4: There are no RX drops

interface eth5: There are no RX drops

interface eth7: There are no RX drops

interface eth8: There are no RX drops

interface eth9: There are no RX drops

+-----------------------------------------------------------------------------+

| Command #6: fw ctl multik stat |

| |

| Check for : Large # of conns on Worker 0 - IPSec VPN/VoIP? |

| Large imbalance of connections on a single or multiple Workers |

| |

| Chapter 6: CoreXL & Multi-Queue |

| Page 216 |

| |

| Chapter 9: Site-to-Site VPN Optimization |

| Page 329 |

| |

| Max Power: Check Point Firewall Performance Optimization - Second Edition |

| Chapter 7: CoreXL Tuning |

| Page 241 |

| |

| Chapter 8: CoreXL VPN Optimization |

| Page 256 |

+-----------------------------------------------------------------------------+

| Output: |

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 3 | 23101 | 29698

1 | Yes | 2 | 21817 | 29293

2 | Yes | 1 | 23289 | 28789

+-----------------------------------------------------------------------------+

| Command #7: cpstat os -f multi_cpu -o 1 -c 5 |

| |

| Check for : High SND/IRQ Core Utilization |

| High Firewall Worker Core Utilization |

| |

| Chapter 6: CoreXL & Multi-Queue |

| Page 173 |

+-----------------------------------------------------------------------------+

| Output: |

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 55| 45| 55| ?| 77859|

| 2| 5| 26| 69| 31| ?| 77861|

| 3| 4| 28| 69| 31| ?| 77861|

| 4| 3| 26| 71| 29| ?| 77863|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 55| 45| 55| ?| 77859|

| 2| 5| 26| 69| 31| ?| 77861|

| 3| 4| 28| 69| 31| ?| 77861|

| 4| 3| 26| 71| 29| ?| 77863|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 56| 44| 56| ?| 71746|

| 2| 1| 29| 71| 29| ?| 71751|

| 3| 1| 26| 73| 27| ?| 71755|

| 4| 1| 25| 74| 26| ?| 71757|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 56| 44| 56| ?| 71746|

| 2| 1| 29| 71| 29| ?| 71751|

| 3| 1| 26| 73| 27| ?| 71755|

| 4| 1| 25| 74| 26| ?| 71757|

---------------------------------------------------------------------------------

Processors load

---------------------------------------------------------------------------------

|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|

---------------------------------------------------------------------------------

| 1| 0| 55| 46| 54| ?| 23471|

| 2| 2| 23| 75| 25| ?| 23476|

| 3| 1| 26| 74| 26| ?| 23479|

| 4| 1| 21| 78| 22| ?| 46966|

---------------------------------------------------------------------------------

+-----------------------------------------------------------------------------+

| Thanks for using s7pac |

+-----------------------------------------------------------------------------+

We did run cpsizeme too, of course; new hardware is on its way, but the replacement will take some time!

best regards

Thomas.

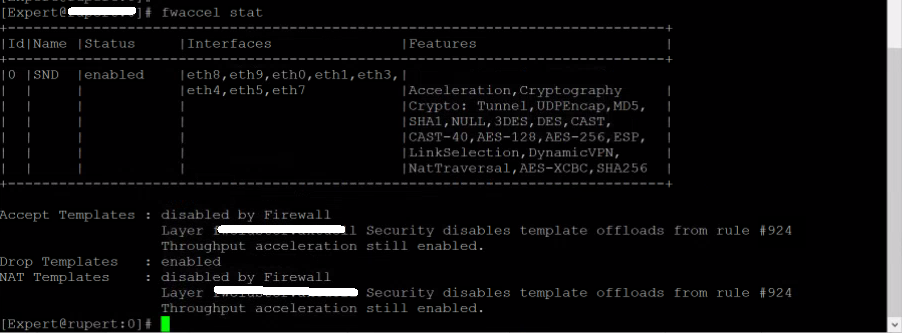

Your policy layer name is too long in the SmartConsole; please shorten it to 31 or fewer characters, install policy, run fwaccel stat again, and post the output. Based on the 9% accept templating rate, the policy probably needs some optimization.

Depending on what blades you have enabled, that 30% PXL percentage (PSLXL in the output above) might be OK, or it might be the TLS parser issue I mentioned. Please provide the output of enabled_blades.
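The percentages discussed here can be recomputed from the raw counters, which is handy when comparing runs before and after a policy change. This is a small sketch that parses lines in the `fwaccel stats -s` layout posted earlier in the thread; the regular expression and function name are illustrative and assume that exact "name : numerator/denominator" layout.

```python
import re

# Lines copied from the fwaccel stats -s output earlier in the thread.
SAMPLE = """\
Accelerated conns/Total conns : 6703/67259 (9%)
Accelerated pkts/Total pkts : 30103888360/44020630400 (68%)
F2Fed pkts/Total pkts : 451395237/44020630400 (1%)
PSLXL pkts/Total pkts : 13465346803/44020630400 (30%)
"""

LINE = re.compile(r"^(?P<name>.+?)\s*:\s*(?P<num>\d+)/(?P<den>\d+)")

def ratios(text: str) -> dict:
    """Map each counter name to its percentage, skipping zero denominators."""
    result = {}
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if match and int(match["den"]) > 0:
            result[match["name"]] = 100.0 * int(match["num"]) / int(match["den"])
    return result

stats = ratios(SAMPLE)
for name, pct in stats.items():
    print(f"{name:32s} {pct:6.2f}%")
```

The tool output appears to truncate rather than round, so 9.97% of connections is displayed as 9%.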

Everything else, including network counters and the CoreXL split, looks fine.
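As a rough cross-check of that assessment, the sketch below applies the RX-DRP guideline quoted in the Super Seven banner, (RX-DRP/RX-OK)*100 below 0.1%, to the interfaces that show non-zero drops, and measures how evenly connections are spread over the three workers. The numbers are copied from the posted output; the function names and the "spread relative to the mean" measure are my own choices, not a Check Point metric.

```python
# (RX-OK, RX-DRP) for interfaces with non-zero RX-DRP in the posted netstat -ni output.
NETSTAT = {
    "bond1": (10840079092, 159),
    "bond2": (8794071237, 26723),
    "eth1": (4142939995, 9),
    "eth5": (6697139520, 150),
    "eth9": (4337312667, 26723),
}

# Current connections per firewall worker from fw ctl multik stat.
WORKER_CONNS = [23101, 21817, 23289]

def rx_drop_percent(rx_ok: int, rx_drp: int) -> float:
    """(RX-DRP / RX-OK) * 100, as described in the Super Seven banner."""
    return 100.0 * rx_drp / rx_ok if rx_ok else 0.0

def imbalance_percent(conns: list) -> float:
    """Spread between busiest and quietest worker, relative to the mean."""
    mean = sum(conns) / len(conns)
    return 100.0 * (max(conns) - min(conns)) / mean

worst_iface, worst_pct = max(
    ((name, rx_drop_percent(*counters)) for name, counters in NETSTAT.items()),
    key=lambda item: item[1],
)
print(f"worst RX-DRP: {worst_iface} at {worst_pct:.6f}% (guideline: < 0.1%)")
print(f"worker imbalance: {imbalance_percent(WORKER_CONNS):.1f}%")
```

Both values are small here, which is consistent with the network counters and the worker distribution not being the bottleneck.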

Hello,

I changed the policy name and here is the output!

I saw that DHCP relay still used the old legacy protocols, which stopped accept templates at rule #11.

I moved those rules to the bottom, to #924.

I will also switch to the new services in a maintenance window ...

The blades are as follows:

enabled_blades

fw mon vpn

So it's really not that much!

Hello,

but the main point of my question still stands: why is there a preference for a certain direction when I run a speed test?

It's clear the firewall is old and has reached its end of life ... but how can it be explained that some directions are fast and others are slow?

Assuming there is not some kind of asymmetry in the network routing, tracking down directional speed differences like that is going to be tough.

Try running top and hit 1 if needed to display all individual cores. Start one of your benchmark tests and watch the individual core utilizations carefully; do any of them drop to around 0% idle? If so, that is the bottleneck.
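If watching top live is impractical, the same check can be run afterwards on saved samples. The sketch below parses rows in the `cpstat os -f multi_cpu` table layout posted earlier and flags any core whose idle time falls to a threshold. The 5% threshold and the function names are assumptions for illustration, and the sample rows are from the earlier, non-benchmark capture.

```python
# Two samples copied from the cpstat output earlier in the thread.
SAMPLE = """\
|CPU#|User Time(%)|System Time(%)|Idle Time(%)|Usage(%)|Run queue|Interrupts/sec|
| 1| 0| 55| 45| 55| ?| 77859|
| 2| 5| 26| 69| 31| ?| 77861|
| 3| 4| 28| 69| 31| ?| 77861|
| 4| 3| 26| 71| 29| ?| 77863|
| 1| 0| 56| 44| 56| ?| 71746|
| 2| 1| 29| 71| 29| ?| 71751|
| 3| 1| 26| 73| 27| ?| 71755|
| 4| 1| 25| 74| 26| ?| 71757|
"""

def idle_by_cpu(text: str) -> dict:
    """Collect the Idle Time(%) column per CPU number across all samples."""
    idle = {}
    for line in text.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        # Data rows start with a numeric CPU number; headers and rulers do not.
        if len(cells) >= 4 and cells[0].isdigit():
            idle.setdefault(int(cells[0]), []).append(int(cells[3]))
    return idle

def saturated(idle: dict, threshold: int = 5) -> list:
    """CPUs whose idle time dropped to the threshold or below in any sample."""
    return sorted(cpu for cpu, samples in idle.items() if min(samples) <= threshold)

idle = idle_by_cpu(SAMPLE)
for cpu, samples in sorted(idle.items()):
    print(f"CPU {cpu}: min idle {min(samples)}%")
print("saturated cores:", saturated(idle))
```

In these samples no core is close to 0% idle, although CPU 1 in this table (which appears to correspond to the SND core, CPU 0 in the affinity listing) is clearly the busiest.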

If none of them drop to near 0% idle, at that point you need to gather a packet capture and pull it into Wireshark for protocol analysis at the TCP level. Wireshark can help with locating TCP zero windows and other network protocol-based conditions that can slow you down; the essential question you need to answer is whether the slower performance is being caused by latency (or jitter) or flat-out packet loss. In general performing that type of advanced analysis directly from the CLI is difficult. You can try running your packet capture through the cpmonitor tool built into Gaia for some fast statistical analysis (sk103212: Traffic analysis using the 'CPMonitor' tool), but I think you'll need the power of Wireshark analysis for this one.
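To make the latency-versus-loss question concrete, here are two standard back-of-the-envelope bounds for a single TCP stream: the window-limited rate (window / RTT) and the loss-limited estimate from the Mathis et al. approximation, (MSS / RTT) * (C / sqrt(p)) with C = sqrt(3/2). All input values below are hypothetical, not measurements from this firewall.

```python
import math

def window_limited_mbps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound for one TCP stream: at most one window per round trip."""
    return window_bytes * 8 / rtt_s / 1e6

def loss_limited_mbps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Mathis approximation: (MSS / RTT) * (sqrt(3/2) / sqrt(p))."""
    return (mss_bytes * 8 / rtt_s) * (math.sqrt(1.5) / math.sqrt(loss_rate)) / 1e6

# Hypothetical LAN values: 64 KiB window without scaling, 1 ms round trip.
print(f"window limited: {window_limited_mbps(65535, 0.001):.1f} Mbit/s")

# Same path with two assumed loss rates; more loss means a lower ceiling.
for loss in (0.0001, 0.001, 0.01):
    print(f"loss {loss:.4f}: {loss_limited_mbps(1460, 0.001, loss):.1f} Mbit/s")
```

If the capture shows small advertised windows or zero windows in the slow direction, the first bound is the one to look at; if it shows retransmissions, the second one is.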

Open a case with TAC to get behind this...

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

Hi, yes, we already have a TAC case running.
