Maximum reachable bandwidth 3800

Hello,

We have an IPsec tunnel between two sites, each with a 3800. The available Internet bandwidth is 1 Gbit/s, and the latency is about 12 ms. There is an IP test node behind the firewall on both sides. We can't achieve more than 300 Mbit/s between the two test nodes over the tunnel. The encryption is AES-256, but even if we set the encryption to none, throughput stays at this maximum. The firewalls run R80.40 JHF 190, and all blades except FW and VPN are disabled during the tests, with the exception that the following parameters are set:

cphwd_medium_path_qid_by_cpu_id = 1

cphwd_medium_path_qid_by_mspi = 0

We do not see a CPU overload.

We ran the download tests with simple http download and also using iperf.

If we connect the test nodes directly to the Internet without the firewalls, we reach the maximum bandwidth of 1 Gbit/s.

If we perform an additional parallel download via a second tunnel to another site with a 3800, the bandwidth doubles!

What is your experience? Is there a maximum bandwidth per connection that is limited by the hardware of the firewall? Have you ever seen this magical 300 Mbit/s?

Thanks

19 Replies

That's expected behavior for a single heavy "elephant" flow.

The constraint is that only a single core handles any given flow.

We have started to address this in R81.20 with HyperFlow, which we are covering in a TechTalk next week.

Since you're only using Firewall and VPN, HyperFlow wouldn't help, as it currently only helps with Medium Path inspection.

However, there are other improvements to VPN that might improve speed somewhat.
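
To illustrate why one tunnel ends up on one core, here is a minimal Python sketch. It is not Check Point's actual distribution algorithm: the hash function, the choice of key fields, the addresses, the SPI value, and the number of cores are all assumptions made for the example. The point is only that when a dispatcher keys on per-flow fields, every packet of the same flow maps to the same core.

```python
import hashlib

def pick_core(flow_key: tuple, n_cores: int) -> int:
    """Map a flow to a core index with a stable hash (illustrative only)."""
    digest = hashlib.sha256(repr(flow_key).encode()).digest()
    return int.from_bytes(digest[:4], "big") % n_cores

# Hypothetical ESP flow: (source gateway, destination gateway, SPI).
tunnel = ("198.51.100.1", "203.0.113.1", 0x1A2B3C4D)

# Every packet of the same tunnel carries the same key,
# so all 10,000 simulated packets land on a single core.
cores_used = {pick_core(tunnel, n_cores=2) for _ in range(10_000)}
print(len(cores_used))  # 1
```

Under this model, adding cores does nothing for a single flow; only a second flow with a different key has a chance of landing elsewhere.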

I have a TAC case open for a customer at the moment, and they get, if lucky, maybe 20% of the available bandwidth through the VPN tunnel (the other side is a FortiGate). Everything was verified on the other end, Fortinet TAC did a bunch of checks, and TAC asked us on the CP side to change the MSS value.

I honestly have no idea where the TAC engineer got the information that 10-20% is the most you would get through the VPN; I have a hard time believing that. I thought there was a way to change MSS values per VPN community, but that does not seem to be the case.

Things CP TAC has suggested so far, all of which we did:

- Install the latest Jumbo Hotfix for R81.10 (though in all fairness, that is a suggestion no matter the problem)

- Cluster failover

- Try disabling SecureXL

- vpn accel off for that specific tunnel

Best,

Andy

iked being multithreaded in R81.20 should help performance a bit.

However, VPN traffic not involving other blades will still be constrained to what a single SND core can handle (per flow).

Generally, if outright speed is what you're after, you may need to adjust some settings; refer to:

https://support.checkpoint.com/results/sk/sk73980

With that said I see you've tried different encryption methods without success. Do you see individual CPU/cores peaking?

CCSM R77/R80/ELITE

That was the first thing the initial engineer asked us to do, and it did not change anything; we saw the exact same issue. I'm not saying it isn't successful for others, but it was not for us.

Best,

Andy

Per @PhoneBoy above, were your tests also running a single flow, or multiple threads?

CCSM R77/R80/ELITE

Multiple.

Best,

Andy

Noted. In that case, the issue is different from the one described by @Juergen_Blumens here.

CCSM R77/R80/ELITE

True...every case is different 🙂

Best,

Andy

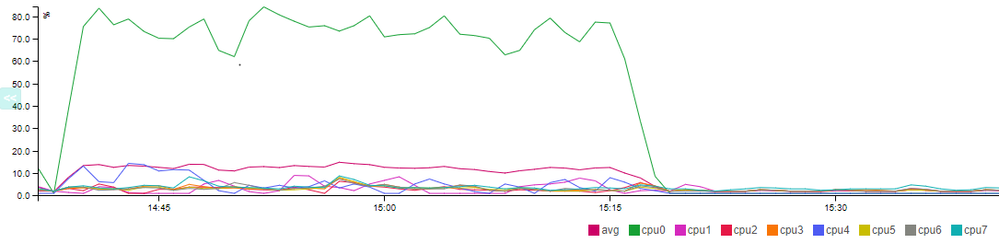

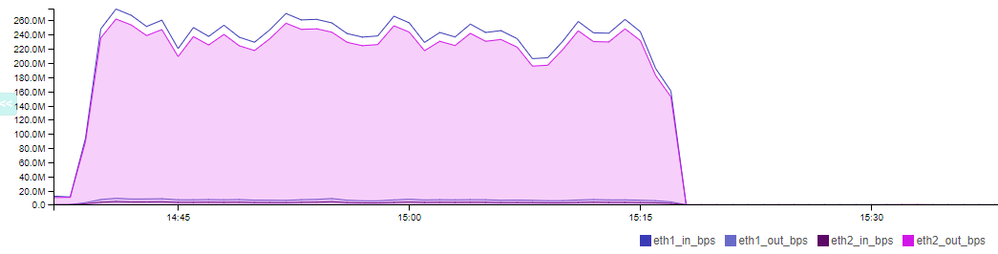

This is the CPU load and the Bandwidth.

Please see this lengthy thread which hashes out the performance of the 3800 for VPNs:

Check Point CPAP-SG3800 and expected performance l...

You are almost certainly saturating a single SND core with your fully accelerated VPN traffic, and it simply cannot go any faster. Unfortunately, the 3800 uses an ultra-low-voltage processor architecture whose individual cores are at least 2-3 times slower than Xeon cores. Intel tries to make up for this by having more cores available (8 in your case), which doesn't help in your situation. I did make some rather unorthodox "last ditch" recommendations in the prior thread that may help you; check them out.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Thanks for your reply and the reference to the other thread. We also tried with a 6200 and did not get more bandwidth. Is the architecture comparable to the 3800?

Yes, comparable in that multiple parallel TCP or UDP connections are needed for best results.

Recent versions have introduced technologies such as HyperFlow to contend with similar non-VPN scenarios (for systems with 8 or more CPU cores).

sk178070: HyperFlow in R81.20 and higher
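
As a rough back-of-the-envelope check of the "multiple parallel connections" point, the sketch below estimates aggregate throughput when each flow is capped by one core. The 300 Mbit/s per-flow ceiling and the 1 Gbit/s link come from the original post; the assumption that every additional flow lands on a different, otherwise idle core is mine and will not always hold in practice.

```python
import math

def aggregate_mbps(n_flows: int, per_flow_cap_mbps: float, link_mbps: float) -> float:
    """Best-case total throughput: each flow gets its own core, capped by the link."""
    return min(n_flows * per_flow_cap_mbps, link_mbps)

def flows_needed(per_flow_cap_mbps: float, link_mbps: float) -> int:
    """Smallest number of parallel flows that could fill the link in this model."""
    return math.ceil(link_mbps / per_flow_cap_mbps)

print(aggregate_mbps(1, 300, 1000))  # 300
print(aggregate_mbps(2, 300, 1000))  # 600
print(flows_needed(300, 1000))       # 4
```

The two-flow result matches the doubling reported when a second tunnel was added. In a test tool such as iperf, this corresponds to running several parallel streams rather than one.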

CCSM R77/R80/ELITE

I don't know the precise CPU type in the 6200, but the 3800 is rated for 2.75 Gbps of VPN throughput while the 6200 is rated for 2.57 Gbps, so I'd say they are comparable. I'm assuming these numbers are for all available cores being used simultaneously for VPN, not just one.

As I mentioned earlier, the graphs show that VPN traffic is fully saturating a single SND core, and there is no way that I know of to spread the traffic of a single tunnel across multiple SND cores. HyperFlow does not help with VPN traffic at this time, and neither does Lightspeed. Multi-core VPN only applies on Firewall Worker/Instance cores.

One non-intuitive thing to try: set 3DES for IPsec/Phase 2 and measure performance, then set IPsec/Phase 2 to AES-128 and measure again. The AES-128 speed should be at least double that of 3DES; if it is not, you are bumping up against some limitation other than firewall CPU.
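
The decision rule in that test can be written down as a small Python sketch. The 2x threshold is taken from the post above; the function name, the return labels, and the sample measurements are hypothetical and only there to show how the two readings would be compared.

```python
def interpret_cipher_test(des3_mbps: float, aes128_mbps: float,
                          min_ratio: float = 2.0) -> str:
    """Classify the bottleneck from two throughput measurements (illustrative)."""
    if des3_mbps <= 0:
        raise ValueError("3DES throughput must be positive")
    ratio = aes128_mbps / des3_mbps
    # AES-128 at least twice as fast: cipher cost dominates, so the CPU is the limit.
    if ratio >= min_ratio:
        return "cpu-bound"
    # Little difference between ciphers: something other than crypto is the limit.
    return "other-limit"

print(interpret_cipher_test(100, 300))  # cpu-bound
print(interpret_cipher_test(295, 300))  # other-limit
```

Since the original poster saw the same 300 Mbit/s with encryption set to none, the second outcome would not be surprising here.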

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

The 3800 uses an Atom C3758 (8c8t, 3.125 W/c), while the 6200 uses a Pentium G5400 (2c4t, 29 W/c). I would expect a 6200 to perform significantly better with a single traffic flow, since each core has nearly ten times the power budget to work with.

I would agree, which makes me think that he is bumping against some other kind of performance limitation, not necessarily on the firewall itself. The 3DES/AES-128 test I mentioned in a prior post should help reveal what is going on.
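
One candidate for such a non-firewall limitation is the TCP window, which caps a single connection at roughly window / RTT. The sketch below works through the numbers from the original post, assuming the quoted 12 ms is the round-trip time (the post does not say whether it is one-way or round-trip). The function names are mine.

```python
def bdp_bytes(rate_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to fill the path."""
    return rate_mbps * 1000 * rtt_ms / 8

def max_throughput_mbps(window_bytes: float, rtt_ms: float) -> float:
    """Upper bound for one TCP connection limited by its window."""
    return window_bytes * 8 / (rtt_ms * 1000)

print(bdp_bytes(1000, 12))               # 1500000.0 bytes to fill 1 Gbit/s
print(max_throughput_mbps(450_000, 12))  # 300.0 Mbit/s with a 450 kB window
```

So an effective window of about 450 kB would produce the same 300 Mbit/s ceiling. The direct test without firewalls reached 1 Gbit/s, which argues against the end hosts' windows being the limit, provided the path latency was the same in both tests.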

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

A packet capture showing TCP window sizes and MSS (and derived MTU) values would also be helpful, namely to confirm that fragmentation has been dealt with, for example by enabling MSS clamping.
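
For a sense of which MSS avoids fragmentation, here is a small Python sketch of ESP tunnel-mode overhead. It assumes IPv4, AES in CBC mode (16-byte IV and block size), a 12-byte ICV as with HMAC-SHA1-96, a 1500-byte path MTU, and no TCP options. The thread does not state the integrity algorithm or whether NAT-T is in use, so treat the results as examples rather than the values for this tunnel.

```python
def max_tcp_mss(path_mtu: int = 1500, icv_len: int = 12, nat_t: bool = False,
                block: int = 16, iv_len: int = 16) -> int:
    """Largest TCP MSS whose ESP-encapsulated packet still fits the path MTU."""
    outer_ip = 20                   # outer IPv4 header
    udp_encap = 8 if nat_t else 0   # UDP header when NAT-T is used
    esp_header = 8                  # SPI + sequence number
    room = path_mtu - outer_ip - udp_encap - esp_header - iv_len - icv_len
    ciphertext = (room // block) * block  # encrypted part is whole cipher blocks
    inner_packet = ciphertext - 2         # minus pad-length and next-header bytes
    return inner_packet - 20 - 20         # minus inner IPv4 and TCP headers

print(max_tcp_mss())            # 1398
print(max_tcp_mss(nat_t=True))  # 1382
```

If a capture shows an MSS above the computed value for the tunnel's actual parameters, the encapsulated packets would exceed the path MTU and fragmentation is a plausible contributor.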

CCSM R77/R80/ELITE

Even though the 3800 model has 8 cores which is the minimum required to support Hyperflow, I find it interesting that sk178070 was just updated to state that the 3800 model does *not* support Hyperflow.

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

Yes, the 3800 does not support Hyperflow due to hardware limitations (not specific to the number of cores available).
