
High cpu utilization VSX

Hello:

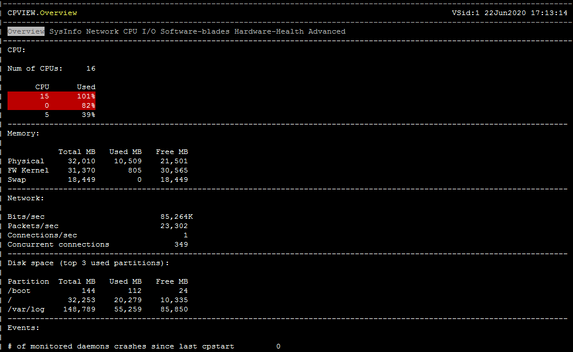

We are seeing high CPU usage on our virtual firewalls. How can I find out what is causing it?

Running "top" on the firewall shows that PID 14995 (COMMAND fwk5_dev) has a %CPU above 100.

Our SNMPv3 monitoring raises a High CPU alert. Checking it shows that CPU #768 is the one with this behavior (as reported by the Spectrum tool).

===================================================================

top - 17:15:10 up 17 days, 5:08, 1 user, load average: 4.17, 4.55, 3.99

Tasks: 502 total, 4 running, 498 sleeping, 0 stopped, 0 zombie

Cpu(s): 11.3%us, 2.9%sy, 0.0%ni, 79.2%id, 0.0%wa, 0.1%hi, 6.4%si, 0.0%st

Mem: 32779220k total, 32229544k used, 549676k free, 903992k buffers

Swap: 18892344k total, 260k used, 18892084k free, 20568592k cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

14995 admin 0 -20 860m 250m 64m S 112 0.8 267:59.91 fwk5_dev

15117 admin 0 -20 1241m 633m 85m S 37 2.0 4296:42 fwk3_dev

18274 admin 15 0 735m 204m 40m S 35 0.6 36:59.04 fw_full

14902 admin 0 -20 1105m 496m 84m S 15 1.6 3562:06 fwk13_dev

19721 admin 15 0 0 0 0 R 15 0.0 508:07.98 cphwd_q_init_ke

===================================================================

I have 16 CPUs. How can I tell which one is the most heavily used?

How do I interpret the CPView output, which identifies two CPUs with high usage?

1 Solution

Accepted Solutions


Hi @raquinog,

Can you send some more screenshots:

# fw ctl affinity -l -a

# fwaccel stats -s

# top (press 1 to show cores)

More can be seen in the output of the CLI commands.

I think you do a lot of content inspection. So the PSLXL path will probably be very busy.

If this is the case you can adjust the affinity if necessary.

---

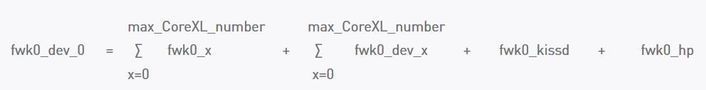

A small calculation sample for the utilization of process fwkX:

- fwk0_X -> fw instance thread that takes care of the packet processing

- fwk0_dev_X -> the thread that takes care of communication between fw instances and other CP daemons

- fwk0_kissd -> legacy Kernel Infrastructure (obsolete)

- fwk0_hp -> (high priority) cluster thread
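The naming scheme in the list above can be turned into a small classifier for the COMMAND column of top. This is only a sketch: the helper name and the role labels are made up for illustration, and it assumes the number right after "fwk" is the VS ID, as in the names seen in this thread (fwk5_dev, fwk5_2).

```python
import re

# Hypothetical helper, not a Check Point tool. Assumes names look like
# fwk<VSID>_<suffix>, e.g. fwk5_dev or fwk5_2.
_FWK_NAME = re.compile(r"^fwk(?P<vs>\d+)_(?P<rest>.+)$")

# Role labels paraphrase the list above.
ROLES = {
    "dev": "communication with other daemons",
    "kissd": "legacy kernel infrastructure",
    "hp": "high-priority cluster thread",
}


def classify_fwk_thread(name):
    """Return (vs_id, role) for an fwk thread name, or None for anything else."""
    match = _FWK_NAME.match(name)
    if match is None:
        return None
    vs_id = int(match.group("vs"))
    rest = match.group("rest")
    if rest.isdigit():
        # Purely numeric suffix: a packet-processing fw instance thread.
        return vs_id, "packet processing (instance %s)" % rest
    base = rest.split("_")[0]  # "dev_0" -> "dev"
    return vs_id, ROLES.get(base, "unknown")


for command in ("fwk5_dev", "fwk5_2", "fw_full"):
    print(command, "->", classify_fwk_thread(command))
```

Anything that does not start with "fwk" followed by a number (fw_full, for example) comes back as None, so it can be filtered out before summing CPU per VS.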

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips

21 Replies


Use:

ps -aux

---

I have a similar issue.

I have a pair of 15600 appliances (32 total cores, with the dynamic dispatcher on) running 6 Virtual Systems, three of which are busy. One of these (VS1) has over 250,000 concurrent connections (it has peaked at around 350,000), and I've allocated 8 cores to it. This VS runs FW/IPS/AV/ABOT. The other two (VS2 and VS3) are high volume with few connections: they currently hit about 1Gbps with about 40,000 concurrent connections (normally they would be much busier). I've allocated 6 cores to each. These run FW/IPS/AV/ABOT/URL Filtering/Application Control. Using top I can see that the fwk process for each of these VSs runs at around 150%, and clearly other processes are getting hit. The question is what is causing this and why, considering these devices should be able to handle much more load.

The gateways are running R80.20 with JHFA183.

The current core breakdown is this:

VS1 = 8 cores

VS2 = 6 cores

VS3 = 6 cores

VS4 = 2 cores

VS5 = 1 core

VS6 = 1 core

I'm going to assume 6 for SND multi-queue

When everything is running on a single node we have seen latency issues and packet drops on VS2 and VS3.

Using our monitoring tools I can only see all the CPU cores, which show about 50-55% usage per core (of course there are spikes). I would like to know a couple of things.

1. Can we over subscribe core allocation?

2. How on earth can we actually monitor the correct number of cores per VS using an SNMP tool such as SolarWinds or PRTG? I've looked at the documentation and it pretty much does not work for CPU monitoring (yes, I can get everything else correctly by polling the VSs themselves).

---

1: Yes, you can oversubscribe. I would also run VSLS so you can use both boxes.

If you really want, you can run both boxes at 100% load 🙂

I wouldn't recommend it, but it's possible. It comes down to how much pain you want to have during a hardware failure or during upgrades.

Just a comment regarding the 150% load: that means it's using 1.5 instances.

If you allocate 8 instances, your 100% load is 800%.

2: Monitoring the actual load of a VS isn't possible; it gives incorrect values.

The CPU values given by SNMP are NOT per VS, but for the box or for a specific core.

I had a long case with Check Point R&D regarding this; not sure if/when it's going to be fixed.
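The 150% remark above is plain arithmetic: top reports the fwk process relative to one core, so the figure has to be divided by the number of instances allocated to that VS. A minimal sketch (the function name is made up for illustration):

```python
def vs_utilization(top_cpu_percent, instances):
    """Fraction of a VS's allocated instances in use, given top's %CPU for its fwk process."""
    if instances <= 0:
        raise ValueError("instances must be positive")
    # 100% in top equals one fully busy instance.
    return top_cpu_percent / (instances * 100.0)


# 150% in top on a VS with 6 instances, and on one with 8 instances.
print(f"{vs_utilization(150, 6):.1%}")
print(f"{vs_utilization(150, 8):.1%}")
```

With 6 instances, 150% works out to a quarter of what the VS was given, so the fwk process itself is not saturated at that point.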

Regards,

Magnus

https://www.youtube.com/c/MagnusHolmberg-NetSec

---

1: Yes you can oversubscribe, I would also run VSLS so you can use both boxes.

- Great, that's what I suspected. Clearly oversubscription needs to be sensible. One of my biggest issues is getting SolarWinds/PRTG to report the actual CPU usage per VS, which does not work (it just picks up 32 cores per VS).

Just a comment regarding the 150% load, that means its using 1.5 Instances.

If you allocate 8 instances meaning your 100% load is 800%

- Exactly. So when I hit 150% on VS2 (for example, which has 6 cores), I'm not even using 40% of the total cores available, so I cannot see why I would hit latency or packet loss issues.

2: monitoring VS actual load isn´t possible, it gives incorrect values.

The values given by the SNMP for CPU is NOT per VS, but for the box or for a specific core.

Had a long case with check point R&D regarding this, not sure if/when its going to be fixed.

- Thank you for feeling my pain! Check Point, please listen and help resolve this. I appreciate this may be a challenge considering dynamic dispatching. I can only see this working if manual affinity were used, since that way the same cores would always be used.

Do we know if there has been an improvement in this area for R81?

---

By the way, are you using Multi-Queue on your interfaces, so that it's not the cores assigned to the NICs that are going bananas?

I have had that issue before, when I didn't realize that a box we took over didn't have NICs supporting Multi-Queue (open server).

As we didn't trust the SNMP monitoring of the CPUs, we first noticed it when the delay went up and affected traffic.

On a 10G NIC without Multi-Queue, the peak would be around 3.5 to 4 Gbit in total.

---

We are running multi-queue, but the processors seem OK for this:

Mgmt: CPU 0

Sync: CPU 16

eth1-07: CPU 17

eth1-08: CPU 17

VS_0 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_1 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_2 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_3 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_4 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_5 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_6 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_7 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_8 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_9 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

VS_10 fwk: CPU 2 3 4 5 6 7 8 9 10 11 12 13 14 15 18 19 20 21 22 23 24 25 26 27 28 29 30 31

Interface eth3-01: has multi queue enabled

Interface eth3-02: has multi queue enabled
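For anyone who wants to check which cores an affinity listing like the one above gives to interfaces versus fwk workers, here is a rough sketch. It assumes the text has the "name: CPU n n n" shape shown above (presumably from fw ctl affinity -l -a); the function name and the shortened sample are made up for illustration.

```python
def split_cores(affinity_text):
    """Return (interface_cpus, fwk_cpus) from lines shaped like 'name: CPU 0 1 2'."""
    interface_cpus, fwk_cpus = set(), set()
    for line in affinity_text.splitlines():
        name, sep, value = line.partition(": CPU ")
        if not sep:
            # e.g. "Interface eth3-01: has multi queue enabled" lists no CPUs
            continue
        cpus = {int(token) for token in value.split()}
        if name.strip().endswith("fwk"):
            fwk_cpus |= cpus
        else:
            interface_cpus |= cpus
    return interface_cpus, fwk_cpus


# Shortened sample in the same shape as the output above.
SAMPLE = """Mgmt: CPU 0
Sync: CPU 16
eth1-07: CPU 17
VS_0 fwk: CPU 2 3 4
VS_1 fwk: CPU 2 3 4
Interface eth3-01: has multi queue enabled"""

interfaces, workers = split_cores(SAMPLE)
print("interface cores:", sorted(interfaces))
print("fwk cores:", sorted(workers))
```

Multi-queue interfaces do not list their CPUs in this output, so the interface set can undercount the SND cores; the cpmq output covers those.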

# cpmq get -v

Active ixgbe interfaces:

eth3-01 [On]

eth3-02 [On]

Active igb interfaces:

Mgmt [Off]

Sync [Off]

eth1-04 [Off]

eth1-07 [Off]

eth1-08 [Off]

eth2-08 [Off]

The rx_num for ixgbe is: 4 (default)

multi-queue affinity for ixgbe interfaces:

CPU | TX | Vector | RX Bytes

-------------------------------------------------------------

0 | 0 | eth3-01-TxRx-0 (83) | 4630323900886

| | eth3-02-TxRx-0 (131) |

1 | 2 | eth3-01-TxRx-2 (99) | 4837081863067

| | eth3-02-TxRx-2 (147) |

2 | 4 | |

3 | 6 | |

4 | 8 | |

5 | 10 | |

6 | 12 | |

7 | 14 | |

8 | 16 | |

9 | 18 | |

10 | 20 | |

11 | 22 | |

12 | 24 | |

13 | 26 | |

14 | 28 | |

15 | 30 | |

16 | 1 | eth3-01-TxRx-1 (91) | 4964000363908

| | eth3-02-TxRx-1 (139) |

17 | 3 | eth3-01-TxRx-3 (107) | 4768026041847

| | eth3-02-TxRx-3 (155) |

18 | 5 | |

19 | 7 | |

20 | 9 | |

21 | 11 | |

22 | 13 | |

23 | 15 | |

24 | 17 | |

25 | 19 | |

26 | 21 | |

27 | 23 | |

28 | 25 | |

29 | 27 | |

30 | 29 | |

31 | 31 | |

TAC joined a session to look at the optimization, which they said was good, but something is not adding up.

I went to the cpsizeme tool on the Check Point site and said I wanted 5Gbps and was running 10 VSs with Gen V TP (excluding TE/TX). It basically said I should have 95% room for growth.

I'm running probably 3Gbps with the following blades turned on: fw urlf av appi ips anti_bot mon. My overall CPU usage when everything runs on one node is above 60%. I've now split the load across the 15600s to reduce the impact on service, but the numbers are still not adding up.

Here is the fwaccel output from all the VSs:

Node1:

VS5:

# fwaccel stats -s

Accelerated conns/Total conns : 16/228876 (0%)

Accelerated pkts/Total pkts : 59546699579/60324119190 (98%)

F2Fed pkts/Total pkts : 777419611/60324119190 (1%)

F2V pkts/Total pkts : 1465272077/60324119190 (2%)

CPASXL pkts/Total pkts : 0/60324119190 (0%)

PSLXL pkts/Total pkts : 59456751619/60324119190 (98%)

CPAS inline pkts/Total pkts : 0/60324119190 (0%)

PSL inline pkts/Total pkts : 0/60324119190 (0%)

QOS inbound pkts/Total pkts : 0/60324119190 (0%)

QOS outbound pkts/Total pkts : 0/60324119190 (0%)

Corrected pkts/Total pkts : 0/60324119190 (0%)

# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 2-15+ | 29806 | 39089

1 | Yes | 2-15+ | 31863 | 38537

2 | Yes | 2-15+ | 21908 | 37993

3 | Yes | 2-15+ | 32414 | 38856

4 | Yes | 2-15+ | 31566 | 37112

5 | Yes | 2-15+ | 31386 | 39640

6 | Yes | 2-15+ | 31160 | 38202

7 | Yes | 2-15+ | 30519 | 38076

VS6:

# fwaccel stats -s

Accelerated conns/Total conns : 1463/12117 (12%)

Accelerated pkts/Total pkts : 8982729259/9018937348 (99%)

F2Fed pkts/Total pkts : 36208089/9018937348 (0%)

F2V pkts/Total pkts : 87959368/9018937348 (0%)

CPASXL pkts/Total pkts : 0/9018937348 (0%)

PSLXL pkts/Total pkts : 8836491935/9018937348 (97%)

CPAS inline pkts/Total pkts : 0/9018937348 (0%)

PSL inline pkts/Total pkts : 0/9018937348 (0%)

QOS inbound pkts/Total pkts : 0/9018937348 (0%)

QOS outbound pkts/Total pkts : 0/9018937348 (0%)

Corrected pkts/Total pkts : 0/9018937348 (0%)

# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 2-15+ | 6669 | 9765

1 | Yes | 2-15+ | 5513 | 9201

VS7:

# fwaccel stats -s

Accelerated conns/Total conns : 0/1410 (0%)

Accelerated pkts/Total pkts : 2045822488/2049716107 (99%)

F2Fed pkts/Total pkts : 3893619/2049716107 (0%)

F2V pkts/Total pkts : 5894107/2049716107 (0%)

CPASXL pkts/Total pkts : 0/2049716107 (0%)

PSLXL pkts/Total pkts : 2042983760/2049716107 (99%)

CPAS inline pkts/Total pkts : 0/2049716107 (0%)

PSL inline pkts/Total pkts : 0/2049716107 (0%)

QOS inbound pkts/Total pkts : 0/2049716107 (0%)

QOS outbound pkts/Total pkts : 0/2049716107 (0%)

Corrected pkts/Total pkts : 0/2049716107 (0%)

# fw ctl multik stat

CoreXL is disabled

Node2:

VS8 (on this one I can see a high F2F percentage; it has site-to-site VPNs):

# fwaccel stats -s

Accelerated conns/Total conns : 0/0 (0%)

Accelerated pkts/Total pkts : 6542264/38410121 (17%)

F2Fed pkts/Total pkts : 31867857/38410121 (82%)

F2V pkts/Total pkts : 591/38410121 (0%)

CPASXL pkts/Total pkts : 0/38410121 (0%)

PSLXL pkts/Total pkts : 1138908/38410121 (2%)

CPAS inline pkts/Total pkts : 0/38410121 (0%)

PSL inline pkts/Total pkts : 0/38410121 (0%)

QOS inbound pkts/Total pkts : 0/38410121 (0%)

QOS outbound pkts/Total pkts : 0/38410121 (0%)

Corrected pkts/Total pkts : 0/38410121 (0%)

# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 2-15+ | 117 | 5210

1 | Yes | 2-15+ | 111 | 6037

VS9:

# fwaccel stats -s

Accelerated conns/Total conns : 22/33167 (0%)

Accelerated pkts/Total pkts : 6362020813/6397033174 (99%)

F2Fed pkts/Total pkts : 35012361/6397033174 (0%)

F2V pkts/Total pkts : 219158034/6397033174 (3%)

CPASXL pkts/Total pkts : 0/6397033174 (0%)

PSLXL pkts/Total pkts : 6122277162/6397033174 (95%)

CPAS inline pkts/Total pkts : 0/6397033174 (0%)

PSL inline pkts/Total pkts : 0/6397033174 (0%)

QOS inbound pkts/Total pkts : 0/6397033174 (0%)

QOS outbound pkts/Total pkts : 0/6397033174 (0%)

Corrected pkts/Total pkts : 0/6397033174 (0%)

# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 2-15+ | 3816 | 13537

1 | Yes | 2-15+ | 3993 | 7176

2 | Yes | 2-15+ | 3742 | 8666

3 | Yes | 2-15+ | 4201 | 7778

4 | Yes | 2-15+ | 3947 | 7443

5 | Yes | 2-15+ | 3931 | 7500

6 | Yes | 2-15+ | 3949 | 7052

7 | Yes | 2-15+ | 3964 | 6830

VS10:

# fwaccel stats -s

Accelerated conns/Total conns : 252/31621 (0%)

Accelerated pkts/Total pkts : 6743652274/6772265207 (99%)

F2Fed pkts/Total pkts : 28612933/6772265207 (0%)

F2V pkts/Total pkts : 273241968/6772265207 (4%)

CPASXL pkts/Total pkts : 0/6772265207 (0%)

PSLXL pkts/Total pkts : 6711806652/6772265207 (99%)

CPAS inline pkts/Total pkts : 0/6772265207 (0%)

PSL inline pkts/Total pkts : 0/6772265207 (0%)

QOS inbound pkts/Total pkts : 0/6772265207 (0%)

QOS outbound pkts/Total pkts : 0/6772265207 (0%)

Corrected pkts/Total pkts : 0/6772265207 (0%)

# fw ctl multik stat

ID | Active | CPU | Connections | Peak

----------------------------------------------

0 | Yes | 2-15+ | 4326 | 5910

1 | Yes | 2-15+ | 4488 | 6212

2 | Yes | 2-15+ | 4372 | 7937

3 | Yes | 2-15+ | 4399 | 6425

4 | Yes | 2-15+ | 4422 | 6094

5 | Yes | 2-15+ | 4379 | 5975

6 | Yes | 2-15+ | 4269 | 6043

7 | Yes | 2-15+ | 4419 | 5877
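One quick thing that can be read from the fw ctl multik stat tables above is how evenly connections are spread across the instances of a VS. A sketch, using the VS5 table from Node1 as sample data (the function names are made up, and the "busiest versus mean" ratio is just one possible measure):

```python
def parse_multik_stat(text):
    """Return {instance_id: (connections, peak)} from 'ID | Active | CPU | Connections | Peak' rows."""
    rows = {}
    for line in text.splitlines():
        cells = [cell.strip() for cell in line.split("|")]
        # Header and separator lines are skipped: their first cell is not a number.
        if len(cells) == 5 and cells[0].isdigit():
            rows[int(cells[0])] = (int(cells[3]), int(cells[4]))
    return rows


def imbalance(rows):
    """Ratio of the busiest instance's connection count to the mean (1.0 = perfectly even)."""
    counts = [connections for connections, _peak in rows.values()]
    return max(counts) / (sum(counts) / len(counts))


VS5 = """ID | Active | CPU | Connections | Peak
----------------------------------------------
0 | Yes | 2-15+ | 29806 | 39089
1 | Yes | 2-15+ | 31863 | 38537
2 | Yes | 2-15+ | 21908 | 37993
3 | Yes | 2-15+ | 32414 | 38856
4 | Yes | 2-15+ | 31566 | 37112
5 | Yes | 2-15+ | 31386 | 39640
6 | Yes | 2-15+ | 31160 | 38202
7 | Yes | 2-15+ | 30519 | 38076"""

rows = parse_multik_stat(VS5)
print(len(rows), "instances, imbalance %.2f" % imbalance(rows))
```

A ratio close to 1 suggests the dispatcher is spreading connections evenly, which points away from a single overloaded instance. Connection counts say nothing about how heavy each connection is, though.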

---

VS8 (on this one I can see a high F2F percentage; it has site-to-site VPNs):

# fwaccel stats -s

Accelerated conns/Total conns : 0/0 (0%)

Accelerated pkts/Total pkts : 6542264/38410121 (17%)

F2Fed pkts/Total pkts : 31867857/38410121 (82%)

That F2F percentage is way too high, what other blades do you have enabled in that VS other than IPSec VPN? vsenv 8;enabled_blades

Any chance you are using SHA-384 for your VPNs? Depending on your code version doing so can keep VPN traffic from getting accelerated by SecureXL and force it F2F. Also make sure you haven't accidentally enabled Wire Mode unless you need it for route-based VPNs. See here: https://community.checkpoint.com/t5/General-Topics/SecureXL-100-F2Fed-80-30/m-p/95704
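To compare the path split across several VSs without eyeballing each listing, the fwaccel stats -s lines can be parsed into fractions. This is only a sketch that assumes the "label : n/d (p%)" shape quoted above; the function name is made up.

```python
import re

# Matches e.g. "F2Fed pkts/Total pkts : 31867857/38410121 (82%)"
_ROW = re.compile(r"^(?P<label>.+?)\s*:\s*(?P<num>\d+)/(?P<den>\d+)")


def parse_fwaccel_stats(text):
    """Return {label: fraction} for every 'label : n/d' line; 0/0 is reported as 0.0."""
    shares = {}
    for line in text.splitlines():
        match = _ROW.match(line.strip())
        if match:
            den = int(match.group("den"))
            shares[match.group("label")] = int(match.group("num")) / den if den else 0.0
    return shares


VS8 = """Accelerated conns/Total conns : 0/0 (0%)
Accelerated pkts/Total pkts : 6542264/38410121 (17%)
F2Fed pkts/Total pkts : 31867857/38410121 (82%)"""

shares = parse_fwaccel_stats(VS8)
print("F2F share: %.1f%%" % (100 * shares["F2Fed pkts/Total pkts"]))
```

The fractions are recomputed from the raw counters, so they can differ by a fraction of a percent from the whole-number percentages that fwaccel prints.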

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

---

VS5:

fw av ips anti_bot mon

VS6:

fw urlf av appi ips anti_bot content_awareness mon

VS7:

fw vpn urlf av appi ips anti_bot mon vpn (Very strange output lists vpn twice?)

VS8:

fw vpn urlf av appi ips anti_bot mon vpn (Very strange output lists vpn twice?)

VS9:

fw urlf av appi ips anti_bot mon

VS10:

fw urlf av appi ips anti_bot mon

- Wire mode is not enabled (I was considering enabling it for internal site-to-site VPNs)

- We're not using SHA-384, but we are using SHA-256 and DH group 19 for some of the VPNs.

---

For VS8 try this from expert mode, note that this will temporarily disable Threat Prevention, use at your own risk:

vsenv 8

ips off

fw amw unload

fwaccel stats -r

(wait 2 minutes)

fwaccel stats -s

ips on

fw amw fetch local

Did the F2F % temporarily go way down as a result of this test? If so you have some tuning to do in Threat Prevention.

---

8]# fwaccel stats -s

Accelerated conns/Total conns : 1/13 (7%)

Accelerated pkts/Total pkts : 30060710/180653501 (16%)

F2Fed pkts/Total pkts : 150592791/180653501 (83%)

F2V pkts/Total pkts : 3380/180653501 (0%)

CPASXL pkts/Total pkts : 0/180653501 (0%)

PSLXL pkts/Total pkts : 22900827/180653501 (12%)

CPAS inline pkts/Total pkts : 0/180653501 (0%)

PSL inline pkts/Total pkts : 0/180653501 (0%)

QOS inbound pkts/Total pkts : 0/180653501 (0%)

QOS outbound pkts/Total pkts : 0/180653501 (0%)

Corrected pkts/Total pkts : 0/180653501 (0%)

8]# ips off

IPS is disabled

Please note that for the configuration to apply for connections from existing templates, you have to run this command with -n flag which deletes existing templates.

Without '-n', it will fully take effect in a few minutes.

8]# fw amw unload

Unloading Threat Prevention policy

Unloading Threat Prevention policy succeeded

8]# fwaccel stats -r

(Waited about 4 mins)

8]# fwaccel stats -s

Accelerated conns/Total conns : 0/1 (0%)

Accelerated pkts/Total pkts : 792/11953 (6%)

F2Fed pkts/Total pkts : 11161/11953 (93%)

F2V pkts/Total pkts : 2/11953 (0%)

CPASXL pkts/Total pkts : 0/11953 (0%)

PSLXL pkts/Total pkts : 772/11953 (6%)

CPAS inline pkts/Total pkts : 0/11953 (0%)

PSL inline pkts/Total pkts : 0/11953 (0%)

QOS inbound pkts/Total pkts : 0/11953 (0%)

QOS outbound pkts/Total pkts : 0/11953 (0%)

Corrected pkts/Total pkts : 0/11953 (0%)
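Putting the two fwaccel stats -s runs above side by side, the comparison the test asks for can be sketched like this. The counters are copied from the outputs above; treating "way down" as "at least halved" is an arbitrary assumption for illustration.

```python
def f2f_share(f2f_pkts, total_pkts):
    """Fraction of packets that took the F2F (slow) path."""
    return f2f_pkts / total_pkts


# Counters from the two runs above.
before = f2f_share(150592791, 180653501)  # Threat Prevention loaded
after = f2f_share(11161, 11953)           # IPS off, Threat Prevention policy unloaded

# Assumption: "went way down" is read as "at least halved".
threat_prevention_is_cause = after < before / 2

print("before: %.1f%%  after: %.1f%%" % (100 * before, 100 * after))
print("Threat Prevention driving F2F:", threat_prevention_is_cause)
```

The second sample covers only 11,953 packets, so its percentage is much noisier than the first, but it clearly did not drop.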

---

Yikes, definitely not Threat Prevention driving the high F2F.

Is there any Remote Access VPN traffic on this gateway? That traffic will have to go F2F much of the time so it can be handled in process space by vpnd, for Visitor Mode handling and such. There were some recent optimizations for Remote Access VPN introduced which are not present in your code level, see here:

sk168297: Large scale support in VPN Remote Access Visitor-Mode

sk167506: High number of Visitor Mode users in CPView or in output of "vpn show_tcpt" command

Beyond that you'll need to engage with TAC to have them run a debug to determine why so much traffic is winding up F2F. That debug can be a bit dangerous and is best pursued with TAC.

---

We have no client-to-site VPNs on this, but we certainly do have site-to-site VPNs to non-managed gateways.

Thanks Tim. I already have a TAC case raised, and cpinfos for all the VSs were sent over on Friday (no response yet, but I suspect R&D are going to get involved).

---

OK great, please post what they eventually find out. Hopefully this thread will help them rule out a few things right off the bat.

---

Will do Tim

---

Hi Magnus,

I have selected "Distribute all Virtual Systems so that each cluster member is equally loaded", but one of the CPUs is still at 100% utilization.

Cluster Mode: Virtual System Load Sharing (Active Up)

Please suggest what action should be taken next.

Thanks

---

According to Check Point TAC: after running "top", press Shift+H to show individual threads and get the true CPU usage.

---

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

20100 admin 18 0 276m 171m 23m R 99 0.3 6994:27 rad_resp_slow_1

20099 admin 18 0 276m 171m 23m R 99 0.3 6991:47 rad_resp_slow_0

20101 admin 18 0 276m 171m 23m R 98 0.3 6770:31 rad_resp_slow_2

326 admin 0 -20 5141m 4.4g 547m R 58 7.0 4574:45 fwk5_2

325 admin 0 -20 5141m 4.4g 547m S 55 7.0 4161:26 fwk5_1

329 admin 0 -20 5141m 4.4g 547m S 54 7.0 4348:47 fwk5_5

328 admin 0 -20 5141m 4.4g 547m R 53 7.0 4300:13 fwk5_4

331 admin 0 -20 5141m 4.4g 547m R 53 7.0 3985:35 fwk5_7

330 admin 0 -20 5141m 4.4g 547m R 52 7.0 4018:28 fwk5_6

327 admin 0 -20 5141m 4.4g 547m R 51 7.0 4433:13 fwk5_3

324 admin 0 -20 5141m 4.4g 547m S 49 7.0 3919:58 fwk5_0

31349 admin 0 -20 1093m 575m 196m S 17 0.9 1295:48 fwk7_0

32255 admin 0 -20 1505m 926m 249m S 15 1.4 3326:08 fwk6_0

32256 admin 0 -20 1505m 926m 249m S 14 1.4 3458:54 fwk6_1
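With the thread view above, the per-VS load can be recovered by summing the %CPU of the fwkN_M threads for each VS. A rough sketch, assuming the standard 12-column top layout shown above and that N is the VS ID (the function name is made up):

```python
import re
from collections import defaultdict

# fwk<VSID>_<instance>, e.g. fwk5_2
_FWK_THREAD = re.compile(r"^fwk(\d+)_\d+$")


def cpu_per_vs(top_text):
    """Sum the %CPU column (9th field) of fwkN_M threads per VS ID N."""
    totals = defaultdict(float)
    for line in top_text.splitlines():
        fields = line.split()
        if len(fields) < 12:
            continue
        match = _FWK_THREAD.match(fields[11])  # COMMAND is the 12th field
        if match:
            totals[int(match.group(1))] += float(fields[8])
    return dict(totals)


TOP = """PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
20100 admin 18 0 276m 171m 23m R 99 0.3 6994:27 rad_resp_slow_1
326 admin 0 -20 5141m 4.4g 547m R 58 7.0 4574:45 fwk5_2
325 admin 0 -20 5141m 4.4g 547m S 55 7.0 4161:26 fwk5_1
329 admin 0 -20 5141m 4.4g 547m S 54 7.0 4348:47 fwk5_5
328 admin 0 -20 5141m 4.4g 547m R 53 7.0 4300:13 fwk5_4
331 admin 0 -20 5141m 4.4g 547m R 53 7.0 3985:35 fwk5_7
330 admin 0 -20 5141m 4.4g 547m R 52 7.0 4018:28 fwk5_6
327 admin 0 -20 5141m 4.4g 547m R 51 7.0 4433:13 fwk5_3
324 admin 0 -20 5141m 4.4g 547m S 49 7.0 3919:58 fwk5_0
31349 admin 0 -20 1093m 575m 196m S 17 0.9 1295:48 fwk7_0
32255 admin 0 -20 1505m 926m 249m S 15 1.4 3326:08 fwk6_0
32256 admin 0 -20 1505m 926m 249m S 14 1.4 3458:54 fwk6_1"""

for vs_id, total in sorted(cpu_per_vs(TOP).items()):
    print("VS%d: %.0f%%" % (vs_id, total))
```

Dividing each total by 100 times the number of instances of that VS gives its utilization. The rad_resp_slow threads are ignored here, but at close to 100% each they deserve a look of their own.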

---

Believe it or not, I'm still no closer to resolving my issue; in fact, TAC made it worse. I increased the SNDs from 4 to 6, so I have 26 worker cores. I was then advised to allocate 26 cores to each VS. My CPU utilisation shot up to nearly 80-85%.

I'm struggling to see how a 15600 in VSX mode with most of the NGTP blades enabled can ever reach 4Gbps, let alone the 10Gbps stated in the application sizing guide (with VSX and 10 VSs selected).

The logic I would apply is that 10Gbps should equal roughly 80% CPU utilisation (estimated). After all, why would a vendor size an appliance knowing it needs 100% CPU to reach the top bandwidth rate, which would then make the system unstable?

---

I also think Check Point should be updating their hardware to include SSL/hardware accelerator chips. We all know 80% or more of traffic is encrypted, which means HTTPS inspection is becoming more and more relevant. However, the throughput/price point leaves customers looking at other vendors that have this built in.

Food for thought guys.

---

Latest update on my issue:

TAC could not suggest how many fwk processes I should spawn per VS, so I made an educated guess from the top output. My CPU levels are now running healthier (note we are not using manual affinity).

We now have 6 SND cores and 26 worker cores allocated, according to cpview.

VS4 = 1 core

VS5 = 6 cores

VS6 = 2 cores

VS7 = 1 core

VS8 = 2 cores

VS9 = 8 cores

VS10 = 8 cores

so we have a slight oversubscription.
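For reference, the oversubscription in the allocation above works out as follows (a small sketch; the variable and function names are made up):

```python
# Instances per VS and worker core count, as listed above.
ALLOCATION = {"VS4": 1, "VS5": 6, "VS6": 2, "VS7": 1, "VS8": 2, "VS9": 8, "VS10": 8}
WORKER_CORES = 26


def oversubscription(allocation, worker_cores):
    """Return (instances requested, ratio of requested instances to worker cores)."""
    requested = sum(allocation.values())
    return requested, requested / worker_cores


requested, ratio = oversubscription(ALLOCATION, WORKER_CORES)
print("%d instances on %d worker cores (ratio %.2f)" % (requested, WORKER_CORES, ratio))
```

A ratio above 1 means more fwk instances than worker cores, so the instances can only all be busy at the same time by sharing cores.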

TAC are suggesting the below now which makes no sense.

"I went through some random perf reports and it looks like that most of the reports are filled with swapper or FWKs (the highly utilized one was fwk1 on the 31st March report).

Regarding swapper - The official description of the process is that swapper is executed when no other tasks are running on the CPU. In other words, swapper means that the CPU did nothing and ran to rest so we can

Now, as we see a Virtual Switch is highly utilized it looks like its happening due to Multiple Virtual System are connected to this Virtual Switch.

If traffic should pass from one Virtual System via Virtual Switch to another Virtual System, then 'wrp' jump takes place. Meaning - the traffic does not physically pass via the Virtual Switch. Instead, by design, traffic is forwarded (F2F) to the Check Point kernel.

As a result, in cases of a high amount of traffic, the 'fwk_dev' process that belongs to the relevant Virtual Switch might cause high CPU utilization.

As the fine-tuning on the systems is already done and secureXL is already optimized.

I would suggest segregating the Virtual Switch here and re-routing the traffic via some other V-SWs as well by adding some more V-SWs to the VSX system.

This might require a design change but at the moment, We do not see any other possible fine-tuning."

My observations:

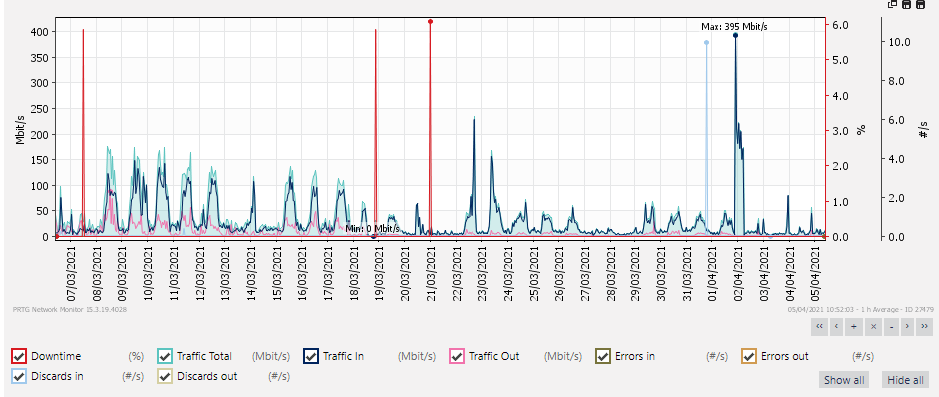

Is it not by design that a VSW should be used to connect multiple VSs so they can share a common physical interface?

I have 5 VSs using this VSW, and in the current climate it is not heavily used.

The VSW is used to connect an interface to the VSs on a common internet-facing VLAN.

The bandwidth stats for the VSW over the last 30 days are attached, and you can see it's hardly used.

We are not using the VSW to pass traffic between VSs.
