Full mesh redundancy HA cluster

I'm trying to set up full mesh redundancy on my HA cluster. I have read the admin guide, but it says nothing about how to configure full mesh redundancy. I created bond interfaces (802.3ad), each with two slave interfaces, on each of the gateways. I have two core switches and configured LACP on them. The network is up, but I am not able to ping the secondary gateway, and I can see that the bond interface on the second gateway is down while the bond interface on the primary is up.

regards,

Nima

1 Solution

Accepted Solutions

If you’re on R80.10, you’re on an End of Support release.

Further, it uses a very old version of the Linux kernel (2.6), making it less likely such a configuration will even work.

Since you found this in the R81.10 documentation, I recommend upgrading to this release if you’re going to try to use this configuration.

At the very least, you should be able to get TAC support if you can’t make it work properly.

24 Replies

>> I have read the admin guide but it says nothing on how to configure a full mesh redundancy

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

Hi Albrecht,

I didn't see a topic on how I could configure the bond interfaces for full mesh redundancy.

regards,

Nima

Here it states: Bonding provides High Availability of NICs. If one fails, the other can function in its place. But just read further:

Configuring a Bond Interface in High Availability Mode

On each Cluster Member, follow the instructions in the R81 Gaia Administration Guide - Chapter Network Management - Section Network Interfaces - Section Bond Interfaces (Link Aggregation).

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

It still doesn't tell me how to configure full mesh redundancy.

regards,

Nima

Did you study all the relevant topics from the start of the ClusterXL Admin Guide?

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

You keep saying "full mesh redundancy". Could you define exactly what you mean by that for us? That term isn't meaningful on its own without further information. A diagram may be helpful.

What sorts of faults are you trying to defend against?

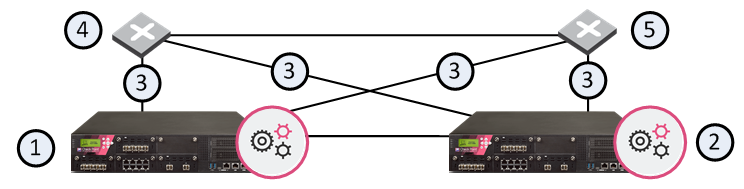

By full mesh redundancy I mean something like this.

When I run the command cphaconf show_bond bond1, all the slave interfaces are shown as active on both Check Point gateways, but the status of the bond interface is shown as down on the gateway that is currently on standby and UP on the gateway that is currently carrying the network load.

On the switches, have you configured two separate LACP bonds (one per gateway with two interfaces each) or one big one with all four interfaces?

On the switch I have configured one LACP interface with four slave interfaces.

So 4 and 5 are two physically separate switches capable of multi-chassis link aggregation (Cisco MEC, vPC, or similar)?

There is no way to have a single aggregate link with members on multiple Check Point servers.

Separately, I cannot advise more strongly against using multi-chassis link aggregation technologies. They lead to bad availability design elsewhere, which causes outages to be both more frequent and much more severe than they would have been. I say this from direct personal experience.
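The constraint in this reply (an aggregate link cannot have members on more than one Check Point gateway) can be sketched as a small check. This is a minimal illustration in Python, not Check Point or switch-vendor tooling; `Link`, `lags_spanning_gateways`, and the port-channel, port, and gateway labels are hypothetical names chosen to mirror the topology discussed in this thread.

```python
from collections import defaultdict
from typing import NamedTuple


class Link(NamedTuple):
    """One physical cable between a switch port and a gateway interface."""
    port_channel: str  # switch-side aggregate the port belongs to (hypothetical name)
    switch_port: str   # hypothetical label, e.g. "sw4:1"
    gateway: str       # gateway the cable terminates on


def lags_spanning_gateways(links: list[Link]) -> dict[str, set[str]]:
    """Return the port-channels whose member links land on more than one gateway."""
    gateways_per_lag: dict[str, set[str]] = defaultdict(set)
    for link in links:
        gateways_per_lag[link.port_channel].add(link.gateway)
    return {lag: gws for lag, gws in gateways_per_lag.items() if len(gws) > 1}


# One aggregate containing all four ports, as described earlier in the thread.
single_lag = [
    Link("Po1", "sw4:1", "fw1"),
    Link("Po1", "sw5:1", "fw1"),
    Link("Po1", "sw4:2", "fw2"),
    Link("Po1", "sw5:2", "fw2"),
]

# One aggregate per gateway, two ports each.
per_gateway_lags = [
    Link("Po1", "sw4:1", "fw1"),
    Link("Po1", "sw5:1", "fw1"),
    Link("Po2", "sw4:2", "fw2"),
    Link("Po2", "sw5:2", "fw2"),
]

if __name__ == "__main__":
    print(lags_spanning_gateways(single_lag))        # flags Po1
    print(lags_spanning_gateways(per_gateway_lags))  # nothing flagged
```

The first layout is flagged because its single aggregate reaches both fw1 and fw2; the second is not. Passing this check says nothing about whether the per-gateway aggregates behave well across two physical switches, which still depends on the switches' multi-chassis feature.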

4 and 5 are two physically separate servers, and the switch is using Cisco's StackWise Virtual domain.

Why would you do it as shown? I also second what @Bob_Zimmerman said, this looks like a bad design.

In my 15 years dealing with CP, I have never seen that before. I'm not saying it's not possible, but I can't really find any documentation about it either.

Best,

Andy

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

Sorry, you are right 🙂 Removed my previous comment. This is... interesting

I don't think I've seen that documentation before. Interesting.

It's talking about active/backup transmit link selection (e.g., set bonding group 0 mode active-backup). This topology can't be achieved with LACP. The switches should not be aware of the link aggregation. As far as they are concerned, the ports leading to the firewalls are plain access or tagged ports.

While that would be functional, it has some complicated availability implications. Active/backup bonds receive on all members, but only transmit on one. Only loss of layer 2 link would cause the firewall to switch to the alternate interface. If something failed past the immediately connected switches, so that traffic worked through only one of them, the firewalls are unlikely to be able to tell. It might be possible for ClusterXL to tell as long as fw1 was using switch 4 primarily and fw2 was using switch 5 primarily. Then, if a link between the switches failed, the cluster heartbeats on that interface would fail, which could cause a failover of the firewall cluster. To maintain this pathing, you would need to specify a primary link for the bond (e.g., set bonding group 0 primary eth2).

I would test this extensively before depending on it.

Edit: No, wait. If each switch is operating correctly in isolation, but one of them has no access to the broader network, the cluster heartbeats wouldn't fail. fw1 would transmit to switch 4, which is still able to get to fw2. fw2 would transmit to switch 5, which is still able to get to fw1.

I'm not sure there's a good way to get this topology to tolerate failures which cut one of the switches off from the broader network.
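The failure case in the edit above can be walked through with a small model. This is a minimal Python sketch under stated assumptions, not a simulation of ClusterXL: the node labels (`fw1`, `fw2`, `sw4`, `sw5`, `net`) and the functions `build_links` and `can_send` are hypothetical, and the model deliberately ignores MAC learning, VLANs, and any other health checks the cluster may run. It assumes each firewall transmits only on its primary link and receives on all bond members, as described for active/backup bonds.

```python
from collections import deque

# Hypothetical labels mirroring the thread: fw1/fw2 are the cluster members,
# sw4/sw5 are the switches, and "net" stands for the broader network.
FIREWALLS = {"fw1", "fw2"}
TRANSMIT_VIA = {"fw1": "sw4", "fw2": "sw5"}  # assumed primary (transmit) link per firewall


def build_links(failed=frozenset()):
    """Return the set of working links (as frozensets), minus the failed ones."""
    all_links = {
        frozenset(pair)
        for pair in [
            ("fw1", "sw4"), ("fw1", "sw5"),  # each firewall is cabled to both switches
            ("fw2", "sw4"), ("fw2", "sw5"),
            ("sw4", "sw5"),                  # inter-switch link
            ("sw4", "net"), ("sw5", "net"),  # uplinks to the broader network
        ]
    }
    return all_links - {frozenset(pair) for pair in failed}


def can_send(src, dst, links):
    """True if src can deliver a frame to dst in this simplified model.

    src transmits only on its primary link, frames may cross switches freely,
    endpoints (firewalls and "net") never forward frames onward, and the
    destination accepts frames arriving on any of its links.
    """
    first_hop = TRANSMIT_VIA[src]
    if frozenset((src, first_hop)) not in links:
        return False
    seen, queue = {first_hop}, deque([first_hop])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        if node in FIREWALLS or node == "net":
            continue  # endpoints do not forward frames
        for link in links:
            if node in link:
                (neighbor,) = link - {node}
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
    return False


if __name__ == "__main__":
    # Switch 4 works in isolation but is cut off from switch 5 and the broader network.
    links = build_links(failed={("sw4", "sw5"), ("sw4", "net")})
    for src, dst in [("fw1", "fw2"), ("fw2", "fw1"), ("fw1", "net"), ("fw2", "net")]:
        print(f"{src} -> {dst}: {can_send(src, dst, links)}")
```

In this model the heartbeat paths in both directions stay up while fw1 loses its path to the broader network, which is the situation the edit describes: the cluster has no heartbeat failure to react to.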

I honestly have never seen that part of the doc before. I guess my "searching" skills are not as good as yours, Guenther :-)

Best,

Andy

Yeah, there's no proper documentation on how we can achieve full mesh redundancy; it only says we can do it. I have read countless documents at this point, and I stumbled on the R81.10 ClusterXL documentation, which tells us about group bonding. I don't know if that will work on R80.10.

@PhoneBoy definitely gave you the most logical answer. And to be 100% blunt about it, TAC is far more likely to give any customer the best support if they are on the latest version or one below the latest.

Best,

Andy

Full mesh redundancy requires a lot of knowledge and skill, so there is no easy cookbook available! You can always have CP Professional Services configure it for the customer if some steps are not clear, or just ask TAC.

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

I have tried full mesh redundancy with LACP bonds on Juniper and Cisco switches, and the issue has always been the same: the gateway works as designed, but the standby gateway becomes unreachable after a reboot.

I had previously configured this customer with p2p links in a full mesh redundancy setup, using individual IP addresses instead of one shared IP address on a bond interface: two IP addresses for two interfaces (not a bond interface). But after the old network admin left the customer's office, the new admin changed the network topology and was adamant about using LACP for the full mesh redundancy.

So the reason for creating this post was to find out whether such a network topology is possible using LACP and bond interfaces. Based on the many comments in this thread and on hours and hours of reading the admin guides available on the Check Point website, I am certain that it is not possible to achieve such a topology using LACP. I think the issue here is a bad network topology. Thank you all for sharing your insights on this.

Did you ask TAC for an official statement?

CCSP - CCSE / CCTE / CTPS / CCME / CCSM Elite / SMB Specialist

OK, let's start with the basics. If you say those interfaces are down on the backup member, can you send the output of the command below from clish?

Let's assume the interface name is bond007:

show interface bond007

Then, from expert mode, run the commands below:

cpstat fw -f interfaces

cpstat fw -f all

Please send the output of everything (please blur out any sensitive info).

Cheers.

Best,

Andy
