
Combining all interfaces in one bond, how bad is this practice?

I have a couple of clients who have proposed a design where you put all internal and Internet VLANs in a single bond. One client has dual 10G interfaces which they want to bond and another wants to bond eth1 through eth8. The end result is the same. All VLANs, including Internet in the same logical interface.

I have my own concerns about this approach (resource allocation, availability, security, and so on), but I wanted to hear what the combined knowledge of this community thinks about it. I'm no design architect, and these designs were proposed by architects, so I need more confidence before I oppose them, assuming my concerns are valid at all.

- Tags:

- best practices

- design

18 Replies

Hi Ilmo,

Well, I think that could be a good idea. I would just ask you to take care of the Sync interface: still use a dedicated interface for it.

Cybersecurity Evangelist, CISSP, CCSP, CCSM Elite

Hi Xavier,

I have DMI and a proper sync interface. I was talking about normal traffic interfaces.

Conceptually, there is nothing wrong with this approach. In VSX implementations, it's common to use a trunk for multi-VLAN allocation.

You may run into some LACP compatibility issues (I've seen one just recently with Cisco 3850 switches), but other than that, I'd say it is OK for 10G interfaces.

My personal preference regarding 1G interfaces is not to use up all of the appliance's ports, in case you later need to mirror traffic, connect the cluster members asymmetrically to non-redundant resources, etc.

If someone from CP can tell us how the buffer sizes of bonded interfaces with VLANs are allocated, that would be great.

Additionally, I'd like to better understand the implications of bonding interfaces that belong to modules in different slots.

In the 10G interface case, it's a standard two-appliance cluster of 5900 appliances connected to a Cisco Nexus cluster. Luckily, I haven't encountered any LACP issues there.

The other installation is two 5600 standard appliances configured as VSLS cluster.

I realize that VSX or not may affect the decision as well.

My concerns are that:

- You can't just pull the plug on the Internet interface in case of a DDoS-like attack, because it's a VLAN and your firewall might not be very responsive in such an event.

- What happens if an unknowing new admin sets up a trunk interface and allows all VLANs? They might not realise they've just moved your Internet over there as well.

- Also, you would have to manually allocate CPU resources to this bond interface unless you're comfortable with running all your traffic on a single core. Am I wrong?

- I might come off as conservative, but I'd feel a lot more at ease having separated unknown traffic (Internet) from known traffic (LAN) and not actually putting my core switch between the ISP router and the firewall.

If these aren't legitimate concerns with this type of design, then I'm all OK with it.

Thanks for helping guys!

/ Ilmo

Let's try to address those one at a time:

My concerns are that:

- You can't just pull the plug on the Internet interface in case of a DDoS-like attack, because it's a VLAN and your firewall might not be very responsive in such an event.

- >> You can always bag the VLAN on the switch. You may have an option to do the same via LOM or console. That being said, if you have the option of separating external traffic from internal at the interface level, I'd do that as well.

- What happens if an unknowing new admin sets up a trunk interface and allows all VLANs? They might not realize they've just moved your Internet over there as well.

- >> So long as there are no corresponding VLANs configured on the Check Point devices, this traffic will be ignored. The only downside to this scenario is that there will be a lot of L2 frames with the wrong 802.1Q tags being discarded.

- Also, you would have to manually allocate CPU resources to this bond interface unless you're comfortable with running all your traffic on a single core. Am I wrong?

- >> Tim Hall will probably be able to give you a more informed answer on this subject.

- I might come off as conservative, but I'd feel a lot more at ease having separated unknown traffic (Internet) from known traffic (LAN) and not actually putting my core switch between the ISP router and the firewall.

- >> I share your sentiment, coming from a conventional infrastructure background myself, but as most modern deployments rely on SDNs, the switching fabric is really becoming a consumable commodity. Consider cloud-based deployments: you define vSEC instances and their interfaces, but in reality all of those interfaces share a common bonded interface connected to the top-of-rack and aggregation switches.
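The point about frames with non-matching 802.1Q tags being discarded can be sketched in a few lines. This is only a simplified model, not how the gateway is actually implemented: the function names and the `configured_vlans` set are hypothetical, and the only hard facts used are the 802.1Q layout (TPID `0x8100` at byte offset 12, VLAN ID in the low 12 bits of the following two bytes).

```python
import struct
from typing import Optional, Set

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def vlan_id(frame: bytes) -> Optional[int]:
    """Return the 802.1Q VLAN ID of an Ethernet frame, or None if untagged."""
    if len(frame) < 16:
        return None
    # Bytes 0-11 are the destination and source MACs; the tag follows them.
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return None
    return tci & 0x0FFF  # low 12 bits of the TCI carry the VLAN ID


def tagged_frame_accepted(frame: bytes, configured_vlans: Set[int]) -> bool:
    """Hypothetical model: a tagged frame is kept only if its VLAN is configured.

    Untagged frames are outside the scope of this sketch and return False.
    """
    vid = vlan_id(frame)
    return vid is not None and vid in configured_vlans


def build_tagged_frame(vid: int) -> bytes:
    """Build a dummy frame: zeroed MACs, 802.1Q tag, IPv4 EtherType, no payload."""
    return bytes(12) + struct.pack("!HHH", TPID_8021Q, vid, 0x0800)


# Assumed example: VLANs 100 and 200 exist on the bond, VLAN 999 does not.
configured = {100, 200}
print(tagged_frame_accepted(build_tagged_frame(100), configured))
print(tagged_frame_accepted(build_tagged_frame(999), configured))
```

In this model, a trunk that suddenly carries extra VLANs only produces more discarded frames on the gateway side; it does not by itself create new interfaces.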

Many thanks Vlad!

I'm sure Tim Hall will have good input. I wanted to buy his book in Barcelona but he didn't accept credit card ^_^

At least you got to be there :) I have not yet made it to CPX :(

I have to ask, would the 5900 appliance be able to handle a fully saturated 10G link? What blades are they going to use?

For the 8x1G bonded links, I would make sure that they are spread over different ASIC groups on the upstream switches. Again I question if the 5600 would be able to handle 8Gbps throughput if you have multiple blades enabled.

- Jason

I think you've brought up very valid questions about blades running on those appliances.

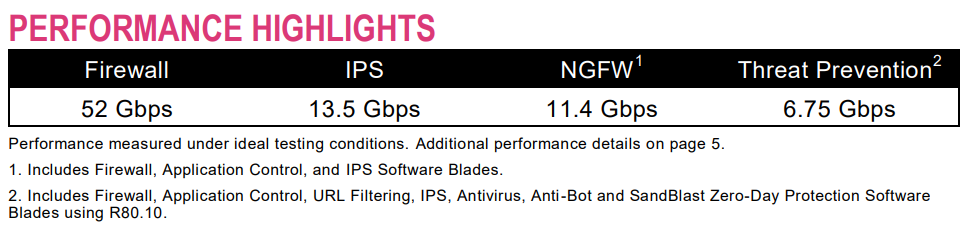

For 5900 appliance, under "Ideal" conditions, the numbers are:

For 5600:

So port aggregation may make sense from a flexibility and reliability point of view (i.e. trunking and failover), but not necessarily from a performance one.

So long as the architect is taking these figures into account, it is still a feasible approach.

My experience with enabling the various blades is that it's difficult to achieve ideal conditions. For example, you can employ URL Filtering and Application Control easily enough and see a negligible load increase on your gateway. As soon as you enable HTTPS filtering, you not only add the extra decryption/encryption tasks to your CPU load, you are also no longer able to pass that traffic through acceleration, which increases CPU load further because more traffic needs inspection.

While the physical connectivity architecture of the solution is sound, the practical use may not be so depending on the blades employed in the solution. Ilmo Anttonen may want to relay this information to his customers for further discussion.

Thanks for the input both of you, Aleksei and Jason!

You are raising very valid points, as I think both clients want to run all available features except for HTTPS inspection and Threat Extraction (not purchased). I wasn't part of the discussion when the models were decided, but from experience I know it's not uncommon for the architect to suggest a model that suits all of the customer's needs, and for the customer to then pick a model two steps below because it looks a lot cheaper and they think it will suffice.

I'm not saying this is the case this time, but it wouldn't be a big surprise. Anyhow, I will raise the question with the architect.

From the numbers presented above, it seems like anything above 3 interfaces in a bond is a waste of interfaces on the 5600 installation. Very good to know.

Big thanks, all of you.

/ Ilmo
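The "anything above 3 interfaces" estimate is just the inspected throughput divided by the line rate of one bond member, rounded up. Here is a minimal sketch of that arithmetic. The 2.5 Gbps figure is a hypothetical placeholder, not a datasheet value for any appliance, and `useful_bond_members` is an illustrative name.

```python
import math


def useful_bond_members(appliance_gbps: float, link_gbps: float) -> int:
    """Smallest number of links whose combined line rate covers the throughput."""
    if appliance_gbps <= 0 or link_gbps <= 0:
        raise ValueError("throughput values must be positive")
    return math.ceil(appliance_gbps / link_gbps)


# Hypothetical placeholder figure, NOT taken from a datasheet.
example_inspected_gbps = 2.5

print(useful_bond_members(example_inspected_gbps, link_gbps=1.0))   # 3
print(useful_bond_members(example_inspected_gbps, link_gbps=10.0))  # 1
```

This ignores two things a real design would weigh: an extra member for failover, and the fact that a bond's hashing rarely spreads traffic perfectly evenly across its members.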

Ilmo, you're welcome!

One other thing to consider: if the gateway is providing east/west protection, you may also want to consider the remote possibility of VLAN hopping being used to break out of a protected network. To execute that type of exploit, the attacker would need some kind of access to ports on switches that are willing to trunk. If the east and west sides both go through a single bonded group of interfaces, then it might be possible to break across into a privileged network. The simple answer is to make sure the switches are configured correctly so that this cannot happen.

For me it depends on the placement of the gateway. If we are talking about a cluster of hardware appliances situated on the perimeter (an external firewall), then I would use separate interfaces for external and internal connections; I would even try to use different physical switches for the external and internal zones. I'm kind of conservative in that sense too. With physically separated interfaces and switches there are fewer opportunities for a really bad configuration (mostly on the switches), and devices are segmented by function.

But as Vladimir mentioned, it is a different case with VSX and cloud gateways. And for a purely internal firewall I wouldn't have any issues with using one bond for all networks. I tend to agree with Vladimir on the topic of gathering all 1G interfaces into one bond: better to leave 2 interfaces free for possible future changes in the network.

I had one case where I couldn't use bond interfaces for external connections. I configured two physical interfaces of an appliance into a bond and added VLAN interfaces on it; the additional twist was that there was only one external public IP address for this office. So I needed to configure private IP addresses (192.168.X.X) for the cluster nodes and the public IP address as the cluster IP. Usually this setup with different IPs works without any issues; you just need to add a proper route on the cluster. But it did not work with a bond and a VLAN interface when all these things were configured at the same time. It was R77.30 with the latest Jumbo Hotfix available at that time. We contacted Check Point Support, they took a look, and we tried to fix it, but we didn't have much time for this implementation or for all the possible debugs, so our conclusion was that this setup with these three things combined (bond + VLAN + public and private IPs on one interface) does not work. When I used only one interface for external connections (+ VLAN + public and private IPs on one interface), it started to work.

At the same time we had 3 interfaces combined into bond for internal networks, so it was not a configuration issue or some problem with switches.

The only thing I would ask: do you think you are paranoid enough? Better practice is to separate Internet and intranet traffic on different switches. The theory is that one day someone will find a VLAN zero-day or just hack the box, and then they can bypass the firewall if both sides are on the same switch. With separate switches you would have 2 bonds and would not loop everything back on one interface.

I believe I am.

Unfortunately (or rather luckily, because I prefer working with firewalls) I'm not involved in the network planning. I'm just there to install firewalls, enable blades, and make sure everything is updated and working before I hand it over to the admins and give them instructions on how to handle everyday operations.

A bonded interface doesn't have much of a performance impact as far as the SND/IRQ cores servicing SoftIRQs from the interfaces are concerned. When two or more interfaces are bonded, the physical interfaces are still handled individually for SoftIRQ processing. If they are all very busy and SecureXL automatic affinity is enabled, each physical interface's SoftIRQ processing will probably end up on a different SND/IRQ core, assuming there are enough to go around. So the usual tuning recommendations of possibly adding more SND/IRQ cores and possibly enabling Multi-Queue to avoid RX-DRPs still apply.

As far as using NIC ports that are "on-board" vs located on an expansion card, I have no idea if having the physical interfaces associated with a bond all be part of the same slot vs. being "diversified" between different slots would help or hurt. I'd think it would depend on the hardware architecture. However there is one limitation I am aware of: the built-in I211 NIC cards on the 3200/5000/15000/23000 series can only have a maximum of 2 queues defined for use with Multi-Queue, while the expansion cards can have at least four or even up to 16 depending on the card model. sk114625: Multi-Queue does not work on 3200 / 5000 / 15000 / 23000 appliances when it is enabled for...

So I'd *probably* want to avoid using the onboard NICs on these models for the bond if possible, but the traffic levels may well not get high enough to make a meaningful difference as the processing cores will run out of gas long before this queue limitation starts to matter.

--

Second Edition of my "Max Power" Firewall Book

Now Available at http://www.maxpowerfirewalls.com

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course
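Since the physical members of a bond are still serviced individually, receive drops are worth checking per physical interface rather than on the bond. A minimal sketch of such a check, assuming counters in the Linux `/proc/net/dev` layout; the sample counters are made up, and the 0.1% threshold is an illustrative assumption rather than a figure from this thread.

```python
from typing import Dict, List

# Made-up sample in /proc/net/dev layout (two header lines, then one line per NIC).
SAMPLE_PROC_NET_DEV = """\
Inter-|   Receive                                                |  Transmit
 face |bytes    packets errs drop fifo frame compressed multicast|bytes    packets errs drop fifo colls carrier compressed
  eth1: 9000000 1000000 0 2500 0 0 0 0 8000000 900000 0 0 0 0 0 0
  eth2: 9000000 2000000 0 100 0 0 0 0 8000000 900000 0 0 0 0 0 0
"""

ASSUMED_THRESHOLD_PERCENT = 0.1  # illustrative threshold, an assumption


def rx_drop_percent(proc_net_dev: str) -> Dict[str, float]:
    """Return receive drops as a percentage of received packets, per interface."""
    result: Dict[str, float] = {}
    for line in proc_net_dev.splitlines()[2:]:  # skip the two header lines
        name, _, counters = line.partition(":")
        fields = counters.split()
        if len(fields) < 4:
            continue
        # Receive columns are: bytes, packets, errs, drop, ...
        rx_packets, rx_drop = int(fields[1]), int(fields[3])
        result[name.strip()] = 100.0 * rx_drop / rx_packets if rx_packets else 0.0
    return result


def interfaces_over_threshold(proc_net_dev: str, threshold: float) -> List[str]:
    """List interfaces whose receive drop percentage exceeds the threshold."""
    return [n for n, pct in rx_drop_percent(proc_net_dev).items() if pct > threshold]


print(rx_drop_percent(SAMPLE_PROC_NET_DEV))
print(interfaces_over_threshold(SAMPLE_PROC_NET_DEV, ASSUMED_THRESHOLD_PERCENT))
```

On a live system the same function could be fed the contents of `/proc/net/dev` instead of the sample string. An interface that stays above the chosen threshold would be a candidate for the tuning steps mentioned above.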

For what it's worth, we run an 8 x 10G VPC bond and it's perfect from a networking/failover viewpoint. I think Cisco calls this "firewall on-a-stick". I don't like the old-school separation described here. If you run both north and south switches, then there are two failure scenarios and you also have to maintain additional switches. We also configure CoreXL and interface buffers. To my mind, none of your other concerns would change our design.

Hi,

Just my two cents' worth.

I have some customers who have had this design, and they have not experienced any issues.

Obviously VLAN hopping is a security consideration, but the design does work.

Rgds
