
Cluster XL - Failover due to Fullsync PNOTE ON

Hello All,

We faced an unexpected failover due to Fullsync PNOTE ON error CLUS-120108.

According to sk125152:

| CLUS-120108 | Fullsync PNOTE <ON \| OFF> | ON - problem |

After the failover, I verified that sync communication is OK and that this member is in standby mode in the cluster.

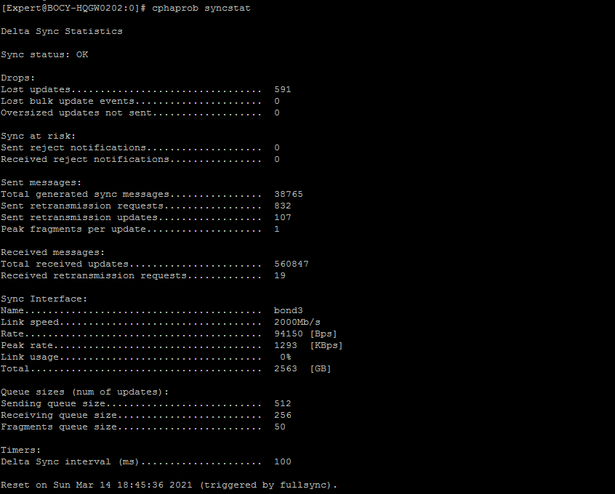

In addition, see the syncstat statistics below.

Has anyone faced the same issue? Do you know what triggers this behavior?

Thanks

12 Replies


Full sync only happens when the second member is coming up from boot/initialization, before it becomes a fully operational cluster member (usually in standby mode). Check the uptime; it seems to me one of your boxes rebooted itself.

Also, a Fullsync PNOTE should not cause a failover. Please post the logs you got and the full message.


You did something with the SNMP settings, which then called cpstop/cpstart, which in turn caused the Active member to go down.

It says it right there in your messages:

Mar 14 18:44:24 2021 HQ pm[16877]: Disabled snmpd

Mar 14 18:44:24 2021 HQ xpand[16895]: Configuration changed from localhost by user admin by the service /usr/sbin/snmpd

Mar 14 18:44:24 2021 HQ snmpd: Destroying the lists of sensors

Mar 14 18:44:24 2021 HQ pm[16877]: Reaped: snmpd[8268]

Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-120108-2: Fullsync PNOTE ON

Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-120130-2: cpstop

Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-113500-2: State change: ACTIVE -> DOWN | Reason: FULLSYNC PNOTE - cpstop
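The reasoning above (a configuration change logged two seconds before the state change) can be checked mechanically. Below is a minimal sketch, assuming the syslog layout seen in the quoted lines (month, day, time, year, hostname, then the message). The function names and the 10-second window are hypothetical choices for illustration, not part of any Check Point tool.

```python
from datetime import datetime, timedelta

# Sample lines copied from the messages quoted above.
LOG = """\
Mar 14 18:44:24 2021 HQ pm[16877]: Disabled snmpd
Mar 14 18:44:24 2021 HQ xpand[16895]: Configuration changed from localhost by user admin by the service /usr/sbin/snmpd
Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-120108-2: Fullsync PNOTE ON
Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-120130-2: cpstop
Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-113500-2: State change: ACTIVE -> DOWN | Reason: FULLSYNC PNOTE - cpstop
"""


def parse(line):
    """Split a line into (timestamp, message); assumes the layout shown above."""
    parts = line.split(None, 5)  # month, day, time, year, host, rest
    ts = datetime.strptime(" ".join(parts[:4]), "%b %d %H:%M:%S %Y")
    return ts, parts[5]


def config_changes_before_state_change(text, window_seconds=10):
    """Pair each 'State change:' line with config changes shortly before it."""
    events = [parse(ln) for ln in text.splitlines() if ln.strip()]
    changes = [(ts, msg) for ts, msg in events if "Configuration changed" in msg]
    window = timedelta(seconds=window_seconds)
    hits = []
    for ts, msg in events:
        if "State change:" not in msg:
            continue
        for cts, cmsg in changes:
            if timedelta(0) <= ts - cts <= window:
                hits.append((cmsg, msg, (ts - cts).total_seconds()))
    return hits


for change, state, gap in config_changes_before_state_change(LOG):
    print(f"{gap:.0f}s before '{state}':\n  {change}")
```

A match only shows that the two events were close in time. It does not prove that one caused the other.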


We did not change anything manually in the SNMP configuration. I have already opened a case with support and will update the post accordingly.

Thanks


Sure, please keep us posted.

This line, however, suggests something has been done:

Mar 14 18:44:24 2021 HQ xpand[16895]: Configuration changed from localhost by user admin


Hello all,

Support could not find the root cause of the issue.

Because the issue occurred only once, their only suggestion was to update to the latest Jumbo take (we are on take 78), since it resolves many performance and stability issues.

Thanks


Val is definitely correct. Based on that message in the logs you sent, it seems that someone manually changed something in the config. Maybe try the command below: cd /var/log and then run grep -i PNOTE messages.*

Best,

Andy
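For anyone who prefers to script the search, here is a rough Python equivalent of the grep suggestion above. It is a sketch under stated assumptions: the helper names are hypothetical, the default pattern `messages.*` mirrors the command above (so it skips the current `messages` file), and the log files are assumed to be plain text.

```python
import glob
import os


def pnote_lines(lines):
    """Return lines containing 'pnote' in any letter case, like grep -i PNOTE."""
    return [ln.rstrip("\n") for ln in lines if "pnote" in ln.lower()]


def scan_rotated_logs(directory="/var/log", pattern="messages.*"):
    """Yield (filename, line) for every PNOTE line in the matching files."""
    for path in sorted(glob.glob(os.path.join(directory, pattern))):
        with open(path, errors="replace") as fh:
            for ln in pnote_lines(fh):
                yield os.path.basename(path), ln


# Lines taken from this thread; the second one only matches case-insensitively.
SAMPLE = [
    "Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-120108-2: Fullsync PNOTE ON\n",
    "Mar 14 18:44:28 2021 HQ kernel: [fw4_0];fwhak_drv_report_process_state: no running process, reporting pnote fwd\n",
    "Mar 14 18:44:24 2021 HQ pm[16877]: Disabled snmpd\n",
]

for line in pnote_lines(SAMPLE):
    print(line)
```

The case-insensitive match matters here because the `fwhak_drv_report_process_state` lines spell "pnote" in lowercase.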


Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-120108-2: Fullsync PNOTE ON

Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-113500-2: State change: ACTIVE -> DOWN | Reason: FULLSYNC PNOTE - cpstop

Mar 14 18:44:28 2021 HQ kernel: [fw4_0];fwhak_drv_report_process_state: no running process, reporting pnote fwd

Mar 14 18:44:30 2021 HQ kernel: [fw4_1];CLUS-120105-2: routed PNOTE ON

Mar 14 18:44:31 2021 HQ kernel: [fw4_0];fwhak_drv_report_process_state: no running process, reporting pnote cphad

Mar 14 18:45:34 2021 HQ kernel: [fw4_1];CLUS-113601-2: State remains: INIT | Reason: FULLSYNC PNOTE - cpstart

Mar 14 18:45:36 2021 HQ kernel: [fw4_1];CLUS-100201-2: Failover member 2 -> member 1 | Reason: FULLSYNC PNOTE - cpstop

Mar 14 18:46:22 2021 HQ kernel: [fw4_1];CLUS-120207-2: LPRB PNOTE : local probing has started on interface bond1.399

Mar 14 18:46:51 2021 HQ kernel: [fw4_1];CLUS-120207-2: LPRB PNOTE : local probing has started on interface bond2

Mar 14 18:46:52 2021 HQ kernel: [fw4_1];CLUS-120207-2: LPRB PNOTE : local probing had stopped on interface bond2
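To read a sequence like the one above more easily, the CLUS entries can be pulled apart into their fields. The following is a minimal sketch, assuming the message layout visible in these lines (`[instance];CLUS-NNNNNN-N: text`). The regular expressions and function names are hypothetical. The trailing number after the six-digit code is kept as an opaque `suffix`, because its meaning is not stated in this thread.

```python
import re

# Layout inferred from the lines above: [fw4_1];CLUS-113500-2: <text>
CLUS_RE = re.compile(
    r"\[(?P<instance>fw\d+_\d+)\];(?P<code>CLUS-\d{6})-(?P<suffix>\d+): (?P<text>.*)$"
)
STATE_RE = re.compile(
    r"State change: (?P<old>\w+) -> (?P<new>\w+) \| Reason: (?P<reason>.*)$"
)

LOG = """\
Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-120108-2: Fullsync PNOTE ON
Mar 14 18:44:26 2021 HQ kernel: [fw4_1];CLUS-113500-2: State change: ACTIVE -> DOWN | Reason: FULLSYNC PNOTE - cpstop
Mar 14 18:44:28 2021 HQ kernel: [fw4_0];fwhak_drv_report_process_state: no running process, reporting pnote fwd
Mar 14 18:45:34 2021 HQ kernel: [fw4_1];CLUS-113601-2: State remains: INIT | Reason: FULLSYNC PNOTE - cpstart
Mar 14 18:45:36 2021 HQ kernel: [fw4_1];CLUS-100201-2: Failover member 2 -> member 1 | Reason: FULLSYNC PNOTE - cpstop
"""


def clus_events(text):
    """Return one dict per CLUS line; lines without a CLUS code are skipped."""
    events = []
    for line in text.splitlines():
        m = CLUS_RE.search(line)
        if m:
            events.append(m.groupdict())
    return events


def state_changes(events):
    """Return (code, old_state, new_state, reason) for 'State change' events."""
    out = []
    for ev in events:
        m = STATE_RE.match(ev["text"])
        if m:
            out.append((ev["code"], m["old"], m["new"], m["reason"]))
    return out


events = clus_events(LOG)
for ev in events:
    print(ev["code"], "|", ev["text"])
print(state_changes(events))
```

On this sample, the only state change reported is ACTIVE -> DOWN with the reason "FULLSYNC PNOTE - cpstop", which matches the earlier reading that cpstop ran on the active member.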


Hello,

I have a problem with a VSX Cluster that fails to form. There appears to be a configuration error, or at least that is what I understand from the following message that I have filtered.

The environment runs on VMware, and both members of the VSX Cluster use the Eth2 interface as the SYNC interface.

Could someone guide me on how to correct this problem?


You may need to enable promiscuous mode, or something similar, in your VMware environment for that virtual network segment. Something is blocking the CCP packets.


Can the alert that one sees in the CLI of the VSX Cluster be a false positive?

At the SmartConsole level, everything looks fine and green, and most importantly, the VSX Cluster does not appear to be in an alarm state.

I can install policies without errors on every one of the VSs I have created; however, at the CLI level, it looks like the cluster is broken.

At times I see that the Eth2 interface, which is the SYNC interface, is DOWN, and at other times I see what I am sharing in this update.


No, the CLI output is reliable. Your Sync interface is having issues that need investigating.
