
CRC Errors

Guys, I have a small problem with my Check Point appliance. I'm running it in an HA cluster, and when the primary member is Active I see a lot of CRC errors on the interface. When I run "clusterXL_admin down" and the cluster fails over to the other appliance, the CRC errors go away. I followed sk61922.

1 Solution

Accepted Solutions


Looks like your switch is actually seeing the XOFF requests from the firewall and counting them as an input pause.

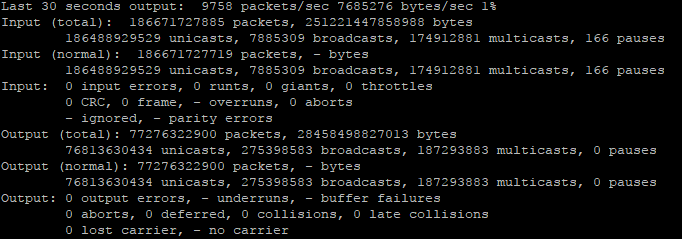

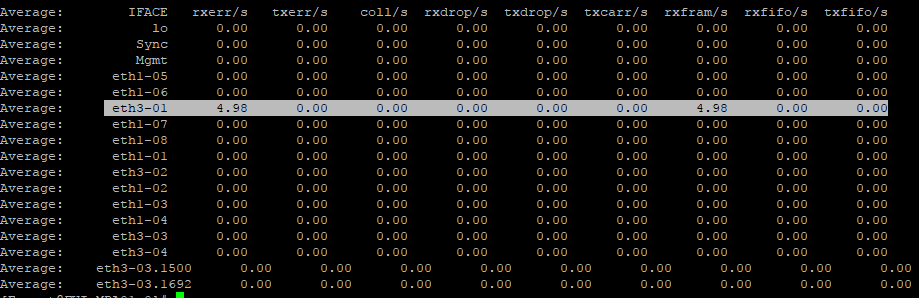

Based on your sar output it looks like the CRC errors (grouped under "rxfram") are clumpy. Tough to say what is going on, but it might be interesting to note the number of pause/tx_offs at one point in time, wait for a CRC/rxfram clump to occur and then see if the pause/tx_off counters (or any other interesting ones) have incremented. My guess is no, but it is worth checking.

I have a vague recall of seeing switches occasionally spewing some kind of control frame that the firewall's NIC would log as a runt/short or framing error but can't remember if it was CDP, something related to STP, or perhaps even duplex negotiation. Those "errored" frames can't be viewed with tcpdump anyway.
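The before/after counter comparison suggested above could be sketched roughly like this. It assumes the counters come from two saved copies of the "name: value" listing that ethtool -S prints. The snapshot text and numbers are made up for illustration, and the counter name rx_crc_errors is an assumption that depends on the NIC driver.

```python
def parse_counters(ethtool_stats: str) -> dict[str, int]:
    """Parse 'name: value' lines as printed by `ethtool -S <iface>`."""
    counters = {}
    for line in ethtool_stats.splitlines():
        name, sep, value = line.partition(":")
        value = value.strip()
        # Skip the "NIC statistics:" header and any non-numeric lines.
        if sep and value.isdigit():
            counters[name.strip()] = int(value)
    return counters


def changed_counters(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Return only the counters whose value differs between two snapshots."""
    return {
        name: after[name] - before[name]
        for name in after
        if name in before and after[name] != before[name]
    }


# Hypothetical snapshots taken before and after a CRC/rxfram clump.
BEFORE = """NIC statistics:
     rx_crc_errors: 1200
     tx_flow_control_xon: 15
     tx_flow_control_xoff: 151
     rx_flow_control_xon: 0
     rx_flow_control_xoff: 0
"""
AFTER = """NIC statistics:
     rx_crc_errors: 1290
     tx_flow_control_xon: 15
     tx_flow_control_xoff: 151
     rx_flow_control_xon: 0
     rx_flow_control_xoff: 0
"""

delta = changed_counters(parse_counters(BEFORE), parse_counters(AFTER))
print(delta)
```

If only the CRC counter shows up in the result, the pause frames are probably unrelated to the errors.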

--

Second Edition of my "Max Power" Firewall Book

Now Available at http://www.maxpowerfirewalls.com

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course

11 Replies


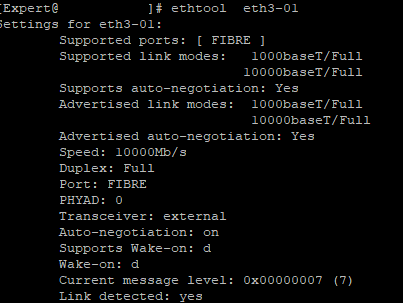

Check your duplex/speed settings on both firewalls and the corresponding switch ports. If they match, I would check or replace the cable. A faulty SFP can also cause this.

You can see the duplex settings with the ethtool command or with show interface in clish.
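As a rough sketch of the comparison described above, the snippet below pulls the Speed, Duplex and Auto-negotiation lines out of ethtool output saved from each cluster member and lists the settings that differ. The interface name eth1-01 and both sample outputs are invented for illustration. Switch ports would have to be checked separately, since their output format depends on the switch vendor.

```python
WANTED = ("Speed", "Duplex", "Auto-negotiation")


def link_settings(ethtool_output: str) -> dict[str, str]:
    """Extract speed/duplex/auto-negotiation from `ethtool <iface>` output."""
    settings = {}
    for line in ethtool_output.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key in WANTED:
            settings[key] = value.strip()
    return settings


def mismatches(a: dict[str, str], b: dict[str, str]) -> list[str]:
    """Names of settings that differ (or are missing) between two ports."""
    return sorted(k for k in set(a) | set(b) if a.get(k) != b.get(k))


# Hypothetical output captured on each cluster member.
MEMBER_A = """Settings for eth1-01:
	Supported ports: [ FIBRE ]
	Speed: 10000Mb/s
	Duplex: Full
	Auto-negotiation: on
	Link detected: yes
"""
MEMBER_B = """Settings for eth1-01:
	Supported ports: [ FIBRE ]
	Speed: 10000Mb/s
	Duplex: Full
	Auto-negotiation: off
	Link detected: yes
"""

print(mismatches(link_settings(MEMBER_A), link_settings(MEMBER_B)))
```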


I checked. I replaced the SFP and the cable, and both sides have the same configuration. I think the problem is in the module, because I also changed the slot and the problem continued.


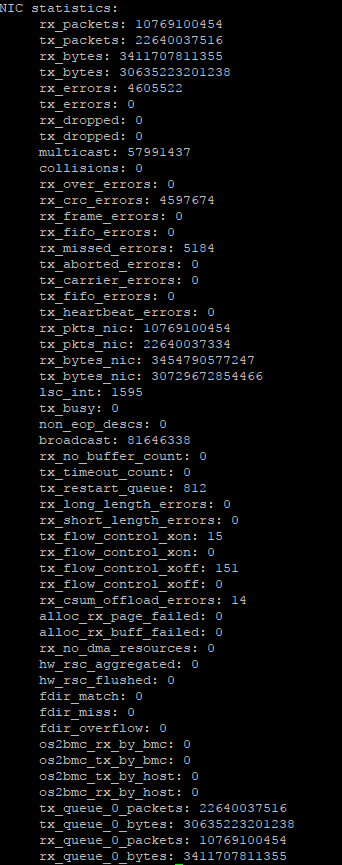

Also note these values:

tx_flow_control_xon: 15

tx_flow_control_xoff: 151

rx_flow_control_xon: 0

rx_flow_control_xoff: 0

Gigabit Ethernet provides flow control between the NIC and the switch. When your NIC starts to run low on buffer RAM, it sends an XOFF pause frame to the switch, telling the switch to stop sending for a bit. Once the NIC has enough buffer space again, it sends another frame, this time an XON, telling the switch to resume sending. The values for rx_flow_control_xon/xoff are 0, most likely because flow control is off/disabled on the switch, so the switch will not react to the NIC telling it to slow down. You don't have any overruns, so I don't know if this is a big problem. Tim Hall might be able to help by analyzing these errors.
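To make the XOFF/XON behaviour described above more concrete, here is a toy simulation of a receive buffer with a high and a low watermark. It is only a sketch: the buffer size, watermarks, drain rate and traffic pattern are invented, and real NICs implement this in hardware with driver-specific thresholds.

```python
def simulate_pause_frames(arrivals, drained_per_tick, capacity,
                          high_mark, low_mark, switch_honors_pause):
    """Return (xoff_sent, xon_sent, overruns) for a toy receive buffer.

    arrivals: frames the switch wants to send in each tick.
    switch_honors_pause: if False, the switch keeps sending after XOFF.
    """
    fill = xoff = xon = overruns = 0
    paused = False
    for incoming in arrivals:
        # A switch that honors the pause holds its frames back while paused.
        arriving = 0 if (paused and switch_honors_pause) else incoming
        fill += arriving
        if fill > capacity:
            overruns += fill - capacity  # frames lost: no room in the buffer
            fill = capacity
        fill = max(0, fill - drained_per_tick)  # host pulls frames off the NIC
        if not paused and fill >= high_mark:
            xoff += 1          # buffer nearly full: ask the switch to stop
            paused = True
        elif paused and fill <= low_mark:
            xon += 1           # buffer drained: ask the switch to resume
            paused = False
    return xoff, xon, overruns


burst = [10] * 8 + [0] * 6  # hypothetical burst followed by silence
ignored = simulate_pause_frames(burst, 4, 32, 24, 8, switch_honors_pause=False)
honored = simulate_pause_frames(burst, 4, 32, 24, 8, switch_honors_pause=True)
print("switch ignores pause:", ignored)
print("switch honors pause: ", honored)
```

In this toy model the NIC sends the same pause frames in both runs, but only the run where the switch ignores them ends with overruns.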

What type/category of cable are you using?


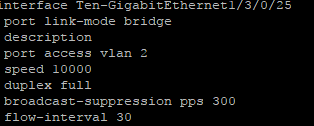

I'm using an OM4 optical fiber cable (LC to LC), 10Gb.

config on switch:

firewall:


CRC errors are generally physical problems with the cable being used such as electrical shorts or possibly electromagnetic interference in the case of copper. The latter can generally only happen if there is a network cable in a long run next to power cables/conduits. Could possibly be a bad switch port or a bad NIC port on the firewall but that is pretty rare, usually it is an issue with the cable or connector. Could also be a duplex mismatch if using Fast Ethernet but duplex mismatches are practically impossible with Gigabit Ethernet in use.

Any CRC or other errors being shown on the switch port the firewall is attached to? Also are the CRC errors happening in clumps or slowly accumulating over long periods of time? Use sar -n EDEV to investigate the frequency of those CRC errors occurring. The CRC error rate really should be zero, but the errored frame rate due to CRC errors on your interface is a mere 0.043% which is pretty negligible. Unfortunately there is no easy way to capture these CRC-errored frames with tcpdump since the Ethernet NIC card/driver will not actually forward them up to the operating system for processing.
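The two checks mentioned above, the errored-frame percentage and whether the errors arrive in clumps, can be approximated as follows. This is a minimal sketch: the frame counts and the per-interval rxfram/s samples are invented, and the share of intervals with errors is only a rough heuristic for "clumpy" versus "steady".

```python
def crc_error_percent(crc_errors: int, rx_frames: int) -> float:
    """CRC-errored frames as a percentage of all received frames."""
    return 100.0 * crc_errors / rx_frames if rx_frames else 0.0


def share_of_intervals_with_errors(rxfram_per_s: list[float]) -> float:
    """Fraction of sar intervals whose rxfram/s value was above zero."""
    if not rxfram_per_s:
        return 0.0
    return sum(1 for v in rxfram_per_s if v > 0) / len(rxfram_per_s)


# Hypothetical totals: 430 CRC errors out of 1,000,000 received frames.
print(f"{crc_error_percent(430, 1_000_000):.3f}% of frames errored")

# Hypothetical rxfram/s columns copied from `sar -n EDEV` output.
clumpy = [0, 0, 0, 12.5, 0, 0, 0, 0, 9.0, 0]
steady = [1.5] * 10
print("clumpy:", share_of_intervals_with_errors(clumpy))
print("steady:", share_of_intervals_with_errors(steady))
```

A low share combined with a nonzero error total points to errors arriving in bursts, while a share near 1.0 points to slow, continuous accumulation.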

As far as tx_flow_control_xon and tx_flow_control_xoff being nonzero yet no actual NIC overruns occurring (RX-OVR), my interpretation is that the firewall NIC was coming close to a buffer overrun condition and issued the XOFF, but did not actually overrun and lose any frames. Probably not related to the CRC errors.



I looked at my switch and there are no CRC errors on this interface. The CRC errors accumulate slowly, increasing by 100 or fewer per minute.

sar -n EDEV:



I checked, but none of the pause/tx_off counters had incremented. I think the problem is with the SFP+ module.


I don't know if it matters, but you have hardcoded speed and duplex settings on the switch and auto-negotiation on your firewall NIC. I mention it since Tim brought up duplex negotiation.


Thanks, Enis Dunic. I changed the switch to auto-negotiation.


Guys, I think I'll replace the SFP module (CPAC-2-10F).
