I'm unclear on exactly what you are asking. You have an 8/8 split on a 16-core box with SMT enabled, and your question seems to be one of these three things:

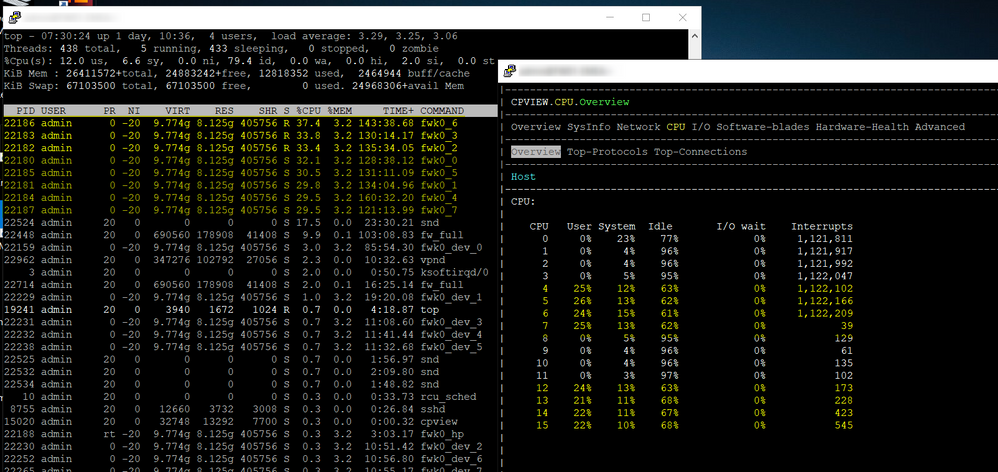

1) Mapping of Physical CPUs to Firewall worker instances - Each pair of Firewall instances shares one physical core via its two SMT threads: instances 0 & 1 are on physical CPU 7/15, instances 2 & 3 are on 6/14, instances 4 & 5 are on 5/13, and instances 6 & 7 are on 4/12. All eight remaining cores (0-3, 8-11) are SNDs with Multi-Queue enabled. This looks correct, though granted it can be a bit confusing. USFW is also enabled.

2) Load difference between CPUs designated SND vs. Firewall Worker/Instance - I would speculate that the vast majority of your traffic is going through the Medium Path (either PXL or CPAS), which has to be handled by the workers. This can be checked with `fwaccel stats -s`. If the distribution of load amongst CPUs looked significantly different before the upgrade, it is possible that the upgrade changed the distribution of traffic such that significantly less traffic can be fully accelerated by SecureXL and handled on the SND cores. If you upgraded from R80.10 or earlier, be advised that SecureXL was significantly overhauled in R80.20 and a split adjustment may be necessary. The gist of the changes is that the SNDs were relieved of multiple duties that were transferred to the workers, thus increasing the workers' load.

3) Higher load on CPU 0 (SND) vs. other SNDs - This may be related to Remote Access VPN connections not being properly balanced across the SNDs, an issue that was fixed in the latest Jumbo HFAs.
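If the mapping in item 1 is the confusing part, here is a rough Python sketch that reproduces the layout described above. It is only an illustration, not how CoreXL actually assigns affinity: it assumes the SMT sibling of logical CPU `c` is `c + 8` (consistent with the 7/15, 6/14 pairs above), that workers are filled from the highest physical core downward, and that the lower-numbered sibling gets the even instance (that last ordering is a guess). The function name `smt_layout` is made up for this example.

```python
def smt_layout(logical_cpus=16, workers=8):
    """Sketch of an 8/8 split on a 16-thread SMT box (assumptions in comments)."""
    physical = logical_cpus // 2  # assumption: exactly 2 SMT threads per core

    instance_to_cpu = {}
    instance = 0
    core = physical - 1  # assumption: workers fill from the highest core down
    while instance < workers:
        # assumption: sibling threads of a core are numbered core and core + physical
        for cpu in (core, core + physical):
            if instance < workers:
                instance_to_cpu[instance] = cpu
                instance += 1
        core -= 1

    # Every logical CPU not used by a worker is treated as an SND
    snd_cpus = sorted(set(range(logical_cpus)) - set(instance_to_cpu.values()))
    return instance_to_cpu, snd_cpus


if __name__ == "__main__":
    mapping, snds = smt_layout()
    for inst, cpu in mapping.items():
        print(f"fw instance {inst} -> CPU {cpu}")
    print("SND CPUs:", snds)
```

With the defaults this puts instances 0 & 1 on CPUs 7 and 15 and leaves CPUs 0-3 and 8-11 as SNDs, which matches what your screenshot shows.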

Or was your question something else?
