Still waiting for R&D to come up with the goods 😞 It has taken way too long, so I took matters into my own hands. Basically, I used @Danny's idea about a custom PNOTE.

I modified two parts of $FWDIR/bin/fwstart. First, in the middle:

    if ($?highavail && ! $?IS_VSW) then
        if ($fw1_vsx == 0) then
            $FWDIR/bin/cphaconf set_pnote -f $FWDIR/conf/cpha_global_pnotes.conf -g register
        endif
    endif

    # Test manual cluster DOWN till MQ reconf is applied at the end
    echo "*** TEST cluster DOWN ***"
    $FWDIR/bin/cphaconf set_pnote -d ForceDown -t 1 -s init register

    # load sim settings (affinity)
    if ((! $?VS_CTX) && ($linux == 1)) then
        if ($?PPKDIR) then
            $FWDIR/bin/fwaccel on
            $FWDIR/bin/sxl_stats update_ac_name
            if ($fw1_ipv6) then
                $FWDIR/bin/fwaccel6 on
            endif
    --
        endif
    endif

Then, near the end of the script, the PNOTE is removed once MQ has been reconfigured:

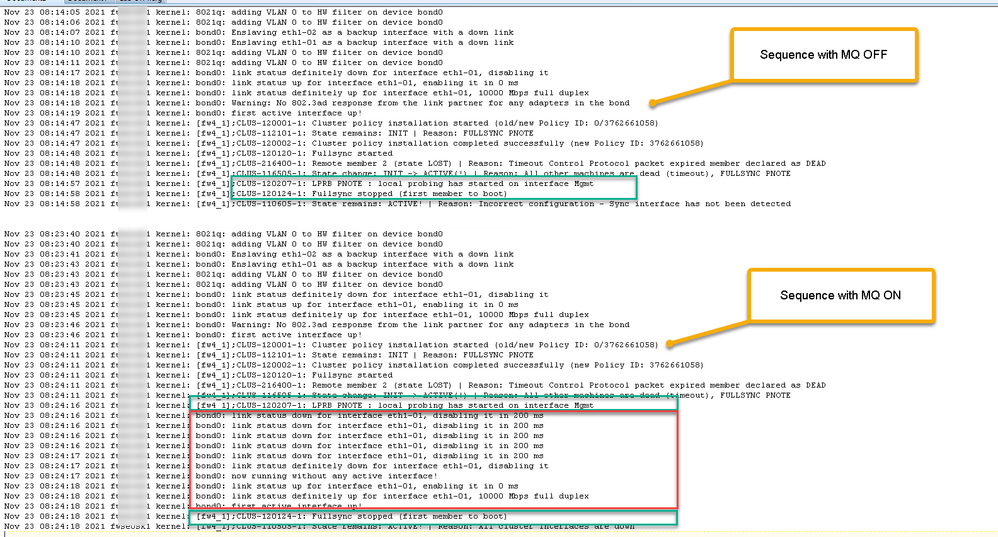

(Actually, the $FWDIR/bin/mq_mng_reconf_all_vs command is the one that causes the problem.)

    # Kernel 3.10 - apply MQ settings for all VS
    ##release lock of mq_mng
    rm /tmp/mq_reconf_lock >& /dev/null
    if ("$linuxver" != "2.6") then
        $FWDIR/bin/mq_mng_reconf_all_vs >& /dev/null
    endif

    # Test manual cluster DOWN till bond MQ is applied - finish
    echo "*** TEST cluster DOWN finish ***"
    sleep 15
    $FWDIR/bin/cphaconf set_pnote -d ForceDown unregister

    if ((! $?VS_CTX) && ($linux == 1)) then
        # Apply Backplane Ethernet affinity settings
        if ( -e /dev/adp0) then
            /etc/ppk.boot/bin/sam_mq.sh
        endif
    endif

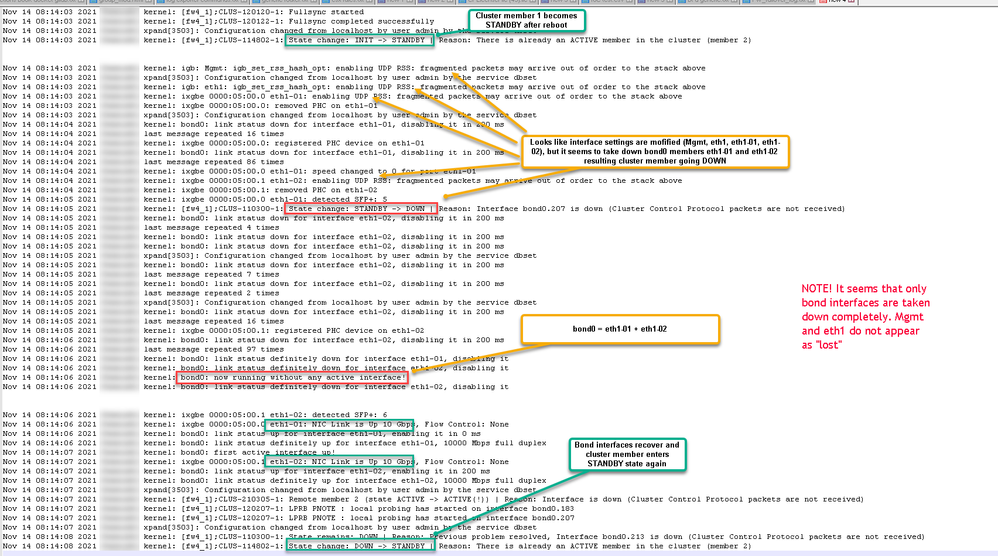
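
For anyone who wants to rehearse the sequence before touching fwstart, the register / reconfigure / unregister pattern from the two edits above can be condensed into a small helper. This is only a sketch under assumptions: it is written in POSIX sh rather than the csh that fwstart uses, and `hold_down_during`, `run`, `PNOTE_NAME`, `SETTLE_SECONDS` and `DRY_RUN` are made-up names for illustration, not Check Point tooling. The `cphaconf` arguments are copied unchanged from the fwstart edits.

```shell
#!/bin/sh
# Sketch only: keep a cluster member DOWN while a slow boot step runs.
# hold_down_during and run are hypothetical helpers, not Check Point tooling.
# Set DRY_RUN=1 to print the commands instead of executing them.

PNOTE_NAME="${PNOTE_NAME:-ForceDown}"    # same device name as in the fwstart edit
SETTLE_SECONDS="${SETTLE_SECONDS:-15}"   # same delay as the 'sleep 15' in fwstart

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

hold_down_during() {
    # Register the custom pnote with the same flags as the fwstart edit.
    run "$FWDIR/bin/cphaconf" set_pnote -d "$PNOTE_NAME" -t 1 -s init register
    # Run the slow step given as arguments and remember its exit status.
    run "$@"
    status=$?
    # Wait for things to settle, then unregister the pnote even if the step failed.
    run sleep "$SETTLE_SECONDS"
    run "$FWDIR/bin/cphaconf" set_pnote -d "$PNOTE_NAME" unregister
    return "$status"
}
```

A dry run such as `DRY_RUN=1 hold_down_during "$FWDIR/bin/mq_mng_reconf_all_vs"` only prints the four commands in order. Unlike the inline fwstart edit, the helper always reaches the unregister step, so a failing MQ step should not leave the member stuck DOWN. On a live member, `cphaprob -l list` should show the ForceDown device while it is registered.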

So the startup sequence now looks like this:

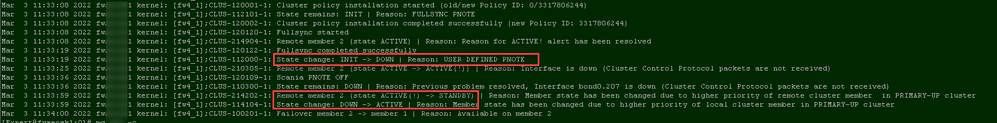

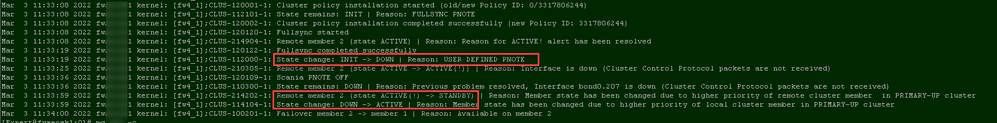

And the clustering messages confirm that there are no unwanted failovers: