Blocking malicious IP addresses (sk103154) in VSX

UPDATE 04/08/2020: Please visit this page to download the latest version of the script: https://www.francescoficarola.com/check-point-automated-ip-blacklist/

Hello everyone,

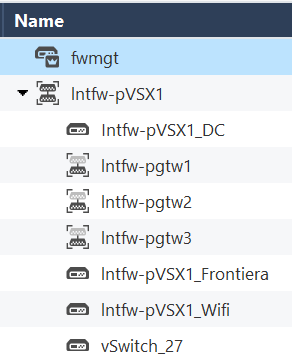

My configuration is the following:

- A cluster of three security gateways (R80.20)

- Three Virtual Systems (configured on the three security gateways as follows: active/standby/backup)

I have already enabled the IOC Feed functionality on one of my Virtual Systems to block outgoing traffic through the Anti-Bot and Anti-Virus blades (sk132193), but I'd like to block incoming malicious traffic as well. I read sk103154, which says the script must be run on the management server.

I followed all steps, but when I run the script, it returns the following error:

[Expert@xntfw-pmgt1:0]# ./ip_block_activate.sh -a on -g gw_list -f feed_urls -s /home/admin/blacklist/ip_block.sh

Error: could not retrieve FWDIR from 10.100.97.101

Error: could not retrieve FWDIR from 10.100.97.101

(10.100.97.101 is the VS's IP address.)

Indeed, if I run the command from the script that raises this error, I get no output:

[Expert@xntfw-pmgt1:0]# cprid_util -server 10.100.97.101 getenv -attr "FWDIR"

[Expert@xntfw-pmgt1:0]#

But if I run the same command against the management IP address of the Security Gateway, I get the following output:

[Expert@xntfw-pmgt1:0]# cprid_util -server 192.168.77.192 getenv -attr "FWDIR"

/opt/CPsuite-R80.20/fw1

So... is this functionality available for VSX environments?

Thanks,

Francesco
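To see which gateway addresses CPRID actually answers on, the same `cprid_util -server <ip> getenv -attr "FWDIR"` call shown above can be looped over the gateway list. This is a minimal diagnostic sketch, assuming a list file with one IP address per line (the real `gw_list` format expected by sk103154 may differ):

```bash
#!/bin/bash
# Query FWDIR through CPRID for each gateway address in a list file.
# Assumption: one IP address per line; blank lines and '#' comments are skipped.
check_fwdir() {
    local list_file="$1" gw fwdir
    while IFS= read -r gw || [ -n "$gw" ]; do
        case "$gw" in ''|'#'*) continue ;; esac
        # Same call the activation script relies on; empty output means no answer
        fwdir=$(cprid_util -server "$gw" getenv -attr "FWDIR" 2>/dev/null)
        if [ -n "$fwdir" ]; then
            echo "OK   $gw -> $fwdir"
        else
            echo "FAIL $gw: no FWDIR returned"
        fi
    done < "$list_file"
}
```

After sourcing the file on the management server, run `check_fwdir gw_list`. With the setup described above, the VS address 10.100.97.101 would be expected to report FAIL and the gateway management address 192.168.77.192 to report OK.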

---

I can see you are running this in the VS0 context. That is the first mistake. Also, use the absolute path for the VS FWDIR folder.
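On a VSX gateway each Virtual System has its own directory under `$FWDIR/CTX/`, named `CTX` plus the VSID zero-padded to five digits (for example `CTX00004` for VS 4); as far as I know this is where `vsenv <id>` points FWDIR. A minimal helper to build that absolute path, assuming this standard layout and assuming VS0 simply uses the top-level FWDIR:

```bash
#!/bin/bash
# Build the absolute per-VS FWDIR path on a VSX gateway.
# Assumption: standard layout $FWDIR/CTX/CTX<VSID padded to 5 digits>; VS0 = $FWDIR.
vs_fwdir() {
    local vsid="$1" base="${2:-$FWDIR}"
    # Reject anything that is not a non-negative integer
    case "$vsid" in ''|*[!0-9]*) echo "invalid VSID: $vsid" >&2; return 1 ;; esac
    vsid=$((10#$vsid))            # strip leading zeros so printf does not parse octal
    if [ "$vsid" -eq 0 ]; then
        printf '%s\n' "$base"     # VS0 keeps the top-level FWDIR
    else
        printf '%s/CTX/CTX%05d\n' "$base" "$vsid"
    fi
}
```

For example, `vs_fwdir 4 /opt/CPsuite-R80.20/fw1` prints `/opt/CPsuite-R80.20/fw1/CTX/CTX00004`, which can be passed wherever a script expects an absolute FWDIR.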

---

I'm running in VS0 because that is the management server.

---

Got it, you are correct.

MDS or SMS? If the former, you have to run mdsenv first.

Also, the SK does not list VSX among the supported targets. I have reached out to the case owner for clarification.

---

SMS.

OK, thanks. I'll wait for news.

---

Hello,

Have you received any news?

Thank you very much,

Francesco

---

Not just yet, still waiting for the reply. Thanks for your patience.

---

Having reviewed the script: it is based on the physical gateway context. A per-VS modification is possible, but I do not find it very practical.

Please consider using regular SAM rules instead: https://supportcenter.checkpoint.com/supportcenter/portal?eventSubmit_doGoviewsolutiondetails=&solut...

---

@_Val_: thank you. Yes, I could use SAM rules, but the use case is a bit different: SAM rules are meant for blocking driven by monitoring, not by feeds.

Could you please share the per-VS modification for sk103154?

Thanks again for your support!

Francesco

---

The script relies on SAM rules; that is the first fact. It creates SAM rules from the feed every 20 minutes and deletes the old ones. Everything is done assuming a physical firewall running Gaia, not VSX. VSX mode is not checked, so the script tries to run and fails in your case.
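The refresh cycle described above (fetch the feed, add the new entries, delete the ones that disappeared) boils down to a set difference between the previous and the current copy of the feed. Here is a minimal sketch of that step with `sort` and `comm`, assuming both files hold one IP or CIDR per line; it only computes the lists and does not reproduce how sk103154 actually creates or removes its SAM rules:

```bash
#!/bin/bash
# Compute which feed entries to add and which to remove between two runs.
# Assumption: one IP/CIDR per line; '#' comments and blank lines are ignored.
normalize_feed() {
    [ -f "$1" ] || return 0       # missing old feed (first run) = empty set
    sed -e 's/#.*//' -e 's/[[:space:]]//g' "$1" | grep -v '^$' | LC_ALL=C sort -u
}

feed_diff() {
    local old="$1" new="$2" tmp_old tmp_new
    tmp_old=$(mktemp); tmp_new=$(mktemp)
    normalize_feed "$old" > "$tmp_old"
    normalize_feed "$new" > "$tmp_new"
    # Only in the new feed -> add; only in the old feed -> remove
    LC_ALL=C comm -13 "$tmp_old" "$tmp_new" | sed 's/^/ADD /'
    LC_ALL=C comm -23 "$tmp_old" "$tmp_new" | sed 's/^/DEL /'
    rm -f "$tmp_old" "$tmp_new"
}
```

Working on the difference keeps each cycle proportional to what changed in the feed rather than to its full size; the ADD/DEL lines can then drive whichever blocking mechanism is in use.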

You need a completely different method for VSX; the tool would have to be completely rewritten.

So, to answer your original question: this tool is not supported on VSX. If you need something automated, take the feed and set up block rules through the MGMT API or, as already suggested, use SAM rules.
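For the MGMT API route, a script can turn the feed into a batch of `mgmt_cli` calls: one object per entry, each placed in a group referenced by a drop rule, followed by a publish. The sketch below only prints the commands (a dry run) so they can be reviewed first; the group name `BL_Feed`, the `bl_` name prefix and the session file `id.txt` are illustrative assumptions, and the group is assumed to exist already:

```bash
#!/bin/bash
# Print (do not run) mgmt_cli commands that load a feed into an existing group.
# Assumptions: group already exists and is used in a drop rule; the session file
# comes from an earlier "mgmt_cli login ... > id.txt".
gen_mgmt_cmds() {
    local feed="$1" group="$2" session="${3:-id.txt}" entry ip len
    while IFS= read -r entry || [ -n "$entry" ]; do
        entry="${entry%%#*}"              # drop trailing comments
        entry="${entry//[[:space:]]/}"    # drop whitespace
        [ -z "$entry" ] && continue
        if [[ "$entry" == */* ]]; then
            # CIDR entry -> network object
            ip="${entry%/*}"; len="${entry#*/}"
            printf 'mgmt_cli add network name "bl_%s_%s" subnet "%s" mask-length "%s" groups.1 "%s" -s %s\n' \
                "$ip" "$len" "$ip" "$len" "$group" "$session"
        else
            # Single address -> host object
            printf 'mgmt_cli add host name "bl_%s" ip-address "%s" groups.1 "%s" -s %s\n' \
                "$entry" "$entry" "$group" "$session"
        fi
    done < "$feed"
    printf 'mgmt_cli publish -s %s\n' "$session"
}
```

Usage: `gen_mgmt_cmds tor.txt BL_Feed > load.sh`, review `load.sh`, run it after logging in, then install the policy, e.g. `mgmt_cli install-policy policy-package "<package>" targets.1 "<gateway>" -s id.txt`. Removing entries that left the feed is not handled here.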

---

One more suggestion. You can create a dynamic object and then fill it with the output of the https://secureupdates.checkpoint.com/IP-list/TOR.txt feed via a gateway-side script. Then use that object in a drop rule at the top of the policy. This should also be done in the VS context.

You can take bits and pieces from the Office365 script here: https://community.checkpoint.com/t5/API-CLI-Discussion-and-Samples/Basic-script-for-importing-IP-Add...

I still think leveraging the MGMT API is easier: set up an empty group, repopulate it with the TOR addresses from time to time, publish, and push the policy.
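Dynamic objects are filled with address ranges rather than CIDR prefixes, so a gateway-side script loading a feed such as TOR.txt first has to turn each entry into a start/end pair. Below is a pure-bash sketch of that conversion. It prints `dynamic_objects -o <name> -r <start> <end> -a` lines, which is the add-range syntax I understand `dynamic_objects` to use (verify with `dynamic_objects -h` on your version); the object name `BL_TOR` is hypothetical:

```bash
#!/bin/bash
# Turn a feed of IPv4 addresses / CIDR prefixes into dynamic_objects
# "add range" commands (printed only, not executed).

ip_to_int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    # 10# forces base 10 so octets such as "010" are not read as octal
    echo "$(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))"
}

int_to_ip() {
    local n="$1"
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print "start end" for an address or prefix, e.g. 10.1.2.0/23 -> "10.1.2.0 10.1.3.255"
cidr_to_range() {
    local ip="${1%/*}" len=32 start size
    if [[ "$1" == */* ]]; then len="${1#*/}"; fi
    start=$(ip_to_int "$ip")
    size=$(( 1 << (32 - 10#$len) ))
    # Clear the host bits so the range starts on the prefix boundary
    start=$(( start & ~(size - 1) & 0xFFFFFFFF ))
    echo "$(int_to_ip "$start") $(int_to_ip "$(( start + size - 1 ))")"
}

gen_dynobj_cmds() {
    local feed="$1" obj="$2" entry
    while IFS= read -r entry || [ -n "$entry" ]; do
        entry="${entry%%#*}"              # drop comments
        entry="${entry//[[:space:]]/}"    # drop whitespace
        [ -z "$entry" ] && continue
        echo "dynamic_objects -o $obj -r $(cidr_to_range "$entry") -a"
    done < "$feed"
}
```

In the VS context, the object would be created once (`dynamic_objects -n BL_TOR`) and the generated lines reviewed and then run, e.g. `gen_dynobj_cmds TOR.txt BL_TOR | bash`. Passing several ranges per `-r` call would likely be faster for large feeds but is not shown.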

---

Thank you, @_Val_. I will try the MGMT API.

---

The SK now says: "Not supported on VSX Gateway and on Scalable Platforms."

As it should. Just FYI.

---

Hi @_Val_,

I'm trying to "adapt" the script you linked to my use case. I've made some changes and everything works well, but blacklists containing a large number of IPs cause problems because the API session expires.

For instance, the issue occurs if I try to import the FireHOL Level 3 list (more than 17K IPs). Please see the attached script.

I've also added a session-timeout of 1 hour to the login call (line 62):

mgmt_cli login user $v_cpuser password $v_cpuserpw session-timeout 3600 --format json > id.txt

With this change the script could import more IPs, but not enough to complete the whole list: after around 3K-4K IPs, the session always expires:

code: "generic_err_wrong_session_id"

message: "Wrong session id [oLZge4cBkVQqZSYdLHX0awi3p9PsXnW-VmINXBjMcoc]. Session may be expired. Please check session id and resend the request."

To avoid the expiration, I've also added a keepalive before each network object addition (line 116):

... { print "mgmt_cli keepalive -s id.txt > /dev/null 2>&1; ...

Unfortunately, nothing changed.

Furthermore, to save changes step by step, I've added a publish action after every 500 network object additions (lines 118-119):

awk '{print;} NR % 500 == 0 { print "mgmt_cli publish -s id.txt"; }' $v_diff_add_sh > $v_diff_add_sh_awk

mv $v_diff_add_sh_awk $v_diff_add_sh

Do you have any suggestions for keeping the session alive? I can't understand why it expires when there is a keepalive before every network object addition.

Thanks,

Francesco

---

Look here: https://community.checkpoint.com/t5/API-CLI-Discussion-and-Samples/Web-API-timeout/td-p/52741

In short, it is not an API timeout; it is the Apache POST timeout (see the answer in that thread). I would recommend breaking the list down into smaller portions and posting them separately within the same script.
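One way to break the list down within the same script is to wrap every N generated commands in their own login/publish/logout block, so no single session has to survive the whole import. This sketch uses `awk`, as the script above already does; the chunk size of 500 mirrors the publish interval used there, and the login line reuses the original script's `$v_cpuser`/`$v_cpuserpw` variables (left unexpanded in the output):

```bash
#!/bin/bash
# Wrap every $size mgmt_cli commands in a fresh login / publish / logout block.
# Input: a file with one mgmt_cli command per line. Output: one combined script.
chunk_commands() {
    local cmds="$1" size="${2:-500}"
    awk -v size="$size" '
        (NR - 1) % size == 0 {
            # Close the previous chunk (if any) and open a new session
            if (NR > 1) { print "mgmt_cli publish -s id.txt"; print "mgmt_cli logout -s id.txt" }
            print "mgmt_cli login user \"$v_cpuser\" password \"$v_cpuserpw\" --format json > id.txt"
        }
        { print }
        END {
            # Close the last chunk; nothing is emitted for an empty input
            if (NR > 0) { print "mgmt_cli publish -s id.txt"; print "mgmt_cli logout -s id.txt" }
        }' "$cmds"
}
```

For example, `chunk_commands add_cmds.sh 500 > all.sh`, then `export v_cpuser v_cpuserpw; bash all.sh`. Each block commits its own objects, so a failure late in the list does not discard the earlier chunks.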

---

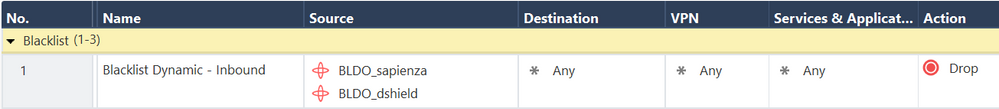

@_Val_ Thank you for the information. In the end, I changed my approach because mgmt_cli takes too much time to load a blacklist of more than 20K objects. Furthermore, it adds real objects to the database, which should be avoided. So I followed your suggestion, studied dynamic objects, and wrote another script (based on the opendbl.net script).

I love sharing information, so I've attached all the code.

USAGE

- Create the following paths on your Security Gateway:
    - mkdir -p /scripts/blacklist/feeds
    - mkdir -p /scripts/blacklist/logs
- Upload the scripts to /scripts/blacklist on your Security Gateway.
- Change the value of the VSID variable in each file (in a future version, the scripts will include a shared file holding all common variables). For instance, the ID of the Virtual System where I use dynamic objects is 4.
- Run blacklist.sh as follows:
    - ./blacklist.sh sapienza on
    - ./blacklist.sh dshield on
- Check that the tasks have been scheduled:
    - ./blacklist.sh sapienza stat
    - ./blacklist.sh dshield stat
    - cpd_sched_config print
- Check that the dynamic objects file has been filled:
    - vsenv <id>
    - dynamic_objects -l | grep name
    - dynamic_objects -l | less
- Create the same dynamic objects in SmartConsole (press F9 to open the CLI and execute the following commands):
    - add dynamic-object name "BLDO_sapienza" comments "A set of top blacklists on the Internet" color "red"
    - add dynamic-object name "BLDO_dshield" comments "DShield blacklist" color "red"
- Create your rule using the dynamic objects.
- Publish and install the policy.
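The per-file VSID edit in the usage steps above could be replaced by the file inclusion mentioned there: keep the common variables in one file and source it from each script. A sketch, assuming a hypothetical `/scripts/blacklist/blacklist.conf`; the variable names other than `VSID` are illustrative and not taken from the published scripts:

```bash
#!/bin/bash
# Load shared settings (VSID, paths) from one file and validate them.
# Hypothetical config file content:
#   VSID=4
#   BASE_DIR=/scripts/blacklist
load_blacklist_conf() {
    local conf="${1:-/scripts/blacklist/blacklist.conf}"
    if [ ! -r "$conf" ]; then
        echo "config not readable: $conf" >&2
        return 1
    fi
    # shellcheck source=/dev/null
    . "$conf"
    case "$VSID" in ''|*[!0-9]*) echo "VSID must be a number, got '$VSID'" >&2; return 1 ;; esac
    BASE_DIR="${BASE_DIR:-/scripts/blacklist}"
    FEED_DIR="$BASE_DIR/feeds"    # same directories as created in the first step
    LOG_DIR="$BASE_DIR/logs"
}
```

Each script would then start with something like `. /scripts/blacklist/common.sh && load_blacklist_conf || exit 1`, where `common.sh` (also hypothetical) holds the function above, so changing the VS only means editing one line.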

That's all... have fun!

---

Hello Francesco, I'd like to download the scripts, but the links are not working.

How do I download them from CheckMates?

Thanks in advance

Gerard

---

Hey @Gerard_van_Lee1

I will publish the scripts on my website as soon as possible so they can be downloaded. I'll let you know.

---

@Gerard_van_Lee1 Sorry for the tremendous delay, but I was very busy in the past weeks. I have finally published the script and written the instructions on my website. Please visit: https://www.francescoficarola.com/check-point-automated-ip-blacklist/

Hope this can help!

---

Very nice info collated on your website ([Check Point] Automated IP Blacklist v2 - Francesco Ficarola).

I have one question: I need the blacklisting to work bidirectionally. Do I also need to enable fwaccel dos config for internal interfaces to get bidirectional blocking?

---

Hello,

First of all, thanks for sharing, it's nice!

I'm writing this message because today we had a big production impact with one of the lists provided by FireHOL.

Yesterday, we set up this script for the first time and everything was fine. We had only set the https://raw.githubusercontent.com/ktsaou/blocklist-ipsets/master/firehol_level3.netset list as the source.

This morning, I read on the FireHOL website that Level 1 is recommended, so I just changed level3 to level1 in the script. A few seconds later, we completely lost communication with the cluster.

After investigating, the main difference between levels 1 and 3 is the presence of 0.0.0.0/8 in level 1.

I don't understand why it blocks anything, because it's supposed to be for broadcast messages only.

After running unloadlocal, disabling the rule in management and pushing the policy again, the cluster is now alive and running fine, but my IT management is not happy about this incident.
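A feed change like this can be caught before it reaches the gateway by sanity-checking each entry first. The sketch below is a hypothetical pre-filter, not part of the published script: it rejects malformed entries, anything in 0.0.0.0/8 or 127.0.0.0/8, and any prefix broader than a minimum length. The reserved-prefix list and the default /8 limit are assumptions to adapt to your environment:

```bash
#!/bin/bash
# Pre-filter a blacklist feed before loading it on the gateway.
# Kept entries go to stdout, rejected ones (with a reason) to stderr.
# Assumptions: drop 0.0.0.0/8 and 127.0.0.0/8; refuse prefixes shorter than MIN_LEN.
sanitize_feed() {
    local feed="$1" min_len="${2:-8}" entry ip len first o bad
    while IFS= read -r entry || [ -n "$entry" ]; do
        entry="${entry%%#*}"; entry="${entry//[[:space:]]/}"
        [ -z "$entry" ] && continue
        # Accept only dotted-quad IPv4, optionally with a /NN prefix length
        if ! [[ "$entry" =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}(/[0-9]{1,2})?$ ]]; then
            echo "skip (malformed): $entry" >&2; continue
        fi
        ip="${entry%/*}"; len=32
        if [[ "$entry" == */* ]]; then len="${entry#*/}"; fi
        bad=0
        for o in ${ip//./ }; do (( 10#$o > 255 )) && bad=1; done
        if (( bad )); then echo "skip (octet > 255): $entry" >&2; continue; fi
        if (( 10#$len > 32 || 10#$len < min_len )); then
            echo "skip (prefix /$len invalid or too broad): $entry" >&2; continue
        fi
        first="${ip%%.*}"
        if (( 10#$first == 0 || 10#$first == 127 )); then
            echo "skip (reserved): $entry" >&2; continue
        fi
        echo "$entry"
    done < "$feed"
}
```

For example, `sanitize_feed firehol_level1.netset > clean.netset` writes only the kept entries, and the stderr output shows what was dropped and why.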
