Best Practice for HA sync interface

What is the best practice for the sync interface when connecting 2 cluster members using ClusterXL?

We have always connected the cluster members together using the sync interface between them. Just curious if that is according to best practice vs. connecting the members directly to a switch for sync.

19 Replies

In my experience, it depends on whether the cluster is physical or virtual.

If it's physical, the sync interface should be in a dedicated VLAN and you should pay attention to CCP traffic. Some switches cause problems with multicast traffic, in which case you have to switch CCP to broadcast mode.

In versions before R80.10 you have to specify the cluster ID (also called MAC magic or fwha_mac_magic) on both members (default value 1), and it must not conflict with any other cluster on the network.

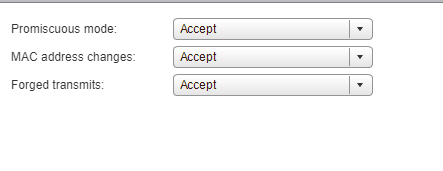

In a virtual environment (VMware) you have to check the security settings of the port groups that the vNICs are connected to.

Further information can be found in the SK articles "Connecting multiple clusters to the same network segment (same VLAN, same switch)" and "How to set ClusterXL Control Protocol (CCP) in Broadcast / Multicast mode in ClusterXL".
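
The uniqueness requirement for the cluster ID can be checked mechanically before a change. The following is a minimal sketch, assuming you keep your own inventory of clusters per VLAN. The inventory layout and the function name `find_cluster_id_conflicts` are hypothetical and not part of any Check Point tool:

```python
from collections import defaultdict


def find_cluster_id_conflicts(inventory):
    """Return {(vlan, cluster_id): [cluster names]} for IDs reused on one VLAN.

    `inventory` is a hypothetical, hand-maintained list of
    (cluster_name, vlan, cluster_id) tuples; nothing is read from gateways.
    """
    seen = defaultdict(list)
    for name, vlan, cluster_id in inventory:
        seen[(vlan, cluster_id)].append(name)
    return {key: names for key, names in seen.items() if len(names) > 1}


inventory = [
    ("fw-edge", 100, 1),
    ("fw-dmz", 100, 1),   # same VLAN and same ID as fw-edge: conflict
    ("fw-core", 200, 1),  # same ID but a different VLAN: no conflict
]
conflicts = find_cluster_id_conflicts(inventory)
print(conflicts)
```

Any key that shows up in the result identifies a VLAN where two clusters share an ID and one of them needs a different value.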

And is it a common/best practice to bond sync interfaces as well? That is, two dedicated interfaces in a bond used for sync only.

I would say that bonding the sync interface is a very good idea.

Having 2 sync interfaces is not supported.

In my experience the preference was always a direct connection vs using a switch in between cluster members for sync. The reason behind this is if the switch experiences a failure it can cause the cluster to become unstable. A direct connection just removes a potential failure point/latency point. If the firewalls are not physically close enough to do a direct connection then using a switch is fine but my recommendation is always direct.

Bonding the sync interface is the correct way to do it as 2 sync interfaces (while technically supported) will cause issues. However, IMO I wouldn't bother bonding the Sync interface unless:

1) You find that you are over-running your sync interface with traffic. In most deployments this won't be the case; you would need to be moving a lot of data or have some other special circumstances.

2) If you need a little extra redundancy. If you find you want to account for a single port failure in your cluster design then go ahead and bond but in my view you're not gaining a whole lot of redundancy. Just my .02

In our environment, the 2 nodes are 500 km apart, so a direct connection is not possible.

A 10G link is also recommended, preferably as a 2x 10G bond interface.

Increasing the RX/TX ring size is also worthwhile.

Kind regards,

Jozko Mrkvicka

Found this thread and am pretty sure it answers my question but wanted to make sure no change of opinion over the last 2 and a bit years.

I'm favouring a direct gateway to gateway sync, using a bonded pair of 10G interfaces. Should I consider anything else or is this the ideal scenario?

The devices are in the same DC, about 40 - 50m apart as the cable is run. I have the option of switches if I want to but prefer P2P if that is still favoured.

Thanks

You can do direct-wired sync, and it is supported, but it will cause problems. Not may, will. The TAC had already recommended against direct-wired sync for years when I started in 2006.

One of the things a cluster member monitors to determine its health is its interface status. With direct-wired sync, if one member dies or is rebooted, the remaining member sees its interface go down. This is a failure, and the member can't tell if its interface died, or if something outside it failed, so it causes the member to evaluate whether it is the healthiest member left in the cluster. This is a pretty conservative process, as you really don't want a member whose NIC failed thinking it is healthiest and claiming the VIPs while the other member is also claiming the VIPs.

Among other things, a member checking to see if it is healthiest will ping addresses on all of its monitored interfaces (and on the highest and lowest VLANs of monitored interfaces with subinterfaces). If it gets a response, the interface is marked as good. If it doesn't get a response, the member interprets this as further evidence that it is the one with the problem and therefore should not take over the VIPs.

This can easily lead to situations where you reboot one member to install an update, and the other member thinks it has failed and goes down.

The best way I've seen to run sync is two interfaces in a non-LACP bond (round robin works) going to two totally separate switches. If one switch dies? No problem: the bond just handles it. If one member dies? No problem: the other member has link on all of its interfaces. If the link between one of the switches and one of the members dies? No problem: the members realize that sync is broken, but the interfaces are still up, so no failover is triggered; you just lose redundancy.
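
The difference between the two wirings can be illustrated with a small toy model. This is only a sketch of the link-state reasoning above, not Check Point's actual logic. The device names and the helper `has_sync_link` are hypothetical, and cable faults are deliberately left out:

```python
def has_sync_link(port_partners, failed_devices):
    """True if at least one sync port is cabled to a device that is still up.

    A single port and a bond are treated alike: the interface counts as up
    while any member port has link.
    """
    return any(partner not in failed_devices for partner in port_partners)


# What member A's sync port(s) plug into in each topology (hypothetical names).
TOPOLOGIES = {
    "direct": ["member_b"],                        # cable straight to the peer
    "dual_switch_bond": ["switch_1", "switch_2"],  # one bond port per switch
}

results = {}
for name, partners in TOPOLOGIES.items():
    for failed in ("member_b", "switch_1"):
        results[(name, failed)] = has_sync_link(partners, {failed})
        print(f"{name:17} {failed} down -> sync link up: {results[(name, failed)]}")
```

In this model the only case where member A loses link on sync is the direct cable with the peer down, which is exactly the reboot scenario described above.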

Thanks Bob. I needed this. It's so obvious, and it's how we currently have it. Makes sense to carry this into the new DCs.

Have a great weekend.

Absolutely! While in the TAC, I handled a fair number of tickets from people whose clusters were not working how they expected. Direct-wired sync was the most common cause, and the most common issue it caused was intentionally rebooting one member would take the whole cluster down. The other member would just refuse to take over because it thought it had failed.

Check Point's cluster status state machine is complicated, and can easily cause problems if you don't know about some of the things it does. When set up properly, installing an update should not disrupt traffic at all.

As a current Check Point tech, I can say that having your sync cables directly connected is fine and even recommended. The documentation lists this option:

"Connecting the physical network interfaces of the Cluster Members directly using a cross-cable. In a cluster with three or more members, use a dedicated hub or switch."

All that will happen is that the active gateway goes from Active to Active Attention, shown as Active(!), because it no longer sees the sync interface as up. Since the other firewall is down, it stays up.

Note how at the bottom of that page, there is a link to Supported Topologies for Synchronization Network. All four listed topologies have a switch in the middle.

If you use a cable for sync with no switch in the path and you reboot one member, the remaining member will start probing on all monitored interfaces to see if it can get responses from things. If it finds a monitored interface other than the one which is down where it can't reach anything, it will refuse to take over. If it's already Active, it may actually go Down.

By default, the highest and lowest VLANs on a given physical interface or bond are monitored. This means if you are in the process of adding a new highest VLAN or a new lowest VLAN and it doesn't have anything on it yet, and you are using direct-wired sync, rebooting one cluster member may cause the other member to go Down. This also applies if you are decommissioning the highest VLAN or lowest VLAN, the endpoints in that VLAN are evacuated, but the VLAN hasn't been removed from the cluster configuration.

If it didn't do this, a failure could cause both members to try to become Active at the same time, which is worse than the cluster going down entirely.
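
The VLAN pitfall can be made concrete with a short sketch. It assumes only the default stated above (the lowest and highest VLAN on an interface are monitored). The function names and the example VLAN numbers are hypothetical, and real probing is more involved than a set lookup:

```python
def monitored_vlans(vlan_ids):
    """Default described above: only the lowest and highest VLAN are monitored."""
    if not vlan_ids:
        return []
    return sorted({min(vlan_ids), max(vlan_ids)})


def vlans_blocking_takeover(vlan_ids, vlans_with_responders):
    """Monitored VLANs on which a probe would get no answer."""
    return [v for v in monitored_vlans(vlan_ids) if v not in vlans_with_responders]


# VLAN 900 was just added and has no hosts on it yet.
configured = [10, 20, 30, 900]
populated = {10, 20, 30}

print(monitored_vlans(configured))
print(vlans_blocking_takeover(configured, populated))
```

Here the empty VLAN 900 is the new highest VLAN, so it is monitored and is the one that would leave a probing member without a response.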

In addition to that, if you are using a trunk interface carrying multiple VLANs, the synchronization VLAN has to be the lowest VLAN.

Kind regards,

Jozko Mrkvicka

Hello Bob,

This is not clear to me.

Why would a switch in the middle fix the problem you are describing? If a member is rebooted, the other member will get the same result when probing its other interfaces, switch or not.

I have one more question: is there any recommendation for estimating bandwidth? I read in another thread that the sync interface should be able to carry 10% of the total throughput of the connected interfaces. Is that true?

"One of my interfaces is down" is an ambiguous failure. Did the connected device fail? Did the cable fail? Did my interface fail? Ambiguous failures trigger more checks to try to detect if the member is okay or if it is the one which failed.

"I can't hear from my peer on any interfaces, but all my interfaces are still up" is not ambiguous, so it doesn't trigger the probing in the first place. The goal of the switch is to make the failure unambiguous.
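
The distinction can be written down as a toy classifier. This is an illustration of the reasoning in the two paragraphs above, not Check Point's actual state machine. The function name, return strings, and interface names are all hypothetical:

```python
def classify_failure(links_up, peer_heard):
    """Toy classifier for the two situations described above.

    links_up:   dict mapping interface name -> True if link is up.
    peer_heard: True if the peer is still heard on at least one interface.
    """
    if not all(links_up.values()):
        # A local link is down: peer, cable, or own NIC could be at fault.
        return "ambiguous: run extra checks (probing)"
    if not peer_heard:
        # Every local link is up, so the silence points at the peer.
        return "unambiguous: peer lost, no probing"
    return "healthy"


# Peer rebooted with direct-wired sync: the local sync link drops.
direct = classify_failure({"eth1": True, "sync": False}, peer_heard=False)
# Peer rebooted with a switch in the path: the local sync link stays up.
switched = classify_failure({"eth1": True, "sync": True}, peer_heard=False)
print(direct)
print(switched)
```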

As for sync throughput capacity, 10% is a decent starting point, but it depends on your traffic patterns. Sync traffic volume depends on connection rate rather than throughput. A firewall in front of an authoritative public DNS server will see a much higher sync load than a firewall in front of an FTP server. Excluding virtual connections like DNS from sync entirely saves a lot of load. Delayed sync for maybe-short-lived-maybe-not connections like HTTP (used all the time to download small files like HTML documents, but also to download multi-gigabyte filesystem images) also saves load. I have dealt with a few firewalls which needed more than 1g of sync throughput. I've seen one with unreasonable sync expectations (sync DNS and everything immediately between six cluster members) which needed 10g of throughput. I don't think I've ever seen one with reasonable expectations which needed 10g of throughput.
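
As a rough illustration of the 10% starting point, here is a minimal sketch. The function name and the example interface speeds are hypothetical. The result is only a first guess, since sync load follows connection rate and sync exclusions rather than raw throughput:

```python
def sync_bandwidth_starting_point(interface_gbps, fraction=0.10):
    """Rule of thumb from this thread: about 10% of the summed throughput
    of the connected interfaces. Treat the result as a starting point only.
    """
    return sum(interface_gbps) * fraction


# Hypothetical gateway with two 10G and one 1G production interfaces.
estimate = sync_bandwidth_starting_point([10, 10, 1])
print(f"starting point: {estimate:.1f} Gbit/s")
```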

Dear community, happy new year!

Allowing myself to bump this thread.

Where do we stand today on having the sync/failover link connected through an L2 switch? I'm replacing a 12600 cluster with a 6600 cluster, and I cannot directly connect the two members of my new cluster using the RJ45 ports, and the 4 fiber ports will be dedicated to production traffic. So I've configured a bond of 2 eth interfaces for sync and an L2 path between the firewalls, through a few switches.

If I remember correctly, in the old days Check Point didn't recommend having a switch between the firewalls for HA, in case a switch between the two failed, but it seems OK now according to the admin guide: https://sc1.checkpoint.com/documents/R81.10/WebAdminGuides/EN/CP_R81.10_ClusterXL_AdminGuide/Topics-...

But I was hoping you had some feedback or recommendation about this usage.

Thank you all.

Per the current documentation, having switches in the path is recommended, provided sufficient redundancy exists.

With that said, are you able to share more about your scenario, i.e. is this all within a single location?

CCSM R77/R80/ELITE

Hi Chris, thanks for your feedback.

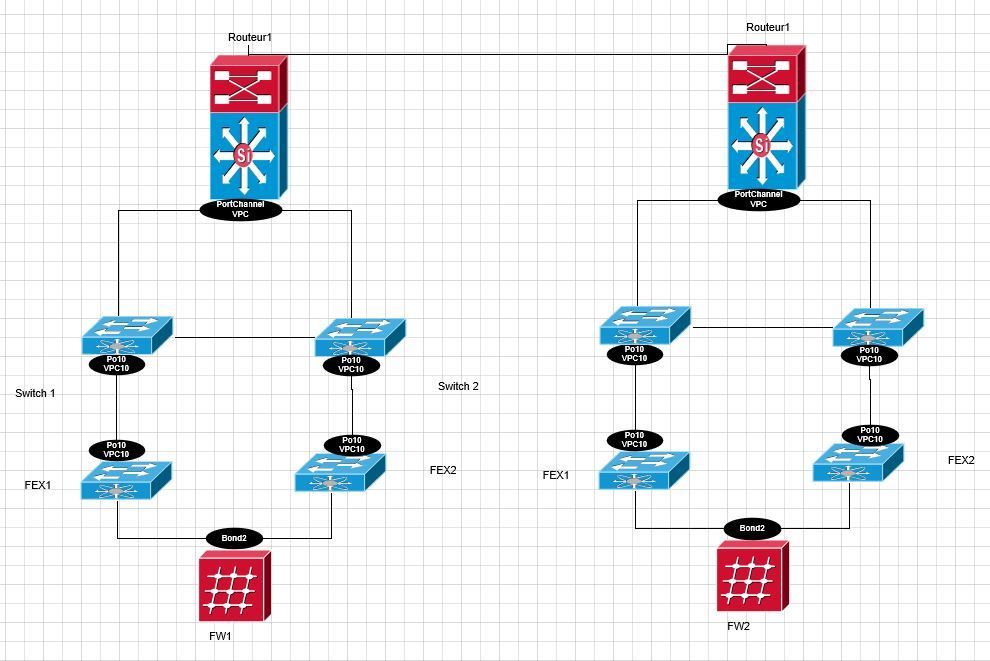

The cluster is located in a single data center; the 2 firewalls are just too far apart for an RJ45 connection. The 2 sync interfaces are connected to different FEXes, and every switch and router on the path is fully redundant. I'm attaching a simple diagram of the sync connection I've set up.

Hello Josh,

Which configuration did you adopt? Thanks.

Hello,

I ended up creating the L2 path between the 2 firewalls as in the diagram. It's working as expected, including failover.

©1994-2026 Check Point Software Technologies Ltd. All rights reserved.
