
R80.30 to R81.10 - Endpoint client fails to negotiate with the R81.10 box

We are part way through a firewall migration from R80.30 to R81.10.

Both new boxes are built, the passive firewall has been turned off, and the configuration imported to the new R81.10 passive firewall.

Cluster failover worked flawlessly, and in our tests everything appears to work, with one exception.

Endpoint clients refuse to connect to the VPN. I have tried E86.00, E86.30, and E86.60 clients, and none of them work; the eventual error is that the client could not negotiate a connection. SNX and Capsule VPN, on both Windows 11 and iPhone, work just fine. Even the really old CLI-enabled SNX copy we have works fine.

After restarting services on the primary and failing back to the R80.30 box, all VPN services are fully restored.

Has anyone else experienced this?

Howard

Labels: Open Server

1 Solution

Accepted Solutions


The issue was eventually resolved with a no-NAT rule for the IP address the VPN clients connect to; this information came from our support partner.

It seems that our R80.30 firewalls were also affected by this to a degree: two responses were sent to the client, one from the hide-NAT rule and one from the IP address the responses should come from. The upshot was that all Endpoint clients were using Visitor mode rather than NAT-T.

On the R81.10 firewalls this didn't seem to work correctly: instead of working in Visitor mode, the clients disconnected after a few seconds.

A no-NAT rule sitting just above the existing hide-NAT rule allowed the Endpoint clients to connect, and NAT-T connections could be seen with "vpn tu tlist".

Both firewalls have been replaced with new R81.10 boxes, and everything is working fine. I understand a fix for this behaviour should be included in a forthcoming JHF.
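For anyone wondering why the position of the no-NAT rule matters, here is a toy model in plain Bash. It is not Check Point syntax and does not talk to a gateway: it only assumes that NAT rules are evaluated top-down with the first match winning. The `first_match` function, the `source:action` rule format, and the 192.0.2.10 address (standing in for the IP the VPN clients connect to) are all made up for illustration.

```shell
#!/bin/bash
# Toy model of first-match NAT rule evaluation (hypothetical, not Check Point syntax).
# Usage: first_match <source-ip> <rule>...
# Each rule is "source-pattern:action"; the first rule whose pattern matches wins.
first_match() {
    local src=$1
    shift
    local rule pattern action
    for rule in "$@"; do
        pattern="${rule%%:*}"   # text before the first colon
        action="${rule#*:}"     # text after the first colon
        # An unquoted variable in a case pattern is treated as a glob, so "*" matches any source.
        case $src in
            $pattern) echo "$action"; return 0 ;;
        esac
    done
    echo "no-match"
}

# no-NAT rule above the hide-NAT rule: replies from the VPN address keep their original source
first_match 192.0.2.10 '192.0.2.10:original' '*:hide'

# hide-NAT rule first: the same replies are caught by the broader rule
first_match 192.0.2.10 '*:hide' '192.0.2.10:original'
```

With the specific rule on top the first call prints `original`; with the order reversed the second call prints `hide`, which corresponds to the situation described above where replies were leaving via the hide-NAT rule.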

16 Replies


Which Jumbo was applied to the R81.10 gateways?

CCSM R77/R80/ELITE

---

66

---

Any corresponding drop logs in SmartConsole or symptoms that align to sk175704?

CCSM R77/R80/ELITE

---

Sadly nothing like that. You could see our accounts were authenticated via RADIUS, and the connection was successful. Then it would hang for a few minutes, and the response box would come up again. It did that perhaps three times before finally giving up, stating that it could not negotiate a connection.

I just had another look over the SK. While we didn't see any dropped traffic in the logs, we do have a mixture of VPN rules.

We have a new VPN layer rule, using Access Roles, and have been gradually migrating services from the Legacy rules above.

The one thing I would add is this was not an in-place upgrade from R80.30, which that SK seems to imply. This was a new R81.10 server, where we have used the process to import the exported configuration on to that new box, so it has never had R80.30 on it.

set clienv on-failure continue

load configuration fw1_new-hardware

set clienv on-failure stop

save config

Similarly, the R81.10 management server was freshly built, using an exported database from the old R80.30 management server.

---

I have seen customers hit this issue before, and in my experience it was ALWAYS caused by some custom VPN-related file on either the mgmt server or the gateways, in most cases trac.config or trac_client_1.ttm. Not sure if you guys have that configured, but throwing it out there. As @Chris_Atkinson mentioned, are there any other relevant logs you can find? Do you know if anyone generated any logs from the VPN client?

Best,

Andy


---

That was one of the things our support partner suggested checking prior to migration, and they found no alterations to the "trac_client_1.ttm" file.

---

What you could do is cd $FWDIR/conf on both the mgmt server and the gateways, and run ls -lh trac*

This will show whether there is anything other than the original trac_client_1.ttm file, which would tell us for certain what is being used.

Best,

Andy


---

Good idea. For good measure I ran this on the management server and on both the R80.30 and R81.10 firewalls.

ls -lh trac*

-rwxr-xr-x 1 admin bin 7.2K Jun 13 10:47 trac_client_1.ttm

ls -lh trac*

-rw-r----- 1 admin bin 7.2K Sep 24 2019 trac_client_1.ttm

ls -lh trac*

-rw-r----- 1 admin bin 7.2K Jun 30 2021 trac_client_1.ttm

---

Check the content of it on mgmt server.

Best,

Andy


---

I can't see anything that stands out as something we might have added. I was going to post its contents here, but it's a long file.

I copied the file from both the management server and the R81.10 server, and then used Notepad++ to compare them; they were identical.

I ran a further compare with the file from the R80.30 server, and that was identical too.
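A quicker way to make the same comparison without copying the file into an editor is to compare digests. This is only a sketch: `same_content` is a made-up helper name, the paths in the example are hypothetical, and it assumes `sha256sum` is available wherever the copies are compared and that every file passed in exists.

```shell
#!/bin/bash
# Hypothetical helper: succeeds only if every file given has the same SHA-256 digest.
# Assumes all files exist; a missing file is simply skipped by sha256sum with an error on stderr.
same_content() {
    local distinct
    # One digest per file, reduced to the number of unique digests.
    distinct=$(sha256sum -- "$@" | awk '{print $1}' | sort -u | wc -l)
    [ "$distinct" -eq 1 ]
}

# Example with hypothetical paths to copies pulled from each machine:
# same_content mgmt/trac_client_1.ttm r8110/trac_client_1.ttm r8030/trac_client_1.ttm \
#     && echo "all copies identical"
```

A matching digest only tells you the copies are the same as each other; it does not tell you whether they match the stock file shipped with the release.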

---

Hi @Howard_Gyton ,

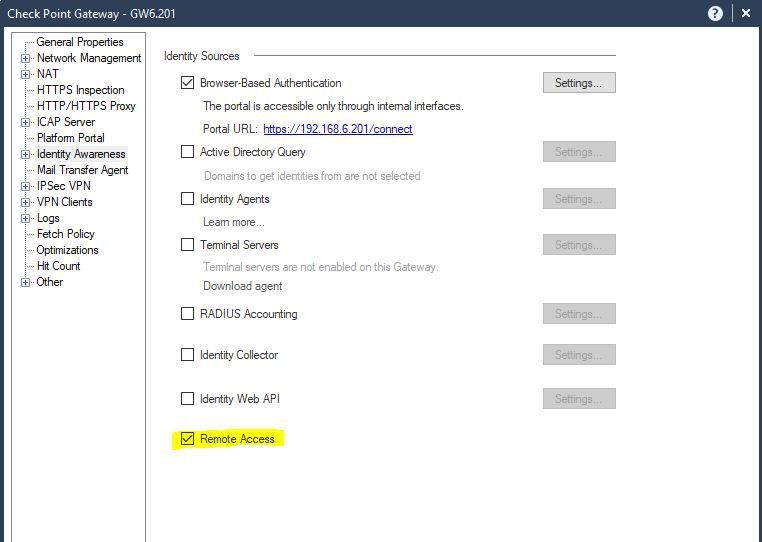

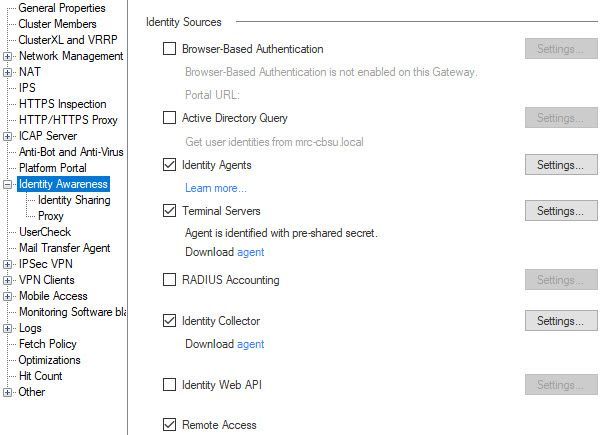

You mention Access Roles, but did you enable the Remote Access check box under the Identity Awareness configuration?

If you didn't check this box, can you please enable it and re-test?

---

Yes, we have that set. If we didn't, it wouldn't work on our R80.30 firewall, where normal functionality is seen for all features.

As I may have mentioned, we believe the failure is in Phase 2 of the negotiation. Last night we ran some further tests and played around with the encryption and data integrity settings. Briefly we turned everything on, including DES/3DES, and even then the Endpoint clients wouldn't connect to the R81.10 firewall, though they work quite happily with the R80.30 firewall. We of course changed those settings back.

But again, SNX and Capsule VPN remain unaffected and could connect to the R81.10 firewall, even when I set my phone to use IPSec rather than SSL.

---

OK, I thought you had configured the access role only in R81.10; I misunderstood the story 🙂

I will contact you offline via email and we will continue it from there.

Thanks,

Ilya

---

What are your link selection settings?

---

"Selected address from topology table" VIP selected

"Operating system routing table"

Tracking=None

