Policy Install Failed - Problem With The Commit Function

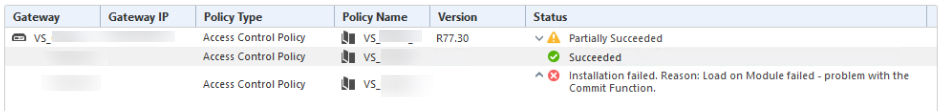

I have a feeling this one is going to require a call to TAC, but does anyone have any experience troubleshooting this one? I get this policy install error on one VS in a VSLS VSX cluster containing 3 Virtual Switches and 5 Virtual Systems. All other VSs install policy just fine. The VSX cluster is R77.30 with an R80.10 SMS.

The strange thing is that it happens once the Policy Install progress hits 99%. It was my understanding (based on this very comprehensive and helpful writeup) that the Policy Install procedure was all but completed once the progress bar hit 99%?

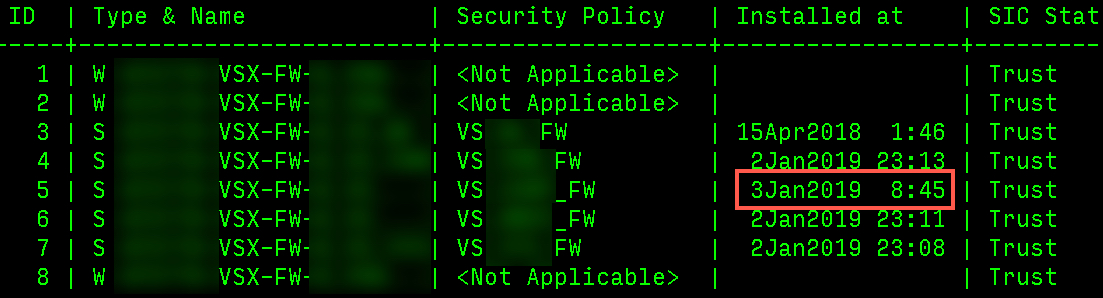

When I look at vsx stat -v, it appears that VSX thinks the policy installed. The "Installed at" time matches the time at which the policy install fails.

I am able to verify the policy in SmartConsole without any errors, but I'm not sure where to begin troubleshooting, since it appears that the Gateway thinks the policy installed successfully but the management server doesn't.

Thanks!

R80 CCSA / CCSE


Accepted Solutions


Just to close the loop here in case anyone else should encounter this problem, the final solution was to perform a SIC reset on the individual VS as outlined in sk34098.

Kaspars Zibarts' suggestion of reset_gw would have also worked, since that procedure performs a full SIC reset as part of the vsx_util reconfigure process.

In the end, it came down to TAC feeling very certain the individual SIC reset would resolve it and my ability to try the SIC reset during the normal course of troubleshooting rather than waiting for a maintenance window to do the reset_gw.

Thanks to all who contributed their suggestions and help here! If nothing else, I learned a handful of other troubleshooting steps and commands through this thread that I otherwise wouldn't have!

R80 CCSA / CCSE

21 Replies


How many cluster members do you have, and did you verify that policy installed on all members? I would start with the cpd.elg logs.


There are 2 members in the cluster. I didn't think to check both members; out of habit I was checking only the one that the VS is active on. However, it does appear that the policy is not installing on the other cluster member: the install dates differ between the two.
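The comparison described above can be sketched in Python. This is only a minimal illustration: it assumes the "Installed at" values for the same VS have been copied by hand from each cluster member. The member names, the sample timestamps, and the timestamp format string are all hypothetical, so adjust the format to whatever your own output shows.

```python
from datetime import datetime

# Hypothetical timestamp layout; change it to match your own output.
ASSUMED_FORMAT = "%d%b%Y %H:%M:%S"


def stale_members(installed_at, fmt=ASSUMED_FORMAT):
    """Return the sorted names of members whose install time is older than the newest one."""
    parsed = {
        name: datetime.strptime(stamp, fmt)
        for name, stamp in installed_at.items()
    }
    if not parsed:
        return []
    newest = max(parsed.values())
    return sorted(name for name, when in parsed.items() if when < newest)


if __name__ == "__main__":
    # Made-up values for illustration only, one entry per cluster member.
    sample = {
        "member_a": "14Sep2018 10:02:11",
        "member_b": "07Sep2018 16:45:30",
    }
    print(stale_members(sample))
```

An empty result means every member reports the same install time; any name returned is a member worth checking first.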

I'll start digging through the cpd.elg logs on this Gateway and see if anything interesting and relevant pops up. Thanks for the suggestion!

R80 CCSA / CCSE


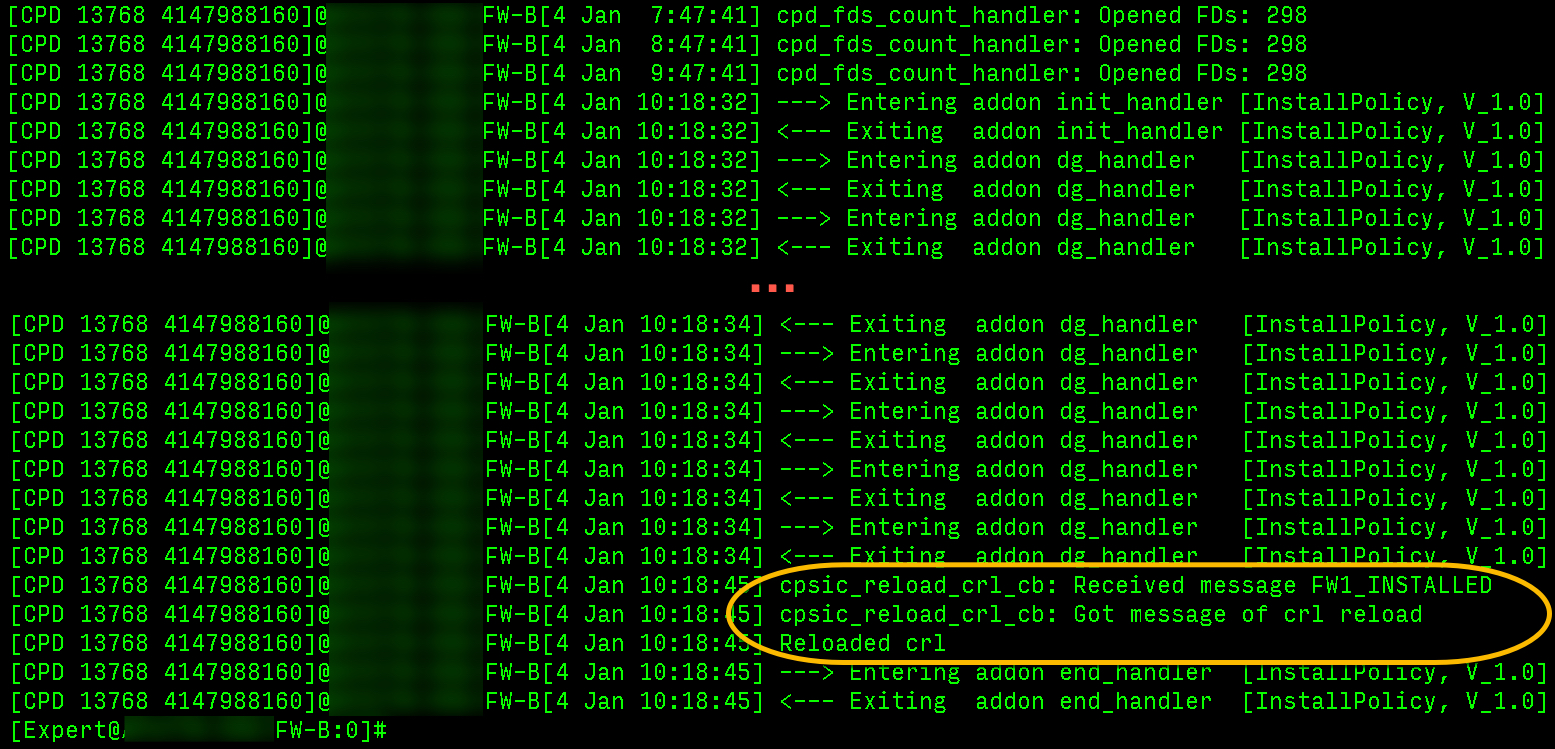

The plot thickens... it looks like there is a positive confirmation the policy installed on the Cluster Member that shows the current policy install date:

However, it seems to just enter/exit "addon end_handler" without showing any confirmation that the policy install succeeded (or failed) on the Gateway that doesn't show the current policy install date.

I was hoping for something a little more definitive in the logs pointing to a reason. But there is definitely a difference between the two cluster members.

I wonder if the cpd process just needs restarting? Maybe it's time for a cpstop/start in a maintenance window?

R80 CCSA / CCSE


There are a couple of SKs about cpd debug that would show you more messages in the log. For example

Additionally, check fwm.elg. But yes, if you have it as an option, reboot the gateway that exhibits the problem. Also check which Jumbo Hotfix you are on and whether there's a newer version that might have fixes for cpd or policy installation / VSX.


Just to be clear, the fwm.elg log only exists on the SMS side, correct?

Thanks for the SK on debugging CPD. I got about 140,000+ lines of output when I ran it while pushing policy. I'm thinking this may be the point where it is more beneficial to engage TAC because without any guidance of what I'm looking for, it seems like searching for a needle in a haystack.
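One way to make a debug capture of that size more approachable is a first pass that keeps only lines containing suspicious keywords, along with their line numbers. The Python sketch below is a generic text filter, not a Check Point tool. The default keywords and the sample lines are assumptions chosen for illustration and do not reflect real cpd debug output.

```python
import re


def find_suspect_lines(lines, keywords=("error", "fail")):
    """Return (line_number, text) pairs for lines containing any keyword, ignoring case."""
    if not keywords:
        return []
    pattern = re.compile("|".join(re.escape(k) for k in keywords), re.IGNORECASE)
    return [
        (number, line.rstrip("\n"))
        for number, line in enumerate(lines, start=1)
        if pattern.search(line)
    ]


if __name__ == "__main__":
    # Placeholder lines for illustration; to scan a real capture, pass an
    # open file object instead, for example open(path, errors="replace").
    sample = [
        "placeholder: step one completed\n",
        "placeholder: step two FAILED\n",
        "placeholder: step three completed\n",
    ]
    for number, text in find_suspect_lines(sample):
        print(f"{number}: {text}")
```

Because the function accepts any iterable of lines, it can read a file object directly without loading the whole capture into memory first. The keyword list can be extended as TAC or the SKs suggest specific strings to look for.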

If nothing else, I can arm TAC with a lot of information when opening the SR to hopefully move things along quickly!

R80 CCSA / CCSE


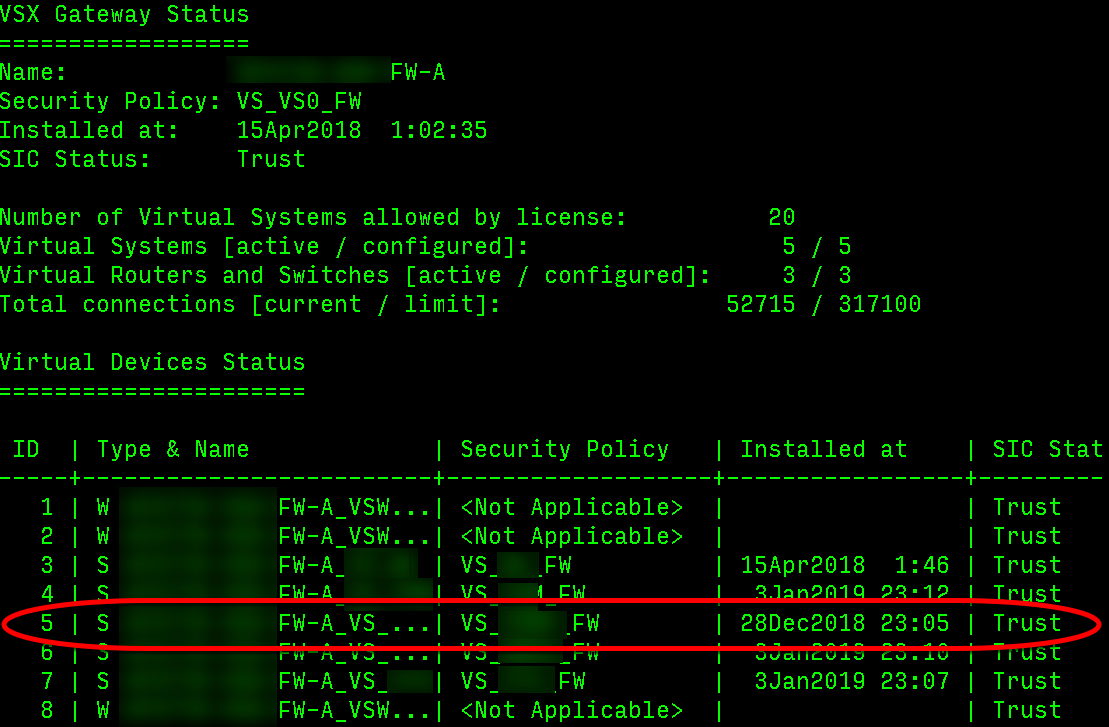

BTW, what does the vsx stat -v output look like on that gateway? Is SIC established to the failing VS?


Everything looks OK to my eyes:

R80 CCSA / CCSE


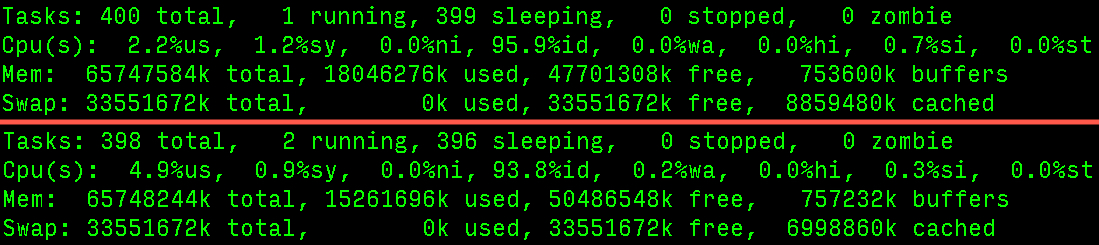

Please check the status of free RAM on the policy installation target.


Thanks for the suggestion! I think the memory looks pretty good:

R80 CCSA / CCSE


Check free HDD space on mgmt and/or VSX.

Kind regards,

Jozko Mrkvicka



This seemed like a great place to start, but disk space looks pretty good. Both VSX cluster members are using the same amount of disk space. The SMS should have plenty, too!

R80 CCSA / CCSE


Please open an SR with TAC if you have not done so already.


Yes, I opened an SR on Friday. TAC supplied a policy debug script. I expect we’ll make some good progress today once I get rolling working through that process.

Thanks,

Dan

R80 CCSA / CCSE


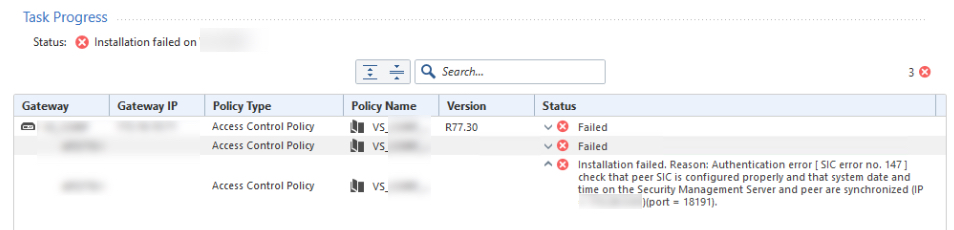

This one is still making the rounds with TAC. We were provided a vs_reconfigure BASH script to run against the VS to rebuild it. While the script seemed to run successfully, and the GW was able to pull policy from the SMS, we are still unable to push policy to it.

Now, we do get a SIC error despite the SIC status still showing as Trust in the output of vsx stat -v.

Strangely, I am able to modify the route table and push the VS config successfully through SmartConsole. I have a feeling we will be resetting SIC on the individual VS, but it seems strange that everything seems to work and communicate up to a certain point.

Very strange... I'll do my best to update with whatever the resolution ends up being as this seems to be a unique one!

R80 CCSA / CCSE


I would probably do a full rebuild of the box. First run reset_gw on the firewall (this way you will keep all the basic non-VSX config) and then vsx_util reconfigure on mgmt. Something seems very "stuck" there if TAC has not been able to resolve it so far. I'm not sure how many times you have done it, but it's not as complicated and dangerous as it sounds. I would avoid resetting SIC on an individual VS; I've never had full success with it. Something always didn't work correctly at the end.


I wasn't aware reset_gw was an option. Would you just run this from vs0 to basically blow away all the VSX config from the Gateway?

By "keep all basic non-vsx config", I'm taking that to mean the underlying GAIA config and OS remain. So, this isn't a rebuild in the sense of a total reinstall of GAIA + HFAs on the GW? I'm familiar with the "vsx_util reconfigure" process and am pretty comfortable with that.

I was thinking along these lines, but I didn't realize there was a way to remove just the VSX configs! It would save a lot of headache of having to reimage the appliance and put everything back in place. I can mention it to the folks at TAC working with me. Is there an SK explaining this anywhere?

Thanks for the input, this could be very helpful!

-Dan

R80 CCSA / CCSE


Yep! It has saved me on a number of occasions, and it's a very popular command in my lab, where I rebuild them constantly to test stuff.

Just save your show configuration output as plain text, just in case, of course.


Thanks again for this suggestion. I think this is the way to go. Now, I just need to get this squeezed into a maintenance window!

R80 CCSA / CCSE


If you have two VMs available, I would always suggest lab testing just to make sure. And don't forget the snapshot!


Precisely how I planned on spending my day today!

R80 CCSA / CCSE

