Multi-Queue and LACP configuration

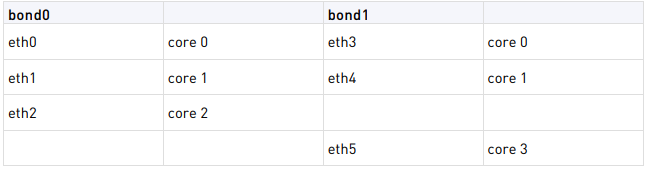

The ClusterXL Admin Guide states, for Link Aggregation, "To get the best performance, use static affinity for Link Aggregation", and it shows and recommends examples where you set the affinities for the bond interfaces to different cores. This makes sense to me, as you would not want an LACP bond to have its slave interfaces pinned to the same CPU core. Example below:

However, with Multi-Queue, various documentation states NOT to set affinities manually, as doing so will cause performance issues.

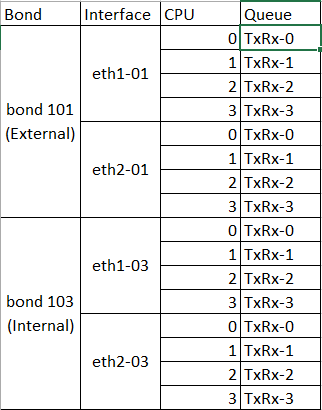

If this is the case, is it safe to have Multi-Queue enabled on 10 Gb interfaces that are part of an LACP bond where the queues map to the same CPU cores? Specifically, I have two LACP bond interfaces, each consisting of two 10 Gb interfaces, with Multi-Queue enabled on all four 10 Gb interfaces. Bond to Interface to CPU mapping below:

3 Replies


The recommendation in the ClusterXL guide to use static interface affinities is outdated. It assumes that SecureXL is disabled (and thus automatic interface affinity is not active at all) or that automatic interface affinity does not do a good job of balancing traffic among the interfaces. This latter assumption was definitely the case in R76 and earlier, but automatic interface affinity was substantially improved in R77+ and I have not needed to set static interface affinities for quite a long time.

Multi-Queue does not directly care about bond/aggregate interfaces; it is simply enabled on the underlying physical interfaces. MQ allows all SND/IRQ cores (up to certain limits) to have their own queues for an enabled interface, which they empty independently. The packets associated with a single connection are always "stuck" to the same queue/core every time to avoid out-of-order delivery, and I assume there is some kind of balancing performed for new connections among the queues for a particular interface. You would most definitely NOT want any kind of static interface affinities defined on an interface with Multi-Queue enabled, as doing so would interfere with the Multi-Queue sticking/balancing mechanism. The likely result would be overloading of individual SND/IRQ cores, and possibly even out-of-order packet delivery, which is very undesirable.
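To make the "sticking" idea concrete, here is a minimal Python sketch of hash-based queue selection. It is purely illustrative: the function name `queue_for_connection` and the use of CRC32 are my own assumptions, not Check Point's or the NIC driver's actual algorithm. The point is only that a deterministic hash of the connection tuple keeps every packet of one connection on one queue, while different connections spread across queues.

```python
import zlib


def queue_for_connection(src_ip, dst_ip, src_port, dst_port, proto, num_queues):
    """Pick an RX queue index for a connection (hypothetical, illustrative hash).

    The same 5-tuple always yields the same queue, so packets of one
    connection are never split across queues/cores.
    """
    if num_queues < 1:
        raise ValueError("num_queues must be at least 1")
    # Build a stable byte key from the 5-tuple and hash it deterministically.
    key = "|".join(str(f) for f in (src_ip, dst_ip, src_port, dst_port, proto))
    return zlib.crc32(key.encode("ascii")) % num_queues


if __name__ == "__main__":
    # Every packet of this connection lands on the same queue.
    q = queue_for_connection("10.0.0.1", "192.0.2.10", 40000, 443, "tcp", 4)
    print("connection sticks to queue", q)
```

Forcing a static affinity on top of a scheme like this would override where the queues are serviced, which is why the two mechanisms should not be combined.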

Gaia 4.18 (R82) Immersion Tips, Tricks, & Best Practices Video Course

Now Available at https://shadowpeak.com/gaia4-18-immersion-course



| What is Multi Queue? |

|---|

It is an acceleration feature that lets you assign more than one packet queue and CPU to an interface.

When most of the traffic is accelerated by SecureXL, the CPU load from the CoreXL SND instances can be very high, while the CPU load from the CoreXL FW instances can be very low. This is an inefficient utilization of CPU capacity.

By default, the number of CPU cores allocated to CoreXL SND instances is limited by the number of network interfaces that handle the traffic. Because each interface has one traffic queue, only one CPU core can handle each traffic queue at a time. This means that each CoreXL SND instance can use only one CPU core at a time for each network interface.

Check Point Multi-Queue lets you configure more than one traffic queue for each network interface. For each interface, you can use more than one CPU core (that runs CoreXL SND) for traffic acceleration. This balances the load efficiently between the CPU cores that run the CoreXL SND instances and the CPU cores that run CoreXL FW instances.

Important - Multi-Queue applies only if SecureXL is enabled.

| Multi-Queue Requirements and Limitations |

|---|

- Multi-Queue is not supported on computers with one CPU core.

- Network interfaces must use a driver that supports Multi-Queue. Only network cards that use the igb (1 Gb), ixgbe (10 Gb), i40e (40 Gb), or mlx5_core (40 Gb) drivers support Multi-Queue.

- You can configure a maximum of five interfaces with Multi-Queue.

- You must reboot the Security Gateway after all changes in the Multi-Queue configuration.

- For best performance, it is not recommended to assign both SND and a CoreXL FW instance to the same CPU core.

- Do not change the IRQ affinity of queues manually. Changing the IRQ affinity of the queues manually can adversely affect performance.

- Multi-Queue is relevant only if SecureXL and CoreXL are enabled.

- You cannot use the “sim affinity” or the “fw ctl affinity” commands to change and query the IRQ affinity of the Multi-Queue interfaces.

- The number of queues is limited by the number of CPU cores and the type of interface driver:

| Network card driver | Speed | Maximal number of RX queues |
|---|---|---|
| igb | 1 Gb | 4 |
| ixgbe | 10 Gb | 16 |
| i40e | 40 Gb | 14 |
| mlx5_core | 40 Gb | 10 |

- The maximum RX queues limit dictates the largest number of SND/IRQ instances that can empty packet buffers for an individual interface using that driver that has Multi-Queue enabled.

- Multi-Queue does not work on 3200 / 5000 / 15000 / 23000 appliances in the following scenario (sk114625)
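The limits listed above can be sketched as a small planning helper in Python. This is a rough sketch under stated assumptions: the per-driver maximums and the five-interface cap come from the list above, but the rule that the effective queue count is the smaller of the driver limit and the number of SND cores is my own reading of "limited by the number of CPU cores and the type of interface driver". The function names are hypothetical and are not part of any Check Point tool.

```python
# Per-driver RX queue maximums, as listed in this post.
DRIVER_MAX_RX_QUEUES = {"igb": 4, "ixgbe": 16, "i40e": 14, "mlx5_core": 10}
# Maximum number of interfaces with Multi-Queue enabled, as listed in this post.
MAX_MQ_INTERFACES = 5


def effective_rx_queues(driver, snd_cores):
    """Estimate usable RX queues for one interface (assumed rule: min of both limits)."""
    if driver not in DRIVER_MAX_RX_QUEUES:
        raise ValueError(f"driver {driver!r} is not listed as Multi-Queue capable")
    if snd_cores < 1:
        raise ValueError("at least one SND core is required")
    return min(DRIVER_MAX_RX_QUEUES[driver], snd_cores)


def plan_multi_queue(interfaces, snd_cores):
    """Map interface name -> estimated queue count; `interfaces` is {name: driver}."""
    if len(interfaces) > MAX_MQ_INTERFACES:
        raise ValueError(f"at most {MAX_MQ_INTERFACES} interfaces can use Multi-Queue")
    return {name: effective_rx_queues(drv, snd_cores) for name, drv in interfaces.items()}


if __name__ == "__main__":
    # Four 10 Gb bond members (interface names are placeholders) and 4 SND cores.
    members = {"eth1": "ixgbe", "eth2": "ixgbe", "eth3": "ixgbe", "eth4": "ixgbe"}
    print(plan_multi_queue(members, snd_cores=4))
```

For the setup in the original question (four ixgbe interfaces), a helper like this would show every bond member getting a queue on each SND core, which matches the point that Multi-Queue is applied per physical interface rather than per bond.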

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips


More information about Multi-Queue can be found here:

R80.x Performance Tuning Tip – Multi Queue

➜ CCSM Elite, CCME, CCTE ➜ www.checkpoint.tips
